Investigating Many-Shot In-Context Learning in LLMs

Introduction to In-Context Learning

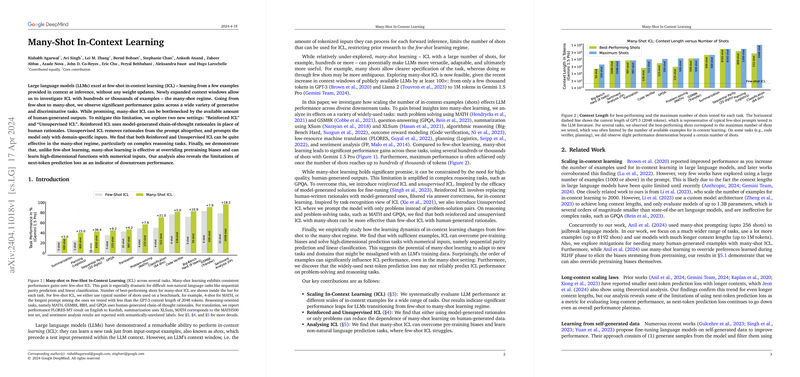

In-context learning (ICL) lets LLMs adapt to new tasks from example input-output pairs, termed "shots," supplied in the prompt. Because the number of shots was traditionally limited by the model's context window, most research has focused on few-shot ICL. However, the expanded context windows of recent LLMs make it possible to study many-shot ICL, where hundreds or thousands of shots are used. This shift promises greater task adaptability and performance improvements but also introduces new challenges, such as the dependence on large quantities of high-quality human-generated outputs.

Key Contributions of the Study

The paper offers several significant insights into the scaling of in-context examples:

- Performance Gains Across Tasks: Increasing the number of in-context shots consistently improved LLM performance across various tasks compared to traditional few-shot learning, particularly when leveraging the larger context window of the Gemini 1.5 Pro model.

- Reinforced and Unsupervised ICL: Innovations include Reinforced ICL, which employs model-generated rationales instead of human-written ones, and Unsupervised ICL, which uses problem sets without paired solutions, reducing reliance on labor-intensive human-generated content.

- Overcoming Pre-Training Biases: The paper demonstrates that many-shot ICL can counteract biases from the pre-training phase, particularly when provided with a sufficient number of examples.

- Exploration of Non-Natural Language Prediction Tasks: The expansion into tasks that involve logical or structured outputs beyond standard text indicates that many-shot ICL can be effective for a broader array of applications than previously possible with few-shot approaches.

Detailed Analysis and Findings

Scaling with Context Length

The research shows that task performance improves as the number of shots in the prompt grows, something that longer context windows make possible. This scaling is evident in tasks as diverse as machine translation, summarization, and even complex reasoning, showcasing the utility of many-shot approaches on modern LLMs with expanded context capabilities.
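To make the idea of scaling the number of shots concrete, here is a minimal sketch of how a many-shot prompt might be assembled. The function names, the `Input:`/`Output:` template, and the character budget (a rough stand-in for a token limit) are all assumptions made for illustration, not the format used in the paper.

```python
from typing import List, Optional, Tuple


def format_shot(problem: str, solution: str) -> str:
    """Render one example as an input/output pair (hypothetical template)."""
    return f"Input: {problem}\nOutput: {solution}"


def build_many_shot_prompt(
    shots: List[Tuple[str, str]],
    query: str,
    num_shots: int,
    max_chars: Optional[int] = None,
) -> str:
    """Concatenate up to `num_shots` examples, then append the unanswered query.

    `max_chars` is an assumed, crude proxy for the context-window limit;
    a real setup would count tokens with the model's own tokenizer.
    """
    query_block = f"Input: {query}\nOutput:"
    blocks: List[str] = []
    used = len(query_block)
    for problem, solution in shots[:num_shots]:
        block = format_shot(problem, solution)
        cost = len(block) + 2  # +2 for the blank-line separator
        if max_chars is not None and used + cost > max_chars:
            break  # stop adding shots once the budget is exhausted
        blocks.append(block)
        used += cost
    return "\n\n".join(blocks + [query_block])


examples = [("2+2", "4"), ("3+3", "6"), ("1+5", "6")]
prompt = build_many_shot_prompt(examples, "4+4", num_shots=2)
```

Moving from few-shot to many-shot in this sketch only means raising `num_shots`; the budget check is where a longer context window would allow more examples through.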

Reinforced and Unsupervised In-Context Learning

- Reinforced ICL: By using model-generated solutions and selecting those that achieve correct outcomes, this approach offers a scalable alternative to using expensive human-generated data, showing considerable promise in reasoning tasks.

- Unsupervised ICL: This approach removes solutions from the prompt altogether, asking the model to infer answers from the input problems alone. It succeeds in some settings, but its effectiveness varies with the nature of the task.
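The two variants above can be sketched roughly as follows. This is an illustrative sketch under stated assumptions rather than the paper's implementation: `sample_fn` is a hypothetical callable standing in for an LLM that returns `(rationale, final_answer)` pairs, correctness is checked by exact string match, and the `Problem:`/`Solution:` template is invented for the example.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical sampler type: (problem, n) -> list of (rationale, final_answer).
Sampler = Callable[[str, int], List[Tuple[str, str]]]


def collect_reinforced_shots(
    problems: List[str],
    gold_answers: Dict[str, str],
    sample_fn: Sampler,
    samples_per_problem: int = 4,
) -> List[Tuple[str, str]]:
    """Keep model-generated rationales whose final answer matches the known answer."""
    shots: List[Tuple[str, str]] = []
    for problem in problems:
        for rationale, answer in sample_fn(problem, samples_per_problem):
            if answer.strip() == gold_answers[problem].strip():
                shots.append((problem, rationale))
                break  # assumption: keep at most one correct rationale per problem
    return shots


def build_unsupervised_prompt(problems: List[str], query: str) -> str:
    """List problems without solutions, then ask for a solution to the query."""
    listed = "\n\n".join(f"Problem: {p}" for p in problems)
    return f"{listed}\n\nProblem: {query}\nSolution:"


def toy_sampler(problem: str, n: int) -> List[Tuple[str, str]]:
    """Toy stand-in for a model call, used only to exercise the sketch."""
    canned = {
        "2+2": [("2+2 is 5", "5"), ("2+2 is 4", "4")],
        "3+3": [("a guess", "7")],
    }
    return canned[problem][:n]


reinforced = collect_reinforced_shots(
    ["2+2", "3+3"], {"2+2": "4", "3+3": "6"}, toy_sampler
)
unsupervised = build_unsupervised_prompt(["2+2", "3+3"], "4+4")
```

Note that the filter only checks the final answer, so a rationale with flawed reasoning that happens to land on the right answer would still be kept in this sketch.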

Overcoming Pre-Training Biases

Through extensive testing, the paper illustrates that many-shot configurations allow LLMs to overcome biases instilled during pre-training, provided enough examples are supplied. This finding matters for deploying LLMs in scenarios where neutrality and the absence of pre-existing biases are critical.

Further Implications and Future Work

The findings encourage further exploration into optimal configurations of in-context learning, particularly regarding the number of examples and their arrangement within prompts. There is also a need to better understand why performance may plateau or decrease when the number of examples becomes excessive. Continued progress on longer-context models will play a pivotal role in harnessing the full potential of many-shot ICL, which could redefine the operational capabilities of LLMs across diverse domains.
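One way such an exploration might be organized is a sweep over shot counts, averaging over several random orderings at each count. The sketch below is only an assumed experimental harness: `score_fn` is a hypothetical callable that would build a prompt from the given shots, query a model, and return a task metric.

```python
import random
from statistics import mean
from typing import Callable, Dict, List, Sequence, Tuple

Shot = Tuple[str, str]


def sweep_shot_counts(
    shots: Sequence[Shot],
    shot_counts: List[int],
    score_fn: Callable[[List[Shot]], float],
    num_orderings: int = 3,
    seed: int = 0,
) -> Dict[int, float]:
    """Average `score_fn` over random subsets and orderings for each shot count.

    Each k in `shot_counts` must not exceed len(shots).
    """
    rng = random.Random(seed)  # fixed seed so the sweep is repeatable
    results: Dict[int, float] = {}
    for k in shot_counts:
        scores = []
        for _ in range(num_orderings):
            subset = rng.sample(list(shots), k)  # random subset in random order
            scores.append(score_fn(subset))
        results[k] = mean(scores)
    return results


# Toy usage: the "score" here is just the number of shots, to show the plumbing.
pool = [("2+2", "4"), ("3+3", "6"), ("1+5", "6")]
curve = sweep_shot_counts(pool, [1, 2], score_fn=lambda subset: float(len(subset)))
```

Plotting the resulting dictionary against the shot count would show where, for a given task, performance plateaus or starts to decline.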