- The paper introduces OnGoal, a chat interface backed by a modular pipeline that infers, merges, and evaluates users' conversational goals in multi-turn LLM dialogues.

- The pipeline uses prompt engineering with a generative LLM such as GPT-4o to run goal inference, merging, and evaluation in sequence as the conversation unfolds.

- In a user study (n=20), participants using OnGoal reported lower cognitive load and engaged more actively with their goals than participants using a baseline chat interface.

OnGoal: Tracking and Visualizing Conversational Goals in Multi-Turn Dialogue with LLMs

Introduction and Motivation

The increasing complexity and length of multi-turn dialogues with LLMs have introduced significant challenges for users in tracking, evaluating, and reviewing their conversational goals. Traditional linear chat interfaces, while familiar, are ill-suited for managing evolving objectives, especially as LLMs may forget, ignore, or misinterpret user intent over extended interactions. The OnGoal system addresses these limitations by augmenting the chat interface with real-time goal tracking, evaluation, and visualization, thereby supporting users in maintaining alignment between their objectives and LLM responses.

System Architecture and Goal Pipeline

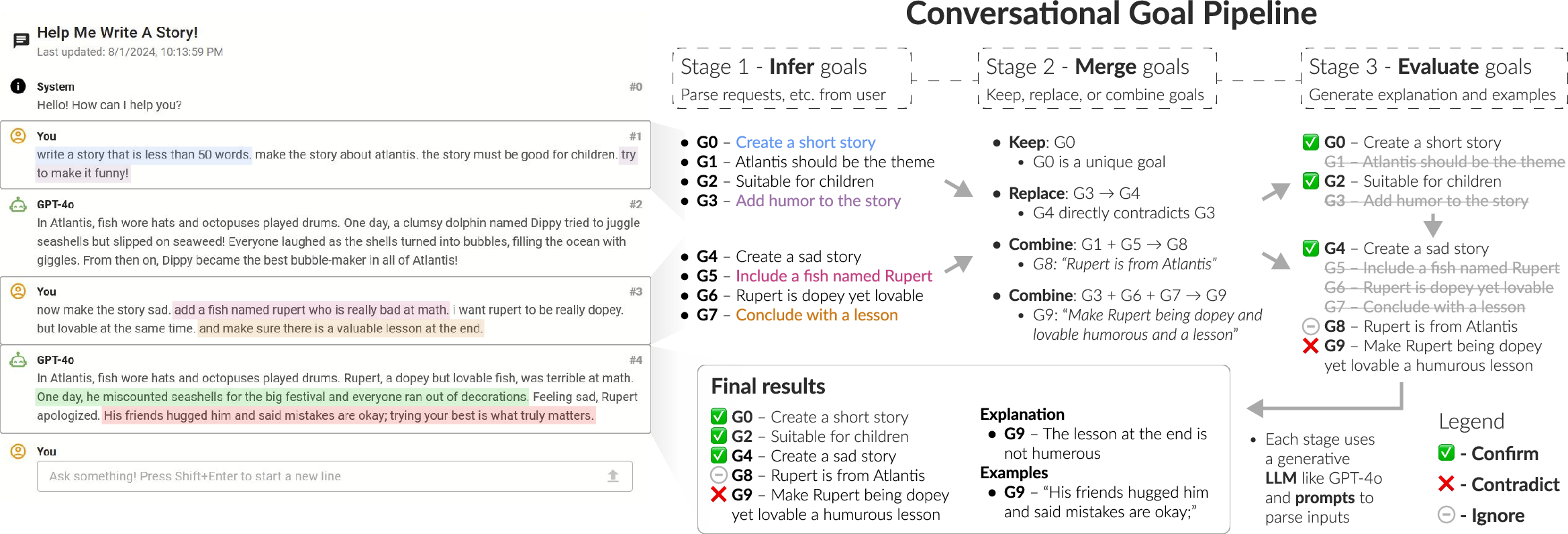

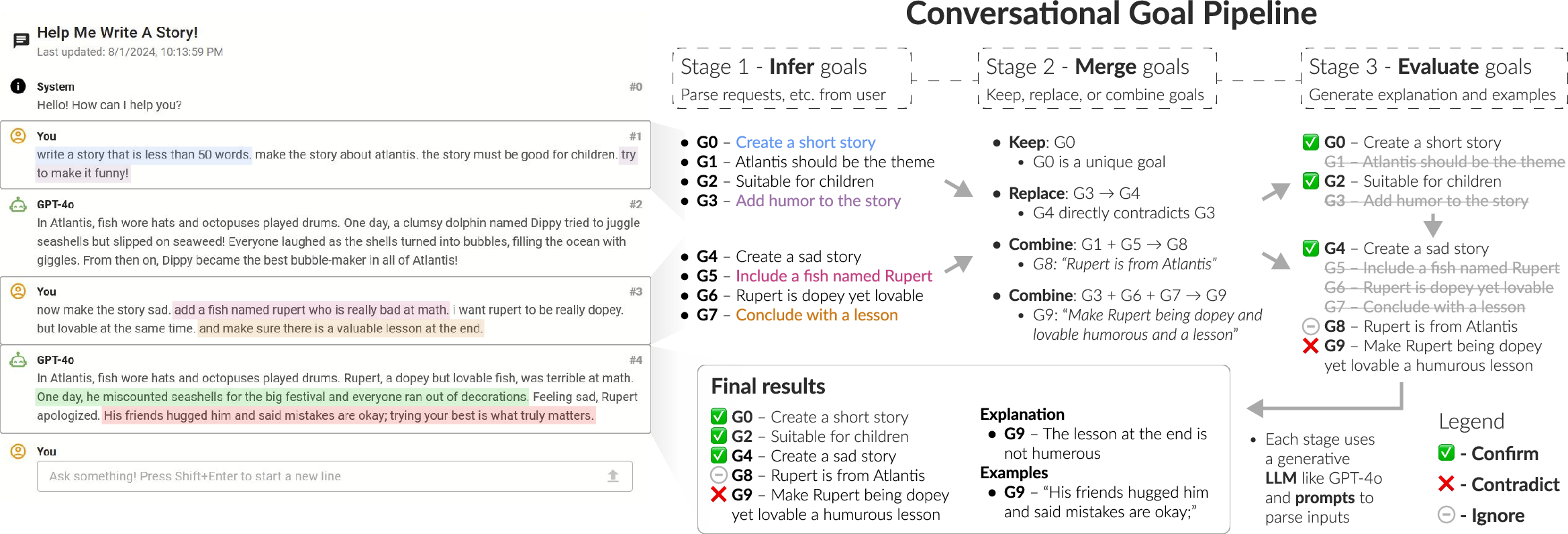

OnGoal introduces a modular, LLM-driven goal pipeline that operates independently of the main chat LLM. The pipeline consists of three sequential stages: goal inference, goal merging, and goal evaluation. Each stage is implemented via prompt engineering and executed using a generative LLM (e.g., GPT-4o). The pipeline is designed to be domain-agnostic, but the current implementation is tuned for writing tasks.

- Goal Inference: Extracts all user-specified conversational goals (questions, requests, offers, suggestions) from each user message.

- Goal Merging: Reconciles newly inferred goals with existing goals, handling redundancy, contradiction, and evolution of objectives.

- Goal Evaluation: Assesses each goal against the LLM's response, categorizing the outcome as confirm, contradict, or ignore, and provides explanations and supporting evidence.
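The three stages can be sketched as a chain of prompt calls. This is a minimal sketch, not OnGoal's implementation. The following are all assumptions made for illustration: the prompt wording, the JSON output format, the `Goal` fields, the `VALID_STATUSES` set, and the `LLM` callable type. A scripted stand-in replaces the real model so the example runs offline.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical type: any function that sends a prompt to an LLM and returns its text reply.
LLM = Callable[[str], str]

VALID_STATUSES = {"confirm", "contradict", "ignore"}


@dataclass
class Goal:
    text: str
    status: str = "pending"  # set to one of VALID_STATUSES by evaluate_goals
    explanation: str = ""
    evidence: list[str] = field(default_factory=list)


def infer_goals(llm: LLM, user_message: str) -> list[str]:
    """Stage 1: extract the goals stated in one user message."""
    prompt = ("List every question, request, offer, or suggestion in this message "
              f"as a JSON array of strings.\nMessage: {user_message}")
    return json.loads(llm(prompt))


def merge_goals(llm: LLM, existing: list[Goal], new: list[str]) -> list[Goal]:
    """Stage 2: reconcile new goals with existing ones (duplicates, conflicts, updates)."""
    prompt = ("Merge these goal lists: drop duplicates and let newer goals replace "
              "older goals they contradict. Return a JSON array of strings.\n"
              f"Existing: {json.dumps([g.text for g in existing])}\nNew: {json.dumps(new)}")
    merged = json.loads(llm(prompt))
    # Reuse Goal objects whose text survived unchanged so their last evaluation is kept.
    by_text = {g.text: g for g in existing}
    return [by_text.get(text, Goal(text)) for text in merged]


def evaluate_goals(llm: LLM, goals: list[Goal], response: str) -> list[Goal]:
    """Stage 3: label each goal against the chat LLM's response, with explanation and evidence."""
    for goal in goals:
        prompt = ("Does the response confirm, contradict, or ignore the goal? Reply in JSON "
                  'with keys "status", "explanation", and "evidence" (a list of quotes).\n'
                  f"Goal: {goal.text}\nResponse: {response}")
        result = json.loads(llm(prompt))
        if result["status"] not in VALID_STATUSES:
            raise ValueError(f"unexpected status: {result['status']!r}")
        goal.status = result["status"]
        goal.explanation = result["explanation"]
        goal.evidence = list(result["evidence"])
    return goals


# Usage with a scripted stand-in for the goal-pipeline LLM, so the sketch runs offline.
def scripted_llm(prompt: str) -> str:
    if prompt.startswith("List every"):
        return '["Keep it under 200 words", "Use a formal tone"]'
    if prompt.startswith("Merge"):
        return '["Keep it under 200 words", "Use a formal tone"]'
    return '{"status": "confirm", "explanation": "Stated directly.", "evidence": ["Dear committee"]}'


goals = merge_goals(scripted_llm, [], infer_goals(scripted_llm, "Write a short, formal cover letter."))
goals = evaluate_goals(scripted_llm, goals, "Dear committee, ...")
```

Keeping the pipeline LLM behind a plain callable mirrors the separation described above. The goal pipeline never touches the main chat model; it only sees the user message and the response text.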

Figure 1: An example of the conversational goal pipeline in action, showing inference, merging, and evaluation of goals with visual feedback in the chat interface.

This architecture enables real-time tracking of global (conversation-level) goals. It can be adapted to other LLMs and domains by modifying the prompt templates and goal definitions.
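Because every stage is driven by a prompt, retargeting the pipeline to another domain can come down to swapping goal definitions and template text. The sketch below is illustrative only. `GOAL_DEFINITIONS`, `INFERENCE_TEMPLATE`, and `build_inference_prompt` are hypothetical names. The "programming" entry is an assumed example, since the source evaluates writing tasks only.

```python
from string import Template

# Hypothetical goal definitions per domain; the source's implementation targets writing tasks.
GOAL_DEFINITIONS = {
    "writing": "questions, requests, offers, or suggestions about the text being written",
    "programming": "requests about code behavior, style, or constraints",
}

# Hypothetical inference template; $definition and $message are filled in at call time.
INFERENCE_TEMPLATE = Template(
    "A goal is any of the following: $definition.\n"
    "List every goal in the message below as a JSON array of strings.\n"
    "Message: $message"
)


def build_inference_prompt(domain: str, message: str) -> str:
    """Render the inference prompt for a domain; raises KeyError for an unknown domain."""
    return INFERENCE_TEMPLATE.substitute(
        definition=GOAL_DEFINITIONS[domain], message=message
    )


prompt = build_inference_prompt("programming", "Add type hints")
```

`Template.substitute` raises `KeyError` if a placeholder is left unfilled. This catches template and definition mismatches early rather than sending a malformed prompt.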

User Interface and Visualization Components

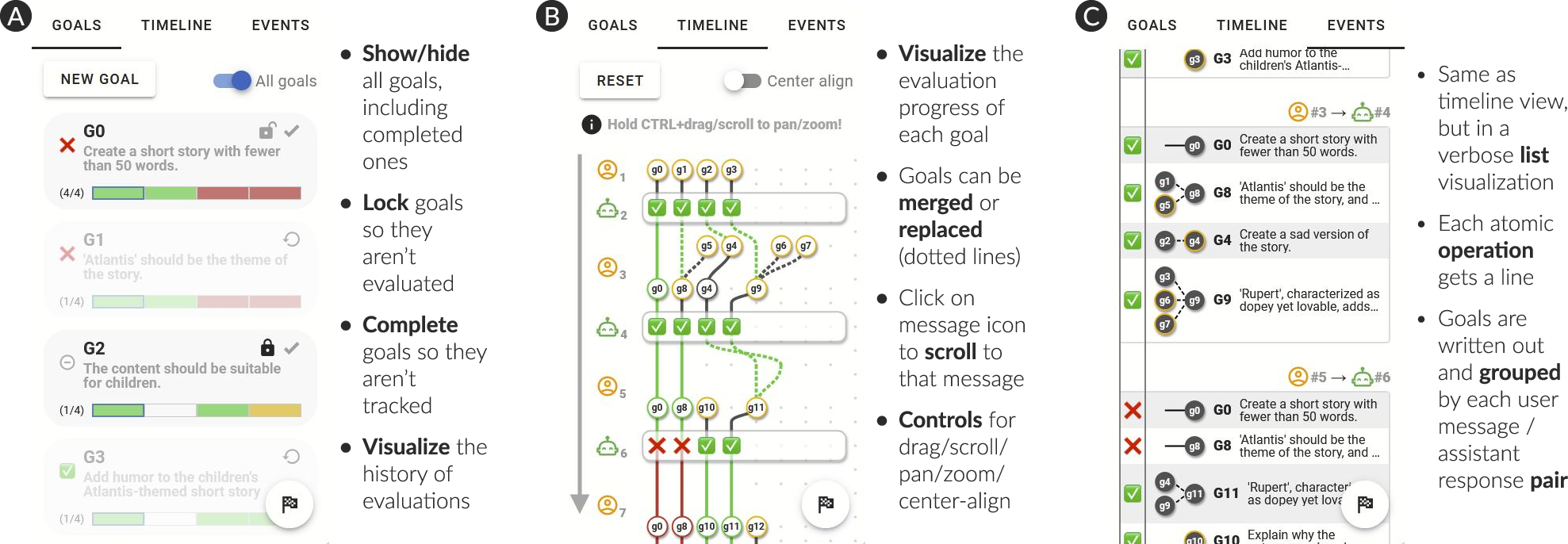

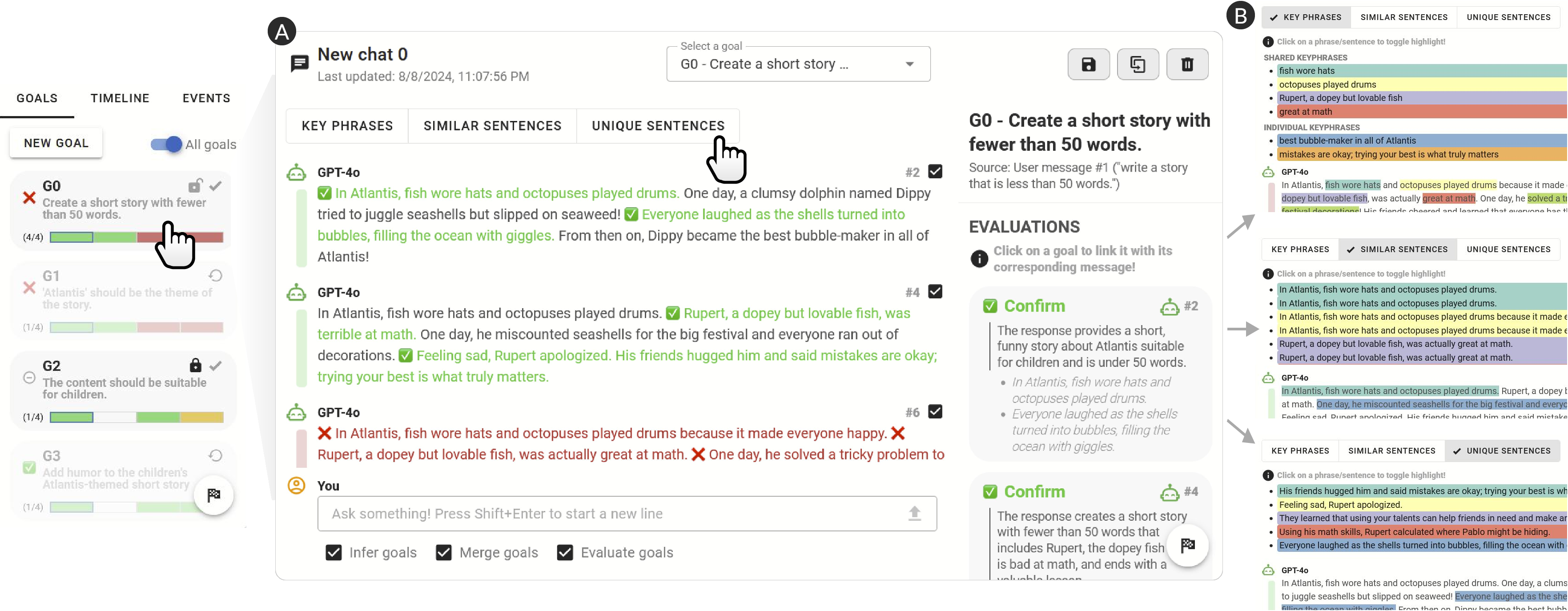

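OnGoal integrates several visualization components to support sensemaking and reduce cognitive load. These include inline goal glyphs and explanations shown alongside LLM responses, an individual goal view for reviewing a goal's progress over the conversation, and text highlighting.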
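Text highlighting, one of OnGoal's visual aids, can be approximated by locating the evaluator's evidence inside the LLM response. This sketch makes two assumptions:

- Evidence arrives as verbatim quotes. A real model may paraphrase, which would call for fuzzy matching.
- Plain `[[`/`]]` markers stand in for the frontend's styling.

The function names are hypothetical.

```python
from __future__ import annotations

import re


def find_evidence_spans(response: str, evidence: list[str]) -> list[tuple[int, int]]:
    """Return sorted, non-overlapping (start, end) offsets of each verbatim evidence quote."""
    spans = []
    for quote in evidence:
        if not quote:  # an empty pattern would match at every position
            continue
        for m in re.finditer(re.escape(quote), response):
            spans.append((m.start(), m.end()))
    spans.sort()
    # Merge overlapping or touching spans so highlights never nest.
    merged: list[tuple[int, int]] = []
    for start, end in spans:
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def highlight(response: str, spans: list[tuple[int, int]],
              open_tag: str = "[[", close_tag: str = "]]") -> str:
    """Wrap each span in markers; a real UI would emit styled elements instead."""
    out, pos = [], 0
    for start, end in spans:
        out.append(response[pos:start])
        out.append(open_tag + response[start:end] + close_tag)
        pos = end
    out.append(response[pos:])
    return "".join(out)


text = "Dear committee, I am applying."
spans = find_evidence_spans(text, ["Dear committee", "applying"])
```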

Implementation Details

The backend is implemented in Python, leveraging the OpenAI API for LLM inference and evaluation. The frontend is built with Vue.js and D3.js for interactive visualizations. The system is LLM-agnostic and can be adapted to open-source or local models with minimal changes. Prompt templates for each pipeline stage are provided, enabling reproducibility and extensibility.
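LLM-agnosticism is easiest to keep if pipeline code depends on a narrow interface rather than on a vendor SDK. This is a minimal sketch, assuming a hypothetical `ChatModel` protocol with a single `complete` method. The OpenAI adapter uses the real `chat.completions.create` call from the `openai` Python package (v1 or later). `CannedModel` is an offline stand-in for testing.

```python
from __future__ import annotations

from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical interface: anything that maps a prompt to a completion string."""

    def complete(self, prompt: str) -> str: ...


class OpenAIChatModel:
    """Adapter for the OpenAI Python SDK; needs `pip install openai` and an API key."""

    def __init__(self, model: str = "gpt-4o", base_url: str | None = None) -> None:
        from openai import OpenAI  # imported lazily so other backends don't need the package
        self._client = OpenAI(base_url=base_url)
        self._model = model

    def complete(self, prompt: str) -> str:
        resp = self._client.chat.completions.create(
            model=self._model,
            messages=[{"role": "user", "content": prompt}],
        )
        return resp.choices[0].message.content or ""


class CannedModel:
    """Offline stand-in that replays a fixed reply, e.g. for tests."""

    def __init__(self, reply: str) -> None:
        self.reply = reply

    def complete(self, prompt: str) -> str:
        return self.reply


def run_stage(model: ChatModel, prompt: str) -> str:
    """Pipeline code depends only on the ChatModel interface, not on a vendor SDK."""
    return model.complete(prompt)
```

Swapping backends then means passing a different object to the pipeline. For a local model served behind an OpenAI-compatible endpoint, `OpenAIChatModel(model=..., base_url=...)` may be enough, though compatibility varies by server.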

Empirical Evaluation

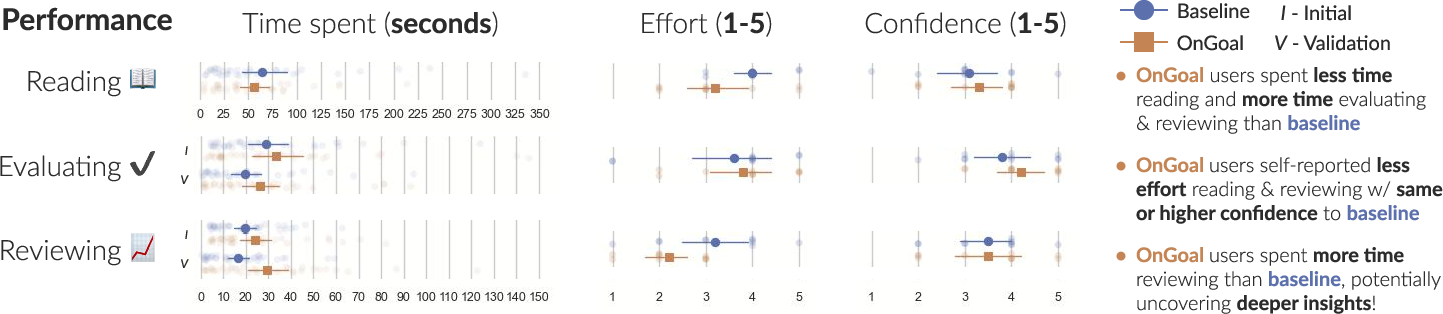

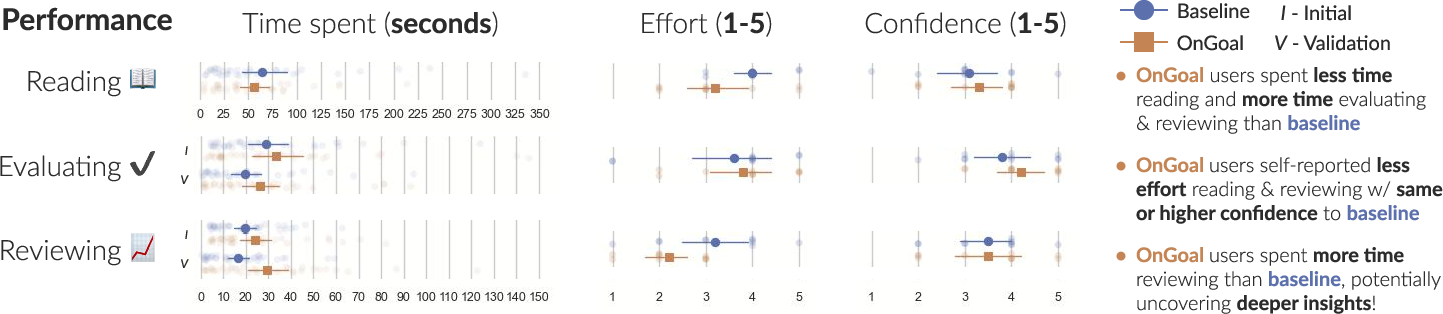

A controlled user study (n=20) compared OnGoal with a baseline chat interface that lacked goal tracking and visualization. Participants completed writing tasks that required satisfying multiple, sometimes conflicting, goals. Key findings include:

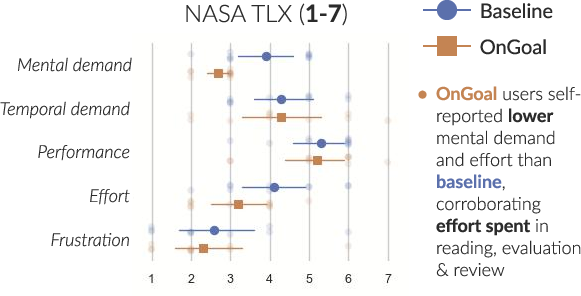

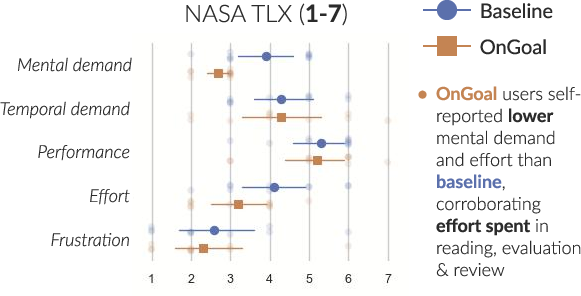

- Reduced Cognitive Load: OnGoal users reported significantly lower mental demand and effort (NASA TLX), and spent less time reading chat logs, reallocating effort to evaluating and reviewing goals.

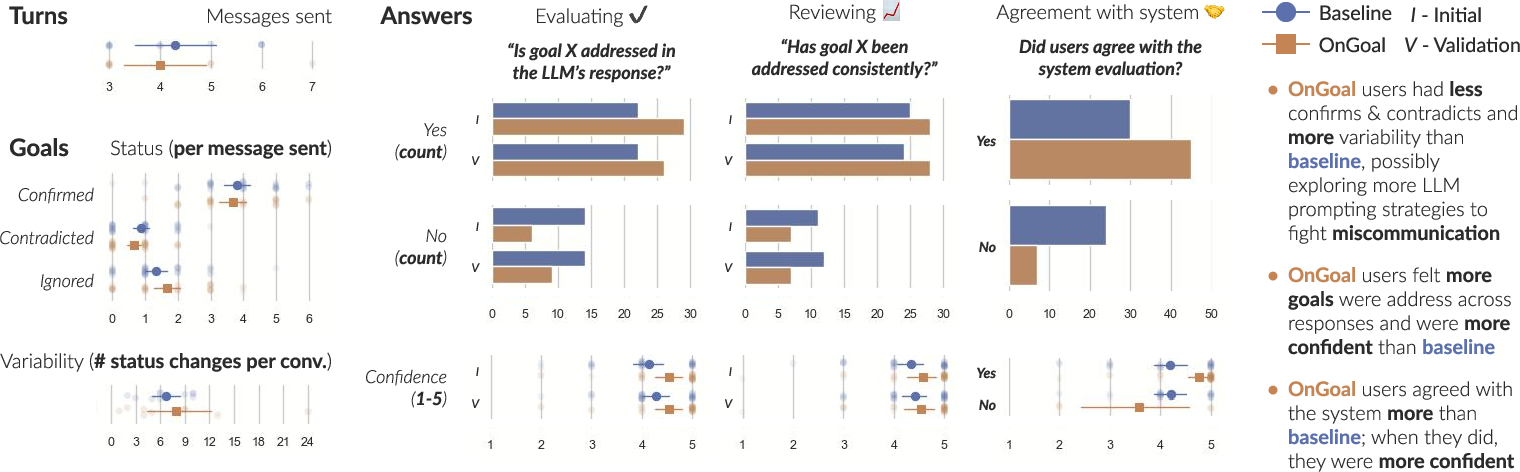

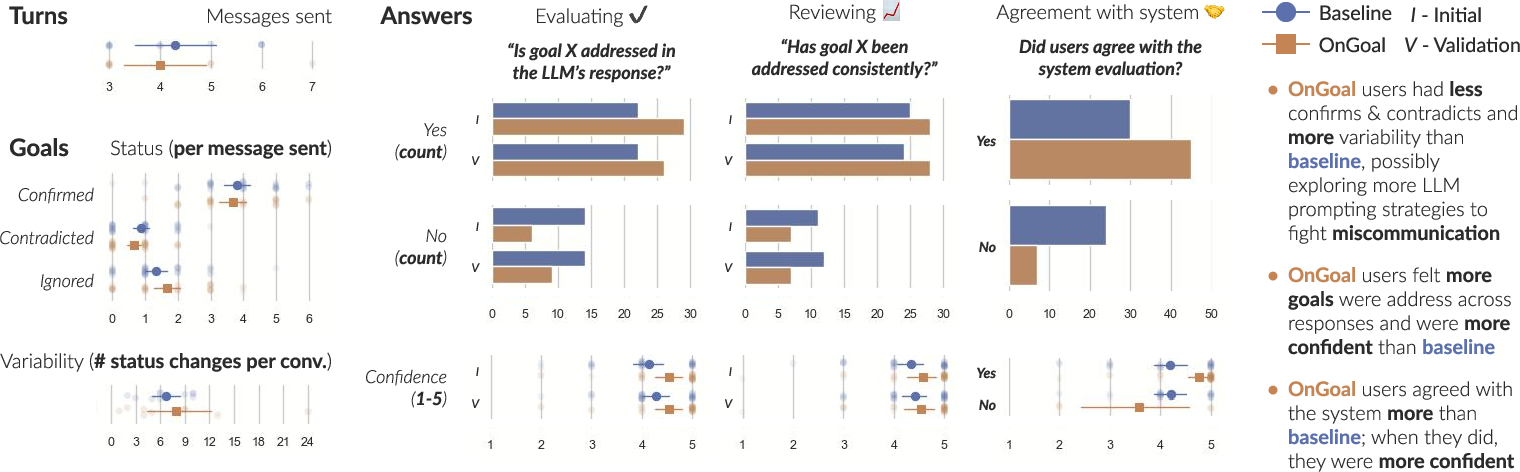

- Enhanced Goal Management: Users employing OnGoal experimented more with prompting strategies, adapted their communication based on system feedback, and demonstrated higher variability in goal status changes, indicating more active engagement.

- Improved Confidence and Agreement: OnGoal users expressed higher confidence in their evaluations and greater agreement with system-generated goal assessments.

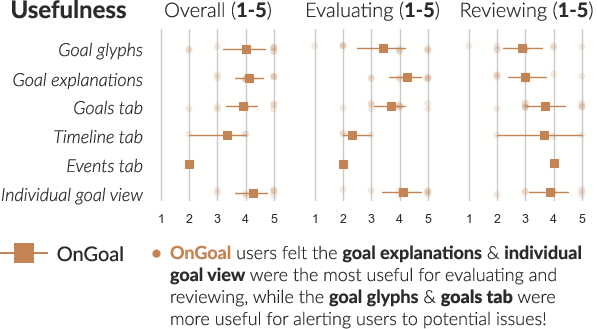

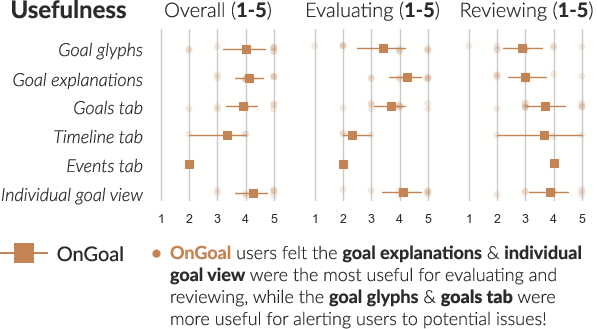

- Feature Usefulness: Inline goal glyphs and explanations were rated most useful for evaluation, while the individual goal view and text highlighting were critical for reviewing progress and identifying LLM issues.

Figure 4: Time allocation differences between interfaces, with OnGoal users spending more time on evaluation and review, and less on exhaustive reading.

Figure 5: NASA TLX workload ratings, showing lower mental demand and effort for OnGoal compared to baseline.

Figure 6: Interaction metrics, including turns taken, goal status changes, and agreement with system evaluations.

Figure 7: Usefulness ratings for OnGoal features, highlighting the importance of explanations and individual goal views.
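The interaction metrics above (goal status changes and agreement with system evaluations) can be made concrete with a small computation. The source does not define how these metrics were computed. This sketch therefore assumes a hypothetical per-turn log of each goal's status and a paired list of user and system labels. The summaries shown are one possible analysis, not the study's.

```python
from __future__ import annotations

from statistics import mean, pstdev


def count_status_changes(history: list[str]) -> int:
    """Number of consecutive turns at which a goal's status label changed."""
    return sum(1 for prev, cur in zip(history, history[1:]) if prev != cur)


def summarize_changes(histories: dict[str, list[str]]) -> tuple[float, float]:
    """Mean and population standard deviation of status changes across goals."""
    counts = [count_status_changes(h) for h in histories.values()]
    return mean(counts), pstdev(counts)


def agreement_rate(system: list[str], user: list[str]) -> float:
    """Fraction of evaluations where the user's own judgment matches the system's label."""
    if len(system) != len(user) or not system:
        raise ValueError("need two non-empty label lists of equal length")
    return sum(s == u for s, u in zip(system, user)) / len(system)


# Hypothetical log: one goal flips twice, the other never changes.
histories = {
    "formal tone": ["ignore", "confirm", "confirm", "contradict"],
    "under 200 words": ["confirm", "confirm", "confirm", "confirm"],
}
avg, spread = summarize_changes(histories)
```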

Thematic Analysis and Emergent Issues

Qualitative analysis revealed that OnGoal facilitated more dynamic and reflective goal communication, allowed users to offload cognitive effort to the system, and enabled more effective identification of LLM misalignment or drift. However, the introduction of explicit goal evaluation also surfaced interpretive complexity and occasional disagreement between user and system assessments, underscoring the need for user feedback mechanisms and further refinement of evaluation prompts.

Design Implications and Future Directions

The paper suggests several actionable implications for the design of LLM chat interfaces:

- Support Multiple Goal Communication Strategies: Interfaces should accommodate both proactive and reactive goal setting, and allow for flexible, iterative refinement of objectives.

- Visualize Goal Alignment and Progress: Real-time, context-sensitive visualizations can reduce cognitive load and improve user awareness of LLM behavior.

- Enable Feedback and Personalization: Incorporating user feedback into the goal evaluation pipeline can improve alignment and trust, and support adaptation to individual preferences.

- Extend to Local and Fine-Grained Goals: Future work should explore local (e.g., paragraph- or sentence-level) goal tracking and support for more complex, hierarchical goal structures.
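One way to act on the feedback implication is to feed user overrides back into the evaluation prompt as in-context examples. This is a hypothetical sketch, not something the paper implements. The following are all assumptions:

- the `Correction` record
- the prompt wording
- keeping only the `k` most recent corrections

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Correction:
    """Hypothetical record of a user overriding the system's goal evaluation."""
    goal: str
    system_status: str
    user_status: str
    reason: str


def build_evaluation_prompt(goal: str, response: str,
                            corrections: list[Correction], k: int = 3) -> str:
    """Prepend up to k most recent user corrections as examples before the evaluation request."""
    recent = corrections[-k:] if k > 0 else []  # corrections[-0:] would return every item
    lines = [
        f'Earlier, the goal "{c.goal}" was labeled {c.system_status}, '
        f"but the user judged it {c.user_status} because: {c.reason}"
        for c in recent
    ]
    lines.append("Does the response confirm, contradict, or ignore the goal?")
    lines.append(f"Goal: {goal}\nResponse: {response}")
    return "\n".join(lines)


history = [Correction("Be concise", "confirm", "ignore", "the reply was still too long")]
prompt = build_evaluation_prompt("Use a formal tone", "Hey there!", history)
```

Capping the examples at `k` keeps prompt length bounded as corrections accumulate. It also biases the evaluator toward the user's most recent preferences.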

Limitations

The current evaluation is limited to writing tasks and a specific set of goal types. The generalizability to other domains (e.g., programming, data analysis) and the robustness of the goal pipeline across different LLMs remain open questions. Additionally, the subjective nature of goal evaluation highlights the need for more sophisticated, possibly user-adaptive, evaluation mechanisms.

Conclusion

OnGoal demonstrates that augmenting LLM chat interfaces with explicit, real-time goal tracking and visualization can significantly improve users' ability to manage, evaluate, and review their conversational objectives in multi-turn dialogue. The system architecture, prompt engineering strategies, and visualization techniques presented could be extended to other LLM-driven applications, although, as noted under Limitations, this remains to be tested. Future research should focus on expanding the scope of goal types, integrating user feedback for adaptive evaluation, and exploring longitudinal effects in open-ended, real-world tasks.