- The paper introduces AWorld, which achieves 14.6x faster rollout generation through distributed processing, improving agentic AI performance on GAIA.

- It integrates modular components including agent construction, communication protocols, and state management to streamline scalable reinforcement learning.

- Empirical results demonstrate substantial improvements in model pass rates, underscoring efficient experience generation as critical for robust agentic learning.

AWorld: Orchestrating the Training Recipe for Agentic AI

Introduction

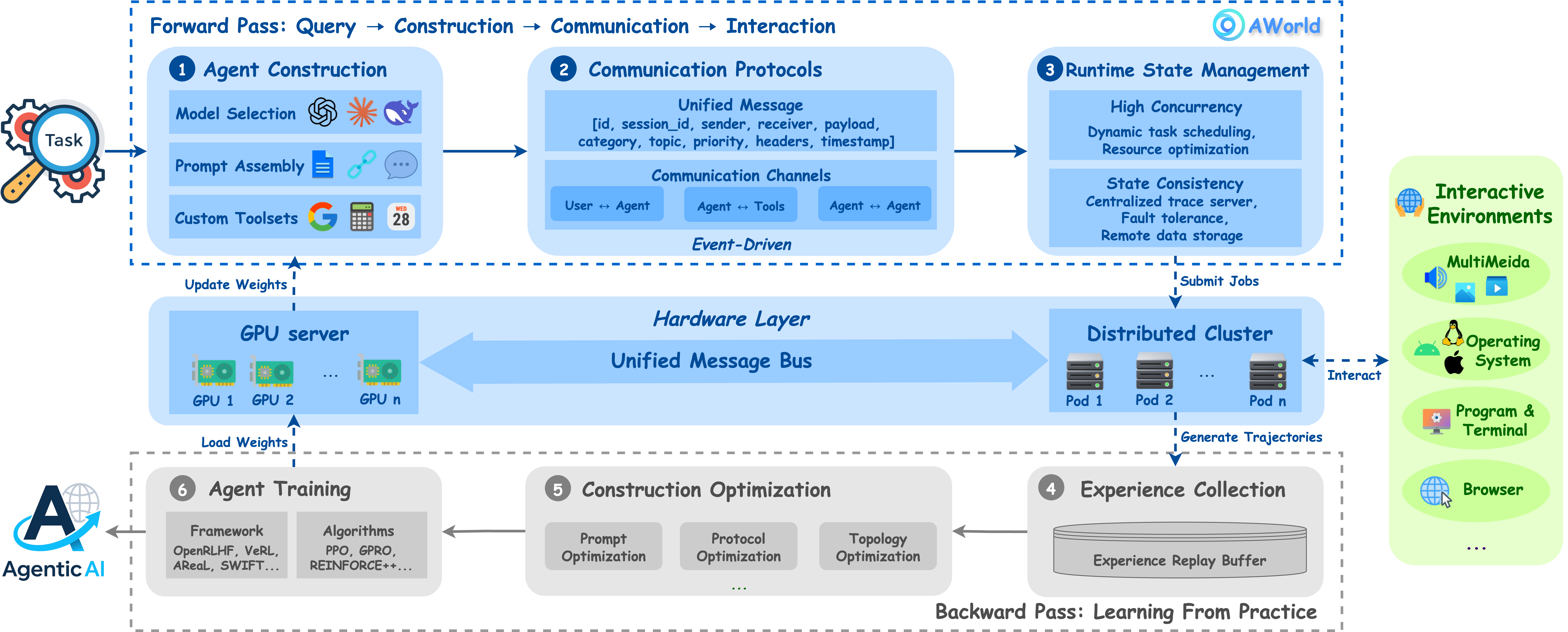

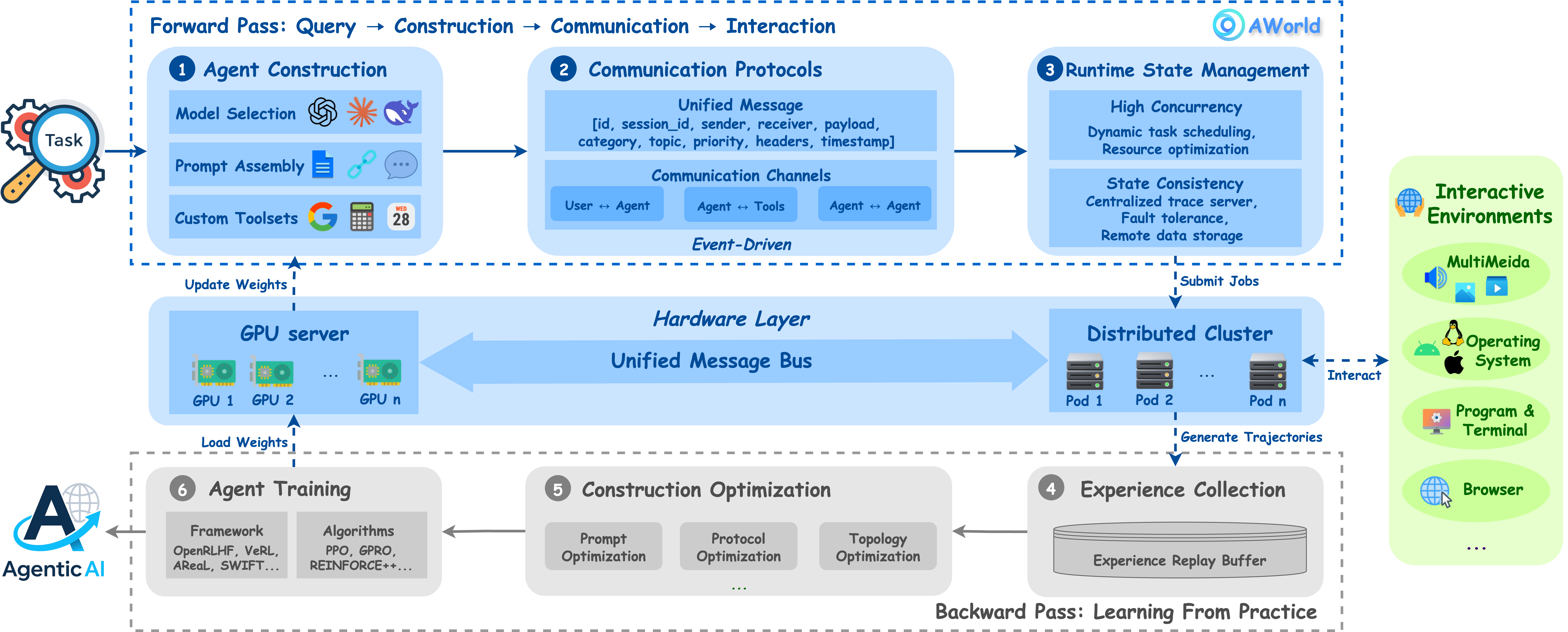

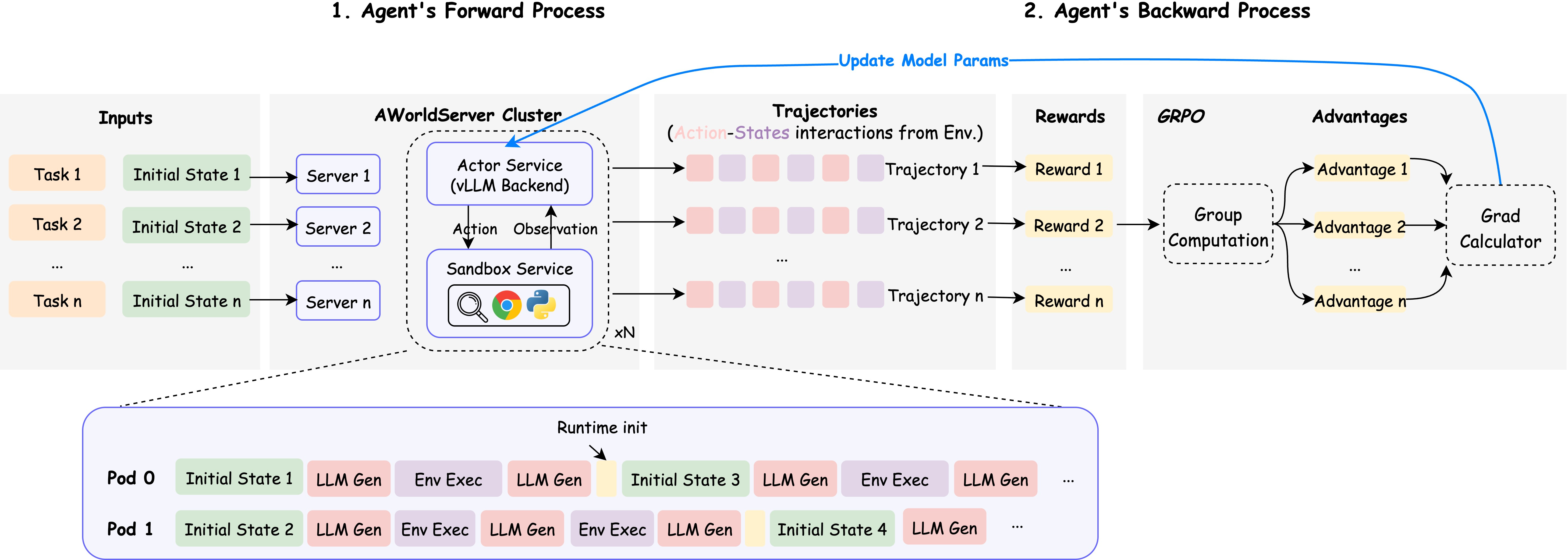

The paper presents AWorld, an open-source framework designed to address the computational bottlenecks in agentic AI training, particularly those arising from inefficient experience generation in complex environments. The work is motivated by the limitations of current LLMs in solving real-world, multi-step reasoning tasks, as exemplified by the GAIA benchmark. AWorld integrates model selection, runtime construction, communication protocols, and training orchestration to enable scalable, efficient agent-environment interaction and reinforcement learning.

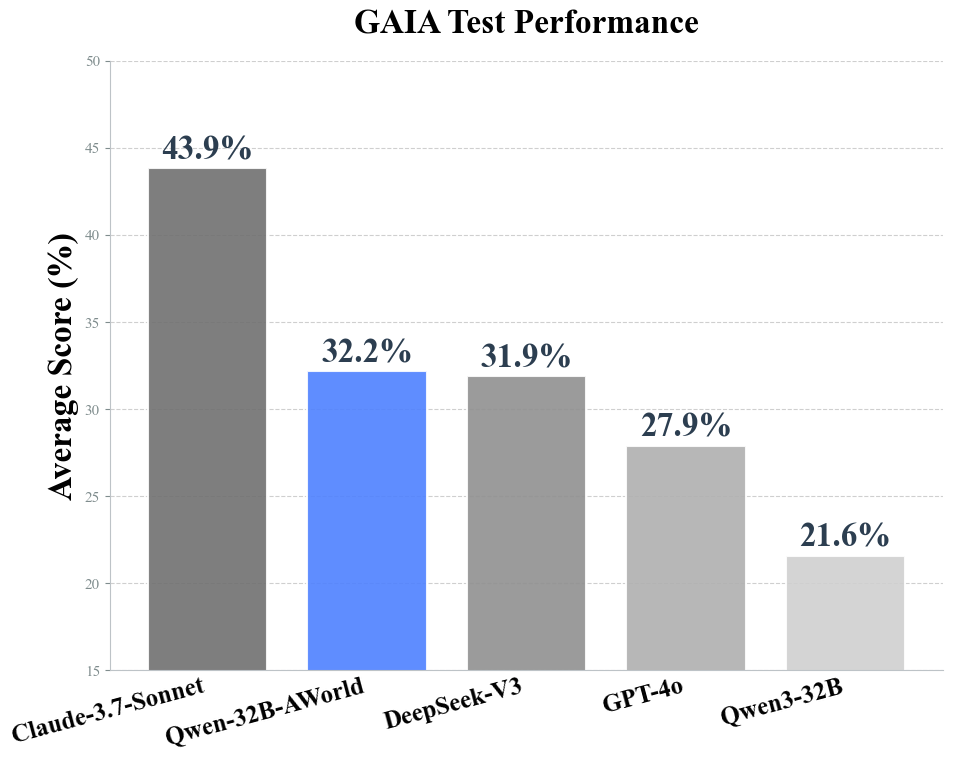

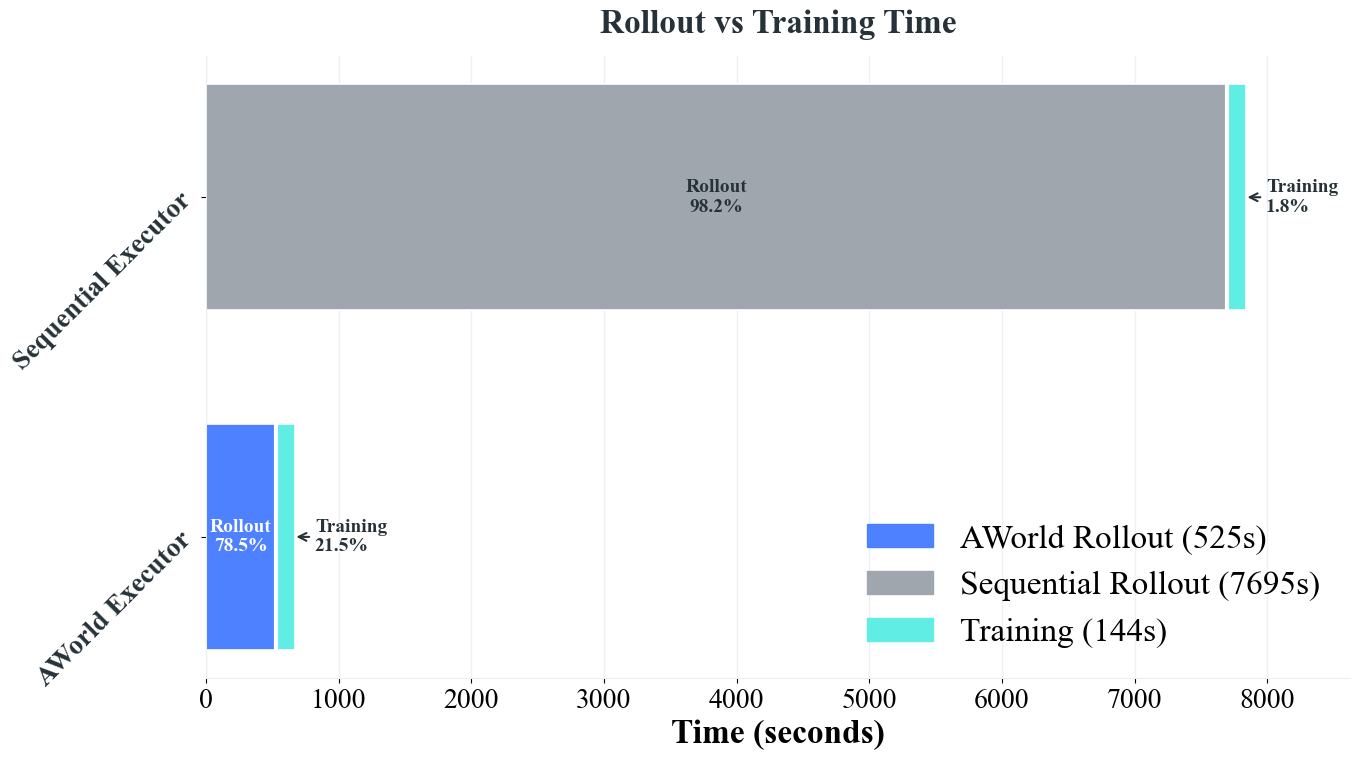

Figure 1: AWorld enables substantial performance gains on GAIA by accelerating experience generation 14.6x via distributed rollouts, making RL practical for large models.

Framework Architecture

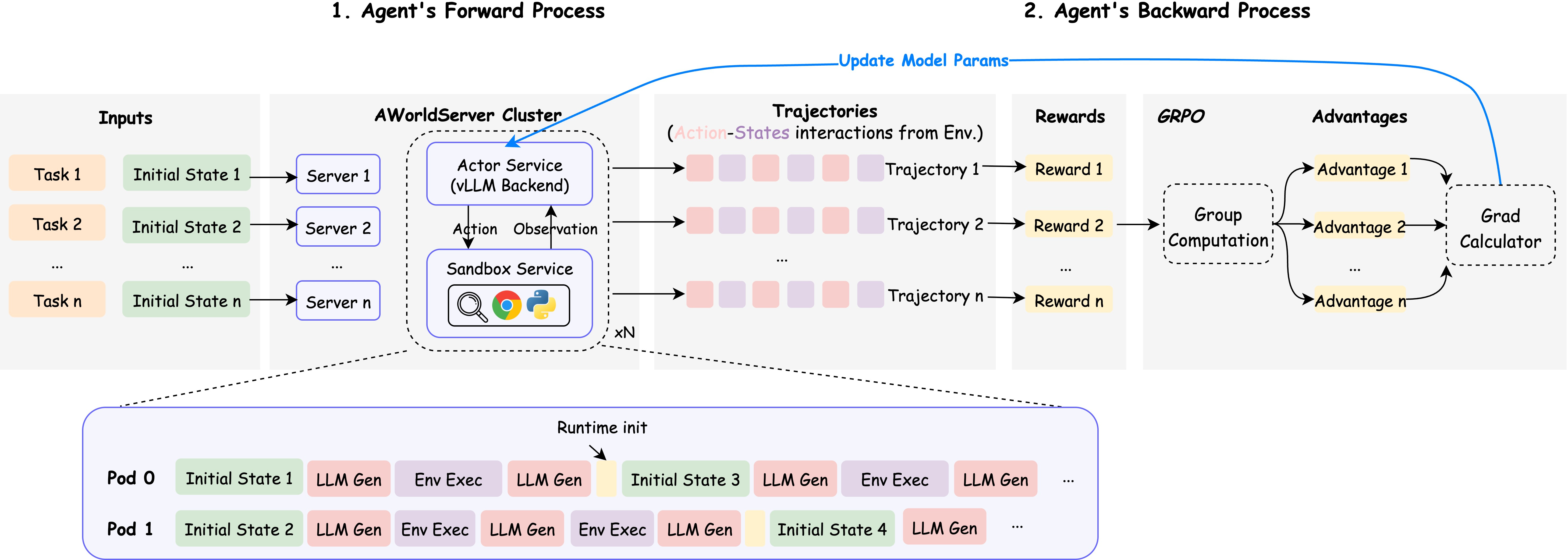

AWorld is architected to support the full lifecycle of agentic AI development, from agent instantiation to distributed training. The framework is modular, comprising four principal components:

- Agent Construction: Agents are instantiated with configurable logic, toolsets, and planning capabilities. The system supports prompt assembly, custom tool integration (e.g., browser automation, code execution), and flexible agent topologies for multi-agent collaboration.

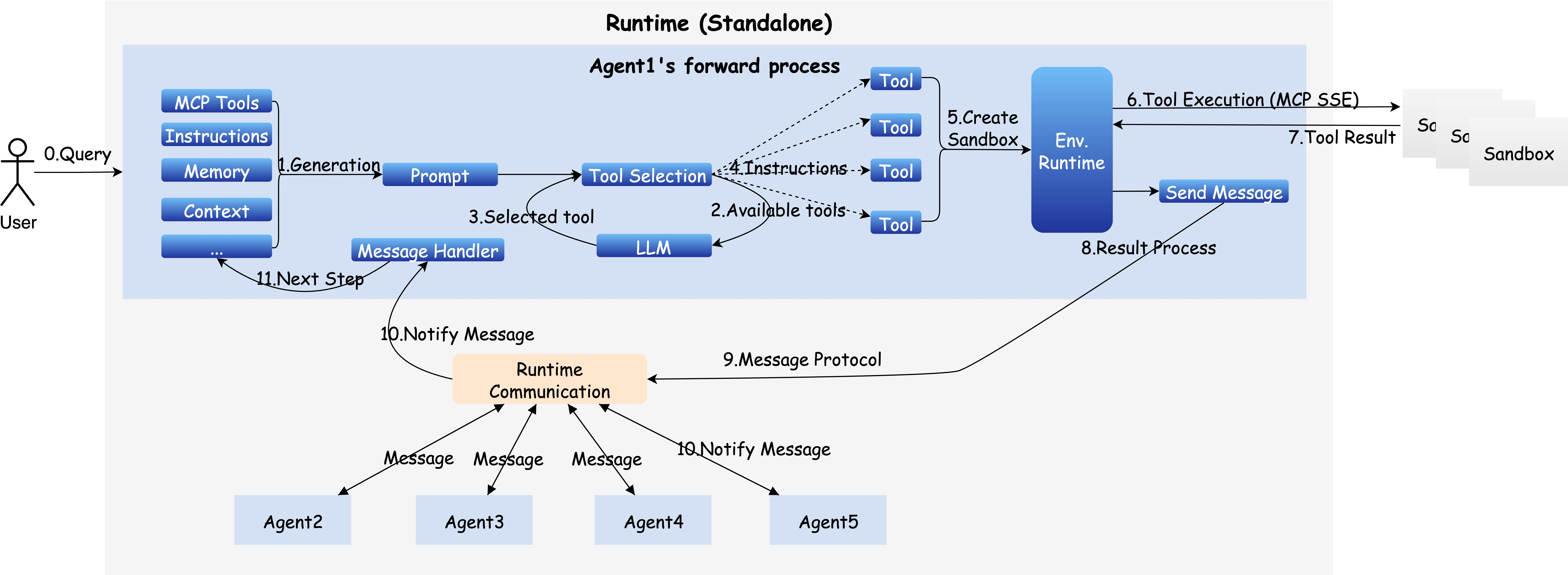

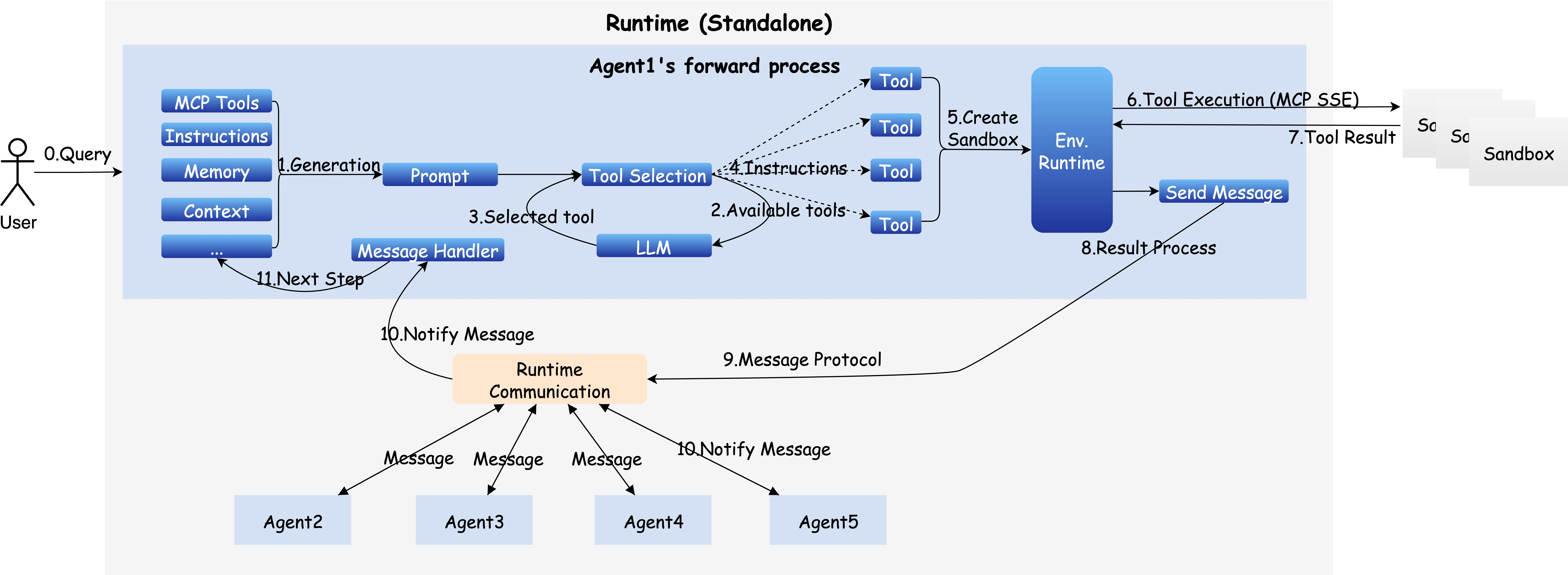

- Communication Protocols: A unified message-passing architecture enables robust communication between agents, tools, and environments. The Message API supports event-driven workflows, error handling, and extensibility for complex task coordination.

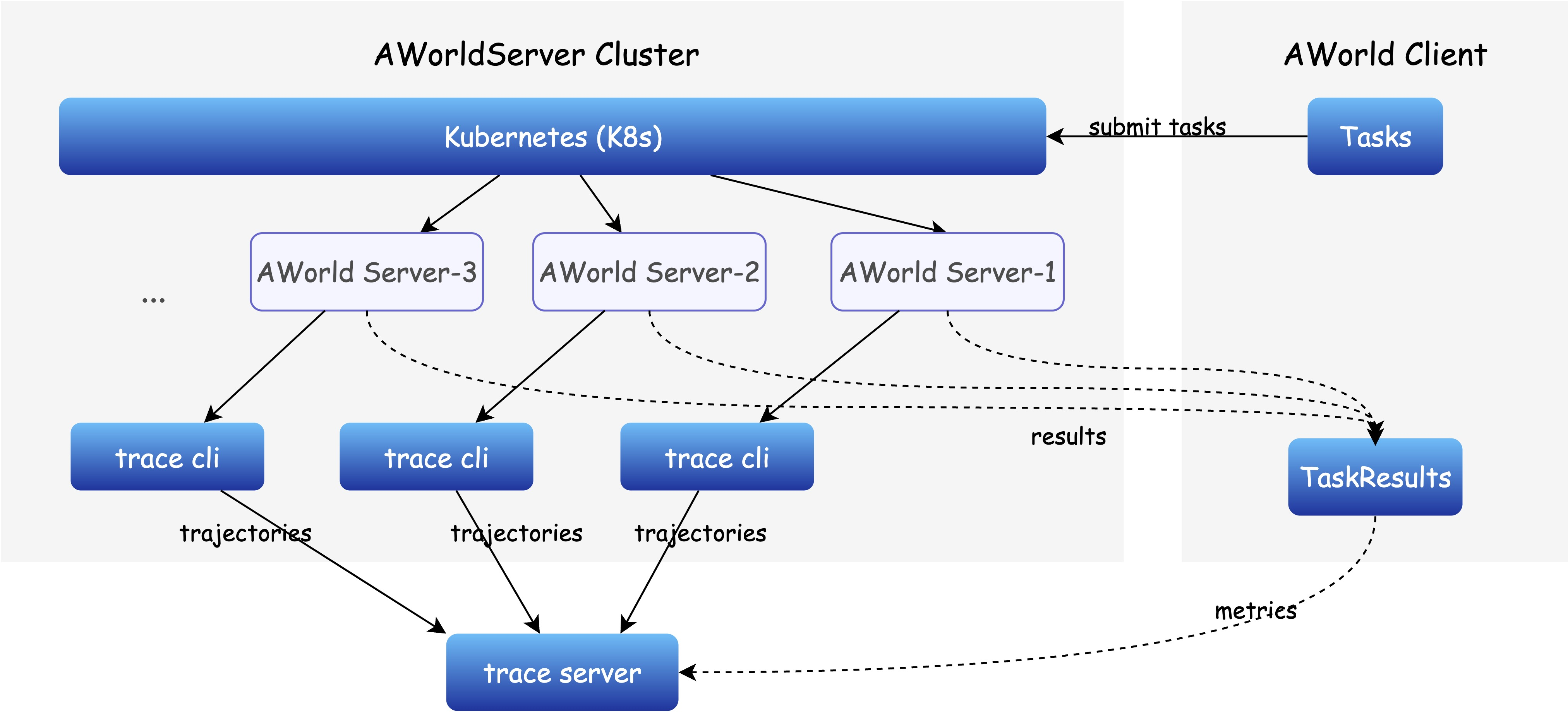

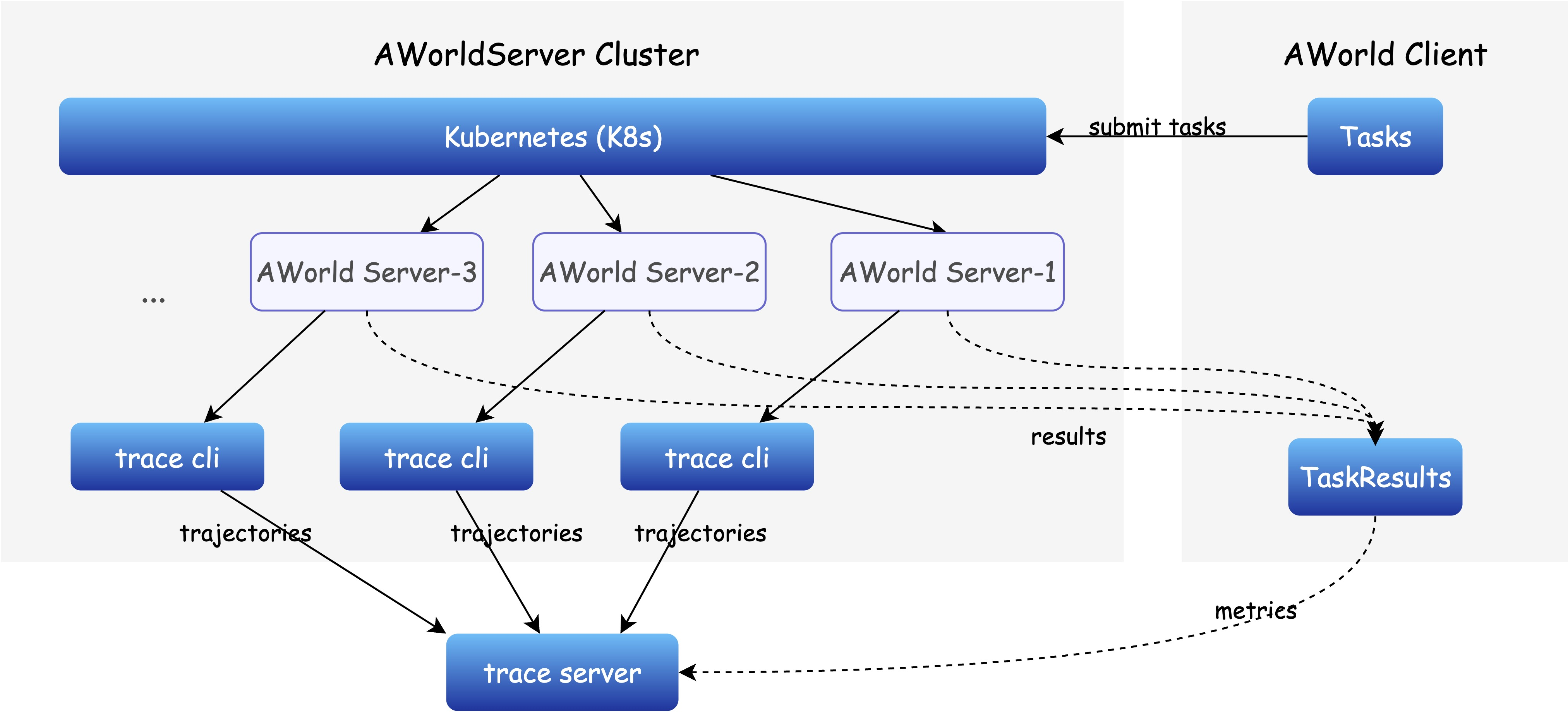

- Runtime State Management: Distributed execution is managed via Kubernetes, allowing high-concurrency rollouts across sandboxed environments. State consistency is maintained through centralized trace servers and remote storage, supporting recovery and large-scale evaluation.

- Training Orchestration: AWorld decouples rollout generation from model training, integrating with external RL frameworks (e.g., SWIFT, OpenRLHF) to enable scalable policy optimization.

Figure 2: The AWorld architecture supports both forward (experience generation) and backward (policy optimization) passes, enabling closed-loop agentic learning.

Figure 3: Message workflow in AWorld runtime, illustrating agent query handling and inter-component communication.
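The event-driven message passing described above can be illustrated with a small in-process sketch. The `Message` and `MessageBus` names, the topic strings, and the toy calculator tool are all hypothetical and chosen for illustration; this is not AWorld's actual Message API, only one plausible shape for a bus in which handlers react to messages, emit follow-up messages, and have their failures converted into error events.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Any


@dataclass
class Message:
    """Hypothetical message envelope (not AWorld's real schema)."""
    topic: str          # routing key, e.g. "tool.call"
    sender: str         # name of the component that produced the message
    payload: Any = None


class MessageBus:
    """Toy event-driven bus: handlers subscribe to topics and may emit replies."""

    def __init__(self):
        self._handlers = defaultdict(list)
        self._queue = deque()
        self.log = []   # every delivered message, in delivery order

    def subscribe(self, topic, handler):
        self._handlers[topic].append(handler)

    def publish(self, message):
        self._queue.append(message)

    def run(self):
        # Deliver messages until the queue drains.
        while self._queue:
            msg = self._queue.popleft()
            self.log.append(msg)
            for handler in self._handlers[msg.topic]:
                try:
                    for reply in handler(msg) or []:
                        self.publish(reply)
                except Exception as exc:
                    # Turn handler failures into error events; never re-wrap
                    # failures of error handlers, to avoid an endless loop.
                    if msg.topic != "error":
                        self.publish(Message("error", "bus", repr(exc)))


def agent(msg):
    # The agent turns a user query into a tool call.
    return [Message("tool.call", "agent", {"expr": msg.payload})]


def calculator(msg):
    # Stand-in tool: adds two integers written as "a+b".
    a, b = msg.payload["expr"].split("+")
    return [Message("tool.result", "calculator", int(a) + int(b))]


def run_query(query):
    bus = MessageBus()
    bus.subscribe("user.query", agent)
    bus.subscribe("tool.call", calculator)
    bus.publish(Message("user.query", "user", query))
    bus.run()
    return bus.log
```

Calling `run_query("2+3")` produces a log of three messages (query, tool call, tool result), while a malformed query such as `"2+x"` ends with an `error` message instead of crashing the loop. Routing everything through one envelope type is what makes it straightforward to add new agents or tools without changing existing handlers.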

Distributed Rollout and Experience Generation

AWorld's core innovation lies in its distributed rollout engine, which orchestrates massive parallelization of agent-environment interactions. By leveraging Kubernetes, the system can concurrently execute thousands of long-horizon tasks, each encapsulated in isolated pods. This design overcomes the resource contention and instability inherent in single-node setups, making large-scale experience generation tractable.

Figure 4: Massively parallel rollouts in AWorld, managed by Kubernetes, enable scalable data generation for RL.

Figure 5: Action-state rollout demonstration, with multiple pods running in parallel to maximize experience throughput.
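The fan-out pattern behind parallel rollouts can be sketched locally. The example below is a minimal sketch that substitutes a thread pool for Kubernetes pods and a random simulator for a real agent and environment; `run_rollout`, `generate_experience`, the action names, and the 30% success probability are placeholders invented for illustration, not part of AWorld.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def run_rollout(task_id, attempt, max_steps=5):
    """Simulate one isolated agent-environment episode (placeholder logic)."""
    # Per-rollout seed so each (task, attempt) pair is reproducible.
    rng = random.Random(f"{task_id}-{attempt}")
    trajectory = []
    for step in range(max_steps):
        action = rng.choice(["search", "browse", "run_code", "answer"])
        trajectory.append((step, action))
        if action == "answer":
            break  # the episode ends once the agent commits to an answer
    return {
        "task_id": task_id,
        "attempt": attempt,
        "trajectory": trajectory,
        "success": rng.random() < 0.3,  # assumed success probability
    }


def generate_experience(task_ids, rollouts_per_task, max_workers=8):
    """Run every (task, attempt) pair concurrently and collect the results."""
    jobs = [(t, k) for t in task_ids for k in range(rollouts_per_task)]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves the order of `jobs` in its results.
        return list(pool.map(lambda job: run_rollout(*job), jobs))


def successful_trajectories(results):
    """Keep only the rollouts that solved their task, e.g. for SFT or RL data."""
    return [r for r in results if r["success"]]
```

For example, `generate_experience(["task-a", "task-b"], rollouts_per_task=4)` returns eight rollout records. Because each rollout owns its seed and shares no state, the result is the same regardless of `max_workers`, which mirrors the isolation that separate pods provide in the real system.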

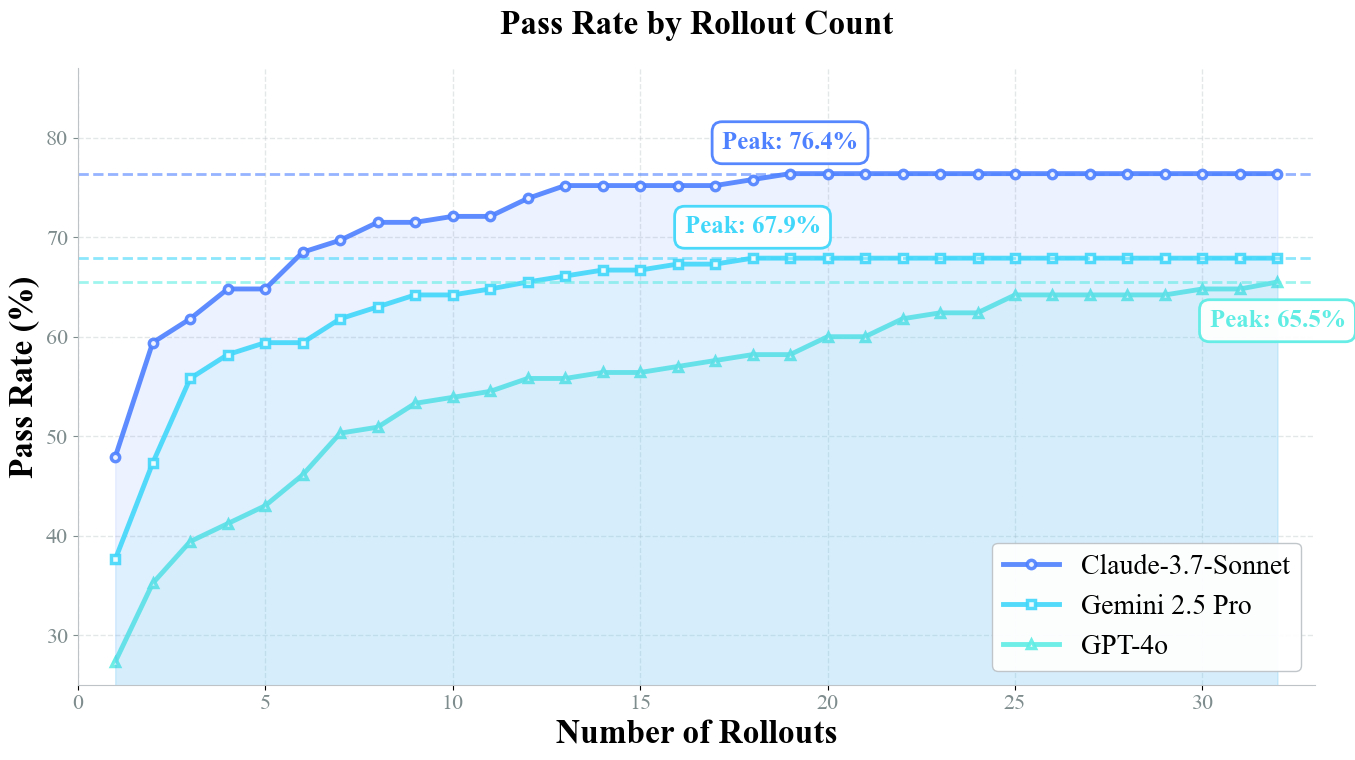

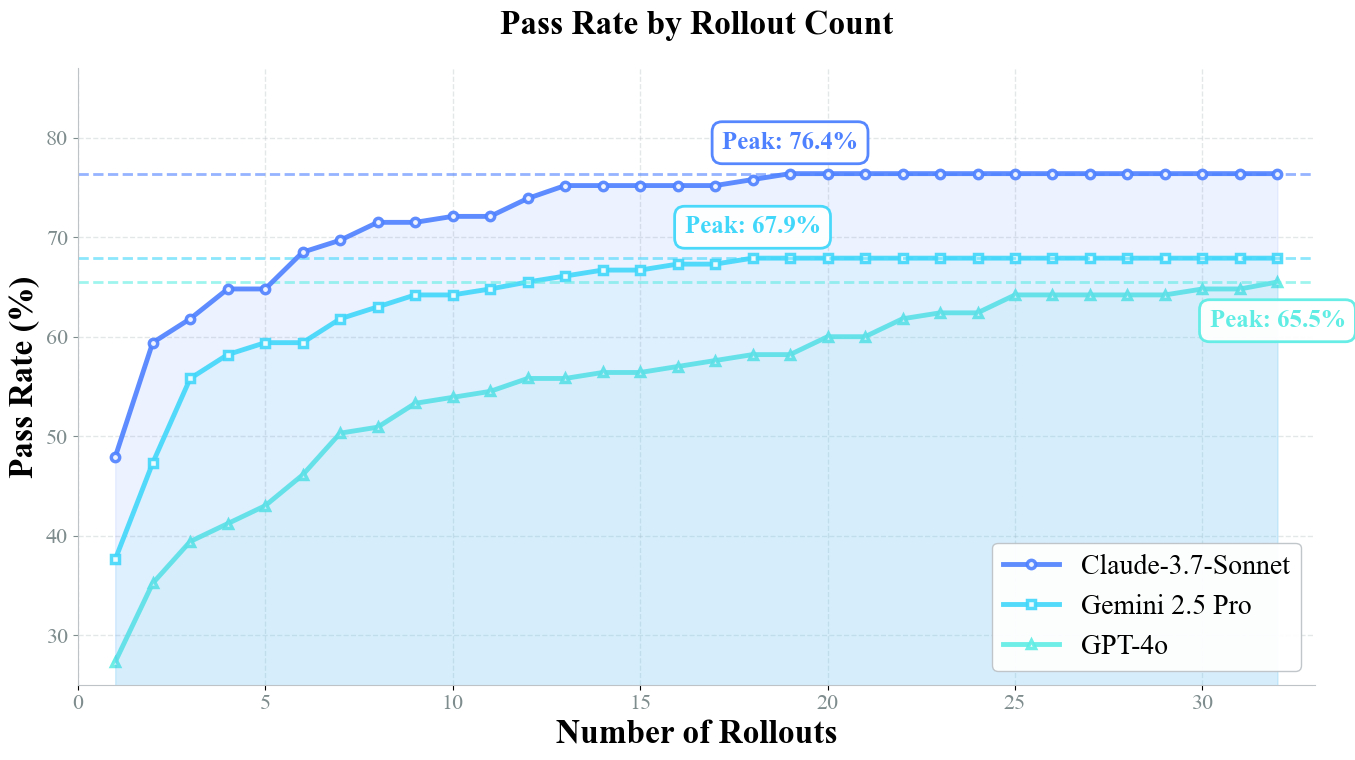

The paper systematically analyzes the relationship between rollout scale and agent performance on the GAIA benchmark. Experiments show a consistent trend: increasing the number of rollouts per task substantially improves pass rates for all evaluated models. For example, Claude-3.7-Sonnet's pass rate rises from 47.9% with a single rollout (pass@1) to 76.4% with 32 rollouts, while GPT-4o's pass rate more than doubles.

Figure 6: Pass rate as a function of rollout scale on GAIA; all models benefit from increased interaction attempts.

This result supports the case that efficient, large-scale experience generation is necessary for agentic learning. On this evidence, the bottleneck in agent training is less model optimization than the ability to generate enough successful trajectories for RL.
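To make the link between rollout count and pass rate concrete, the sketch below computes pass@k with the standard combinatorial estimator: given n rollouts of a task of which c succeed, pass@k is the probability that a random subset of k rollouts contains at least one success. Whether the paper uses this estimator or simply counts tasks solved in at least one of k attempts is not stated here, so treat the choice as an assumption; the function names are illustrative.

```python
from math import comb


def pass_at_k(n, c, k):
    """Chance that at least one of k rollouts, drawn from n with c correct, succeeds."""
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    # comb(n - c, k) counts the k-subsets made only of failed rollouts;
    # it is 0 when fewer than k rollouts failed.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(successes_per_task, n, k):
    """Average pass@k over tasks, given each task's success count out of n rollouts."""
    return sum(pass_at_k(n, c, k) for c in successes_per_task) / len(successes_per_task)
```

With k = 1 this reduces to the per-task success fraction c / n, and with k = n it is 1 for any task solved at least once. That is why a task that is solved only occasionally still contributes to the pass rate once enough rollouts are attempted.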

Efficiency Gains and Training Outcomes

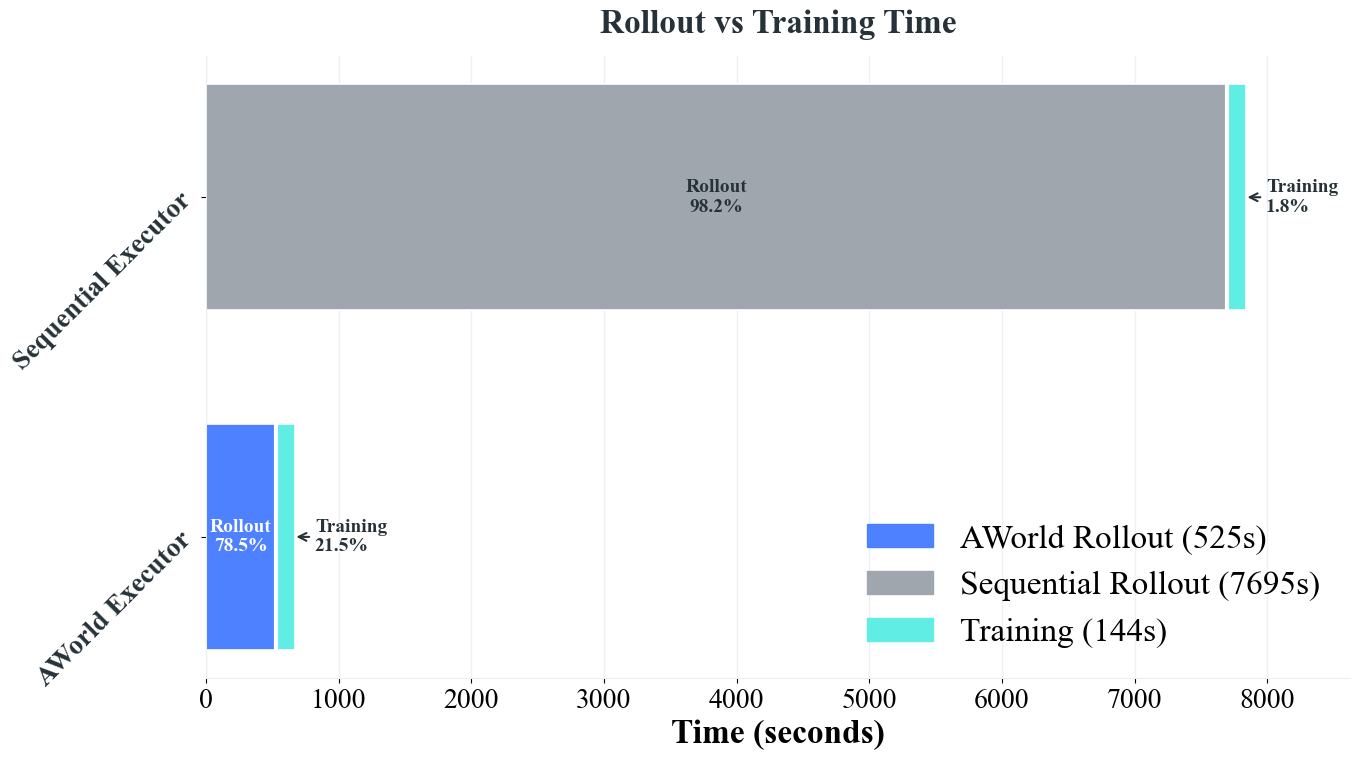

AWorld achieves a 14.6x speedup in rollout generation compared to sequential single-node execution. The total cycle time for rollout plus training falls from 7839s to 669s, about 11.7x end to end; the 14.6x figure refers to the rollout phase alone. This efficiency gain makes RL practical on complex benchmarks.
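The two reported figures can be reconciled with a little arithmetic. The sketch below computes the end-to-end ratio from the stated totals and then solves for the training time implied by the 14.6x rollout speedup, under the assumption (not stated in this summary) that the training phase takes the same time in both settings. The constant and function names are illustrative.

```python
SEQUENTIAL_TOTAL_S = 7839.0    # reported total cycle time, sequential single node
DISTRIBUTED_TOTAL_S = 669.0    # reported total cycle time with AWorld
ROLLOUT_SPEEDUP = 14.6         # reported speedup of the rollout phase


def end_to_end_speedup(seq_total, dist_total):
    """Ratio of total cycle times (rollout plus training)."""
    return seq_total / dist_total


def implied_training_time(seq_total, dist_total, rollout_speedup):
    """Solve (seq_total - T) / (dist_total - T) = rollout_speedup for T.

    Assumes training takes the same time T in both settings, so only the
    rollout portion of each total is accelerated.
    """
    return (rollout_speedup * dist_total - seq_total) / (rollout_speedup - 1.0)


overall = end_to_end_speedup(SEQUENTIAL_TOTAL_S, DISTRIBUTED_TOTAL_S)
training_s = implied_training_time(
    SEQUENTIAL_TOTAL_S, DISTRIBUTED_TOTAL_S, ROLLOUT_SPEEDUP
)
```

Under that assumption the totals correspond to an end-to-end ratio of roughly 11.7x and an implied training time of roughly 142s. Because the 14.6x figure is rounded, the implied training time is only an estimate.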

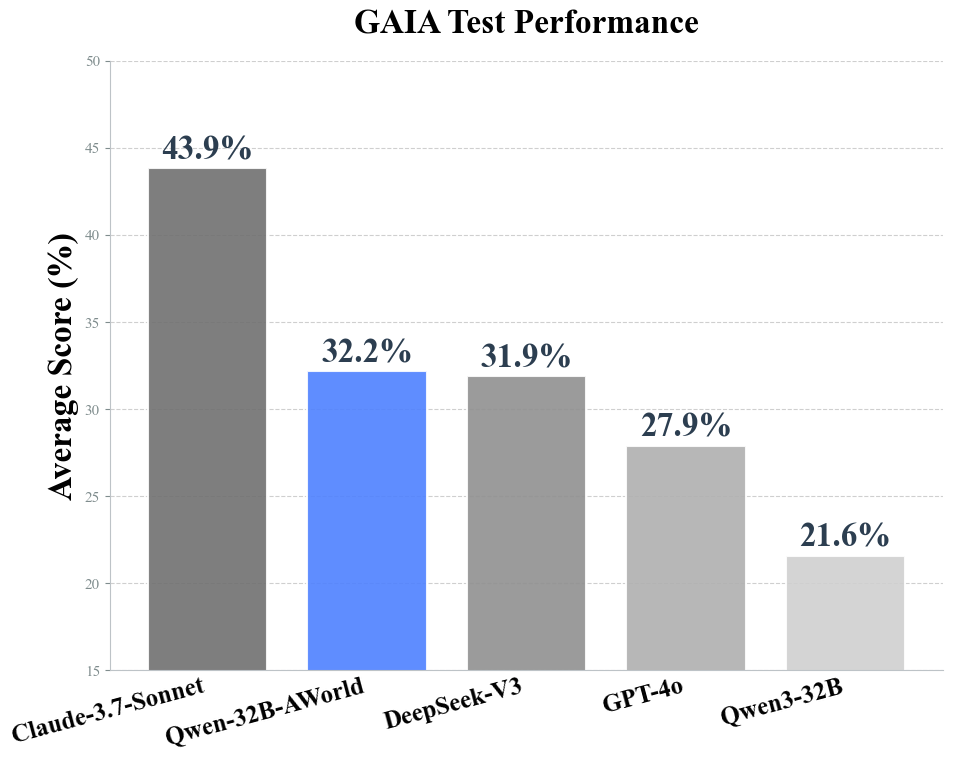

Using AWorld, the authors fine-tune Qwen3-32B via SFT (on 886 successful trajectories) followed by RL (using GRPO and rule-based rewards). The resulting agent, Qwen3-32B-AWorld, achieves a pass@1 of 32.23% on GAIA, a 10.6-percentage-point improvement over the base model. On the most challenging Level 3 questions, it attains 16.33%, outperforming all compared models, including GPT-4o and DeepSeek-V3. Notably, the agent also generalizes to xbench-DeepSearch, improving from 12% to 32% without being trained on that benchmark, which suggests robust skill acquisition rather than overfitting.
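The core of GRPO is that each rollout's reward is scored relative to the other rollouts sampled for the same task, which removes the need for a learned value function. The sketch below shows that group-relative normalization together with a simple rule-based reward. The exact-match rule, the use of the population standard deviation, and the function names are assumptions made for illustration; the summary does not specify the reward rules or normalization details the authors used.

```python
from statistics import mean, pstdev


def rule_based_reward(predicted, reference):
    """Return 1.0 if the normalized answers match exactly, else 0.0 (assumed rule)."""
    def normalize(text):
        # Lowercase and collapse whitespace so trivial formatting does not matter.
        return " ".join(text.strip().lower().split())
    return 1.0 if normalize(predicted) == normalize(reference) else 0.0


def group_relative_advantages(rewards, eps=1e-6):
    """GRPO-style advantages: standardize rewards within one task's rollout group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population standard deviation (an assumption)
    # eps keeps the division defined when every rollout got the same reward.
    return [(r - mu) / (sigma + eps) for r in rewards]


def advantages_for_group(answers, reference):
    """Score a group of final answers for one task and convert to advantages."""
    rewards = [rule_based_reward(a, reference) for a in answers]
    return rewards, group_relative_advantages(rewards)
```

For a group in which half the rollouts answer correctly, the correct ones receive advantages near +1 and the incorrect ones near -1. When every rollout in a group gets the same reward, all advantages are zero and the group contributes no gradient signal, which is one reason a sufficient supply of successful trajectories matters for this style of training.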

Practical and Theoretical Implications

AWorld provides a scalable infrastructure for agentic AI, enabling efficient RL in environments with high resource demands and long-horizon tasks. The framework's modularity and integration capabilities position it as a practical solution for both single-agent and multi-agent systems. The empirical results challenge the notion that model size or architecture alone determines agentic performance; instead, rollout efficiency and experience diversity are critical.

Theoretically, the work underscores the importance of the "learning from practice" paradigm and the need for frameworks that optimize the entire agent-environment-training loop. The demonstrated generalization across benchmarks suggests that distributed RL with sufficient experience can yield agents with transferable reasoning capabilities.

Future Directions

The authors outline a roadmap for extending AWorld to support collective intelligence, expert societies of specialized agents, and autonomous self-improvement. Key research directions include multi-agent collaboration in heterogeneous environments, domain-specific agent specialization, and continuous, self-sustaining learning loops.

Conclusion

AWorld addresses a central bottleneck in agentic AI training by enabling efficient, distributed experience generation and seamless integration with RL frameworks. The framework's design and empirical validation point to rollout efficiency as a key determinant of agentic performance on complex tasks. AWorld provides a practical blueprint for scalable agentic AI development and opens avenues for future research in collective and self-improving intelligence.