- The paper shows that diffusion LLMs (DLMs) often converge on the correct answer early in decoding, so many of the usual refinement steps are unnecessary.

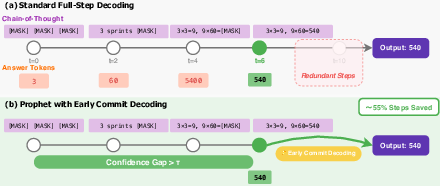

- The Prophet algorithm uses a dynamic threshold on the confidence gap to decide when to commit early during decoding.

- Experiments on benchmarks such as GSM8K and MMLU show up to a 3.4× reduction in decoding steps without compromising output quality.

Early Answer Convergence and Fast Decoding in Diffusion LLMs

Introduction

Diffusion LLMs (DLMs) have emerged as a competitive alternative to autoregressive (AR) models for sequence generation, offering parallel decoding and flexible token orders. However, their practical deployment is hindered by slow inference, driven mainly by the computational cost of bidirectional attention and the many refinement steps needed to reach high-quality outputs. This paper identifies and exploits a key property of DLMs: early answer convergence, where the correct answer is internally determined well before the final decoding step. The authors introduce Prophet, a training-free fast-decoding paradigm that uses this property to accelerate DLM inference: it dynamically monitors model confidence and, once confidence is high enough, commits early by decoding all remaining tokens at once.

Early Answer Convergence in DLMs

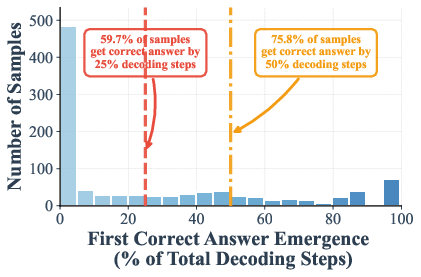

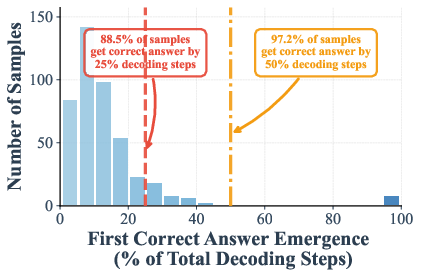

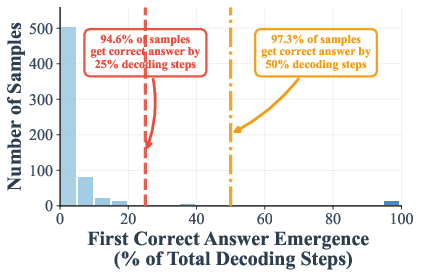

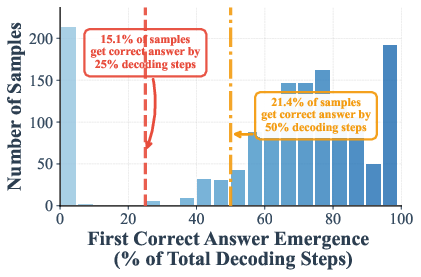

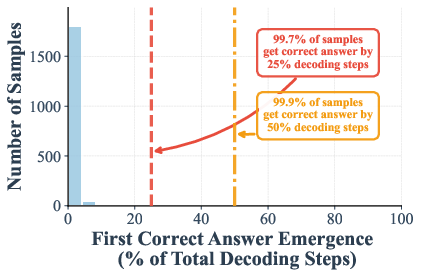

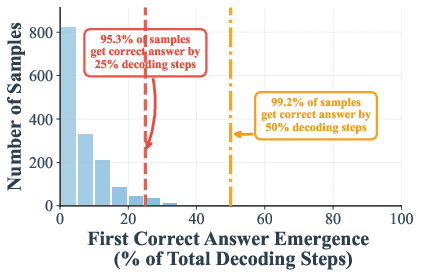

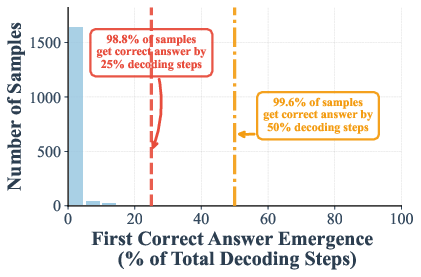

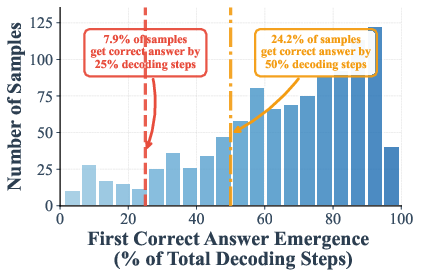

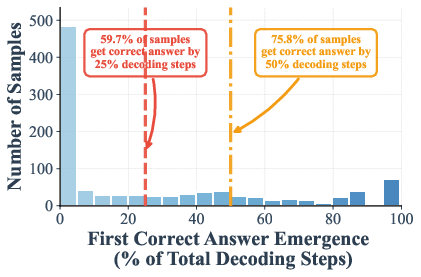

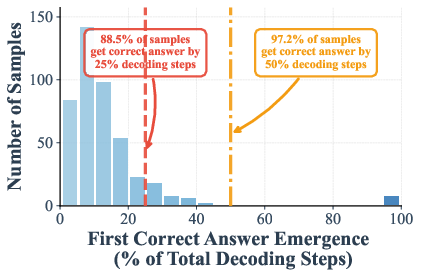

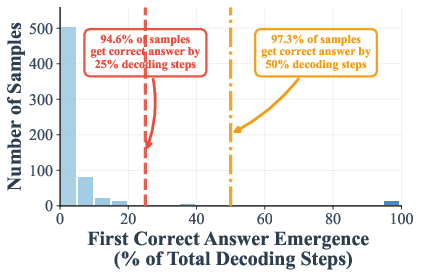

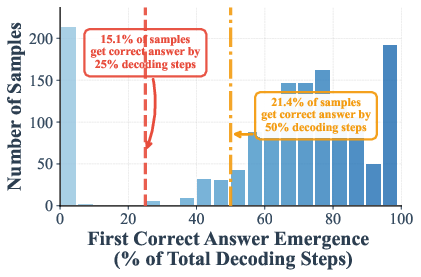

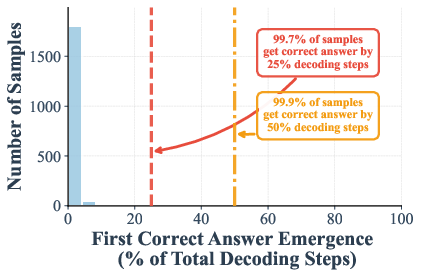

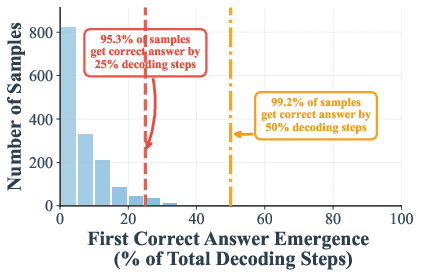

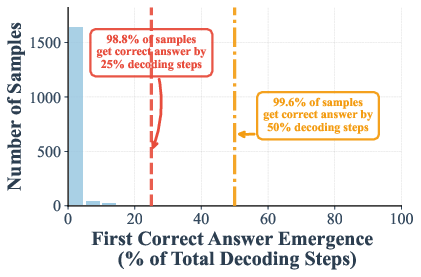

The central empirical finding is that DLMs often internally stabilize on the correct answer at an early stage of the iterative denoising process. Analysis on benchmarks such as GSM8K and MMLU with LLaDA-8B reveals that up to 97% and 99% of instances, respectively, can be decoded correctly using only half of the refinement steps. This phenomenon is observed under both semi-autoregressive and random remasking schedules, and is further amplified by the use of suffix prompts (e.g., appending "Answer:") which encourage earlier convergence.
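The "decoded correctly using only half of the refinement steps" statistic can be made concrete with a small helper. This is a minimal sketch under stated assumptions, not the authors' evaluation code: it assumes that, for every sample, you have already logged the answer string extracted at each refinement step (for example, by filling all still-masked positions with their argmax predictions and parsing the answer), and it counts a sample as "early" if its first correct step falls within the given fraction of its full budget. The function names `first_correct_step` and `fraction_correct_within` are hypothetical.

```python
from typing import Optional, Sequence


def first_correct_step(step_answers: Sequence[str], gold: str) -> Optional[int]:
    """1-based index of the first refinement step whose decoded answer equals `gold`,
    or None if no step produces the gold answer."""
    for t, answer in enumerate(step_answers, start=1):
        if answer == gold:
            return t
    return None


def fraction_correct_within(
    runs: Sequence[Sequence[str]], golds: Sequence[str], budget_frac: float = 0.5
) -> float:
    """Fraction of samples whose answer is first decoded correctly within
    `budget_frac` of that sample's full refinement budget (assumed criterion)."""
    hits = 0
    for step_answers, gold in zip(runs, golds):
        t = first_correct_step(step_answers, gold)
        if t is not None and t <= budget_frac * len(step_answers):
            hits += 1
    return hits / len(runs)


# Toy logs: per-step extracted answers for three samples with a 4-step budget.
runs = [["7", "12", "12", "12"], ["3", "3", "9", "9"], ["1", "1", "1", "5"]]
golds = ["12", "9", "5"]
print(fraction_correct_within(runs, golds))  # only the first sample is correct by step 2
```

Whether the denominator should be all samples or only those that are eventually correct is a design choice; this sketch divides by all samples passed in.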

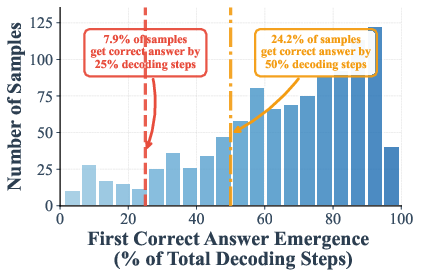

Figure 1: Distribution of early correct answer detection during decoding with low-confidence remasking, showing substantial early convergence in GSM8K.

The decoding dynamics indicate that answer tokens stabilize much earlier than chain-of-thought tokens, which tend to fluctuate until later stages. This suggests that, for a large fraction of samples, much of the iterative refinement is redundant: the correct answer is already internally determined well before the final output is produced.
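One way to quantify "answer tokens stabilize earlier than chain-of-thought tokens" is to compute, per position, the step after which the argmax prediction never changes again, then average over answer positions and over reasoning positions separately. The sketch below assumes a hypothetical logged array `history` of shape (steps, positions) holding the argmax token id at each step; the function names are illustrative, not from the paper.

```python
import numpy as np


def stabilization_steps(history: np.ndarray) -> np.ndarray:
    """history[t, j]: argmax token id at position j after refinement step t (shape T x L).

    Returns, for each position, the first step index from which the prediction
    equals its final value and never changes again (0 = stable from the start)."""
    num_steps = history.shape[0]
    differs = history != history[-1]  # (T, L): True where step t disagrees with the final value
    ever_differs = differs.any(axis=0)
    # argmax on the time-reversed mask finds the last step that still disagreed.
    last_diff = num_steps - 1 - np.argmax(differs[::-1], axis=0)
    return np.where(ever_differs, last_diff + 1, 0)


def mean_stabilization(history: np.ndarray, region_mask: np.ndarray) -> float:
    """Average stabilization step over a region (e.g. answer or chain-of-thought positions)."""
    return float(stabilization_steps(history)[region_mask].mean())


# Toy trace: 4 steps, 3 positions.
history = np.array([[1, 5, 7],
                    [2, 5, 7],
                    [3, 5, 8],
                    [3, 5, 9]])
print(stabilization_steps(history))  # positions settle at steps 2, 0 and 3
```

Comparing `mean_stabilization` over an answer mask against a chain-of-thought mask gives a single number per region for each sample.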

Prophet: Training-Free Early Commit Decoding

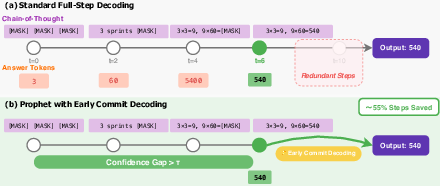

Building on the early convergence observation, Prophet is introduced as a fast decoding algorithm that dynamically decides when to terminate the iterative refinement process. The core mechanism is the Confidence Gap, defined as the difference between the top-1 and top-2 logits for each token position. A large gap indicates high predictive certainty and convergence.
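Written per position $i$, the gap is $g_i = z_i^{(1)} - z_i^{(2)}$, where $z_i^{(1)}$ and $z_i^{(2)}$ are the largest and second-largest logits. A minimal NumPy sketch (the function name is illustrative) computes it with a partial sort rather than a full sort over the vocabulary:

```python
import numpy as np


def confidence_gap(logits: np.ndarray) -> np.ndarray:
    """Top-1 minus top-2 logit at each position.

    logits: array of shape (..., vocab_size) with vocab_size >= 2.
    """
    # np.partition places the 2nd-largest value at index -2 and the largest at -1
    # without fully sorting the vocabulary axis.
    part = np.partition(logits, -2, axis=-1)
    return part[..., -1] - part[..., -2]


logits = np.array([[1.0, 4.0, 2.5],
                   [0.0, 0.0, 3.0],
                   [2.0, 2.0, 0.0]])
print(confidence_gap(logits))  # gaps of 1.5, 3.0 and 0.0 (a tie gives zero)
```

A gap near zero means two candidate tokens are nearly tied at that position; a large gap means one token clearly dominates.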

Prophet frames the decoding process as an optimal stopping problem, balancing the computational cost of further refinement against the risk of premature commitment. The algorithm employs a staged threshold function for the confidence gap, with higher thresholds in early, noisy stages and lower thresholds as decoding progresses. When the average confidence gap across answer positions exceeds the threshold, Prophet commits to decoding all remaining tokens in a single step.
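The staged threshold and the commit test can be sketched as follows. The stage boundaries (thirds of the step budget) and threshold values below are hypothetical placeholders: the text above states only that the threshold is higher in early stages and lower later, and that the test compares the average gap over answer positions with it.

```python
import numpy as np

# Hypothetical schedule: (upper bound on decoding progress, gap threshold).
# Only the "high early, low late" shape comes from the description above.
STAGES = ((1 / 3, 7.5), (2 / 3, 5.0), (1.0, 2.5))


def staged_threshold(progress: float, stages=STAGES) -> float:
    """Gap threshold for decoding progress in [0, 1] (current step / total steps)."""
    for upper, tau in stages:
        if progress < upper:
            return tau
    return stages[-1][1]  # progress == 1.0 falls into the last stage


def should_commit(gaps: np.ndarray, answer_mask: np.ndarray, step: int, total_steps: int) -> bool:
    """Early-commit test: mean gap over answer positions exceeds the staged threshold."""
    mean_gap = float(gaps[answer_mask].mean())
    return mean_gap > staged_threshold(step / total_steps)


gaps = np.array([6.0, 6.0, 0.1])
answer_mask = np.array([True, True, False])
print(should_commit(gaps, answer_mask, step=1, total_steps=10))  # early stage, threshold 7.5
print(should_commit(gaps, answer_mask, step=5, total_steps=10))  # middle stage, threshold 5.0
```

Keeping the schedule as data makes it easy to tune the boundaries and thresholds per model or task.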

Figure 2: Prophet's early-commit-decoding mechanism, illustrating dynamic monitoring of the confidence gap and substantial reduction in decoding steps without loss of output quality.

This approach is model-agnostic, incurs negligible computational overhead, and requires no retraining. Prophet can be implemented as a wrapper around existing DLM inference code, making it highly practical for real-world deployment.
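To illustrate the "wrapper" idea, here is a toy decoding loop with the early-commit check bolted on. It is a sketch under loud assumptions, not the authors' implementation: `model_logits` is a hypothetical callable standing in for a DLM forward pass (tokens of shape (L,) to logits of shape (L, V)), `MASK_ID` is a made-up mask id, and the ordinary step simply unmasks the masked positions with the largest gap, which simplifies whatever confidence score a real baseline sampler uses.

```python
from typing import Callable, Tuple

import numpy as np

MASK_ID = -1  # hypothetical mask-token id for this toy setup


def prophet_decode(
    model_logits: Callable[[np.ndarray], np.ndarray],  # hypothetical: tokens (L,) -> logits (L, V)
    tokens: np.ndarray,
    answer_mask: np.ndarray,  # bool (L,), must select at least one position
    total_steps: int,
    threshold: Callable[[float], float],  # gap threshold as a function of progress in (0, 1]
    tokens_per_step: int = 1,
) -> Tuple[np.ndarray, int]:
    """Wrap a plain iterative unmasking loop with an early-commit check.

    Returns the decoded tokens and the number of model calls used."""
    tokens = tokens.copy()
    for step in range(1, total_steps + 1):
        masked = tokens == MASK_ID
        if not masked.any():
            return tokens, step - 1
        logits = model_logits(tokens)
        pred = logits.argmax(axis=-1)
        part = np.partition(logits, -2, axis=-1)
        gaps = part[:, -1] - part[:, -2]  # confidence gap per position
        if step == total_steps or gaps[answer_mask].mean() > threshold(step / total_steps):
            # Early commit (or final step): fill every remaining mask in one shot.
            tokens[masked] = pred[masked]
            return tokens, step
        # Ordinary refinement step: unmask the masked positions with the largest gap.
        candidates = np.flatnonzero(masked)
        chosen = candidates[np.argsort(-gaps[candidates])[:tokens_per_step]]
        tokens[chosen] = pred[chosen]
    return tokens, total_steps


FIXED = np.array([[0.0, 1.0, 0.0],
                  [2.0, 0.0, 0.0],
                  [0.0, 0.0, 12.0],
                  [11.0, 0.0, 0.0]])


def toy_model(toks: np.ndarray) -> np.ndarray:
    """Ignores its input; stands in for a real DLM forward pass."""
    return FIXED


start = np.full(4, MASK_ID)
answer = np.array([False, False, True, True])
out, calls = prophet_decode(toy_model, start, answer, total_steps=4, threshold=lambda p: 5.0)
print(out, calls)  # answer gaps are 12 and 11, so it commits after the first call
```

With an unreachable threshold (for example `lambda p: 100.0`) the same loop falls back to the full four-step budget and produces the same tokens, which is the behavior the early-commit check is meant to shortcut.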

Experimental Results

Comprehensive experiments on LLaDA-8B and Dream-7B across multiple benchmarks demonstrate that Prophet achieves up to 3.4× reduction in decoding steps while maintaining generation quality. On general reasoning tasks (MMLU, ARC-Challenge, HellaSwag, TruthfulQA, WinoGrande, PIQA), Prophet matches or exceeds the performance of full-budget decoding. Notably, on HellaSwag, Prophet improves upon the full baseline, indicating that early commit decoding can prevent the model from corrupting an already correct prediction in later steps.

On mathematics and science benchmarks (GSM8K, GPQA), Prophet reliably preserves accuracy, outperforming naive static truncation baselines. For instance, on GPQA, the half-step baseline suffers a significant performance drop, while Prophet recovers the full model's accuracy. Planning tasks (Countdown, Sudoku) also benefit from Prophet's adaptive strategy.

Figure 3: Early correct answer detection distribution on MMLU with low-confidence remasking, further validating early convergence in DLMs.

These results substantiate the claim that DLMs internally resolve uncertainty and determine the correct answer well before the final decoding step, and that Prophet can safely exploit this property for efficient inference.

Theoretical and Practical Implications

The identification of early answer convergence in DLMs challenges the necessity of conventional full-length decoding and recasts DLM inference as an optimal stopping problem. Prophet's adaptive early commit strategy is complementary to existing acceleration techniques such as KV-caching and parallel decoding, and can be integrated with them for further speedup.
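One illustrative way to write the optimal-stopping view down (our formalization, not taken from the paper; the per-step cost $c$, the error penalty $\lambda$, and the notation are assumptions) is to pick a stopping time $\tau$ that trades refinement cost against the chance that committing at $\tau$ yields a wrong answer $\hat{y}_\tau$. Prophet's rule can then be read as a heuristic stopping time driven by the mean confidence gap $\bar{g}_t$ over answer positions and the staged threshold $\theta(\cdot)$, falling back to the full budget $T$:

$$
\tau^{\star} = \arg\min_{\tau}\; \mathbb{E}\!\left[\, c\,\tau + \lambda\,\mathbf{1}\{\hat{y}_{\tau} \neq y\} \,\right],
\qquad
\tau_{\text{Prophet}} = \min\Big(\{\, t \le T : \bar{g}_t > \theta(t/T) \,\} \cup \{T\}\Big)
$$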

From a theoretical perspective, the findings suggest that the iterative denoising process in DLMs runs more refinement steps than most samples require, and that model confidence metrics can serve as reliable proxies for convergence. This opens avenues for further research into dynamic decoding schedules, risk-aware stopping criteria, and the internal mechanisms by which DLMs resolve uncertainty.

Practically, Prophet improves the deployability of DLMs in latency-sensitive applications, making them more competitive with AR models. The approach is model-agnostic and, in the reported experiments, preserves output quality, which makes it a practical candidate for production systems.

Future Directions

Future work may explore more sophisticated confidence metrics, integration with reinforcement learning-based sampling optimization, and extension to multimodal and multilingual DLMs. Investigating the interplay between early convergence and model architecture, training objectives, and prompt engineering could yield further improvements in efficiency and reliability. Additionally, the optimal stopping framework may be generalized to other iterative generative models beyond DLMs.

Conclusion

This paper establishes early answer convergence as a fundamental property of diffusion LLMs and introduces Prophet, a training-free fast decoding paradigm that leverages this property for efficient inference. Prophet achieves substantial speedup with negligible or even positive impact on generation quality, recasting DLM decoding as an optimal stopping problem. These findings have significant implications for both the theory and practice of sequence generation, and suggest that early convergence is a core characteristic of how DLMs internally resolve uncertainty.