- The paper surveys diffusion language models, an alternative to autoregressive methods that uses iterative denoising to generate tokens in parallel.

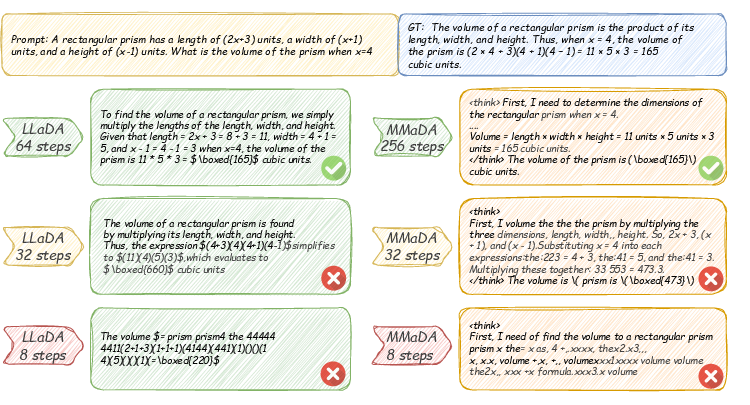

- The paper details training and inference strategies, including complementary masking, caching, and step distillation, achieving competitive benchmarks in language, code, and multimodal tasks.

- The paper outlines key open challenges such as scalability, infrastructure development, and long-sequence handling that must be addressed for broader adoption.

A Comprehensive Survey of Diffusion Language Models

Introduction and Motivation

Diffusion language models (DLMs) have emerged as a compelling alternative to the autoregressive (AR) paradigm for language generation. They use iterative denoising to enable parallel token generation and bidirectional context modeling. This survey systematically reviews the evolution, taxonomy, training and inference strategies, multimodal extensions, empirical performance, and open challenges of DLMs, providing a technical synthesis for researchers and practitioners.

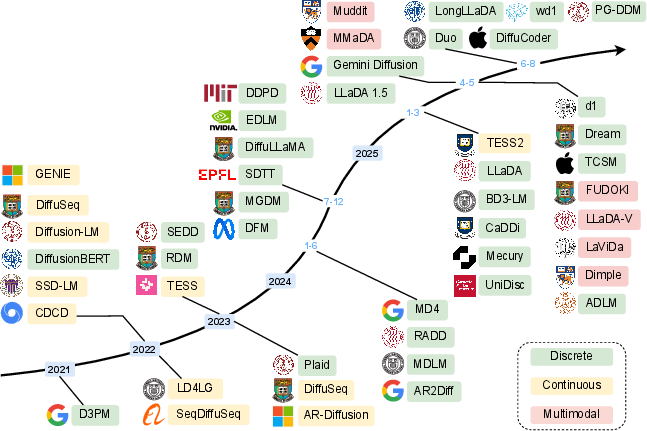

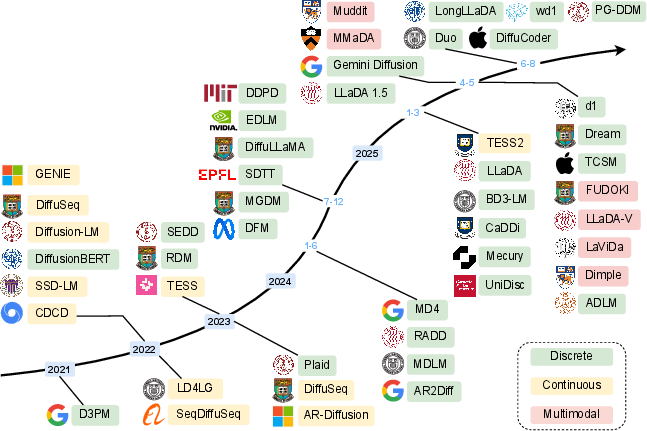

Figure 1: Timeline of diffusion language models, highlighting the shift from continuous to discrete and multimodal DLMs.

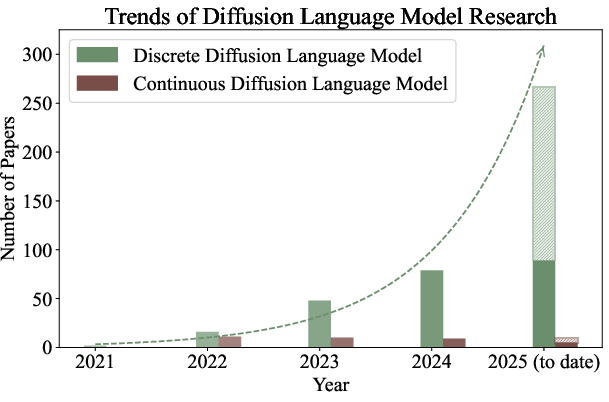

Evolution and Taxonomy of DLMs

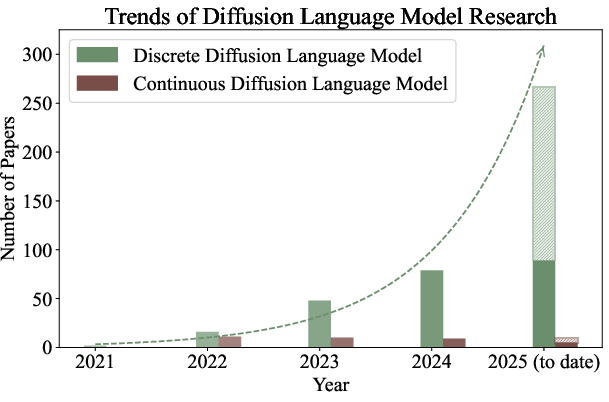

The development of DLMs falls into three main groups: continuous DLMs, discrete DLMs, and multimodal DLMs. Early research focused on continuous-space models, where diffusion operates in the embedding or logit space. Discrete DLMs, which define the diffusion process directly over token vocabularies, have gained traction due to their scalability and compatibility with large-scale language modeling. Recent advances have extended DLMs to multimodal domains, enabling unified modeling of text, images, and other modalities.

Figure 2: Research trend showing the increasing number of DLM papers, especially in discrete and multimodal settings.

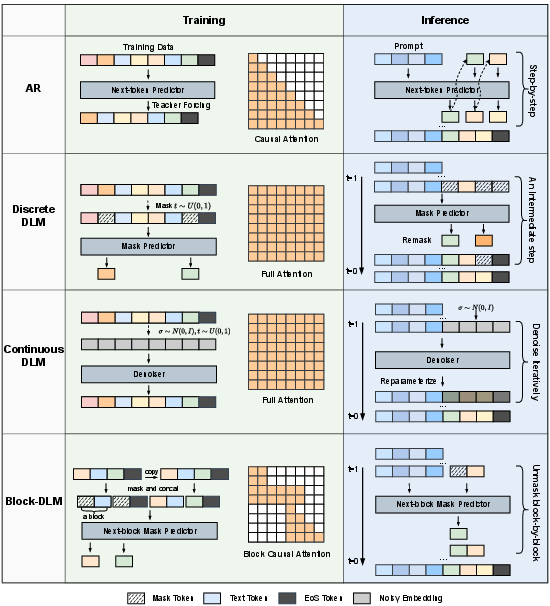

Modeling Paradigms and Architectural Distinctions

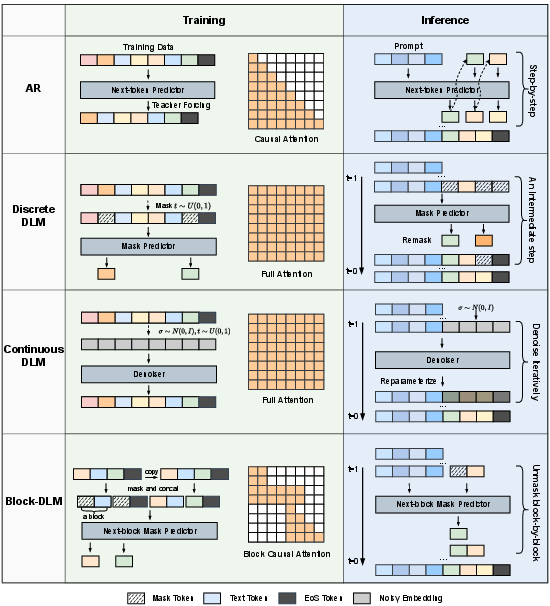

DLMs are positioned within the broader landscape of language modeling paradigms, which include masked language models (MLMs), AR models, permutation language models, and sequence-to-sequence architectures. DLMs distinguish themselves by their iterative, non-sequential generation process, which allows for parallelism and bidirectional context utilization. Continuous DLMs operate in embedding or logit spaces, while discrete DLMs employ token-level corruption and denoising, often using masking strategies.
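To make the token-level corruption concrete, here is a minimal sketch of a masking-based forward process. It assumes the common setup in which each token is independently replaced by a mask symbol with probability `t`. The `MASK` symbol, the `corrupt` helper, and the example sentence are hypothetical names chosen for illustration, not taken from the survey.

```python
import random

MASK = "[MASK]"  # hypothetical mask symbol, chosen for illustration


def corrupt(tokens, t, rng):
    """Independently replace each token with MASK with probability t (0 <= t <= 1)."""
    return [MASK if rng.random() < t else tok for tok in tokens]


rng = random.Random(0)
clean = ["diffusion", "models", "denoise", "tokens", "in", "parallel"]

# Low t keeps most of the sequence; t = 1.0 masks everything.
for t in (0.0, 0.5, 1.0):
    print(t, corrupt(clean, t, rng))
```

A denoiser is then trained to recover the original tokens at the masked positions, and generation runs the process in reverse, starting from a fully masked sequence.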

Figure 4: Overview of training and inference procedures across AR, continuous DLM, discrete DLM, and block-wise hybrid models.

Training and Post-Training Strategies

Pre-training

DLMs are typically pre-trained using objectives analogous to those in AR or image diffusion models. Discrete DLMs often initialize from AR model weights (e.g., LLaMA, Qwen2.5), facilitating efficient adaptation and reducing training cost. Continuous DLMs may leverage pretrained image diffusion backbones for multimodal tasks.
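For reference, one widely used objective for masked discrete DLMs can be written as below. This is an assumption about a typical formulation (the form used by LLaDA-style models), not necessarily the exact notation of the survey. Here $x_0$ is the clean sequence of length $L$, $x_t$ is its corrupted version in which each token is masked independently with probability $t$, and $p_\theta$ is the denoiser.

```latex
\mathcal{L}(\theta) = -\,\mathbb{E}_{t \sim \mathcal{U}(0,1],\; x_0,\; x_t}
\left[ \frac{1}{t} \sum_{i=1}^{L}
\mathbf{1}\!\left[ x_t^{i} = \texttt{[MASK]} \right]
\log p_\theta\!\left( x_0^{i} \mid x_t \right) \right]
```

Only masked positions contribute to the sum, and the $1/t$ weight compensates for the expected fraction of masked tokens at noise level $t$.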

Supervised Fine-Tuning and RL Alignment

Supervised fine-tuning (SFT) in DLMs mirrors AR approaches but must address inefficient loss computation: because the loss is computed only on masked tokens, each training pass supervises only part of a sequence. Techniques such as complementary masking and improved scheduling have been proposed to enhance gradient flow and data utilization.
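The idea behind complementary masking can be sketched as follows: build two corrupted copies of the same sequence whose masked positions are complements of each other, so that every token is a prediction target in exactly one copy. This is a simplified illustration under that assumption. The `complementary_views` helper and the `MASK` symbol are hypothetical, and published implementations may mask spans rather than individual tokens.

```python
import random

MASK = "[MASK]"  # hypothetical mask symbol, chosen for illustration


def complementary_views(tokens, t, rng):
    """Return two corrupted copies whose masked positions are complements of each other."""
    masked = [rng.random() < t for _ in tokens]
    # View A masks the sampled positions; view B masks all the others.
    view_a = [MASK if m else tok for tok, m in zip(tokens, masked)]
    view_b = [tok if m else MASK for tok, m in zip(tokens, masked)]
    return view_a, view_b


tokens = ["the", "answer", "is", "forty", "two"]
view_a, view_b = complementary_views(tokens, 0.5, random.Random(0))
print(view_a)
print(view_b)
```

Training on both views means no token in the example is left without a loss signal, at the cost of two forward passes per sequence.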

Post-training for reasoning capabilities is a critical area, with methods such as Diffusion-of-Thought (DoT), DCoLT, and various policy gradient adaptations (e.g., diffu-GRPO, UniGRPO, coupled-GRPO) enabling DLMs to perform complex reasoning and alignment tasks. Preference optimization methods (e.g., VRPO) have also been adapted to the diffusion setting, addressing the high variance of ELBO-based log-likelihood approximations.
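As a rough illustration of the component shared by GRPO-style methods, the sketch below computes group-relative advantages: several completions are sampled for one prompt, and each reward is normalized against the group's mean and standard deviation. It does not cover the diffusion-specific part, namely how each variant estimates sequence log-probabilities. The function name, the `eps` constant, and the use of the population standard deviation are assumptions made for this example.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-6):
    """Normalize each reward against the mean and std of its sampling group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    # eps avoids division by zero when all rewards in the group are equal.
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four sampled completions for one prompt, scored 1.0 if correct and 0.0 otherwise.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
print(advantages)
```

Completions that score above the group average receive positive advantages and are reinforced, while those below average are penalized, without requiring a separate value network.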

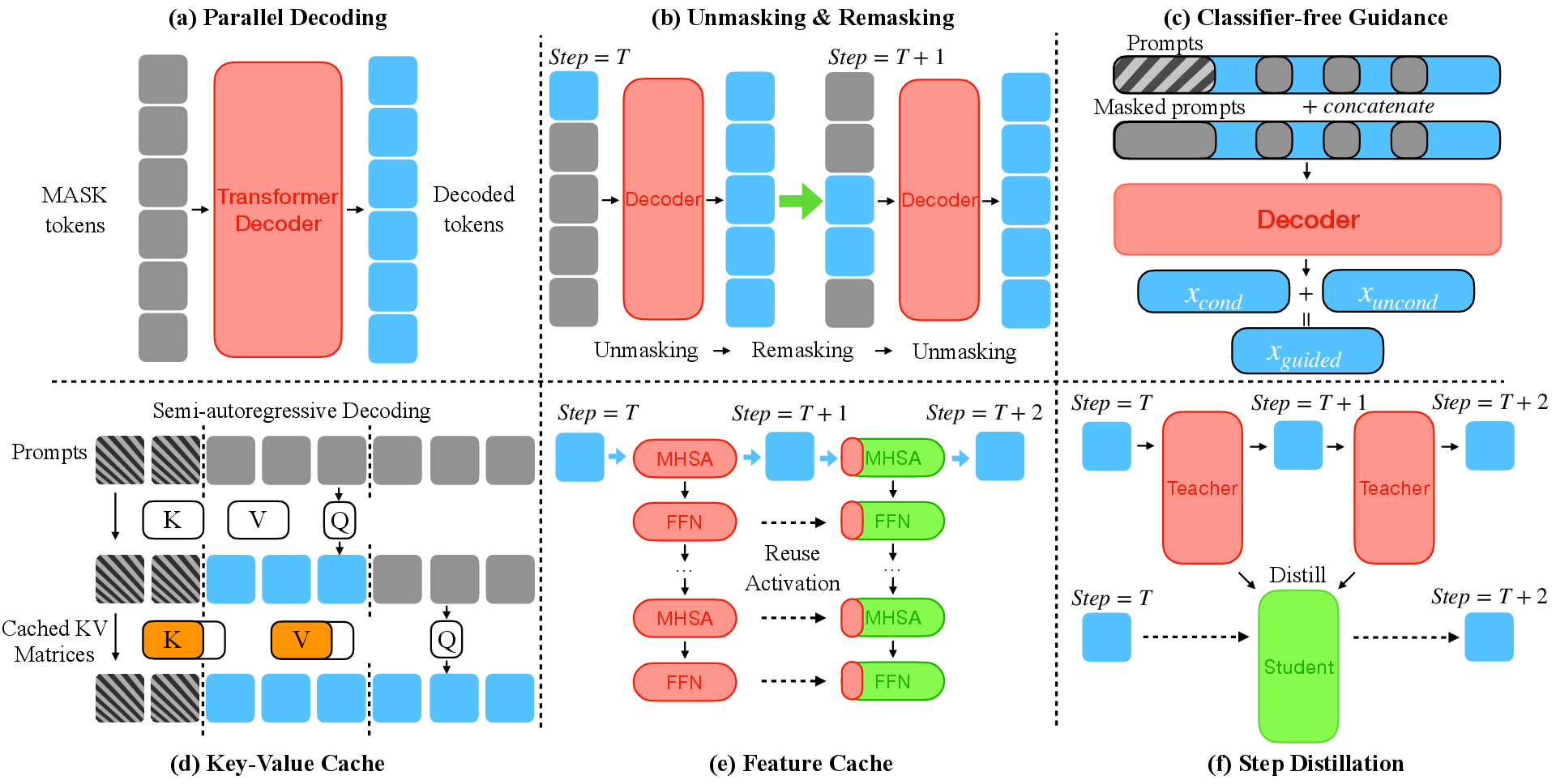

Inference Techniques and Efficiency Optimizations

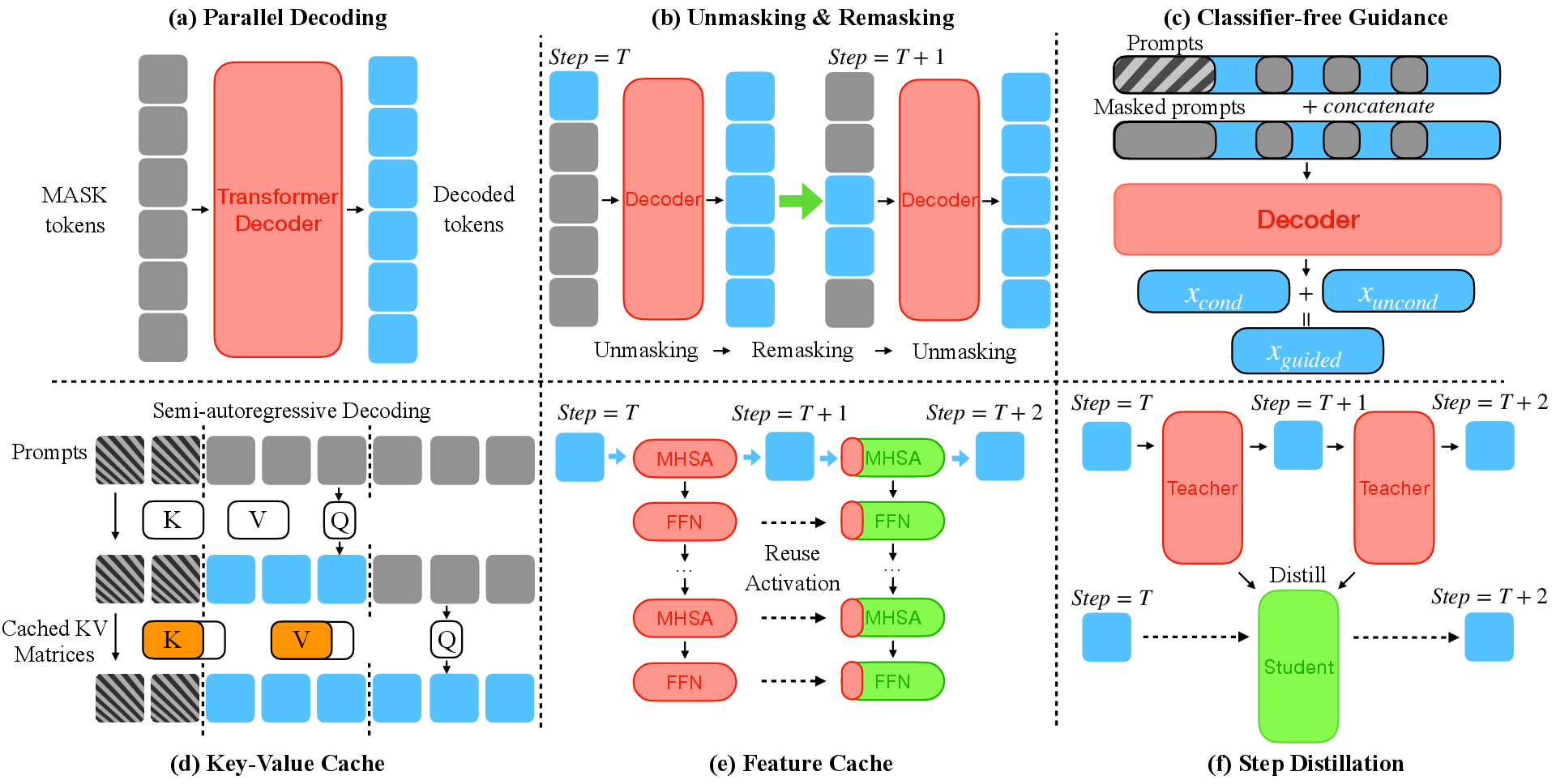

Inference in DLMs is characterized by a rich set of strategies aimed at balancing quality, controllability, and efficiency:

- Parallel Decoding: Confidence-aware and adaptive parallel decoding methods enable substantial speed-ups (up to 34×) with minimal quality loss.

- Unmasking/Remasking: Adaptive policies for token selection and remasking improve both convergence and output coherence.

- Guidance: Classifier-free guidance and structural constraints steer generation toward desired attributes, with extensions for semantic and syntactic control.

- Caching and Step Distillation: Innovations in KV and feature caching, as well as step distillation, have closed much of the inference latency gap with AR models, achieving up to 500× acceleration in some cases.

Figure 3: Inference techniques for DLMs, including parallel decoding, unmasking/remasking, guidance, caching, and step distillation.
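The confidence-aware parallel decoding described above can be sketched with a toy loop. At each step a denoiser proposes a token and a confidence for every masked position; all proposals above a threshold are committed at once, and the single most confident proposal is always committed so that decoding terminates. The `toy_denoiser` below is a random stand-in for a real model forward pass, and the threshold rule is one simple policy assumed for illustration rather than the method of any specific paper.

```python
import random

MASK = None  # hypothetical placeholder for a masked position


def toy_denoiser(seq, rng):
    """Stand-in for a DLM forward pass: propose (token, confidence) for each masked slot."""
    return {i: (f"tok{i}", rng.random()) for i, tok in enumerate(seq) if tok is MASK}


def parallel_decode(length, threshold, rng, max_steps=100):
    """Unmask a fully masked sequence, committing all confident proposals per step."""
    seq = [MASK] * length
    steps = 0
    while any(tok is MASK for tok in seq) and steps < max_steps:
        proposals = toy_denoiser(seq, rng)
        # Commit every proposal whose confidence clears the threshold.
        accepted = {i: tok for i, (tok, conf) in proposals.items() if conf >= threshold}
        # Always commit the most confident proposal so each step makes progress.
        best = max(proposals, key=lambda i: proposals[i][1])
        accepted[best] = proposals[best][0]
        for i, tok in accepted.items():
            seq[i] = tok
        steps += 1
    return seq, steps


seq, steps = parallel_decode(length=16, threshold=0.7, rng=random.Random(0))
print(steps, seq)
```

Lowering the threshold commits more tokens per step and reduces the number of forward passes, which is where the speed-up comes from. Raising it approaches one token per step, trading speed for more conservative decisions.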

Multimodal and Unified DLMs

Recent work has extended DLMs to multimodal and unified architectures, supporting both understanding and generation across text and vision. Approaches include:

- Vision Encoders + DLMs: Models like LLaDA-V and LaViDa integrate vision encoders with DLM backbones, employing complementary masking and KV-caching for efficient training and inference.

- Unified Token Spaces: MMaDA and UniDisc tokenize all modalities into a shared space, enabling joint modeling and cross-modal reasoning.

- Hybrid Training: Dimple employs an autoregressive-then-diffusion training regime to stabilize multimodal learning and enable parallel decoding.

These models demonstrate competitive or superior performance to AR-based multimodal models, particularly in cross-modal reasoning and generation.

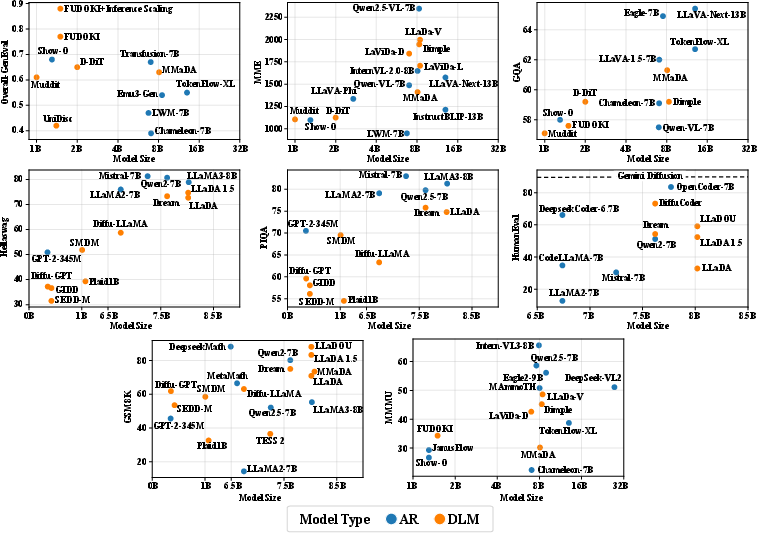

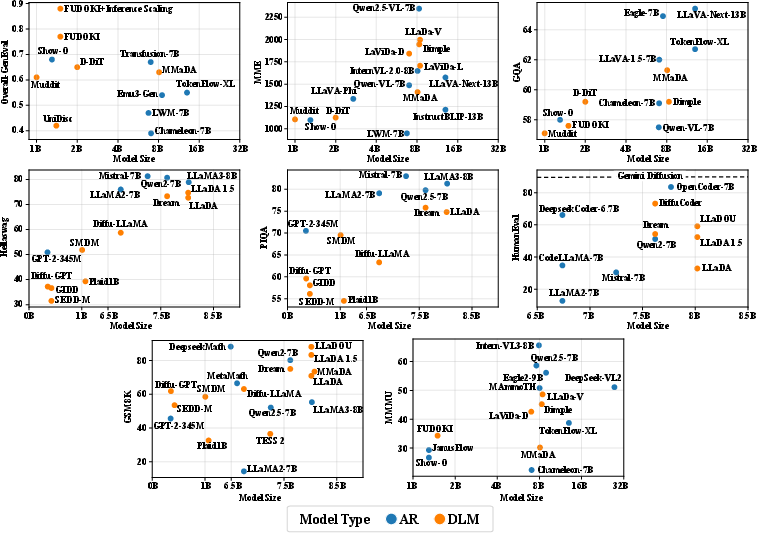

Empirical Performance

DLMs have achieved performance on par with, and in some cases exceeding, AR models of similar scale across a range of benchmarks, including language understanding (PIQA, HellaSwag), code generation (HumanEval), mathematical reasoning (GSM8K), and multimodal tasks (MME, MMMU). Notably, DLMs exhibit stronger performance on math and science-related benchmarks and demonstrate superior throughput in code generation and multimodal settings.

Figure 5: Performance comparison on eight benchmarks, showing DLMs (orange) competitive with AR models (blue) across tasks and scales.

Trade-offs, Limitations, and Open Challenges

Despite their promise, DLMs face several unresolved challenges, including scalability, infrastructure development, and long-sequence handling.

Applications and Implications

DLMs have been successfully applied to a broad spectrum of tasks, including robust text classification, NER, summarization, style transfer, code generation, and computational biology. Their global planning and iterative refinement capabilities are particularly advantageous for structured and logic-heavy domains. In code generation, DLMs have demonstrated competitive HumanEval performance and superior throughput compared to AR baselines.

Theoretically, DLMs offer a unified framework for generative modeling across modalities, with inherent advantages in controllability and bidirectional context. Practically, their parallelism and efficiency optimizations position them as viable candidates for latency-sensitive and large-scale applications, contingent on further advances in infrastructure and scalability.

Future Directions

Key areas for future research include:

- Improving training efficiency and data utilization.

- Adapting quantization, pruning, and distillation techniques to the diffusion paradigm.

- Advancing unified multimodal reasoning and DLM-based agent architectures.

- Developing robust infrastructure and deployment frameworks for DLMs.

Conclusion

This survey provides a comprehensive technical synthesis of the DLM landscape, highlighting the paradigm's modeling innovations, empirical strengths, and open challenges. DLMs have established themselves as a credible alternative to AR models, particularly in settings demanding parallelism, bidirectional context, and unified multimodal reasoning. Continued research into scalability, efficiency, and infrastructure will be critical for realizing the full potential of diffusion-based language modeling.