- The paper introduces a reinforcement learning framework that decomposes reasoning to separately supervise visual perception and language reasoning.

- It reduces visual hallucinations and language shortcuts by assigning self-visual rewards, with its largest gains on benchmarks that require genuine visual reasoning, such as VisNumBench.

- The study demonstrates enhanced visual grounding and preserved text-only performance, offering a scalable strategy for multimodal training.

Self-Rewarding Vision-Language Model via Reasoning Decomposition: An Expert Analysis

Introduction

The paper "Self-Rewarding Vision-Language Model via Reasoning Decomposition" (Vision-SR1, arXiv:2508.19652) addresses two persistent challenges in vision-language models (VLMs): visual hallucinations and language shortcuts. Both stem from the dominance of language priors and from the lack of explicit supervision for intermediate visual reasoning in most post-training pipelines. Vision-SR1 introduces a reinforcement learning (RL) framework that decomposes reasoning into visual perception and language reasoning, and uses a self-rewarding mechanism to supervise visual perception directly and adaptively, without external annotations or reward models.

Methodology

Reasoning Decomposition and Self-Reward

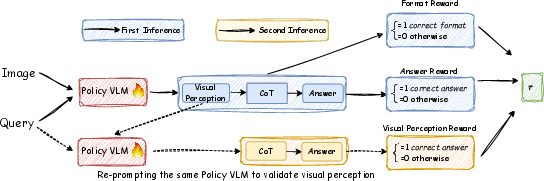

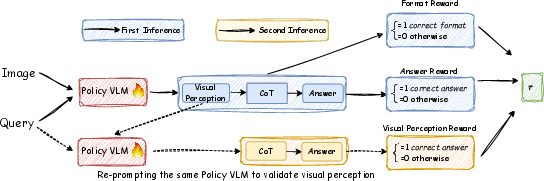

Vision-SR1 enforces a structured output format for VLMs, explicitly separating visual perception, chain-of-thought (CoT) reasoning, and the final answer. The RL training loop consists of two rollouts:

- Standard Rollout: The model receives an image-query pair and generates a structured output: visual perception, CoT reasoning, and answer. The answer is compared to the ground truth for an accuracy reward.

- Self-Reward Rollout: The model is re-prompted with only the query and its own generated visual perception. If it can recover the correct answer, a self-visual reward is assigned, indicating that the perception is self-contained and sufficient.

Figure 1: Overall framework of Vision-SR1. During RL training, the VLM performs two rollouts: one with the image-query pair and one with only the query and generated visual perception, assigning rewards based on answer correctness and self-containment.

This dual-reward structure is combined with a format reward to ensure adherence to the required output structure. The total reward is a weighted sum of these components, with hyperparameters tuned empirically.
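The two rollouts and the weighted reward can be sketched as follows. This is a minimal illustration, not the authors' implementation: the tag names (`<perception>`, `<think>`, `<answer>`), the exact-match correctness check, the prompt wording, and the weight values are all assumptions made here for the example, since the text above only states that the output is structured and that the reward is a weighted sum.

```python
import re
from dataclasses import dataclass

# Hypothetical tag names: the source only says the output separates
# visual perception, CoT reasoning, and the final answer.
STRUCTURED = re.compile(
    r"^\s*<perception>(?P<perception>.+?)</perception>\s*"
    r"<think>(?P<think>.+?)</think>\s*"
    r"<answer>(?P<answer>.+?)</answer>\s*$",
    re.DOTALL,
)


@dataclass(frozen=True)
class RewardWeights:
    # Illustrative values only; the source says weights are tuned empirically.
    accuracy: float = 1.0
    visual: float = 1.0
    fmt: float = 0.1


def parse_structured(output: str):
    """Return the three sections, or None if the format is violated."""
    match = STRUCTURED.match(output)
    if match is None:
        return None
    return {name: text.strip() for name, text in match.groupdict().items()}


def is_correct(prediction: str, gold: str) -> bool:
    """Assumed checker: case-insensitive exact match."""
    return prediction.strip().lower() == gold.strip().lower()


def build_self_reward_prompt(query: str, perception: str) -> str:
    """Text-only prompt for the second rollout: no image, only the
    query and the model's own generated visual perception."""
    return f"Visual description: {perception}\nQuestion: {query}"


def total_reward(first_output: str, second_answer: str, gold: str,
                 weights: RewardWeights = RewardWeights()) -> float:
    """Weighted sum of accuracy, self-visual, and format rewards.

    first_output: structured output of the standard (image + query) rollout.
    second_answer: answer produced by the self-reward (text-only) rollout.
    """
    parsed = parse_structured(first_output)
    if parsed is None:
        return 0.0  # malformed output earns nothing in this sketch
    r_acc = 1.0 if is_correct(parsed["answer"], gold) else 0.0
    r_vis = 1.0 if is_correct(second_answer, gold) else 0.0
    return weights.accuracy * r_acc + weights.visual * r_vis + weights.fmt * 1.0
```

In this sketch the self-visual term depends only on whether the text-only rollout recovers the ground truth, so a perception that omits the information needed to answer earns no visual reward even when the first rollout's answer is correct.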

Theoretical Motivation

The approach is motivated by the observation that answer-only rewards in RL for VLMs encourage models to exploit language priors, neglecting the visual input. By introducing a perception-level reward, Vision-SR1 increases the mutual information between the visual input and the answer, anchoring the model's reasoning in actual perception rather than spurious correlations.

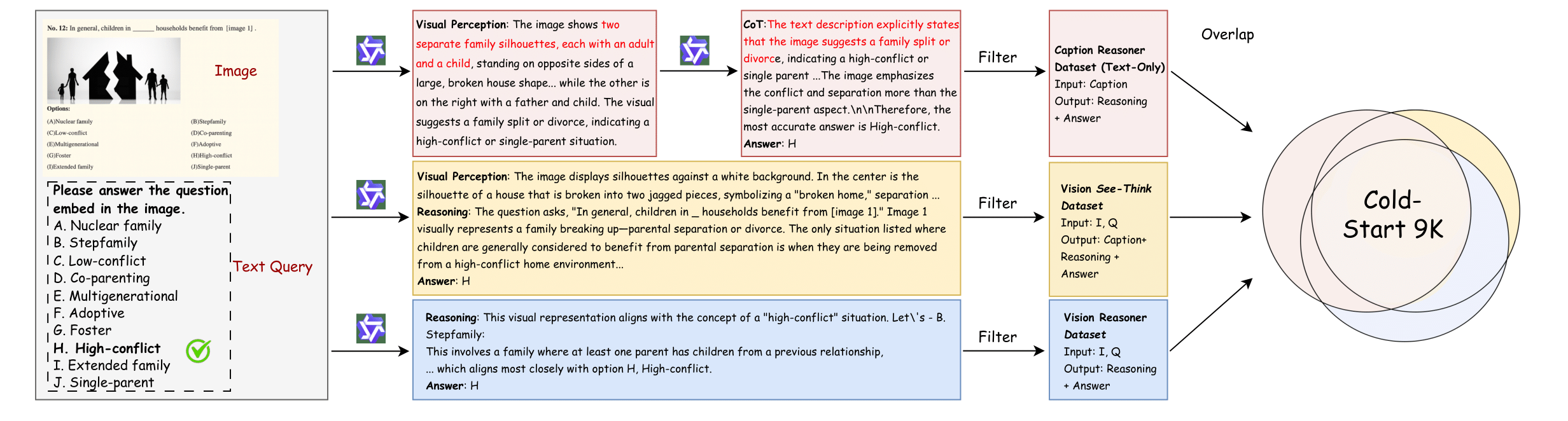

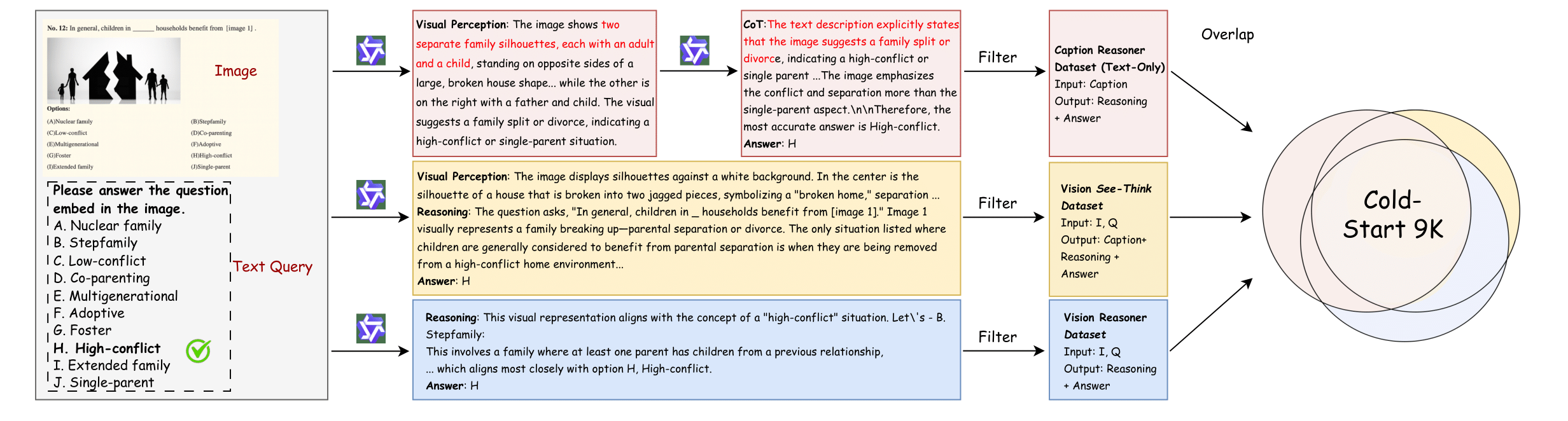

Data Curation and SFT Cold Start

The RL dataset (Vision-SR1-47K) is curated from 24 open-source VLM benchmarks, spanning mathematical reasoning, commonsense knowledge, and general visual understanding. To make RL training efficient, a cold-start supervised fine-tuning (SFT) dataset is constructed by prompting Qwen2.5-VL-7B to generate high-quality, format-compliant examples, which are then filtered to ensure both answer and perception correctness.

Figure 2: SFT cold-start dataset curation process, intersecting three source datasets and applying multi-stage filtration to ensure format and answer correctness.

Experimental Results

Baselines and Evaluation

Vision-SR1 is compared against Vision-R1, Perception-R1, and Visionary-R1, with all models retrained on the same 47K dataset for fair comparison. Evaluation covers general visual understanding (MMMU, MMMU-Pro, MM-Vet, RealWorldQA, VisNumBench), multimodal mathematical reasoning (MathVerse, MATH-Vision), and hallucination diagnosis (HallusionBench). Gemini-2.5-flash is used as an LLM-as-a-judge for open-ended responses.

Main Results

Vision-SR1 consistently outperforms all baselines across both 3B and 7B backbones. For example, with Qwen2.5-VL-7B, Vision-SR1 achieves an average score of 58.8, compared to 57.4 for Vision-R1 (+1.4 points) and 55.1 for SFT (+3.7 points). Notably, Vision-SR1 demonstrates the largest gains on benchmarks requiring genuine visual reasoning, such as VisNumBench and HallusionBench.

Ablation and Shortcut Analysis

Ablation studies confirm that removing the self-reward for visual perception degrades performance, particularly in tasks requiring visual grounding. The Language Shortcut Rate (LSR), defined as the fraction of correct answers produced with incorrect visual perception, is significantly reduced by Vision-SR1, indicating a lower reliance on language priors and improved visual grounding.
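To make the LSR definition concrete, the following sketch computes it from per-example judgments. It is an illustration under stated assumptions: the `JudgedExample` record is hypothetical, and the denominator (correct answers only) follows the literal wording of the definition above; the paper may normalize differently.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class JudgedExample:
    # Hypothetical record of two judge verdicts for one example.
    answer_correct: bool
    perception_correct: bool


def language_shortcut_rate(examples: Iterable[JudgedExample]) -> float:
    """Share of correct answers that came with an incorrect perception.

    Assumption: the denominator is the number of correct answers.
    Returns 0.0 when there are no correct answers.
    """
    correct = [ex for ex in examples if ex.answer_correct]
    if not correct:
        return 0.0
    shortcuts = sum(1 for ex in correct if not ex.perception_correct)
    return shortcuts / len(correct)
```

A lower value means that correct answers are more often backed by a correct visual perception, which is the sense in which the metric tracks reliance on language priors.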

Text-Only Reasoning

Vision-SR1 also mitigates the degradation of text-only reasoning performance that is observed in multimodal RL training. On text-only benchmarks (MMLU-Pro, SuperGPQA, GSM8K, MATH-500), Vision-SR1 preserves or improves performance relative to Vision-R1, suggesting that decoupling perception and reasoning rewards helps maintain generalization.

Implementation Considerations

- Architecture: Vision-SR1 can be implemented atop any VLM with a modular architecture supporting structured outputs. The method is demonstrated with Qwen2.5-VL-3B and Qwen2.5-VL-7B.

- Training: The RL phase uses Group Relative Policy Optimization (GRPO) for stable policy updates. The self-reward rollout requires efficient re-prompting and caching of intermediate perceptions.

- Data: High-quality, format-compliant SFT data is critical for cold-starting RL. The filtration pipeline must ensure that visual perceptions are both correct and self-contained.

- Evaluation: Automated LLM-as-a-judge pipelines (e.g., Gemini-2.5-flash) are necessary for scalable, fine-grained evaluation of both answer correctness and perception self-containment.
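For the Training point above, the core of GRPO is that each sampled response is scored relative to the other responses drawn for the same prompt. The sketch below shows only that normalization step, under assumptions: it uses the population standard deviation and a small `eps` for numerical safety, and it omits the clipped policy-gradient objective and any KL term. The function name is hypothetical.

```python
import statistics
from typing import List, Sequence


def group_relative_advantages(rewards: Sequence[float],
                              eps: float = 1e-6) -> List[float]:
    """Normalize rewards within one group of rollouts for the same prompt.

    Each advantage is (reward - group mean) / (group std + eps).
    Assumption: population standard deviation; implementations differ.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]
```

When every rollout in a group receives the same total reward, all advantages are zero and the group contributes no learning signal, which is one reason an informative reward on the perception step matters in this setup.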

Implications and Future Directions

Vision-SR1 demonstrates that self-rewarding mechanisms can provide scalable, adaptive supervision for visual perception in VLMs, reducing the need for costly human annotations or static external reward models. The explicit decomposition of reasoning aligns with the modularity hypothesis in cognitive science and may facilitate more interpretable and controllable VLMs.

However, the reliance on text-based proxies for visual perception introduces potential information loss. Future work should explore direct supervision of visual embeddings and more sophisticated self-rewarding strategies, including self-play and self-evolving VLMs. The findings also raise an important question about the nature of improvements in multimodal RL: do they reflect genuine perception gains, or merely better exploitation of language shortcuts? More rigorous benchmarks and analysis are needed to disentangle these effects.

Conclusion

Vision-SR1 advances the state of the art in vision-language alignment by introducing a self-rewarding, reasoning-decomposed RL framework that directly supervises visual perception. The method yields consistent improvements in visual reasoning, reduces hallucinations and language shortcuts, and preserves text-only reasoning capabilities. This work provides a scalable blueprint for future VLM training pipelines and highlights the importance of explicit, adaptive supervision for intermediate reasoning steps in multimodal models.