- The paper introduces a novel framework that integrates metric head pose estimation with a lightweight CNN for real-time, browser-based gaze tracking.

- It leverages on-device few-shot meta-learning to enable rapid, personalized calibration with as few as nine samples per user.

- The system achieves competitive accuracy and high efficiency, operating at 1137 FPS on desktop CPUs while preserving user privacy.

WebEyeTrack: Scalable, Real-Time Eye-Tracking in the Browser via On-Device Few-Shot Personalization

Introduction

WebEyeTrack addresses the persistent gap between high-accuracy, deep learning-based gaze estimation and the practical requirements of scalable, privacy-preserving, real-time eye-tracking on consumer devices. The framework integrates a lightweight, head-pose-aware CNN model (BlazeGaze) with a novel metric head pose estimation pipeline and on-device few-shot meta-learning, enabling robust, personalized gaze estimation directly in the browser. This approach is motivated by the limitations of both commercial hardware-based eye trackers and prior webcam-based solutions, which often lack accuracy, efficiency, or adaptability to user-specific variation and head movement.

System Architecture and Methodology

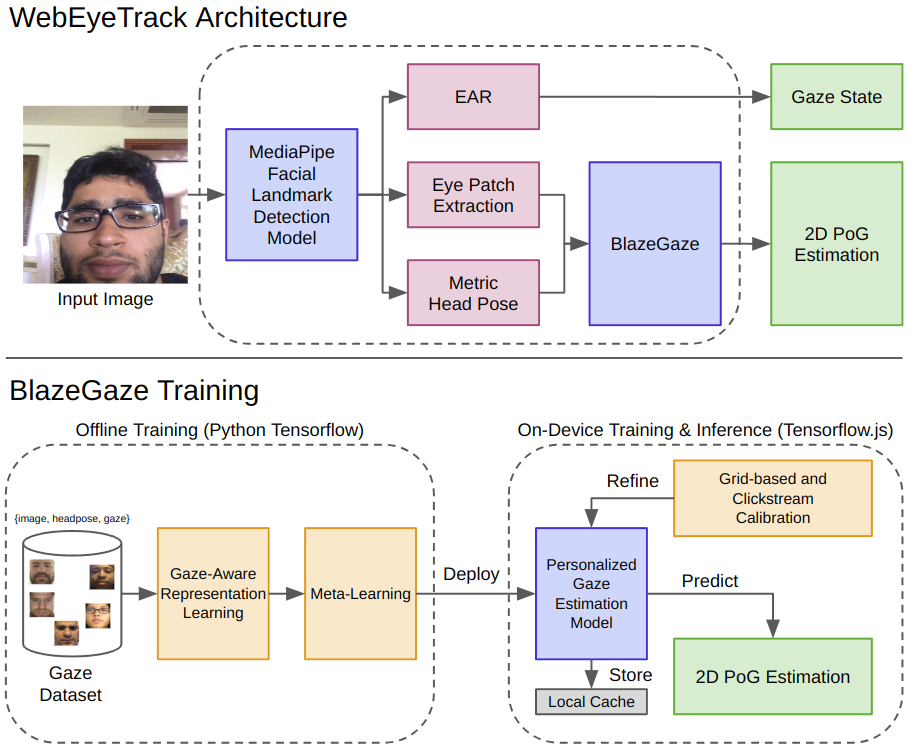

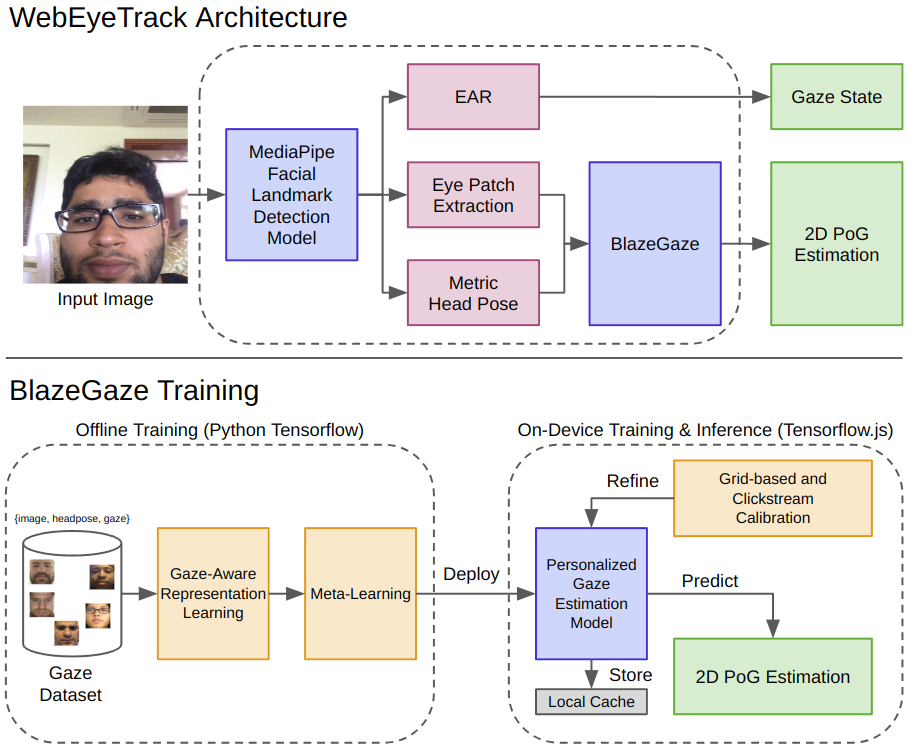

WebEyeTrack is composed of two principal components: (1) a model-based 3D face reconstruction and metric head pose estimation pipeline, and (2) the BlazeGaze CNN for appearance-based gaze estimation with few-shot personalization.

Figure 1: The WebEyeTrack framework integrates model-based routines and the BlazeGaze CNN, supporting on-device calibration and inference for privacy.

Metric Head Pose Estimation

The head pose estimation pipeline leverages MediaPipe's 3D facial landmark detection to reconstruct a canonical face mesh. To resolve the scale ambiguity inherent in monocular RGB input, the system employs iris-based face scaling, using a fixed iris diameter (α=1.2 cm) to estimate true facial dimensions. This enables transformation of normalized facial landmarks into metric space, which is critical for robust, device-agnostic gaze mapping.

Figure 2: Iris-based face scaling enables metric estimation of face width, supporting accurate 3D head pose recovery from monocular images.
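The scaling idea can be illustrated with a minimal sketch. It assumes landmarks are given as 2D points in some normalized unit and that the iris is seen roughly frontally. The function names and the example coordinates are hypothetical; only the fixed iris diameter of 1.2 cm comes from the text.

```python
import math

IRIS_DIAMETER_CM = 1.2  # fixed iris diameter (alpha) stated in the text


def metric_scale(iris_edge_a, iris_edge_b, iris_diameter_cm=IRIS_DIAMETER_CM):
    """Return centimetres per landmark unit from two opposite iris edge points."""
    diameter_units = math.dist(iris_edge_a, iris_edge_b)
    if diameter_units <= 0:
        raise ValueError("iris edge points must be distinct")
    return iris_diameter_cm / diameter_units


def to_metric(landmarks, scale):
    """Scale every landmark coordinate from normalized units to centimetres."""
    return [tuple(c * scale for c in point) for point in landmarks]


# Hypothetical normalized landmarks (not taken from the paper).
iris_left_edge, iris_right_edge = (0.40, 0.50), (0.43, 0.50)
face_left, face_right = (0.30, 0.52), (0.65, 0.52)

scale = metric_scale(iris_left_edge, iris_right_edge)
face_width_cm = scale * math.dist(face_left, face_right)
metric_landmarks = to_metric([face_left, face_right], scale)
```

With these made-up coordinates the iris spans 0.03 units, so one unit corresponds to about 40 cm and the face width comes out near 14 cm. A real pipeline would also have to account for perspective and head rotation, which this sketch ignores.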

A novel radial Procrustes alignment algorithm iteratively refines the translation component of the head pose, minimizing reprojection error between observed and predicted facial landmarks. This approach yields a metric 3D head pose ([R∣t′]) suitable for downstream gaze estimation without requiring depth sensors or multi-camera setups.
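To make the idea of refining translation by minimizing reprojection error concrete, here is a small sketch. It is not the radial Procrustes algorithm itself, whose details are not given here. It substitutes a generic coordinate-wise pattern search and assumes a pinhole camera with known focal length and principal point (`f`, `cx`, `cy`), which are hypothetical parameters introduced only for illustration. The rotation is assumed to be already applied to the metric face points.

```python
def project(point, f, cx, cy):
    """Pinhole projection of a camera-space 3D point (cm) to pixel coordinates."""
    x, y, z = point
    return (f * x / z + cx, f * y / z + cy)


def reprojection_error(points, observed, t, f, cx, cy):
    """Mean squared pixel distance between projected and observed landmarks."""
    total = 0.0
    for (x, y, z), (u, v) in zip(points, observed):
        pu, pv = project((x + t[0], y + t[1], z + t[2]), f, cx, cy)
        total += (pu - u) ** 2 + (pv - v) ** 2
    return total / len(points)


def refine_translation(points, observed, t0, f, cx, cy, step=5.0, min_step=1e-4):
    """Refine translation t by pattern search: try +/- step on each axis,
    keep any move that lowers the error, halve the step when none does."""
    min_z = min(p[2] for p in points)
    t = list(t0)
    best = reprojection_error(points, observed, t, f, cx, cy)
    while step > min_step:
        improved = False
        for axis in range(3):
            for delta in (step, -step):
                cand = list(t)
                cand[axis] += delta
                if cand[2] + min_z <= 0:
                    continue  # keep every point in front of the camera
                err = reprojection_error(points, observed, cand, f, cx, cy)
                if err < best:
                    t, best, improved = cand, err, True
        if not improved:
            step *= 0.5
    return tuple(t), best


# Synthetic check with hypothetical intrinsics and face points (cm).
F, CX, CY = 500.0, 320.0, 240.0
face_points = [(-7.0, 0.0, 0.0), (7.0, 0.0, 0.0), (0.0, -9.0, 1.0),
               (0.0, 9.0, 1.0), (0.0, 0.0, -3.0)]
true_t = (2.0, -1.0, 50.0)
observed = [project((x + true_t[0], y + true_t[1], z + true_t[2]), F, CX, CY)
            for x, y, z in face_points]
initial_t = (0.0, 0.0, 40.0)
t_est, err = refine_translation(face_points, observed, initial_t, F, CX, CY)
```

The synthetic landmarks are generated from a known translation, so the recovered `t_est` can be compared directly against `true_t`. Depth is only recoverable here because the face points are already in metric units, which is the role the iris-based scaling plays in the pipeline.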

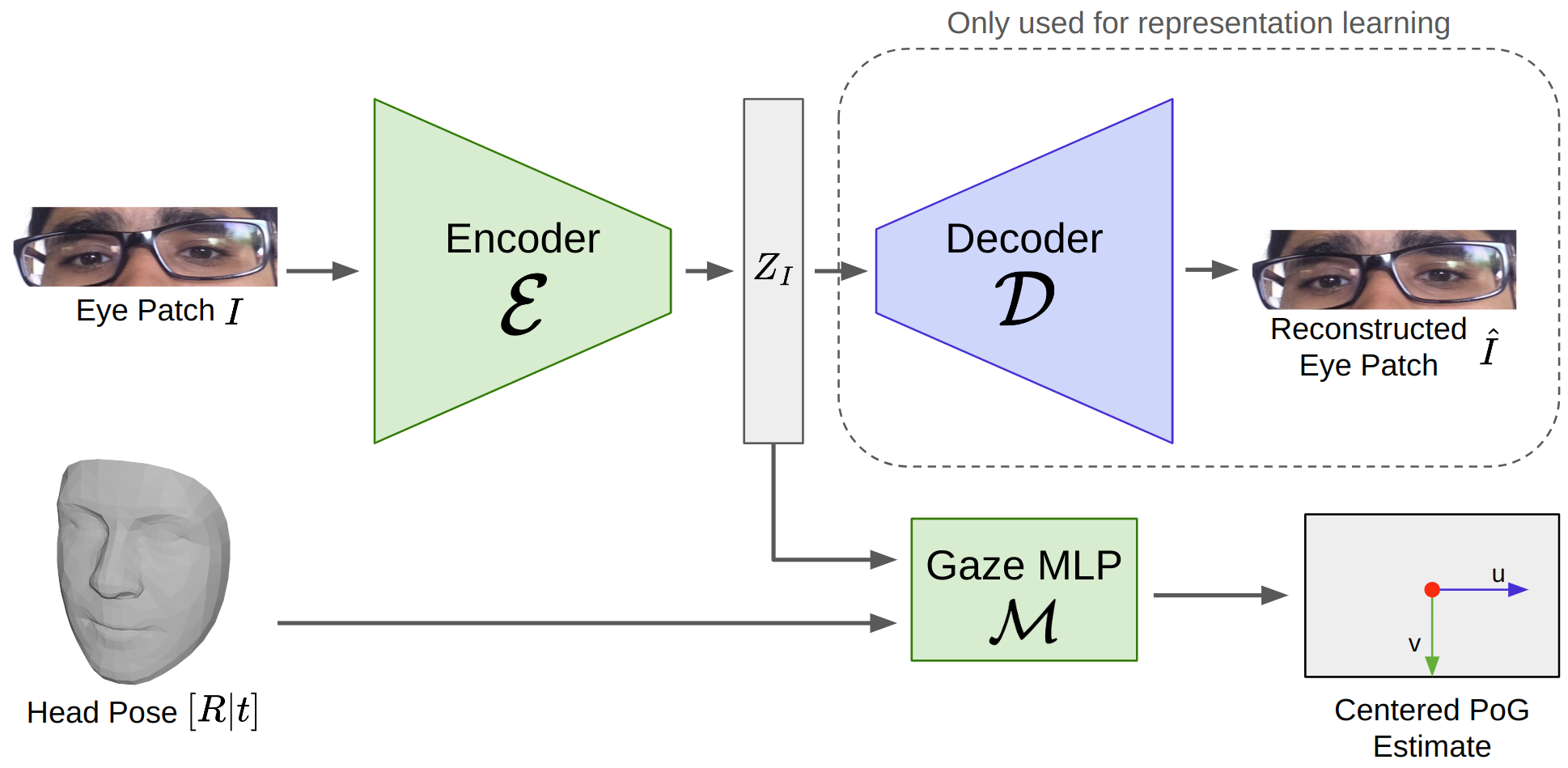

BlazeGaze: Lightweight, Personalized Gaze Estimation

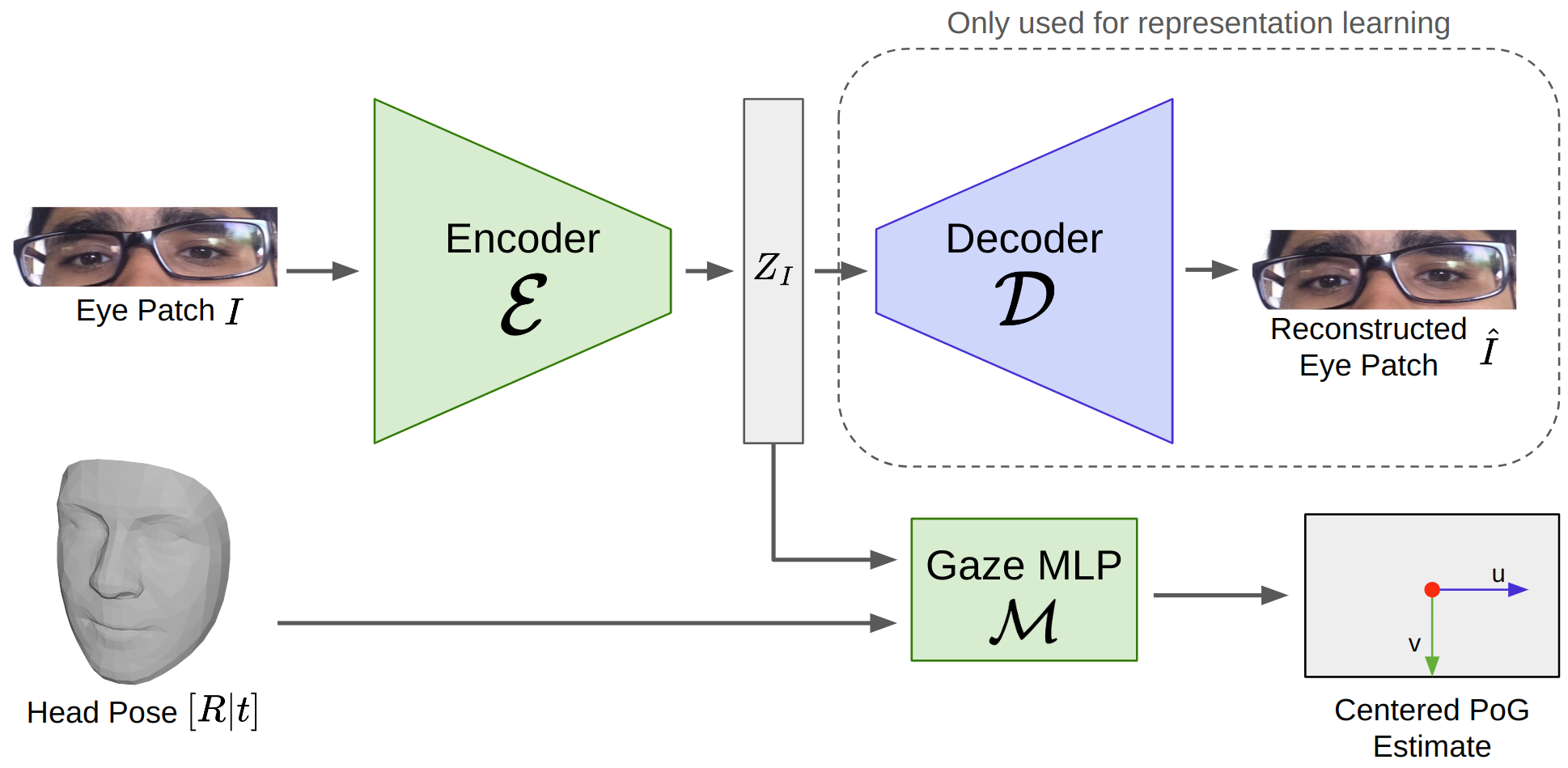

BlazeGaze is a compact CNN (670KB, 0.16M parameters) built from BlazeBlocks, optimized for real-time inference on CPUs and mobile GPUs. The model ingests an eye patch and the metric head pose, producing a normalized 2D point-of-gaze (PoG) prediction. The architecture consists of an encoder, a decoder (used only during representation learning), and a gaze estimator MLP.

Figure 3: BlazeGaze architecture: eye patch and metric head pose are processed by a BlazeBlock-based encoder and a gaze MLP; the decoder is used only for representation learning.

Training proceeds in two stages:

- Stage 1: Joint representation learning with a multi-objective loss (reconstruction, weighted L2 PoG, and embedding consistency) to structure the latent space for gaze-aware features.

- Stage 2: The encoder is frozen, and the gaze estimator is meta-trained using first-order MAML, enabling rapid adaptation to new users with at most nine calibration samples (k ≤ 9).

This meta-learning approach allows for efficient, on-device few-shot personalization, supporting privacy-preserving calibration and inference entirely within the browser.
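The Stage 2 procedure can be sketched on a toy problem. In the code below a one-dimensional linear model stands in for the gaze estimator MLP operating on frozen embeddings, and each simulated "user" is a slightly different linear function. The learning rates, step counts, and user distribution are hypothetical; only k=9 support and l=100 query samples per user follow the text.

```python
import random


def predict(params, x):
    w, b = params
    return w * x + b


def mse(params, batch):
    return sum((predict(params, x) - y) ** 2 for x, y in batch) / len(batch)


def mse_grad(params, batch):
    """Gradient of the mean squared error with respect to (w, b)."""
    n = len(batch)
    gw = sum(2.0 * (predict(params, x) - y) * x for x, y in batch) / n
    gb = sum(2.0 * (predict(params, x) - y) for x, y in batch) / n
    return gw, gb


def adapt(params, support, inner_lr=0.1, steps=5):
    """Inner loop: a few gradient steps on one user's calibration samples."""
    w, b = params
    for _ in range(steps):
        gw, gb = mse_grad((w, b), support)
        w, b = w - inner_lr * gw, b - inner_lr * gb
    return w, b


def sample_user(rng, k=9, l=100):
    """Simulated user: k support and l query samples from a user-specific line."""
    w_user = 2.0 + rng.uniform(-0.5, 0.5)
    b_user = -1.0 + rng.uniform(-0.5, 0.5)

    def draw(n):
        xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        return [(x, w_user * x + b_user) for x in xs]

    return draw(k), draw(l)


def fomaml_step(meta_params, tasks, inner_lr=0.1, outer_lr=0.05, steps=5):
    """Outer loop: average the query gradients taken at the adapted parameters
    and apply them to the meta-parameters (first-order: no second derivatives)."""
    gw_sum = gb_sum = 0.0
    for support, query in tasks:
        adapted = adapt(meta_params, support, inner_lr, steps)
        gw, gb = mse_grad(adapted, query)
        gw_sum += gw
        gb_sum += gb
    n = len(tasks)
    return (meta_params[0] - outer_lr * gw_sum / n,
            meta_params[1] - outer_lr * gb_sum / n)


rng = random.Random(0)
meta_params = (0.0, 0.0)
for _ in range(300):
    meta_params = fomaml_step(meta_params, [sample_user(rng) for _ in range(4)])

# Personalize to a new, unseen user from nine calibration samples.
support, query = sample_user(rng)
loss_before = mse(meta_params, query)
loss_after = mse(adapt(meta_params, support), query)
```

The first-order approximation is what keeps the outer update cheap: the query gradient is evaluated at the adapted parameters and applied directly to the meta-parameters, without differentiating through the inner loop. At deployment only `adapt` needs to run on the device.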

Implementation and Deployment

The pipeline is implemented in both Python (TensorFlow 2) and JavaScript (TensorFlow.js), with models converted for browser deployment. The normalization pipeline eschews iterative PnP solvers in favor of homography-based warping using MediaPipe landmarks, ensuring compatibility with devices lacking camera intrinsics.

The encoder processes 128×512×3 eye-region images, outputting a 512-dimensional embedding. The gaze estimator MLP (16-16-2) combines this embedding with the head pose. Training uses Adam and SGD optimizers with exponential decay, and meta-learning is performed with k=9 support and l=100 query samples per user.

On-device training and inference are supported via TensorFlow.js LayerModels, ensuring that user data remains local and private. Web Workers are used to offload computation, maintaining UI responsiveness.

Experimental Results

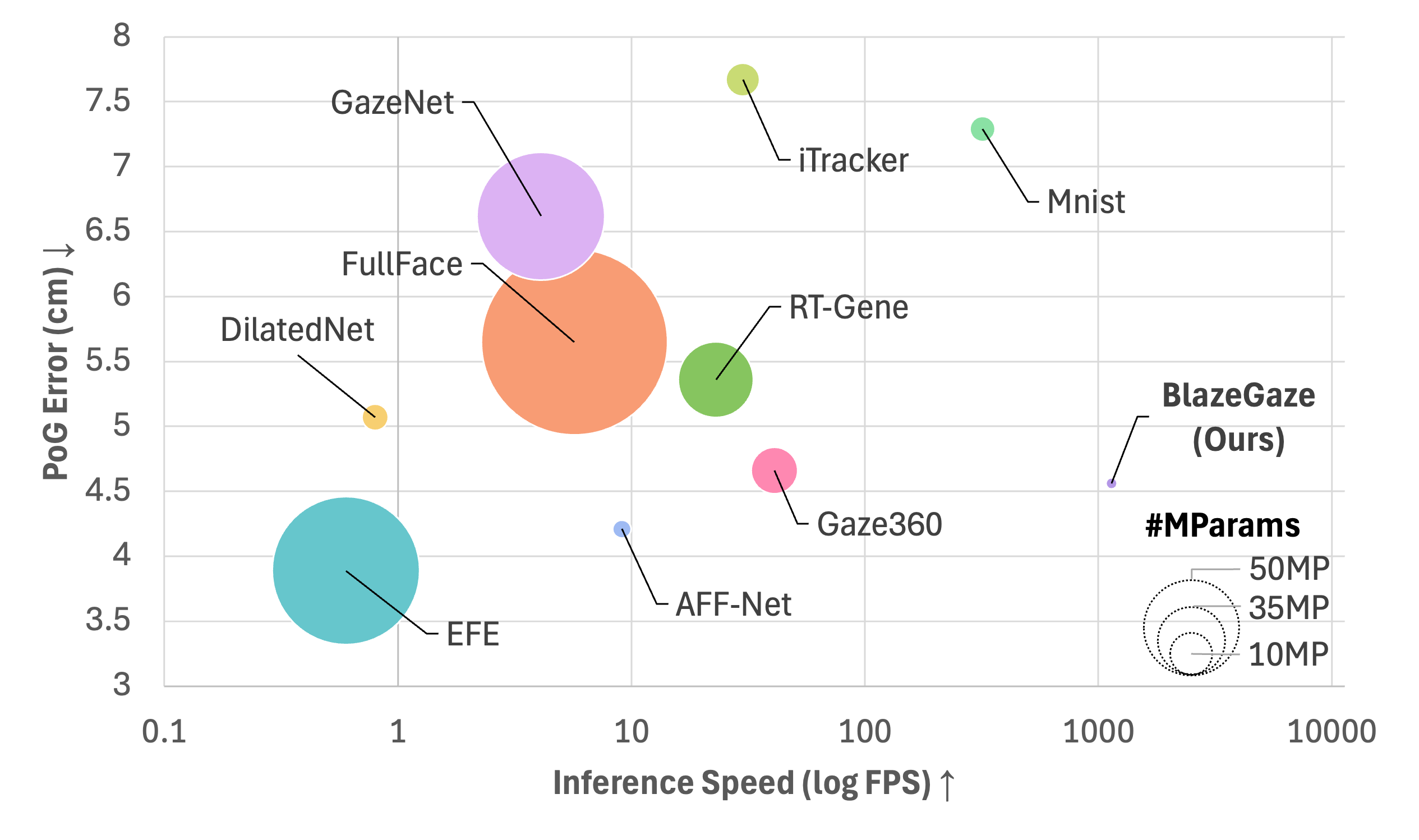

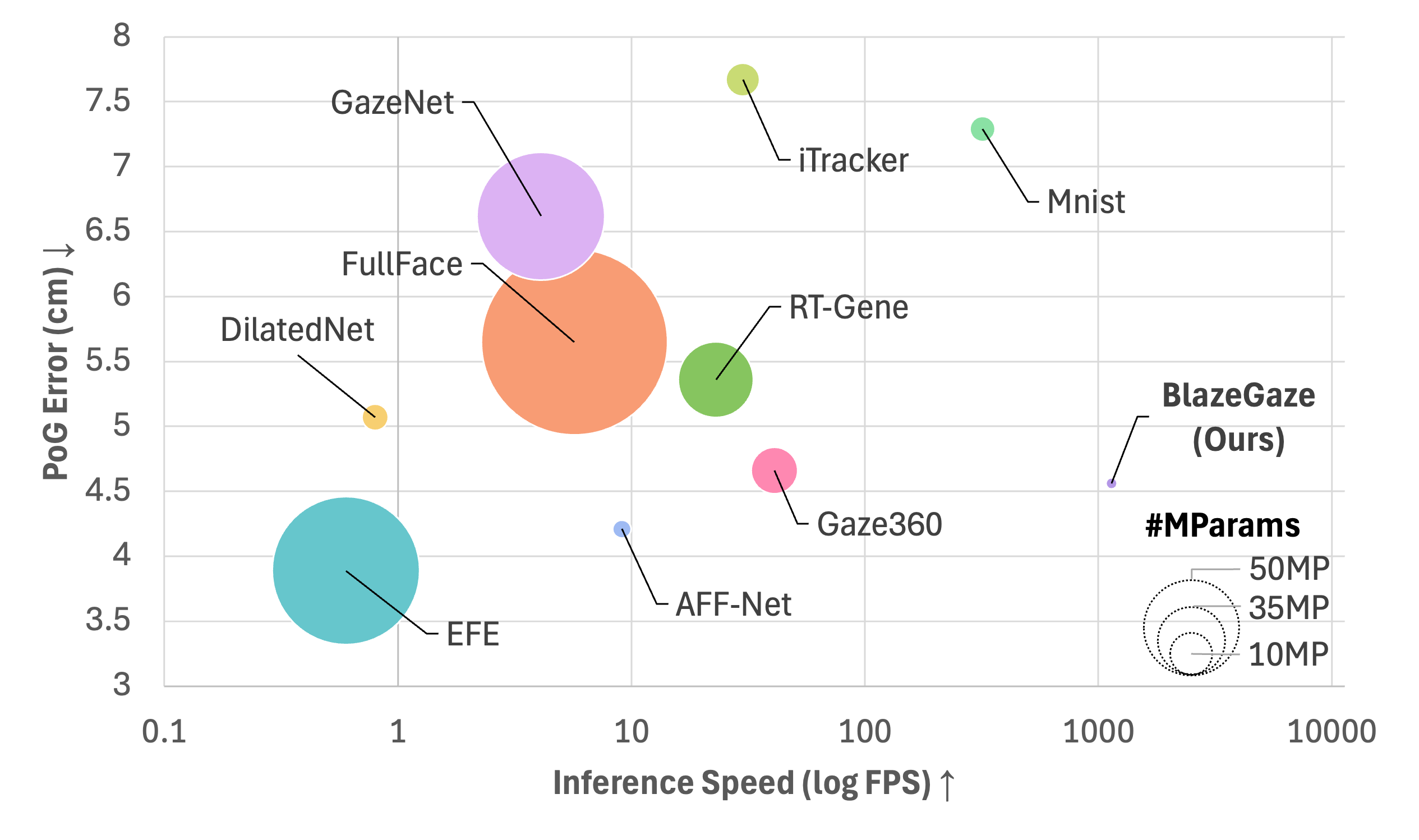

Accuracy vs. Efficiency

BlazeGaze achieves a PoG error of 2.32 cm on GazeCapture and 4.56 cm on MPIIFaceGaze, with an inference latency of 0.88 ms (1137 FPS) on a desktop CPU. This places it among the most efficient models: it outperforms prior lightweight solutions by a substantial margin in both speed and model size while maintaining competitive accuracy.

Figure 4: BlazeGaze achieves high accuracy with orders of magnitude faster inference speed compared to prior gaze estimation methods.
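The latency and throughput figures describe the same measurement, which a short calculation can confirm. This sketch assumes frames are processed one at a time with no batching or pipelining; the function name is illustrative.

```python
def fps_from_latency_ms(latency_ms):
    """Frames per second when each frame takes latency_ms to process."""
    return 1000.0 / latency_ms


fps = fps_from_latency_ms(0.88)        # about 1136.4 FPS
latency_ms = round(1000.0 / 1137, 2)   # 1137 FPS rounds back to 0.88 ms
```

The small gap between roughly 1136 FPS and the reported 1137 FPS is consistent with the latency having been rounded to two decimal places.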

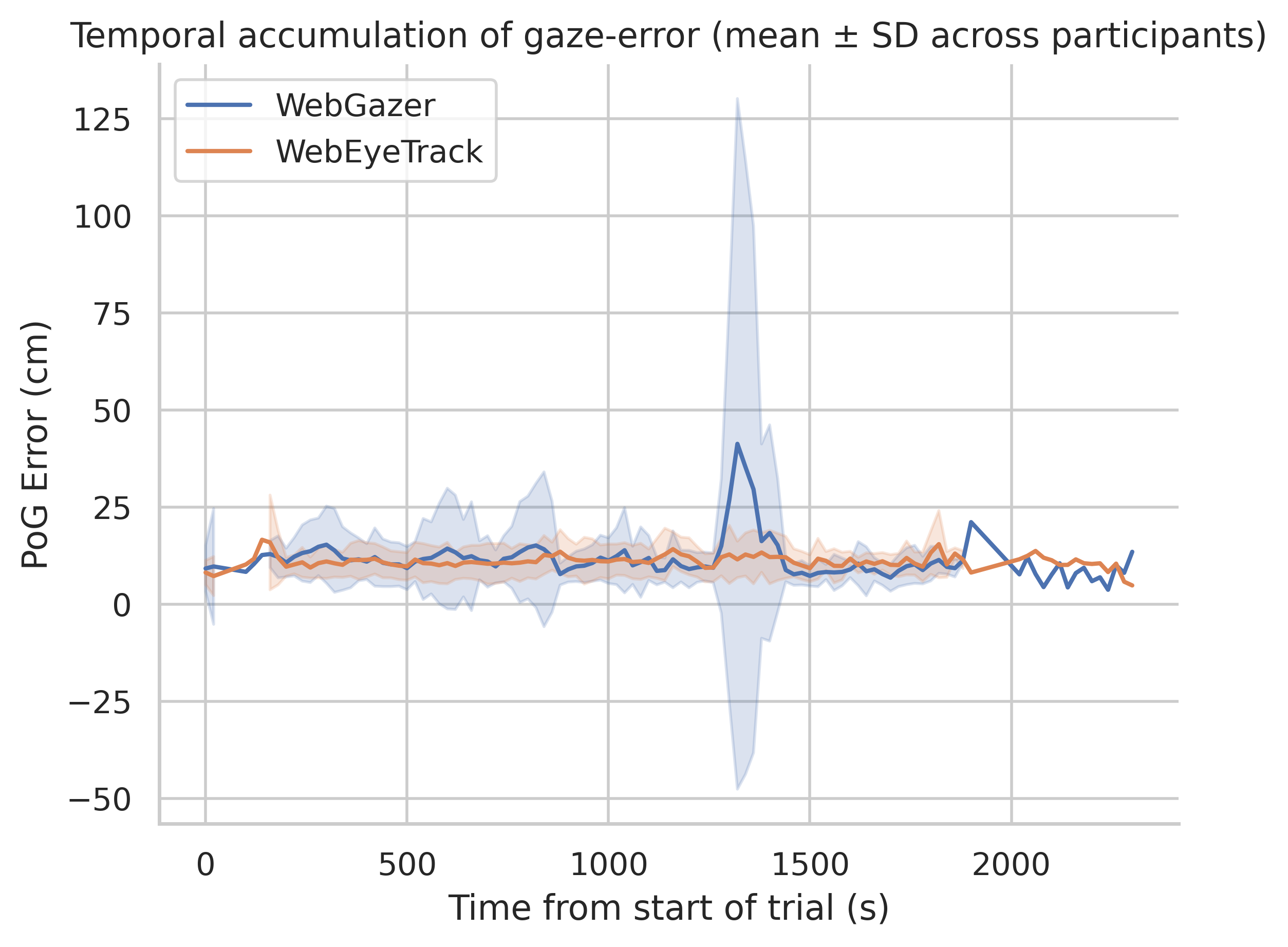

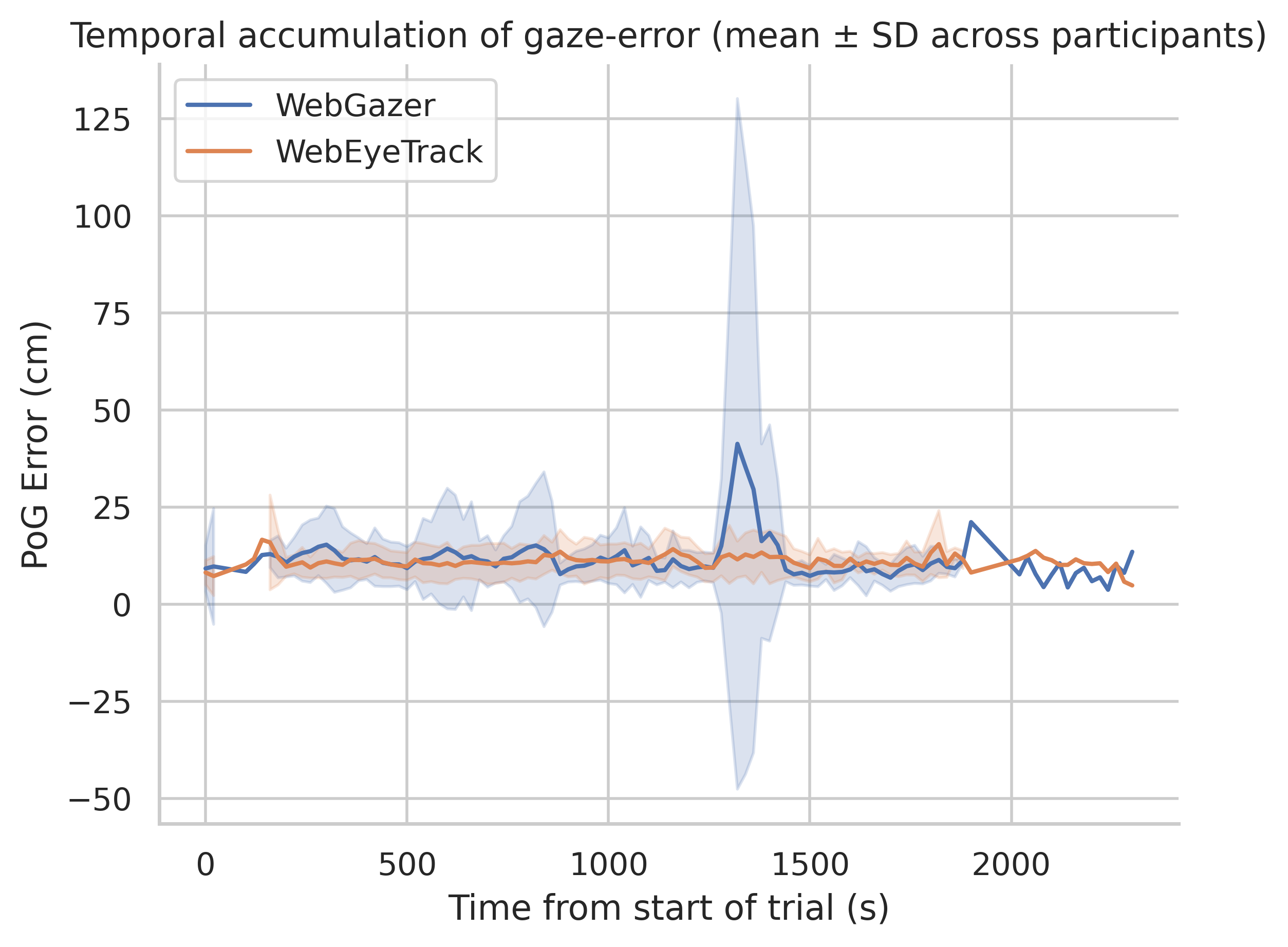

Temporal Robustness and Cross-Dataset Generalization

On the Eye of the Typer dataset, WebEyeTrack demonstrates strong spatial and temporal consistency, with limited drift over 20-minute sessions. Compared to WebGazer, which lacks head pose awareness, WebEyeTrack exhibits only a 20% increase in error over time (7.24 cm → 8.72 cm), versus a 49% increase for WebGazer (7.79 cm → 11.62 cm). A Mann–Whitney U test (p<0.05) indicates that this difference is statistically significant.

Figure 5: WebEyeTrack maintains lower PoG error and reduced temporal drift compared to WebGazer over extended sessions.
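The drift percentages follow directly from the start and end errors quoted above, as this short check shows. The helper name is illustrative.

```python
def relative_increase_pct(start_cm, end_cm):
    """Percentage increase from start_cm to end_cm."""
    return 100.0 * (end_cm - start_cm) / start_cm


webeyetrack_drift = relative_increase_pct(7.24, 8.72)   # about 20.4%
webgazer_drift = relative_increase_pct(7.79, 11.62)     # about 49.2%
```

In absolute terms WebEyeTrack drifts by 1.48 cm over the session, against 3.83 cm for WebGazer.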

Qualitative visualizations further confirm that WebEyeTrack's predictions remain closely aligned with ground truth from a commercial Tobii tracker, even after prolonged use.

Implications and Future Directions

WebEyeTrack demonstrates that state-of-the-art gaze estimation accuracy and robustness can be achieved in real-time, browser-based settings with minimal computational overhead and strong privacy guarantees. The integration of metric head pose estimation and few-shot meta-learning enables rapid, user-specific adaptation, addressing the key limitations of prior webcam-based and deep learning approaches.

Practically, this enables scalable deployment of eye-tracking in education, accessibility, behavioral research, and HCI applications without specialized hardware or cloud-based inference. The open-source release further facilitates reproducibility and adoption.

Theoretically, the work highlights the importance of geometric normalization, lightweight architectures, and meta-learning for real-world gaze estimation. The strong empirical results challenge the prevailing trend toward ever-larger models, demonstrating that careful architectural and algorithmic choices can yield efficient, deployable solutions.

Future research should address model fairness across demographic groups, reduce energy consumption for mobile deployment, and explore unsupervised or label-free adaptation to minimize calibration effort.

Conclusion

WebEyeTrack provides a comprehensive, efficient, and privacy-preserving solution for browser-based eye-tracking, combining metric head pose estimation, lightweight CNNs, and on-device few-shot personalization. The framework achieves competitive accuracy and robustness with orders of magnitude lower computational cost, enabling practical, scalable deployment of gaze-based interaction and analytics on consumer devices.