- The paper introduces a dual-system cognitive framework that models both reactive and deliberative behaviors for lifelike avatar rendering.

- It leverages Multimodal Large Language Models for high-level planning and a diffusion transformer for low-level synthesis, ensuring semantic consistency.

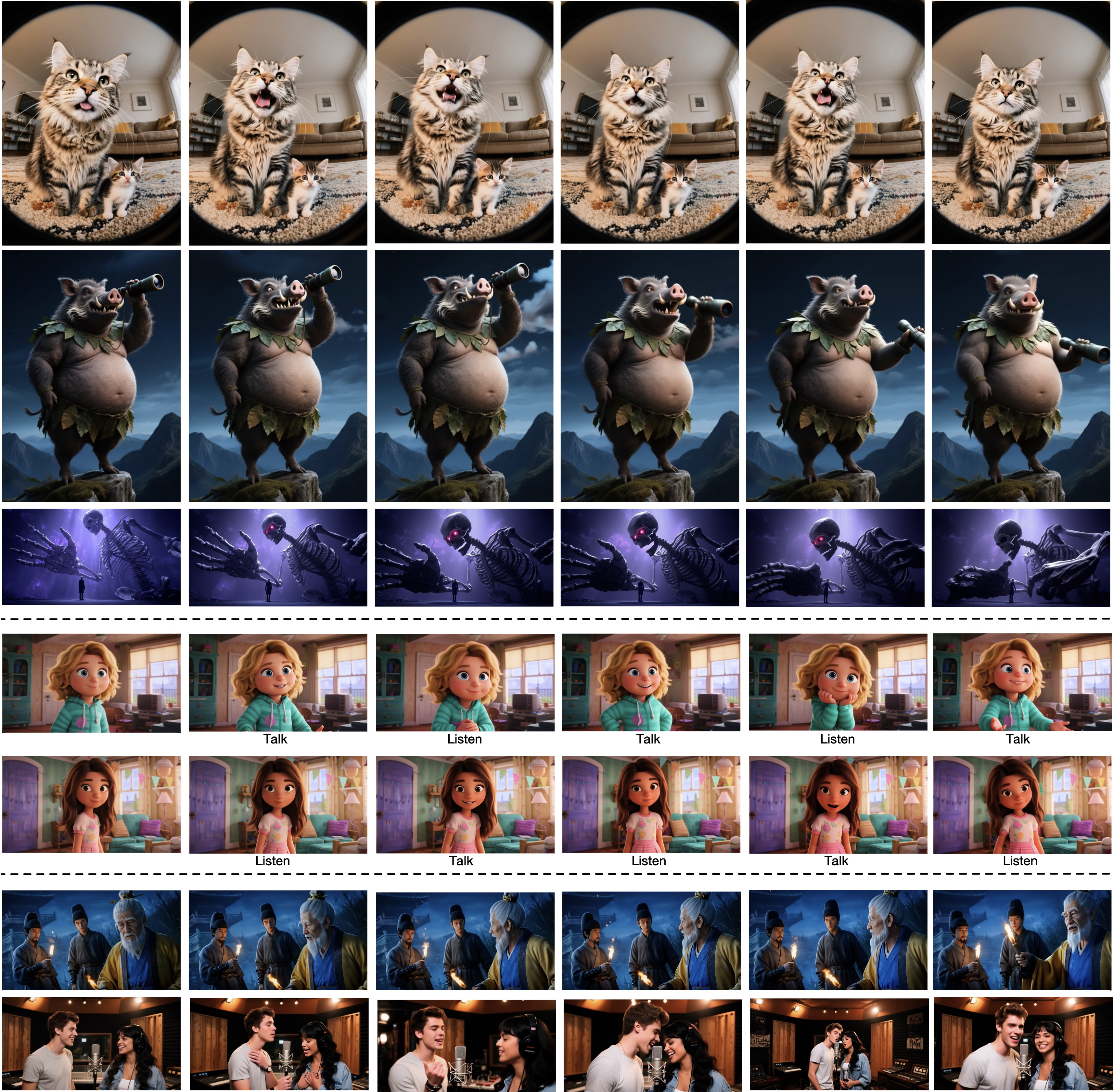

- Empirical results demonstrate improved motion naturalness and robust generalization across diverse avatars and multi-agent scenarios.

Cognitive Simulation for Lifelike Avatars: The OmniHuman-1.5 Framework

Introduction and Motivation

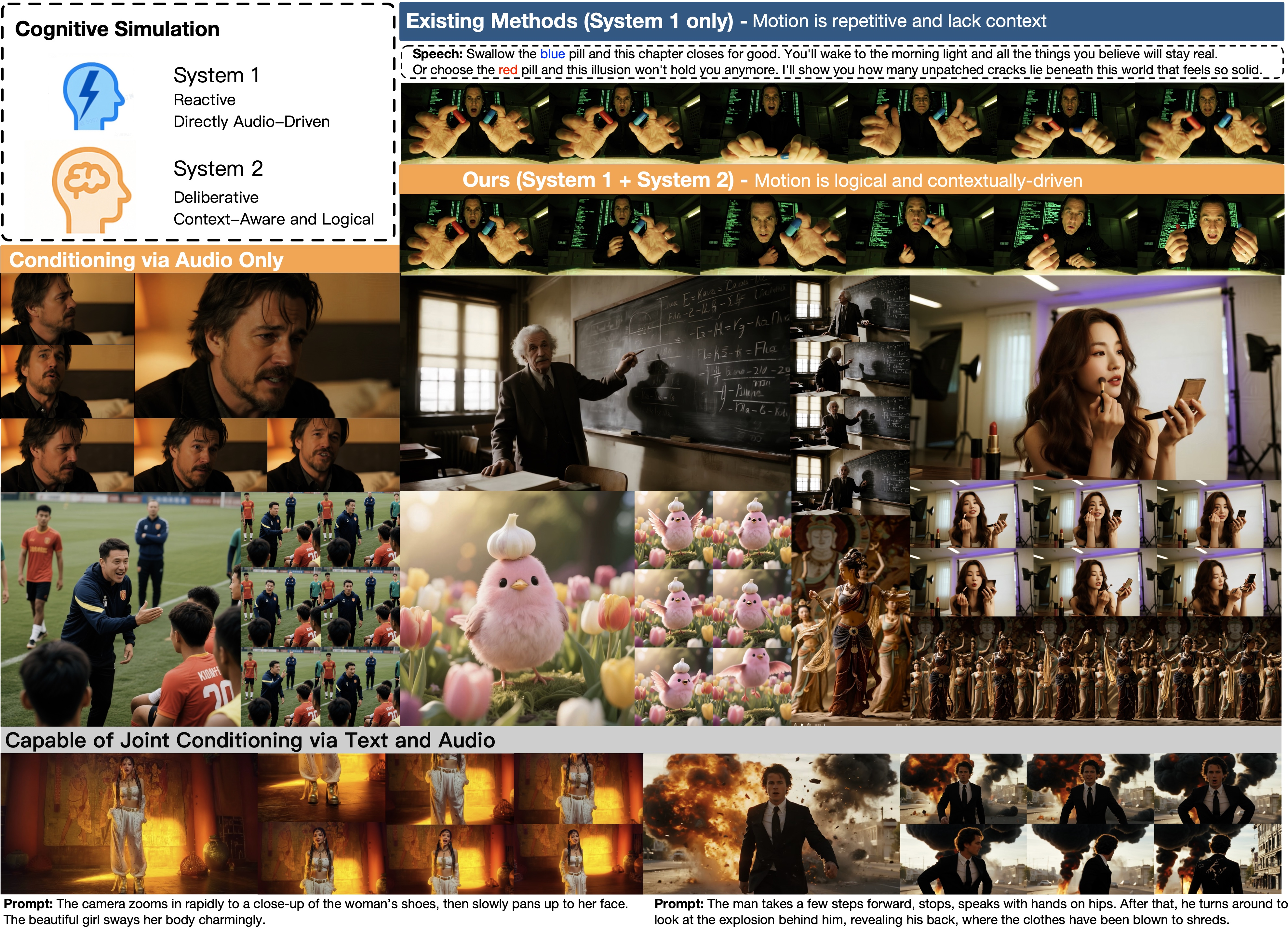

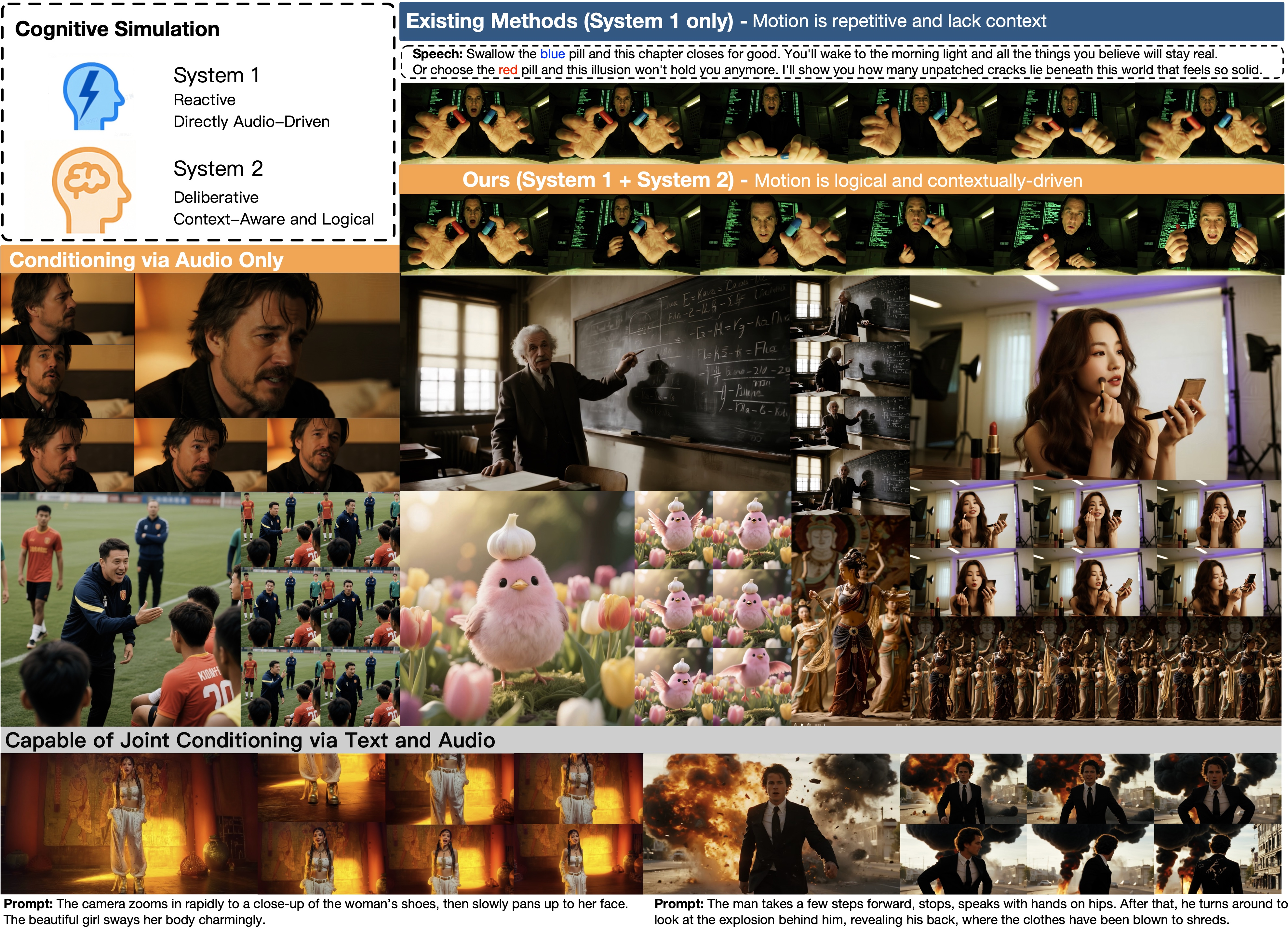

OmniHuman-1.5 addresses a fundamental limitation in current video avatar models: the inability to generate semantically coherent, contextually appropriate, and expressive human-like behaviors. Existing approaches predominantly synchronize avatar motion with low-level cues such as audio rhythm, resulting in outputs that lack deeper semantic understanding of intent, emotion, or context. The paper frames this gap through the lens of dual-system cognitive theory, distinguishing between fast, reactive (System 1) and slow, deliberative (System 2) processes. The proposed solution is a dual-system simulation framework that explicitly models both reactive and deliberative behaviors, leveraging Multimodal LLMs (MLLMs) for high-level reasoning and a specialized Multimodal Diffusion Transformer (MMDiT) for low-level rendering.

Figure 1: The dual-system theory motivates the integration of reactive (System 1) and deliberative (System 2) processes for avatar behavior, enabling both context-aware gestures and low-level synchronization.

Dual-System Simulation Architecture

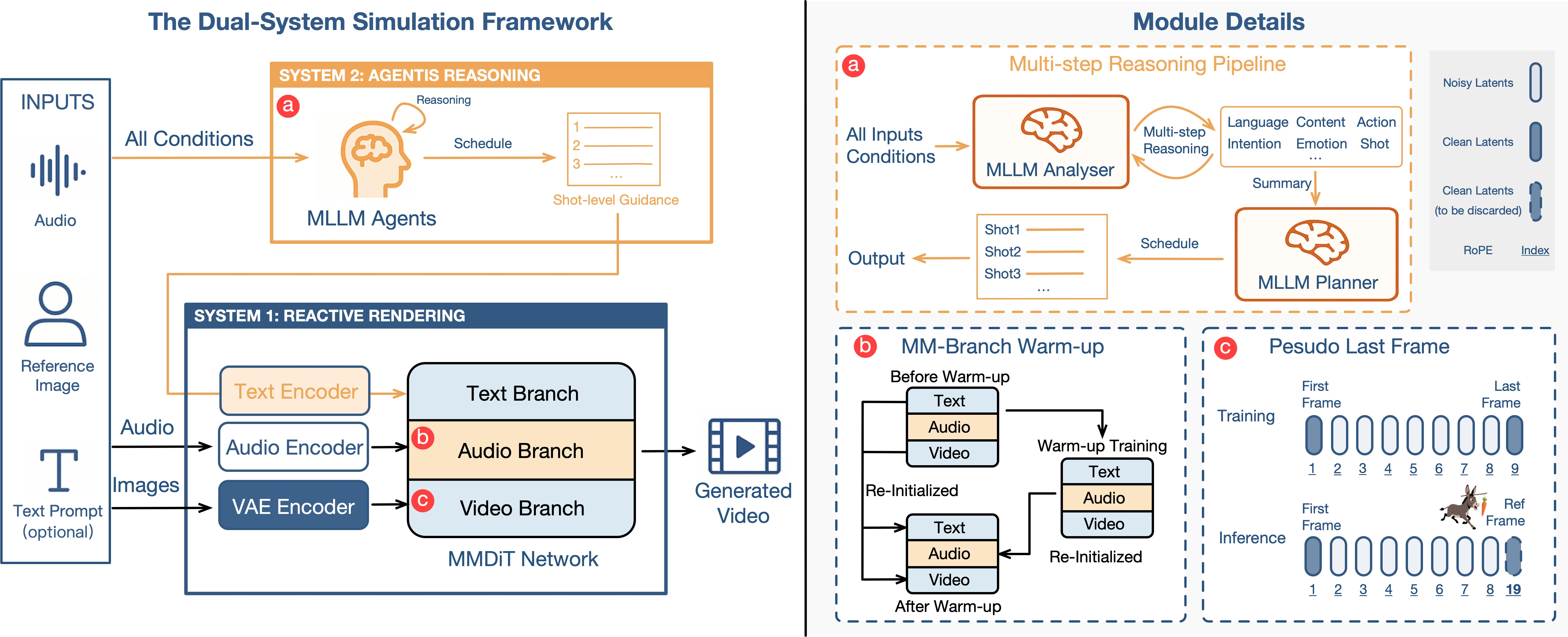

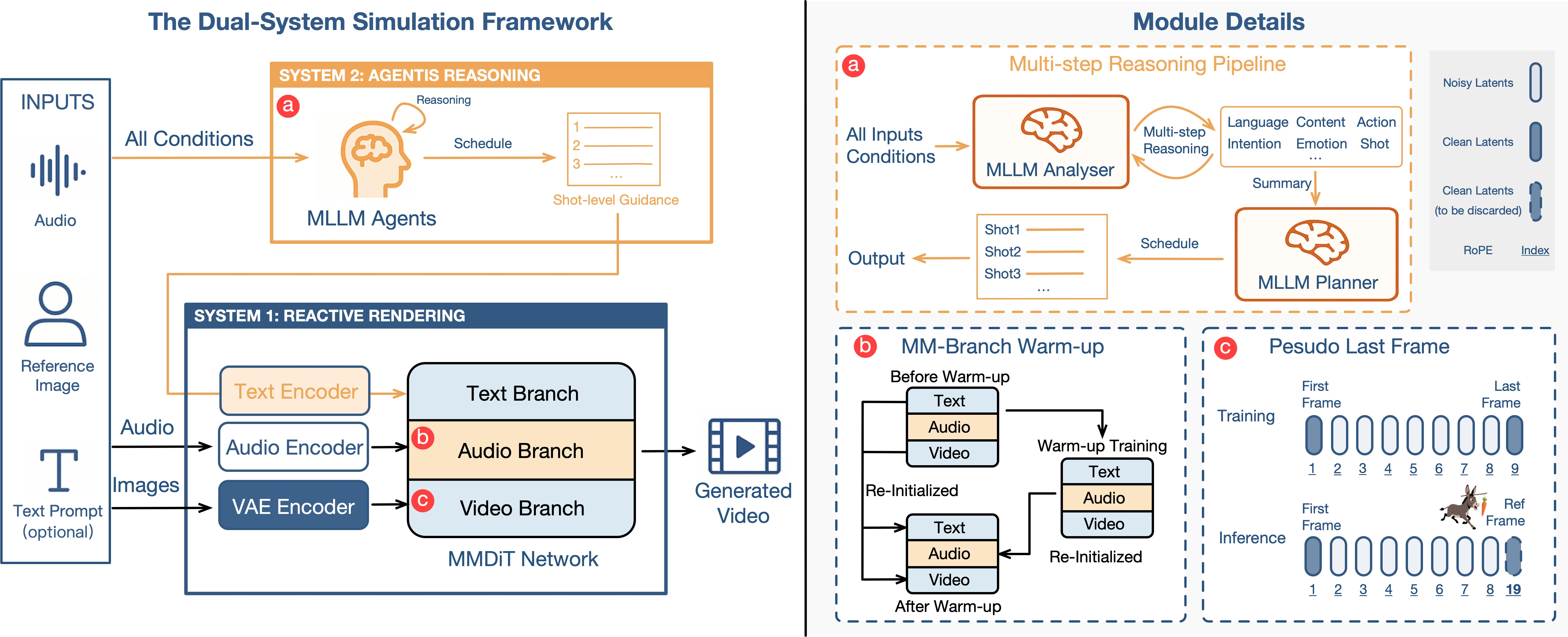

The core architectural innovation is the explicit separation and integration of System 1 and System 2 processes. System 2 is instantiated as an agentic reasoning module powered by MLLMs, which ingests multimodal inputs (audio, image, text) and produces a structured, high-level "schedule" of actions. This schedule is then used to guide System 1, implemented as an MMDiT network, which synthesizes the final video by fusing information from dedicated text, audio, and video branches.

Figure 2: The dual-system simulation framework: System 2 (MLLM agent) generates a high-level action schedule, which guides System 1 (MMDiT) in multimodal video synthesis. Key training strategies mitigate modality conflicts.

Agentic Reasoning and Planning

The agentic reasoning module operates in two stages:

- Analyzer MLLM: Receives the reference image, audio, and an optional text prompt. Using chain-of-thought prompting, it infers persona, emotion, intent, and context, and outputs a structured semantic representation.

- Planner MLLM: Consumes the Analyzer's output and the reference image, generating a temporally segmented action plan (schedule) that specifies expressions and actions for each video segment.

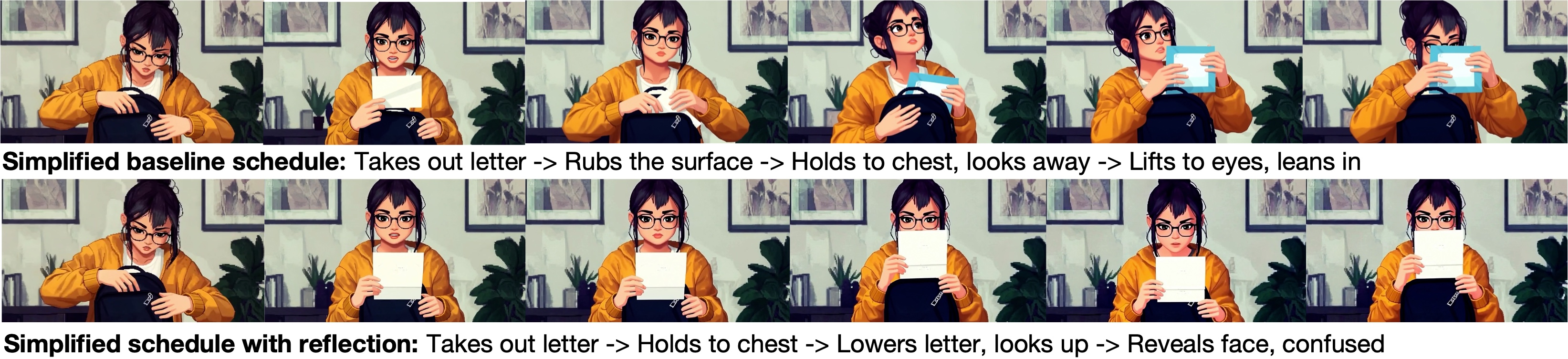

A reflection mechanism enables the Planner to revise its plan based on generated frames, correcting for semantic drift and ensuring logical consistency in long-form video synthesis.
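The Analyzer, Planner, and reflection steps can be illustrated with a minimal Python sketch. Everything named here is hypothetical and not taken from the paper: the `Analysis` and `Segment` fields, the `run_planning` function, and the four toy callables that stand in for MLLM calls and video synthesis. The sketch also assumes that reflection only rewrites segments that have not been rendered yet, which the source does not specify.

```python
from dataclasses import dataclass, replace
from typing import Callable, List


@dataclass(frozen=True)
class Analysis:
    """Structured output of the Analyzer (field names are assumptions)."""
    persona: str
    emotion: str
    intent: str
    context: str


@dataclass(frozen=True)
class Segment:
    """One entry of the Planner's schedule (field names are assumptions)."""
    start_s: float
    end_s: float
    expression: str
    action: str


def run_planning(
    inputs: dict,
    analyze: Callable[[dict], Analysis],
    plan: Callable[[Analysis, str], List[Segment]],
    render: Callable[[Segment], str],
    reflect: Callable[[List[Segment], str], List[Segment]],
) -> List[Segment]:
    """Analyze, plan, then render segment by segment with reflection."""
    analysis = analyze(inputs)
    schedule = plan(analysis, inputs["image"])
    i = 0
    while i < len(schedule):
        frames = render(schedule[i])
        # Reflection may revise the not-yet-rendered tail of the schedule.
        schedule = schedule[: i + 1] + reflect(schedule[i + 1:], frames)
        i += 1
    return schedule


# Toy stand-ins for the MLLM calls and the renderer (all hypothetical).
def toy_analyze(inputs: dict) -> Analysis:
    return Analysis("barista", "cheerful", "explain a recipe", inputs["text"])


def toy_plan(analysis: Analysis, image: str) -> List[Segment]:
    return [
        Segment(0.0, 2.0, "smile", "picks up a cup"),
        Segment(2.0, 4.0, "focused", "gestures with both hands"),
    ]


def toy_render(segment: Segment) -> str:
    # Returns a caption of what the generated frames would show.
    return "holding cup" if "cup" in segment.action else "hands free"


def toy_reflect(remaining: List[Segment], frames: str) -> List[Segment]:
    # If the cup is in hand, a later two-handed gesture would make it vanish.
    if frames != "holding cup":
        return remaining
    return [
        replace(s, action="gestures with the free hand, cup still held")
        if "both hands" in s.action else s
        for s in remaining
    ]


final_schedule = run_planning(
    {"image": "ref.png", "audio": "speech.wav", "text": "cafe scene"},
    toy_analyze, toy_plan, toy_render, toy_reflect,
)
```

In this toy run, the second segment is rewritten after the first one is rendered, which mirrors the kind of object-consistency correction the reflection step is described as performing.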

On the System 1 side, the MMDiT backbone incorporates three design choices:

- Dedicated Audio Branch: Instead of cross-attention injection, audio features are processed in a branch architecturally symmetric to the video and text branches, enabling deep, iterative fusion via shared multi-head self-attention.

- Pseudo Last Frame Strategy: To avoid spurious correlations and motion artifacts from reference image conditioning, the model is trained with ground-truth first/last frames and, at inference, uses the user-provided reference image as a pseudo last frame with shifted positional encoding. This guides identity preservation without constraining motion diversity.

- Two-Stage Warm-Up: To prevent modality dominance and overfitting, training proceeds in two stages: the three branches are first trained jointly, and the model is then fine-tuned starting from pre-trained weights for the text and video branches and the warmed-up weights for the audio branch.
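The idea behind the symmetric audio branch, fusing modalities through shared self-attention rather than cross-attention injection, can be sketched in a few lines of plain Python. This is a deliberately simplified illustration and not the paper's implementation: it uses a single attention head, identity query/key/value projections, and no learned weights, and the function name `joint_self_attention` is hypothetical. The point it shows is only that tokens from all three branches are concatenated, attend to one another in one pass, and are then split back per branch.

```python
import math
from typing import List, Tuple

Token = List[float]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def joint_self_attention(
    text: List[Token], audio: List[Token], video: List[Token]
) -> Tuple[List[Token], List[Token], List[Token]]:
    """Single-head self-attention over the concatenated token sequence.

    Simplification: queries, keys, and values are the raw tokens.
    """
    tokens = text + audio + video
    d = len(tokens[0])
    out = []
    for q in tokens:
        # Scaled dot-product scores against every token of every modality.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)])
    n_t, n_a = len(text), len(audio)
    return out[:n_t], out[n_t:n_t + n_a], out[n_t + n_a:]


# Toy tokens with two feature dimensions each.
text_tokens = [[1.0, 0.0]]
audio_tokens = [[0.0, 1.0]]
video_tokens = [[1.0, 1.0], [0.5, 0.5]]
text_out, audio_out, video_out = joint_self_attention(
    text_tokens, audio_tokens, video_tokens
)
```

Because every video token attends directly to the audio tokens, changing the audio input changes the video outputs within the same layer, which is the sense in which the fusion is symmetric across branches.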

Empirical Evaluation and Ablation

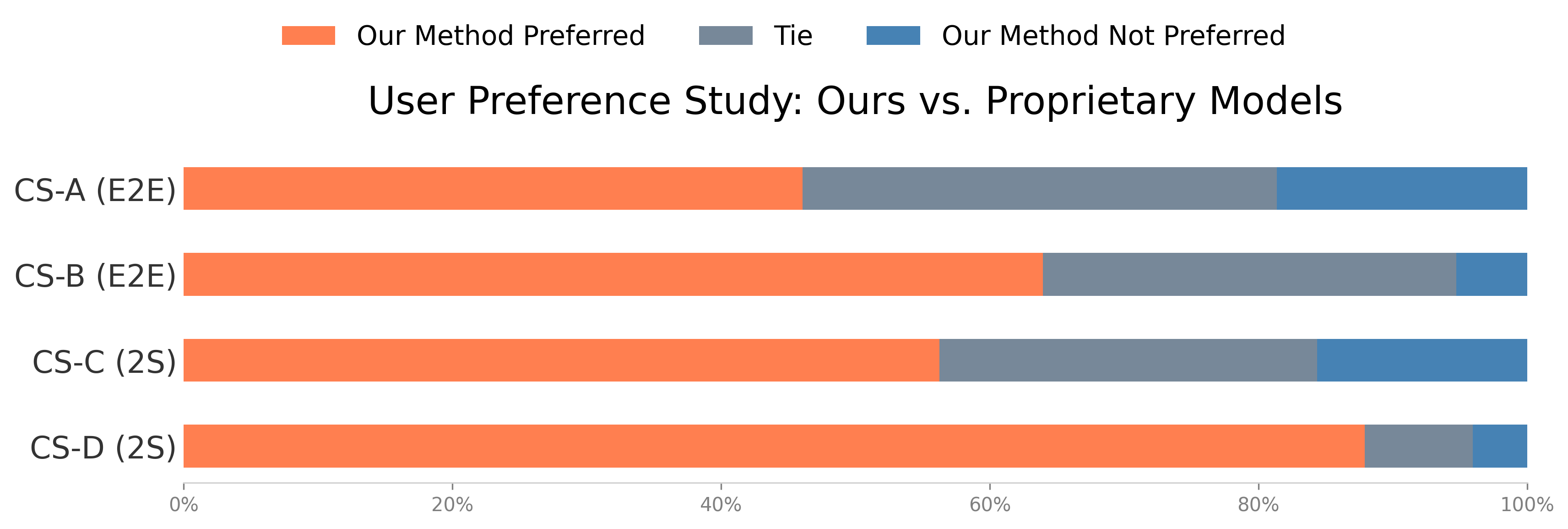

The framework is evaluated on challenging benchmarks, including custom single- and multi-subject test sets with diverse human, AIGC, anime, and animal avatars, as well as standard datasets (CelebV-HQ, CyberHost). Metrics include FID, FVD, IQA, ASE, Sync-C, HKC, and HKV, complemented by comprehensive user studies.

Key findings:

- Agentic Reasoning: Ablating the reasoning module markedly increases perceived motion unnaturalness and significantly lowers user preference, even though low-level metrics change only slightly. The full model achieves a 20% reduction in perceived motion unnaturalness and a +0.29 GSB user preference score.

- Conditioning Architecture: The proposed symmetric fusion and pseudo last frame strategy yield superior motion dynamics (HKV), lip-sync, and visual quality, outperforming state-of-the-art baselines in both objective and subjective evaluations.

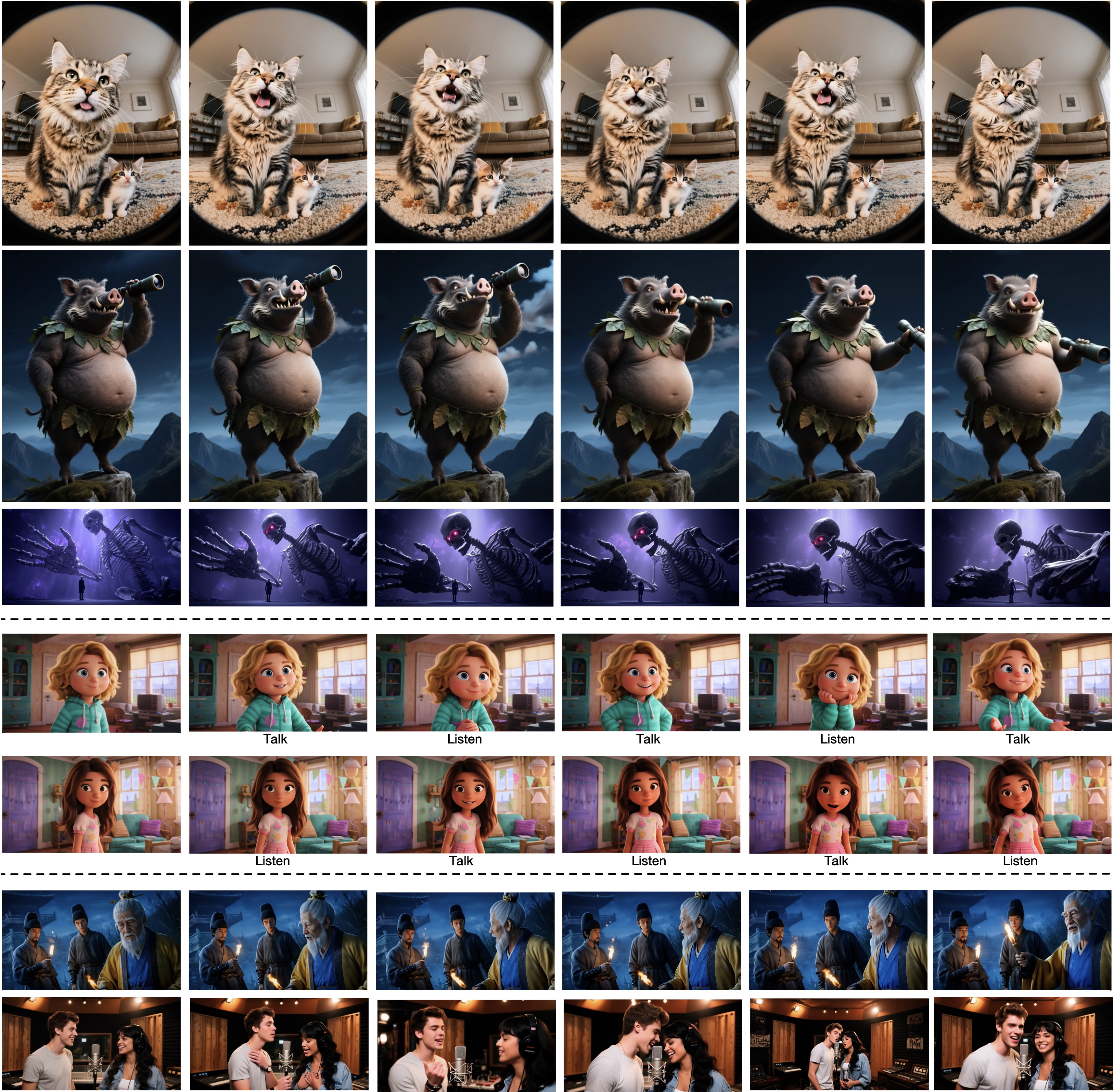

- Generalization: The model demonstrates robust performance on non-human avatars and multi-person scenes, with coordinated, context-aware behaviors and accurate conversational turn-taking.

Figure 3: The model generalizes to non-human avatars and multi-person scenes, maintaining contextually appropriate and coordinated behaviors.

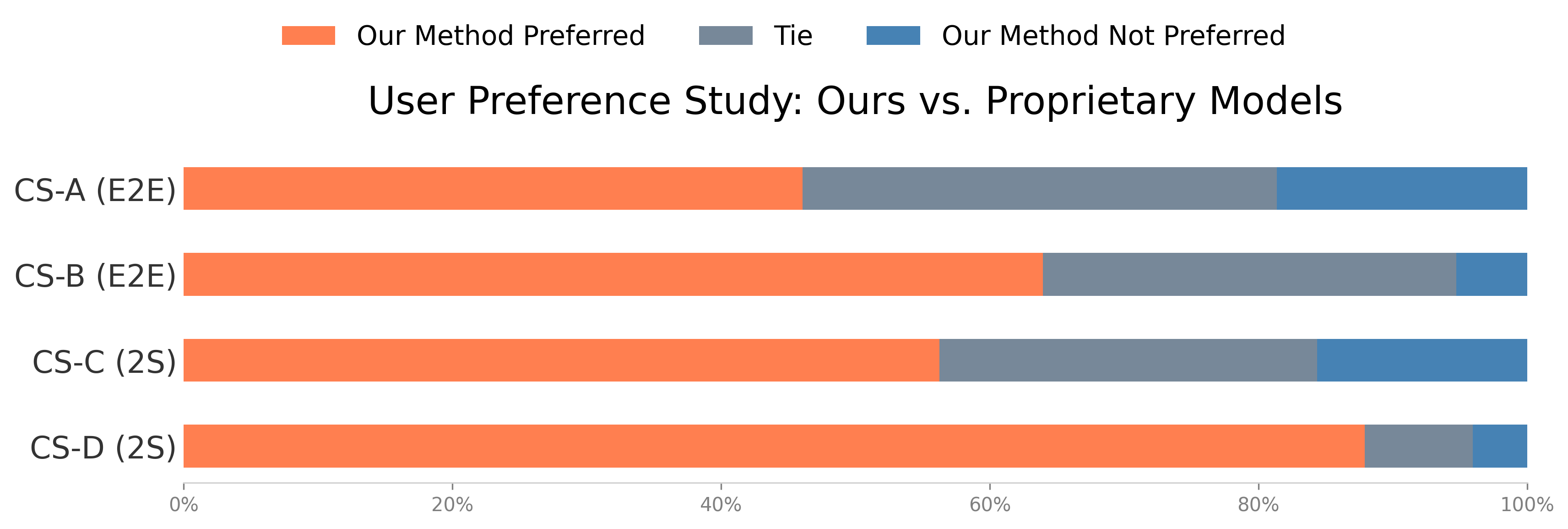

Figure 4: User studies show strong preference for the proposed method over academic and proprietary baselines in both best-choice and pairwise GSB evaluations.
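For readers unfamiliar with GSB (Good/Same/Bad) scoring, the sketch below assumes the commonly used definition, (Good - Bad) / (Good + Same + Bad), which ranges from -1 to +1. The source does not spell out its exact formula, so this definition is an assumption, and the vote counts in the example are hypothetical numbers chosen only to show the arithmetic.

```python
def gsb_score(good: int, same: int, bad: int) -> float:
    """Pairwise preference score under the assumed GSB definition.

    good: votes preferring the proposed method
    same: votes judging both outputs equal
    bad:  votes preferring the baseline
    """
    total = good + same + bad
    if total == 0:
        raise ValueError("at least one vote is required")
    return (good - bad) / total


# Hypothetical vote counts, not taken from the paper's user study.
example = gsb_score(good=45, same=39, bad=16)
```

Under this definition, a positive score means the proposed method was preferred more often than the baseline, and ties dilute the score without changing its sign.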

Qualitative Analysis and Reflection

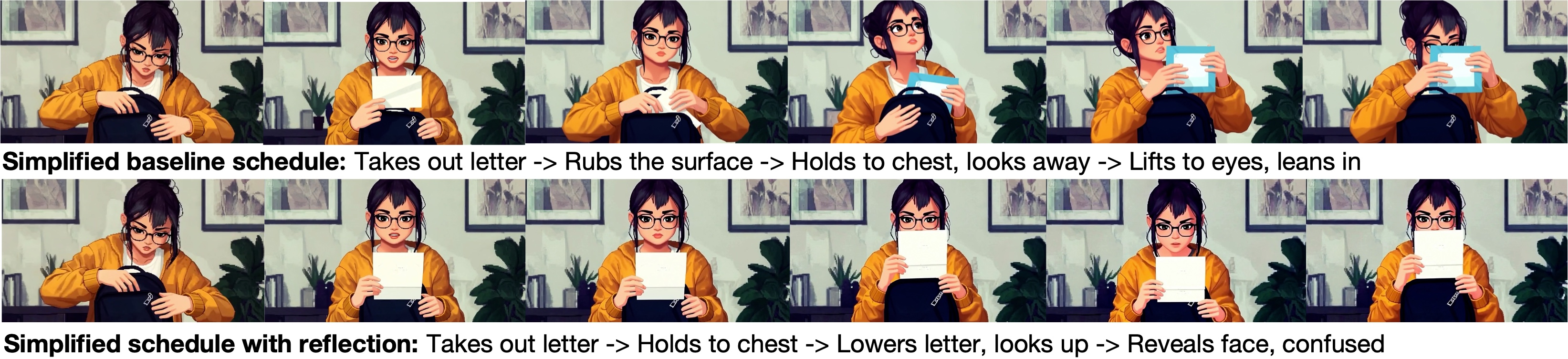

The reflection process is shown to correct logical inconsistencies in action planning, such as object disappearance or illogical transitions, by revising the schedule based on generated frames.

Figure 5: The reflection process enables the model to revise illogical plans, ensuring object and action consistency across video segments.

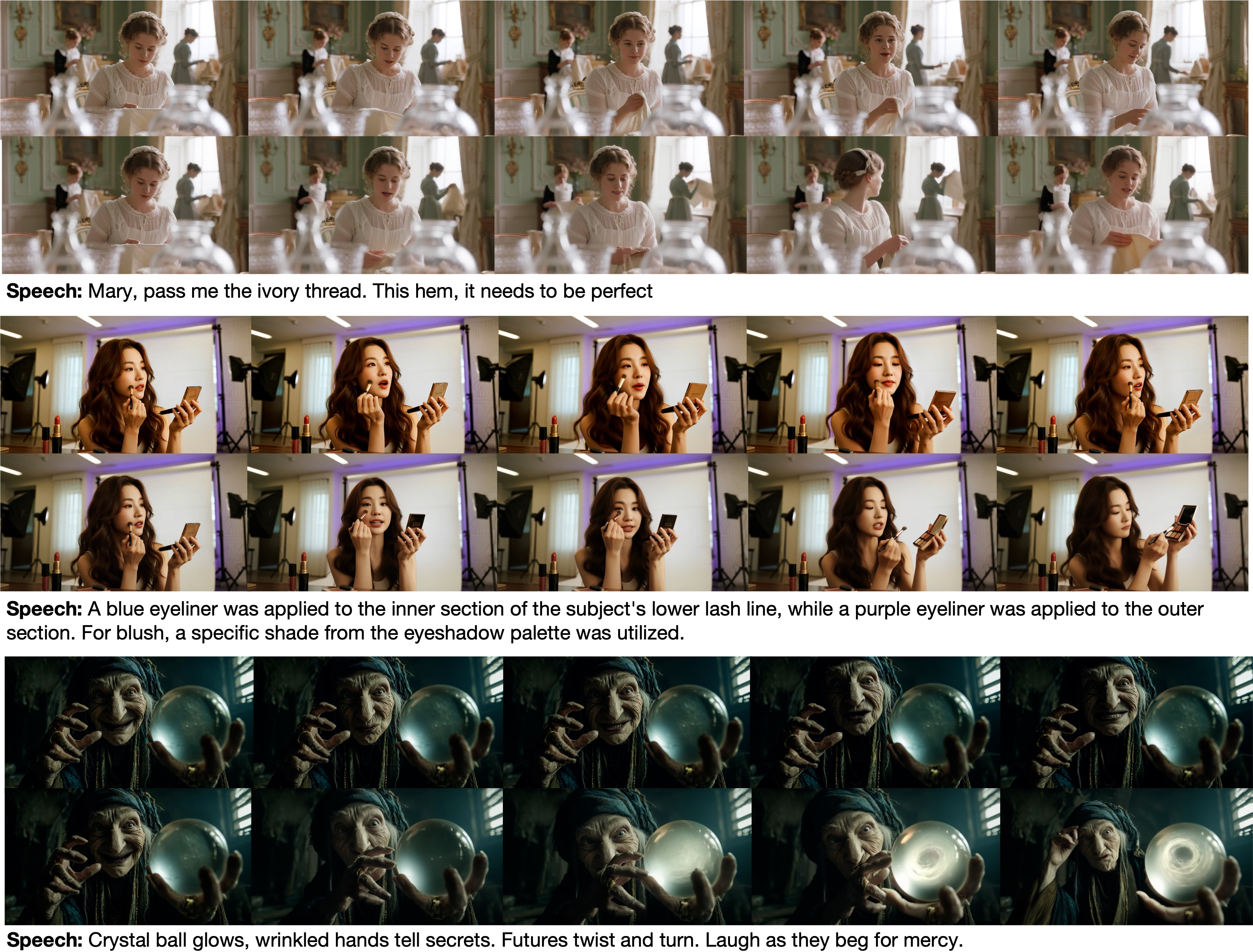

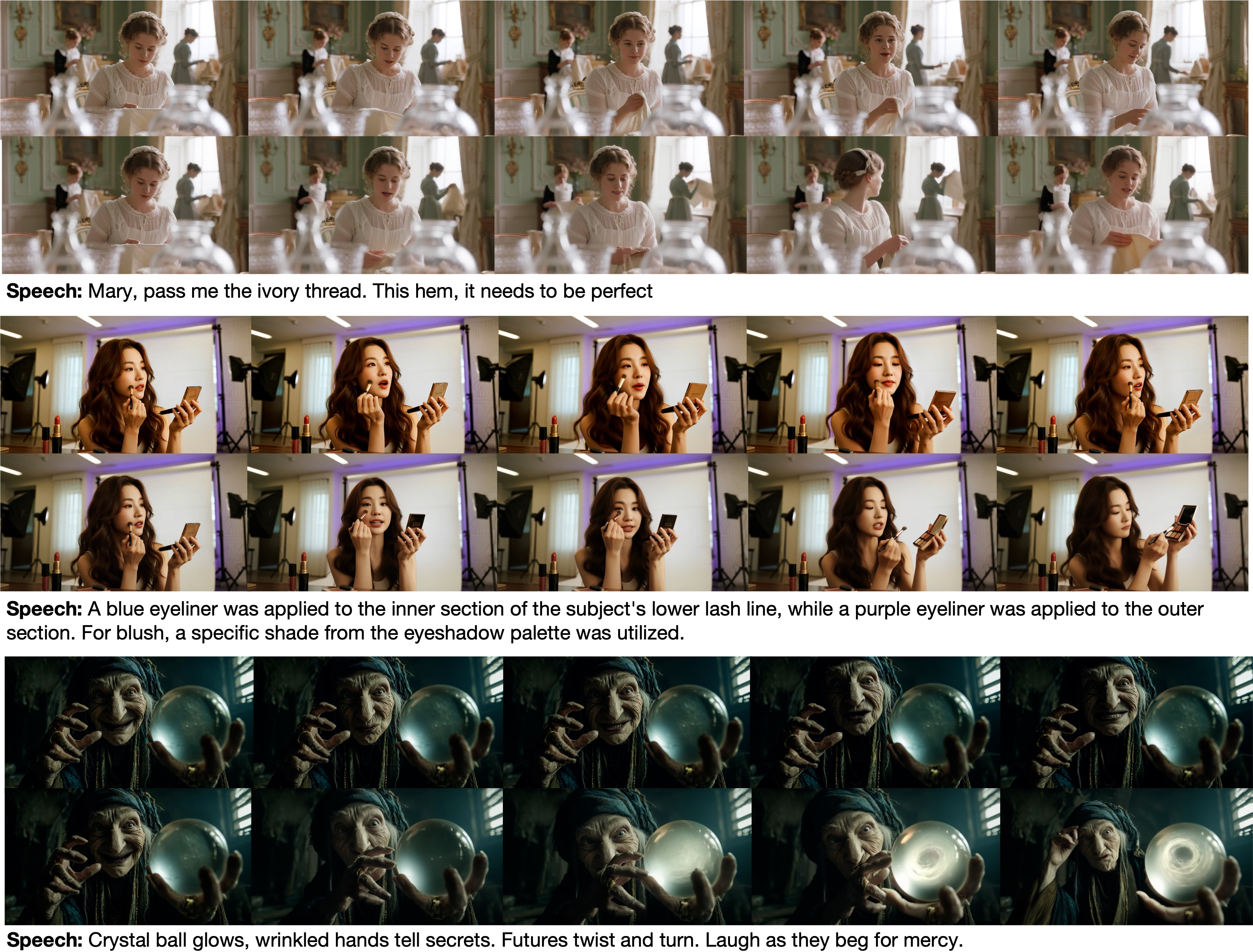

Qualitative comparisons with strong baselines (e.g., OmniHuman-1) reveal that the proposed model produces actions with higher semantic alignment to speech and context, such as correctly depicting described activities and object interactions.

Figure 6: The model generates semantically consistent actions (e.g., makeup application, glowing crystal ball) in alignment with speech content, outperforming prior baselines.

Implications and Future Directions

The explicit modeling of dual-system cognition in avatar generation represents a significant step toward avatars that exhibit both reactive and deliberative behaviors. The integration of MLLM-based planning with diffusion-based rendering enables avatars to act in ways that are not only physically plausible but also contextually and semantically coherent. This paradigm is extensible to multi-agent, non-human, and interactive scenarios, and provides a foundation for future research in agentic video generation, controllable animation, and embodied AI.

Potential future directions include:

- Scaling agentic reasoning to more complex, open-ended tasks and environments.

- Integrating real-time user feedback for interactive avatar control.

- Extending the framework to multi-agent social simulations and collaborative behaviors.

- Investigating the trade-offs between explicit reasoning latents and textual guidance for fine-grained control.

Conclusion

OmniHuman-1.5 introduces a dual-system cognitive simulation framework for video avatar generation, combining MLLM-driven deliberative planning with a multimodal diffusion transformer for reactive rendering. The approach achieves state-of-the-art performance in both objective and subjective evaluations, with strong generalization to diverse avatars and scenarios. The explicit separation and integration of high-level reasoning and low-level synthesis set a new direction for lifelike, semantically coherent digital humans and open new avenues for research in cognitive simulation and agentic generative models.