- The paper introduces Memento, a memory-augmented framework that enables continual adaptation of LLM agents without fine-tuning the underlying LLMs.

- It leverages a memory-based Markov Decision Process with both non-parametric and parametric retrieval to integrate case-based reasoning for dynamic tool use and multi-step planning.

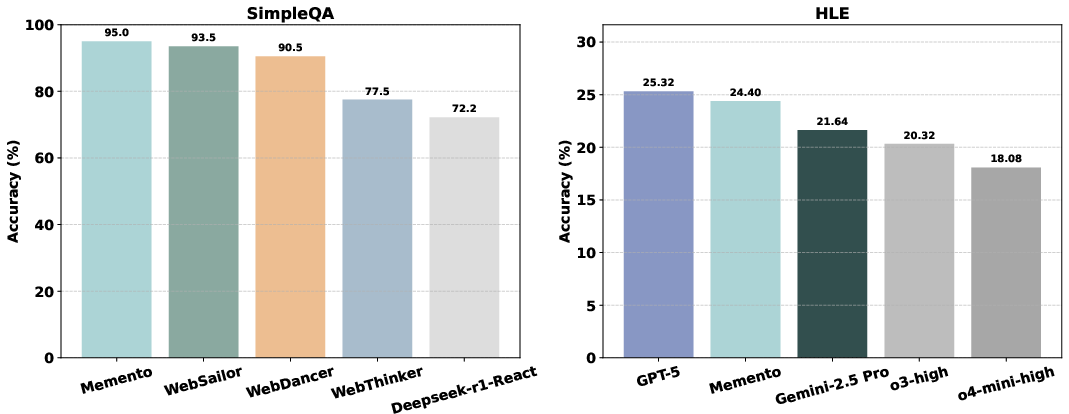

- Empirical results show state-of-the-art performance across benchmarks, with significant improvements in long-horizon research tasks and out-of-distribution generalization.

Memory-Augmented Continual Adaptation for LLM Agents: The Memento Framework

Introduction and Motivation

The paper introduces Memento, a learning paradigm for LLM-based agents that enables continual adaptation without fine-tuning the underlying LLM parameters. The motivation stems from the limitations of current LLM agent paradigms: static, workflow-based systems lack flexibility, while parameter fine-tuning approaches are computationally expensive and impractical for real-time, open-ended adaptation. Memento addresses this by leveraging external, episodic memory and case-based reasoning (CBR), formalized as a memory-augmented Markov Decision Process (M-MDP), to enable agents to learn from experience in a non-parametric, scalable manner.

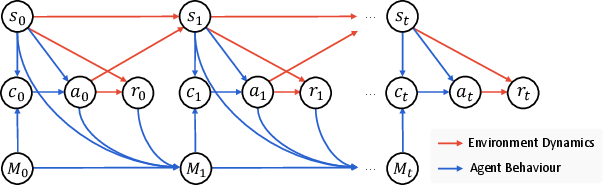

Memory-Based Markov Decision Process and Case-Based Reasoning

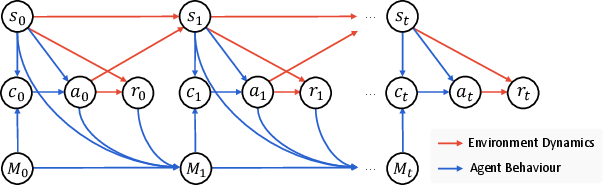

Memento formalizes the agent's decision process as an M-MDP, extending the standard MDP tuple ⟨S,A,P,R,γ⟩ with a memory space M that stores episodic trajectories. At each timestep, the agent retrieves a relevant case from memory using a learned retrieval policy μ, adapts the retrieved solution via the LLM, executes the action, and appends the new experience to memory. This process is governed by a policy:

$$\pi(a \mid s, M) = \sum_{c \in M} \mu(c \mid s, M)\, p_{\mathrm{LLM}}(a \mid s, c)$$

where M is the case bank, c is a case, and $p_{\mathrm{LLM}}$ is the LLM's action likelihood conditioned on the current state and retrieved case.
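As a rough illustration of how this mixture is evaluated, the sketch below marginalizes over cases for a single fixed state. The case identifiers, action names, and probabilities are invented toy values, and the LLM likelihood is stood in for by a lookup table; none of them come from the paper.

```python
from typing import Dict


def mixture_policy(
    retrieval_probs: Dict[str, float],
    action_probs_given_case: Dict[str, Dict[str, float]],
) -> Dict[str, float]:
    """Compute pi(a|s,M) = sum_c mu(c|s,M) * p_LLM(a|s,c) for one fixed state s."""
    policy: Dict[str, float] = {}
    for case_id, mu in retrieval_probs.items():
        for action, p in action_probs_given_case[case_id].items():
            # Each case contributes its action likelihood weighted by mu.
            policy[action] = policy.get(action, 0.0) + mu * p
    return policy


# Hypothetical retrieval distribution mu(c|s,M) over two stored cases.
retrieval_probs = {"case_a": 0.75, "case_b": 0.25}
# Hypothetical stand-in for p_LLM(a|s,c): one action distribution per case.
action_probs = {
    "case_a": {"search": 0.8, "code": 0.2},
    "case_b": {"search": 0.4, "code": 0.6},
}

pi = mixture_policy(retrieval_probs, action_probs)
print(pi)
```

Because both μ and the per-case action distributions sum to one, the resulting mixture is itself a valid distribution over actions.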

Figure 1: A graphical model of memory-based Markov Decision Process.

The retrieval policy μ is optimized via maximum entropy RL (soft Q-learning), encouraging both exploitation of high-utility cases and exploration/diversity in retrieval. The Q-function Q(s,M,c) estimates the expected return of selecting case c in state s with memory M, and the optimal retrieval policy is a softmax over Q-values:

$$\mu^*(c \mid s, M) = \frac{\exp\left(Q^*(s, M, c)/\alpha\right)}{\sum_{c' \in M} \exp\left(Q^*(s, M, c')/\alpha\right)}$$
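A minimal sketch of this softmax over Q-values follows, assuming the Q-values for the current state are already available as a dictionary. The function name, case identifiers, and numeric values are illustrative only.

```python
import math
from typing import Dict


def soft_retrieval_policy(q_values: Dict[str, float], alpha: float) -> Dict[str, float]:
    """Return mu(c|s,M) as a softmax of Q(s,M,c) / alpha over the given cases."""
    if alpha <= 0:
        raise ValueError("alpha must be positive")
    if not q_values:
        raise ValueError("q_values must contain at least one case")
    scaled = {c: q / alpha for c, q in q_values.items()}
    shift = max(scaled.values())  # subtract the max for numerical stability
    weights = {c: math.exp(v - shift) for c, v in scaled.items()}
    total = sum(weights.values())
    return {c: w / total for c, w in weights.items()}


# Hypothetical Q-values for three cases in the current state.
q = {"case_a": 1.0, "case_b": 0.5, "case_c": 0.0}
sharp = soft_retrieval_policy(q, alpha=0.1)   # near-greedy retrieval
flat = soft_retrieval_policy(q, alpha=10.0)   # near-uniform retrieval
print(sharp)
print(flat)
```

With a small α almost all probability mass goes to the highest-valued case, while a large α yields a nearly uniform distribution, which is the exploitation versus exploration trade-off the maximum entropy objective encodes.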

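The temperature α > 0 in the softmax controls how sharply retrieval concentrates on the highest-valued cases: small values approach greedy selection, and large values approach uniform sampling. Because states and cases are high-dimensional natural language, the Q-function is approximated either by kernel-based episodic control or by a neural network, depending on the memory variant.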
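To make the kernel-based option concrete, here is a small sketch of a kernel-weighted Q estimate in the spirit of episodic control. It assumes states are already embedded as numeric vectors and uses a fixed Gaussian kernel; the kernel choice, the bandwidth, and the function names are assumptions made for illustration, not the paper's actual estimator.

```python
import math
from typing import List, Sequence, Tuple


def gaussian_kernel(x: Sequence[float], y: Sequence[float], bandwidth: float = 1.0) -> float:
    """Similarity between two embeddings (assumed kernel, not from the paper)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def kernel_q_estimate(
    state: Sequence[float],
    episodes: List[Tuple[Sequence[float], float]],
    bandwidth: float = 1.0,
    default: float = 0.0,
) -> float:
    """Estimate Q for one case as a kernel-weighted average of past returns.

    `episodes` holds (past_state_embedding, observed_return) pairs recorded
    when this case was used before.
    """
    weights = [gaussian_kernel(state, s, bandwidth) for s, _ in episodes]
    total = sum(weights)
    if total == 0.0:
        return default  # no usable evidence for this case yet
    return sum(w * r for w, (_, r) in zip(weights, episodes)) / total


# Toy history: the case succeeded near [0, 0] and failed near [1, 1].
history = [([0.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
q_near_success = kernel_q_estimate([0.0, 0.0], history)
q_midpoint = kernel_q_estimate([0.5, 0.5], history)
print(q_near_success, q_midpoint)
```

The estimate leans toward the returns observed in states similar to the current one, so a case's value can be inferred without training a separate network.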

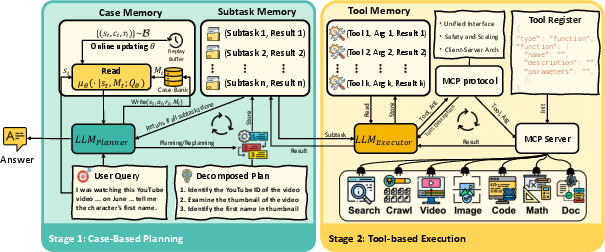

Planner–Executor Architecture and Memory Management

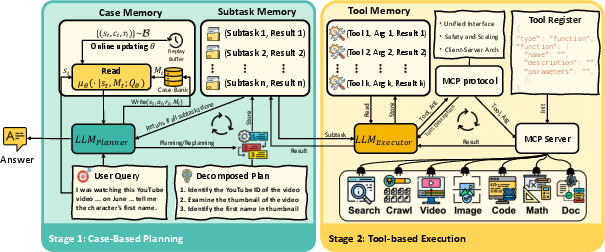

Memento is instantiated as a planner–executor framework. The planner is an LLM-based CBR agent that alternates between retrieving relevant cases from memory (Read) and recording new experiences (Write), with the retrieval policy either similarity-based (non-parametric) or Q-function-based (parametric). The executor is an LLM-based client that invokes external tools via the Model Context Protocol (MCP), enabling compositional tool use and dynamic reasoning.
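The Read and Write operations can be pictured with a small in-memory case bank. The sketch below is hypothetical: the `Case` and `CaseBank` names, the toy two-dimensional embeddings, and the use of cosine similarity for the similarity-based Read are assumptions for illustration, and a real system would embed states with a text encoder. The default of four retrieved cases mirrors the K=4 setting the summary reports as optimal.

```python
import math
from dataclasses import dataclass, field
from typing import List


@dataclass
class Case:
    state_embedding: List[float]  # vector representation of the task state
    plan: str                     # plan that was used for this state
    reward: float                 # outcome signal, e.g. 1.0 success, 0.0 failure


def cosine_similarity(u: List[float], v: List[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


@dataclass
class CaseBank:
    cases: List[Case] = field(default_factory=list)

    def write(self, case: Case) -> None:
        """Write: append a finished experience to memory."""
        self.cases.append(case)

    def read(self, query: List[float], k: int = 4) -> List[Case]:
        """Read: return the k stored cases most similar to the query state."""
        ranked = sorted(
            self.cases,
            key=lambda c: cosine_similarity(query, c.state_embedding),
            reverse=True,
        )
        return ranked[:k]


bank = CaseBank()
bank.write(Case([1.0, 0.0], "search then summarize", 1.0))
bank.write(Case([0.0, 1.0], "run code", 0.0))
bank.write(Case([0.9, 0.1], "search then crawl", 1.0))

retrieved = bank.read([1.0, 0.05], k=2)
print([c.plan for c in retrieved])
```

The retrieved plans would then be placed in the planner's prompt as worked examples, and the outcome of the new episode written back with `write`.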

Figure 2: The architecture of Memento with parametric memory, alternating between Case-Based Planning and Tool-Based Execution.

The memory module supports both non-parametric (vectorized similarity search) and parametric (Q-function) retrieval. In the non-parametric setting, retrieval is based on cosine similarity between the current state and stored cases. In the parametric setting, the Q-function is trained online (using cross-entropy loss for binary rewards) to predict the utility of each case, and retrieval is performed by selecting the top-K cases with the highest Q-values.
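The parametric variant can be sketched with a very small stand-in model. The example below assumes each (state, case) pair is summarized by a numeric feature vector and uses a logistic model trained by stochastic gradient descent on binary cross-entropy; the model class, learning rate, and feature vectors are illustrative assumptions, whereas the paper's Q-function may be a neural network.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class LogisticQ:
    """Toy Q-function: predicts the probability that a case leads to reward 1."""

    def __init__(self, dim: int, lr: float = 0.5) -> None:
        self.w = [0.0] * dim
        self.b = 0.0
        self.lr = lr

    def predict(self, features: List[float]) -> float:
        return sigmoid(sum(w * x for w, x in zip(self.w, features)) + self.b)

    def update(self, features: List[float], reward: float) -> float:
        """One online SGD step on binary cross-entropy; returns the pre-update loss."""
        q = self.predict(features)
        eps = 1e-12
        loss = -(reward * math.log(q + eps) + (1.0 - reward) * math.log(1.0 - q + eps))
        grad = q - reward  # gradient of the loss with respect to the logit
        self.w = [w - self.lr * grad * x for w, x in zip(self.w, features)]
        self.b -= self.lr * grad
        return loss


def top_k_by_q(model: LogisticQ, candidates: List[List[float]], k: int) -> List[int]:
    """Return indices of the k candidate cases with the highest predicted Q-value."""
    order = sorted(range(len(candidates)), key=lambda i: model.predict(candidates[i]), reverse=True)
    return order[:k]


model = LogisticQ(dim=2)
helpful, unhelpful = [1.0, 0.0], [0.0, 1.0]  # hypothetical (state, case) features
first_loss = model.update(helpful, 1.0)
model.update(unhelpful, 0.0)
for _ in range(200):
    last_loss = model.update(helpful, 1.0)
    model.update(unhelpful, 0.0)

best = top_k_by_q(model, [unhelpful, helpful], k=1)
print(first_loss, last_loss, best)
```

Since rewards are binary, the predicted Q-value can be read as the estimated probability that retrieving the case leads to a successful episode, which is what makes cross-entropy a natural loss here.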

Memento is designed for deep research tasks requiring long-horizon planning, multi-step tool use, and reasoning over heterogeneous data. The MCP-based executor supports a suite of tools for web search, crawling, multimodal document processing, code execution, and mathematical computation. This enables the agent to acquire, process, and reason over external information in real time, supporting complex research workflows.

Empirical Evaluation

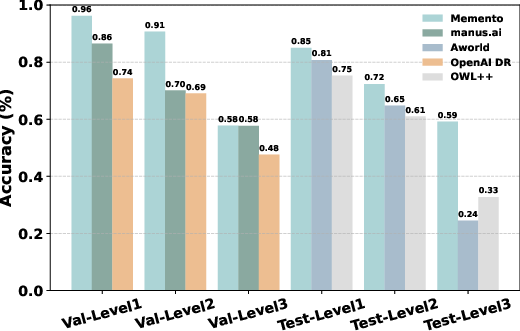

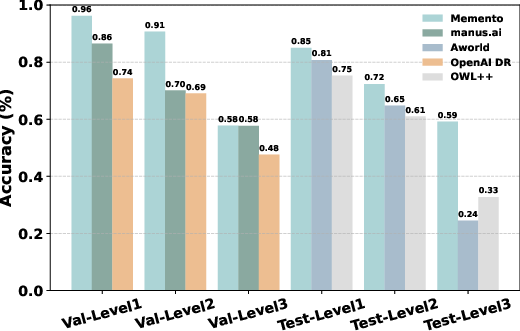

Memento is evaluated on four benchmarks: GAIA (long-horizon tool use), DeepResearcher (real-time web research), SimpleQA (factual precision), and HLE (long-tail academic reasoning). On GAIA, the agent achieves 87.88% Pass@3 on validation and 79.40% on the test set, outperforming all open-source agent frameworks.

Figure 3: Memento vs. Baselines on GAIA validation and test sets.

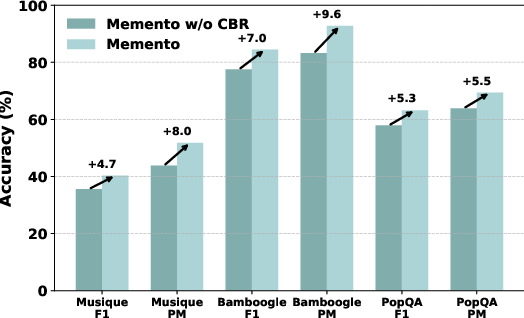

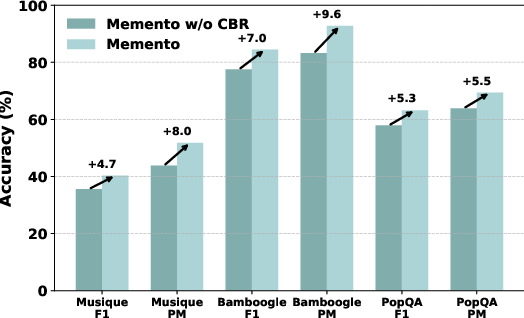

Ablation studies show that both parametric and non-parametric CBR yield consistent, additive improvements across all benchmarks. Notably, case-based memory provides 4.7% to 9.6% absolute gains on out-of-distribution tasks, highlighting its role in generalization.

Continual Learning and Memory Efficiency

Memento demonstrates continual learning: as the case bank grows, performance improves over successive iterations and converges rapidly after a few of them because the environment is finite. The optimal number of retrieved cases is small (K=4); larger K introduces noise and computational overhead without further gains.

The system is efficient in terms of output token usage, with most computational cost arising from integrating multi-step tool outputs as task complexity increases. The architecture is robust to hallucination and maintains concise, structured planning, with fast planners outperforming slow, deliberative ones in modular settings.

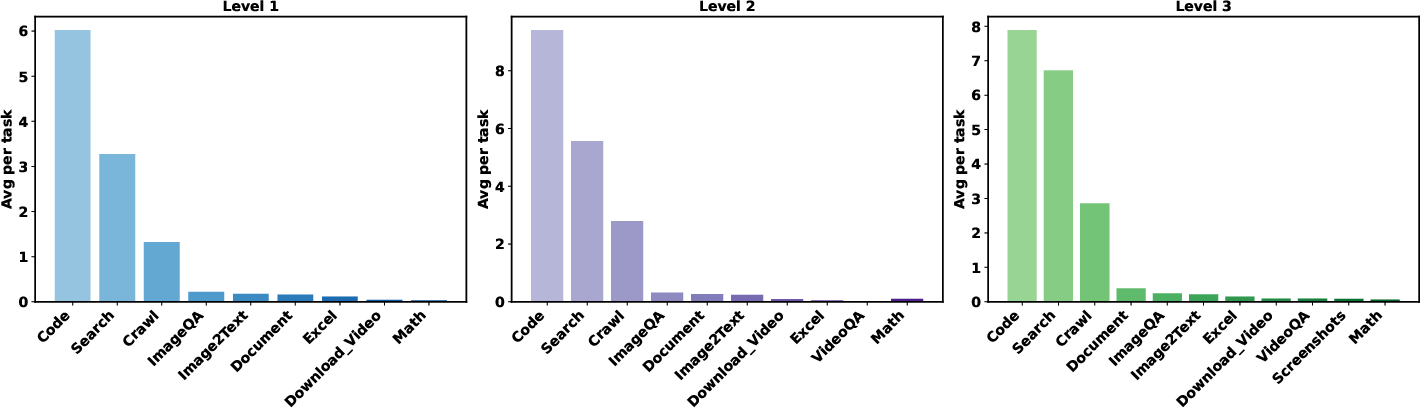

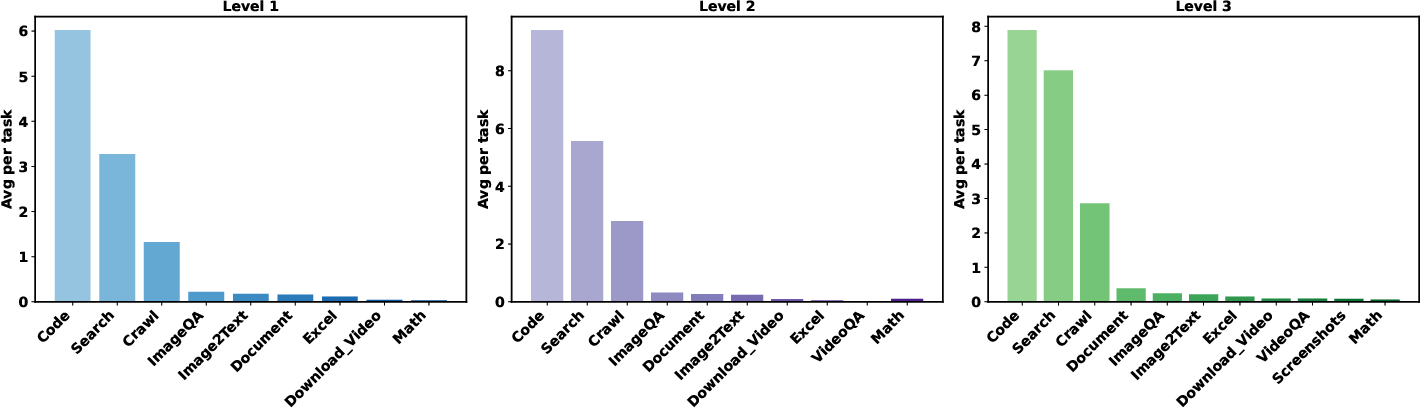

Figure 5: The average number of each task type per level, highlighting the dominance of code, search, and crawl tasks as difficulty level increases.

Theoretical and Practical Implications

Memento provides a principled framework for continual, real-time adaptation of LLM agents without gradient updates. By decoupling agent learning from LLM parameter updates, it enables scalable, low-cost deployment in open-ended environments. The memory-augmented MDP formalism and CBR policy optimization bridge cognitive science and RL, offering a pathway for agents to accumulate, reuse, and generalize from experience.

The strong empirical results, especially on OOD tasks, challenge the assumption that parameter fine-tuning is necessary for agent adaptation. Instead, memory-based approaches can yield comparable or superior performance with greater efficiency and flexibility.

Future Directions

Potential extensions include:

- Scaling to larger, more diverse memory banks with advanced curation and forgetting mechanisms to mitigate retrieval swamping.

- Integrating richer forms of memory (e.g., semantic, procedural) and more sophisticated retrieval policies.

- Applying the framework to multi-agent and collaborative research scenarios.

- Exploring hybrid approaches that combine memory-based adaptation with lightweight parameter-efficient fine-tuning.

Conclusion

Memento demonstrates that memory-augmented, case-based reasoning enables LLM agents to achieve continual, real-time adaptation without fine-tuning LLM parameters. The framework achieves state-of-the-art results across multiple challenging benchmarks, with strong generalization and efficiency. These findings suggest that external memory and CBR are critical components for scalable, generalist LLM agents, and motivate further research into memory-based agent architectures for open-ended AI.