- The paper's main contribution is combining LLMs with Bayesian Optimization to enable designer-guided parameter selection through natural language interaction.

- It demonstrates higher user agency than fully automated (BO-led) optimization and lower cognitive load than explicit constraint-based cooperation.

- Empirical studies show competitive optimization performance and a majority preference for the natural language approach in complex, high-dimensional design tasks.

Cooperative Design Optimization through Natural Language Interaction: An Expert Analysis

Introduction and Motivation

The paper presents a novel framework for cooperative design optimization that leverages natural language interaction between designers and an optimization system. The approach integrates LLMs with Bayesian Optimization (BO), enabling designers to guide the optimization process and receive interpretable explanations for system-generated parameter suggestions. This method addresses the limitations of fully system-led optimization, which often restricts designer agency and fails to incorporate human intuition, and also improves upon prior cooperative approaches that require explicit, often cognitively demanding, parameter-space constraints.

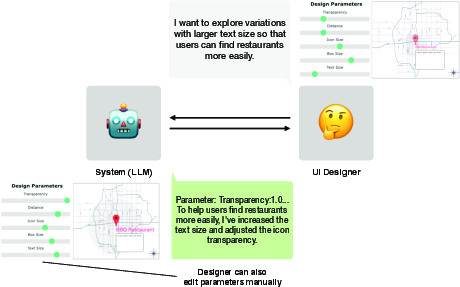

System Architecture and Interaction Paradigm

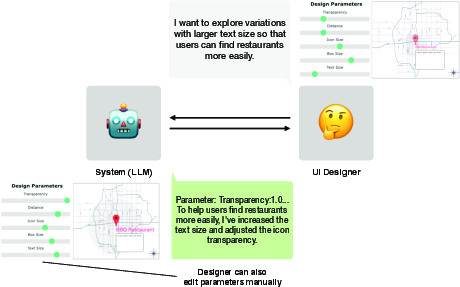

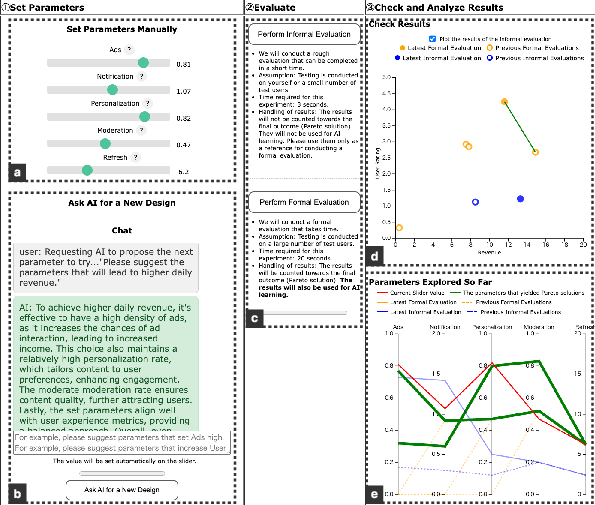

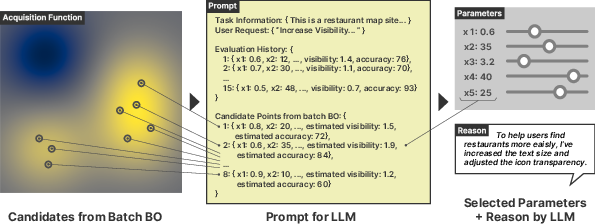

The proposed system operates in iterative cycles where designers can intervene using natural language requests. The system samples q candidate parameter sets via batch BO, then prompts the LLM to select the candidate best aligned with the designer's request and to provide a natural language rationale for its choice. This interaction paradigm is illustrated in a typical scenario where a UI designer optimizes a restaurant map design by providing instructions and receiving parameter suggestions and explanations.
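One iteration of this cycle can be sketched roughly as follows. This is a minimal illustration and not the paper's implementation: `propose_candidates` is a random placeholder for batch BO, `stub_select` stands in for the LLM call, and `toy_evaluate` stands in for user testing. All names here are hypothetical.

```python
import random
from dataclasses import dataclass, field
from typing import List, Tuple

Params = Tuple[float, ...]
Objectives = Tuple[float, ...]


@dataclass
class Selection:
    index: int       # position of the chosen candidate in the batch
    rationale: str   # explanation shown to the designer


@dataclass
class Session:
    history: List[Tuple[Params, Objectives]] = field(default_factory=list)


def propose_candidates(q: int, n_params: int, rng: random.Random) -> List[Params]:
    """Placeholder for batch BO: draws q uniform points in [0, 1]^n."""
    return [tuple(rng.random() for _ in range(n_params)) for _ in range(q)]


def cooperative_step(session, request, select, evaluate, q=5, n_params=3, rng=None):
    """Run one cycle: propose q candidates, select one, evaluate it, record it."""
    rng = rng if rng is not None else random.Random(0)
    candidates = propose_candidates(q, n_params, rng)
    choice = select(candidates, request)  # assumed LLM stand-in
    if not 0 <= choice.index < len(candidates):
        raise ValueError("selector returned an out-of-range index")
    params = candidates[choice.index]
    session.history.append((params, evaluate(params)))
    return params, choice.rationale


def stub_select(candidates, request):
    """Stand-in for the LLM: picks the candidate with the largest first parameter."""
    idx = max(range(len(candidates)), key=lambda i: candidates[i][0])
    return Selection(idx, f"Candidate {idx} has the largest first parameter (request: {request!r}).")


def toy_evaluate(params):
    """Toy stand-in for user testing: returns two competing objectives."""
    return (sum(params), -sum(p * p for p in params))
```

A session would call `cooperative_step(Session(), "make it faster", stub_select, toy_evaluate)` once per designer request; the designer remains free to edit the returned parameters manually before evaluation.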

Figure 1: A typical interaction scenario of cooperative design optimization with LLMs, showing designer instructions, system proposals, and editable parameter sliders.

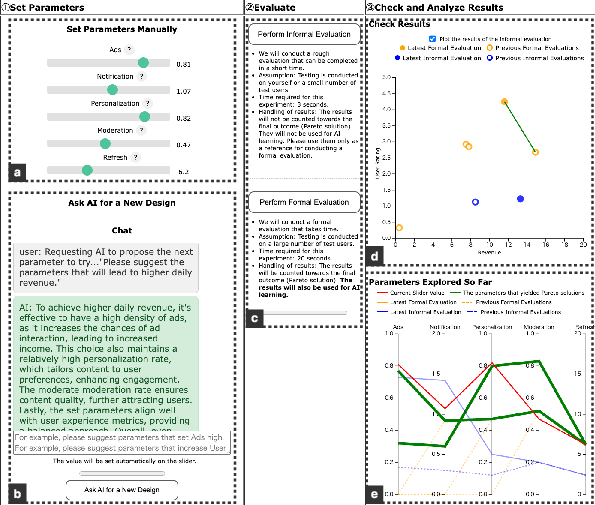

The system interface supports both manual parameter adjustment and natural language interaction, with real-time visualization of parameter history and objective values. Designers can alternate between direct manipulation and AI-guided exploration, fostering a flexible, collaborative workflow.

Figure 2: The design interface for the Cooperative: Natural Language condition, featuring sliders, natural language input, evaluation controls, and visualizations of objectives and parameter history.

Technical Implementation: LLM-Guided Bayesian Optimization

The optimization problem is multi-objective, seeking to maximize m performance metrics (e.g., speed, accuracy) over n design parameters. The system iteratively selects parameter sets, conducts user testing (simulated via synthetic functions in the paper), and updates the surrogate models.
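Because several objectives are maximized at once, there is generally no single best design but a set of non-dominated trade-offs (the Pareto front). The sketch below shows one simple way to filter evaluated designs down to that set; the function names and the example values are illustrative assumptions, not taken from the paper.

```python
from typing import List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is at least as good as b in every objective and strictly
    better in at least one (all objectives are maximized)."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(points: List[Sequence[float]]) -> List[Sequence[float]]:
    """Keep only the points that no other point dominates (O(k^2) scan)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Illustrative objective values, e.g. (speed, accuracy) for five designs.
evaluated = [(1, 5), (2, 4), (3, 3), (2, 2), (1, 1)]
front = pareto_front(evaluated)
print(front)  # [(1, 5), (2, 4), (3, 3)]
```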

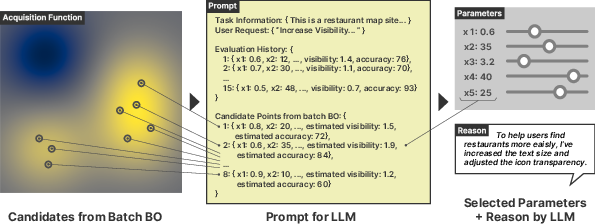

Batch BO and Candidate Generation

A Gaussian Process (GP) surrogate is trained for each objective. The acquisition function is qLogNEHVI (the log-transformed batch form of Noisy Expected Hypervolume Improvement), which scores a batch of q candidates jointly and therefore favors diversity among them. In each iteration, q candidates are generated, and the LLM selects one based on the designer's request and each candidate's predicted performance and uncertainty.

Figure 3: Overview of the system procedure, showing batch candidate generation, LLM-guided selection, and reasoning.

LLM Prompt Engineering

The LLM prompt includes task context, parameter and objective ranges, candidate statistics (mean, variance, acquisition value), evaluation history, and the designer's request. The LLM outputs the index of the selected candidate and a rationale, which is displayed to the designer. This prompt structure enables the LLM to balance exploitation and exploration, incorporate user intent, and communicate uncertainty.
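The prompt assembly and output handling described above might look roughly like the sketch below. The prompt layout, the dictionary keys (`params`, `mean`, `variance`, `acq`), and the JSON reply format are assumptions made for illustration; the source only states which pieces of information the prompt contains and that the LLM returns an index and a rationale.

```python
import json
from typing import Dict, List, Sequence, Tuple


def build_prompt(task: str, request: str, candidates: List[Dict], history: Sequence[Tuple]) -> str:
    """Assemble a plain-text prompt; this layout is hypothetical."""
    lines = [f"Task: {task}", f"Designer request: {request}", "Evaluation history:"]
    if history:
        lines += [f"  params={p} -> objectives={o}" for p, o in history]
    else:
        lines.append("  (no evaluations yet)")
    lines.append("Candidates:")
    for i, c in enumerate(candidates):
        lines.append(
            f"  Candidate {i}: params={c['params']}, mean={c['mean']}, "
            f"variance={c['variance']}, acquisition={c['acq']}"
        )
    lines.append('Reply with JSON: {"index": <int>, "rationale": <string>}')
    return "\n".join(lines)


def parse_response(text: str, n_candidates: int) -> Tuple[int, str]:
    """Validate the model's reply before showing it to the designer."""
    data = json.loads(text)
    index, rationale = data["index"], data["rationale"]
    if isinstance(index, bool) or not isinstance(index, int):
        raise ValueError("index must be an integer")
    if not 0 <= index < n_candidates:
        raise ValueError(f"index {index} is outside 0..{n_candidates - 1}")
    if not isinstance(rationale, str) or not rationale.strip():
        raise ValueError("rationale must be a non-empty string")
    return index, rationale
```

Validating the index before use matters because a language model can return an out-of-range or malformed answer; a system of this kind would presumably retry or fall back to the top acquisition value in that case, although the source does not say how this is handled.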


Figure 4: Prompt for candidate selection and reasoning, with context variables and candidate statistics.

Empirical Evaluation: User Studies

Study 1: Levels of Control

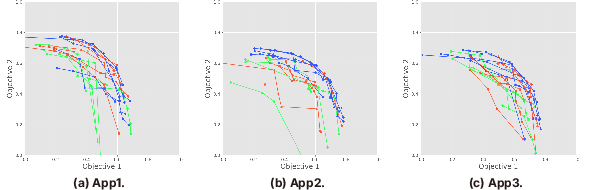

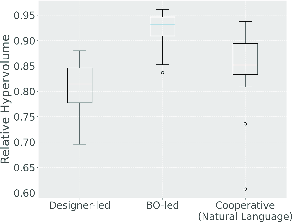

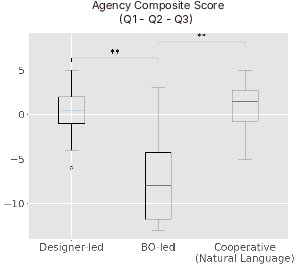

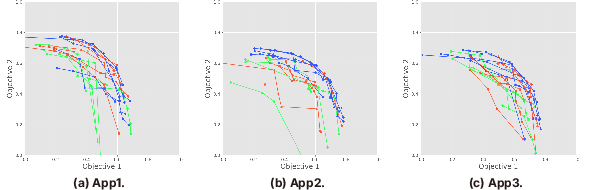

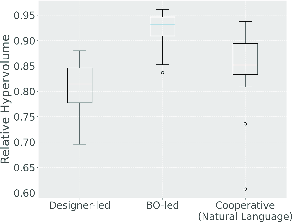

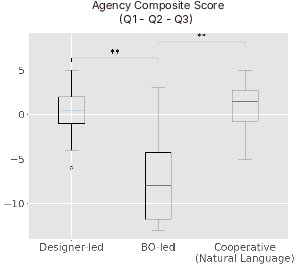

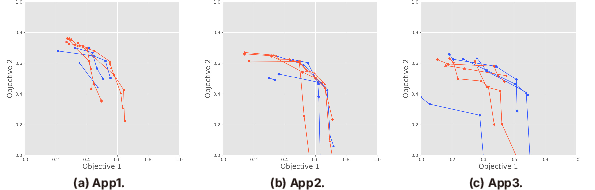

Three conditions were compared: Designer-led (manual), BO-led (system-led), and Cooperative: Natural Language (proposed). Participants optimized web app designs using simulated user testing.

- Optimization Performance: BO-led achieved the highest relative hypervolume, but Cooperative matched or exceeded Designer-led performance in most cases.

- Agency: Cooperative and Designer-led conditions yielded significantly higher agency scores than BO-led.

- Preference: A majority of participants preferred the Cooperative condition.
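To make the performance metric concrete, the sketch below computes a two-objective hypervolume (the area dominated by a set of points relative to a reference point) and a relative hypervolume as a ratio against a reference front. The normalization used here is an assumption; this summary does not state exactly how the paper defines relative hypervolume, and the numbers are illustrative only.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def hypervolume_2d(points: Sequence[Point], ref: Point) -> float:
    """Area dominated by `points` above `ref`, with both objectives maximized."""
    # Points that do not beat the reference in both objectives add no area.
    pts = [(x, y) for x, y in points if x > ref[0] and y > ref[1]]
    # Sweep from the largest first objective downward, adding each new strip.
    pts.sort(key=lambda p: (-p[0], -p[1]))
    area, best_y = 0.0, ref[1]
    for x, y in pts:
        if y > best_y:
            area += (x - ref[0]) * (y - best_y)
            best_y = y
    return area


def relative_hypervolume(points: List[Point], reference_front: List[Point], ref: Point) -> float:
    """Hypervolume of `points` divided by that of a reference front (assumed definition)."""
    denom = hypervolume_2d(reference_front, ref)
    if denom <= 0:
        raise ValueError("reference front has zero hypervolume")
    return hypervolume_2d(points, ref) / denom


best_known = [(3, 1), (2, 2), (1, 3)]   # illustrative reference front
obtained = [(2, 1), (1, 2)]             # illustrative participant result
print(hypervolume_2d(best_known, (0, 0)))                 # 6.0
print(relative_hypervolume(obtained, best_known, (0, 0)))  # 0.5
```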

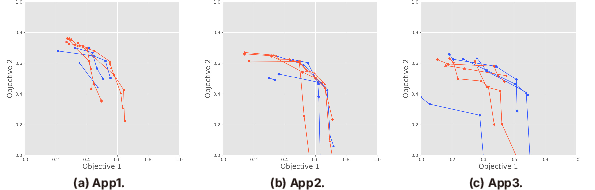

Figure 5: Visualization of obtained Pareto fronts in Study 1, showing performance across conditions.

Figure 6: Boxplots of the relative hypervolume for the three conditions, quantifying optimization performance.

Figure 7: Boxplot of agency scores, demonstrating higher agency in Cooperative and Designer-led conditions.

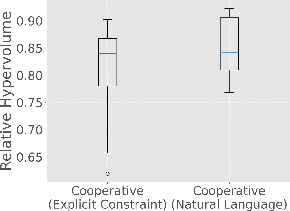

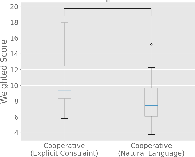

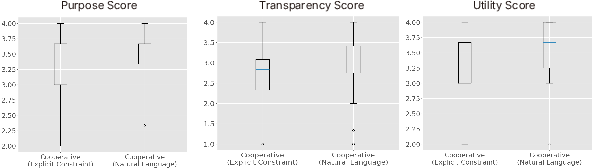

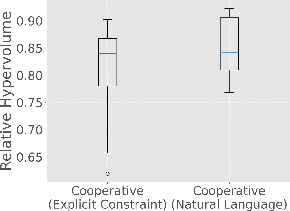

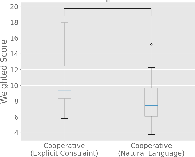

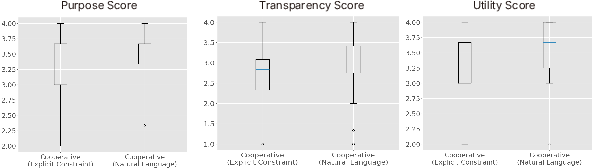

Study 2: Comparison with Explicit Constraint-Based Cooperation

The Cooperative: Natural Language approach was compared to a prior cooperative method (Explicit Constraint) that required designers to specify forbidden regions in parameter space.

- Cognitive Load: Cooperative: Natural Language resulted in significantly lower NASA-TLX scores, indicating reduced mental demand.

- Trust: No significant difference in trust metrics, though qualitative feedback suggested nuanced perceptions of system transparency.

- Agency: Explicit Constraint condition yielded higher agency, likely due to more tangible control over parameter space.

- Preference: Most participants preferred the natural language approach.

Figure 8: Visualization of Pareto fronts in Study 2, showing comparable optimization outcomes for both cooperative methods.

Figure 9: Boxplots of relative hypervolume for Explicit Constraint and Natural Language conditions.

Figure 10: Boxplots of NASA-TLX scores, indicating lower cognitive load for Natural Language interaction.

Figure 11: Boxplots of trust dimensions from the Multidimensional Trust Questionnaire.

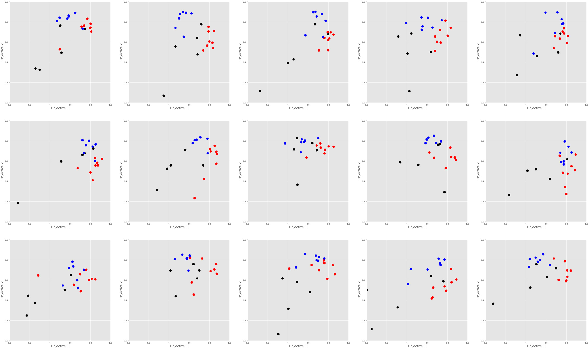

Technical Validation and Request Analysis

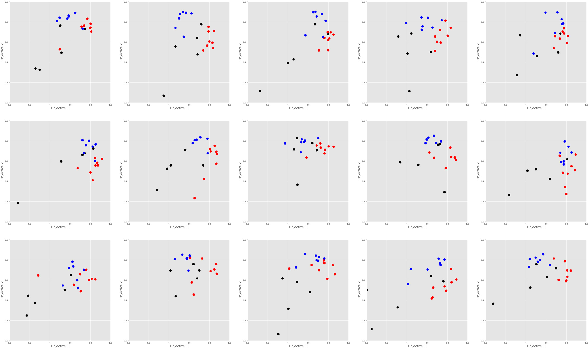

A technical assessment demonstrated that alternating natural language requests (e.g., "increase Objective 1" vs. "increase Objective 2") successfully steered the optimization toward distinct regions of the search space, as evidenced by clustering and centroid separation in the parameter space.
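Centroid separation of this kind can be checked with a few lines of code. The sketch below compares the distance between the centroids of two groups of selected parameter sets against the spread within each group; the data points are made up for illustration, and the paper's exact clustering analysis may differ.

```python
import math
from typing import List, Sequence, Tuple


def centroid(points: List[Sequence[float]]) -> Tuple[float, ...]:
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


def centroid_distance(a: List[Sequence[float]], b: List[Sequence[float]]) -> float:
    """Euclidean distance between the centroids of two groups."""
    return math.dist(centroid(a), centroid(b))


def mean_spread(points: List[Sequence[float]]) -> float:
    """Average distance from each point to its group's centroid."""
    c = centroid(points)
    return sum(math.dist(p, c) for p in points) / len(points)


# Hypothetical parameter sets chosen under two different requests.
objective1_runs = [(0.1, 0.8), (0.2, 0.9), (0.3, 0.7)]
objective2_runs = [(0.8, 0.1), (0.9, 0.2), (0.7, 0.3)]

separation = centroid_distance(objective1_runs, objective2_runs)
# Steering is visible when the separation clearly exceeds the within-group spread.
print(separation > mean_spread(objective1_runs) + mean_spread(objective2_runs))
```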

Figure 12: Visualization of optimization results for different objective-focused requests, showing effective steering of the search.

Analysis of 187 designer requests revealed that 90.9% focused on outcomes ("what" to achieve) rather than specific parameter manipulations ("how"), and 20.6% expressed complex constraints or trade-offs not easily captured by GUIs. This underscores the expressive power and flexibility of natural language interaction.

Discussion and Implications

Mitigating Design Fixation

The system's ability to provide unexpected yet feasible suggestions helps mitigate design fixation, encouraging broader exploration while preserving designer agency. Rationales that contradict a designer's expectations prompt them to reconsider assumptions and expand their search.

Explanations and Acceptance

Natural language explanations facilitate acceptance of system suggestions, support planning, and reassure designers when system reasoning aligns with their own. However, explanations must be concise and contextually relevant to avoid cognitive overload.

Trade-offs and Limitations

There is a trade-off between candidate diversity, which grows with the batch size q, and interaction efficiency. Larger batches make it more likely that some candidate matches a specific request, but they increase computational overhead. Early in the process, selection accuracy is limited by surrogate model uncertainty; more initial seed points or better uncertainty communication could improve responsiveness.

Applicability and Generalization

The method is well suited to real-world design tasks, especially in high-dimensional or complex spaces where manual constraint specification is impractical. The hybrid approach, which combines BO's statistical guidance with the LLM's ability to adapt to each request, may generalize to unfamiliar domains without prior domain knowledge, although this remains to be validated.

Future Directions

- Improved Explanation Generation: Layered explanations (summary + detail) and explicit hypothesis formulation.

- Uncertainty Communication: Adaptive explanations based on predictive confidence.

- Scalability: Application to higher-dimensional design spaces and real-world iterative testing.

- Generalization: Validation in domains outside the LLM's training data.

Conclusion

The integration of LLMs with BO for cooperative design optimization via natural language interaction offers a flexible, interpretable, and cognitively efficient framework for human-AI collaboration. Empirical results show higher user agency than BO-led optimization, optimization performance that matches or exceeds manual design in most cases, and lower cognitive load than explicit constraint-based cooperation. The approach is particularly advantageous in complex, high-dimensional design tasks and holds promise for broader application and future refinement.