Understanding Bayesian Optimization and LLMs in Natural Language Preference Elicitation

Introduction to the Technique

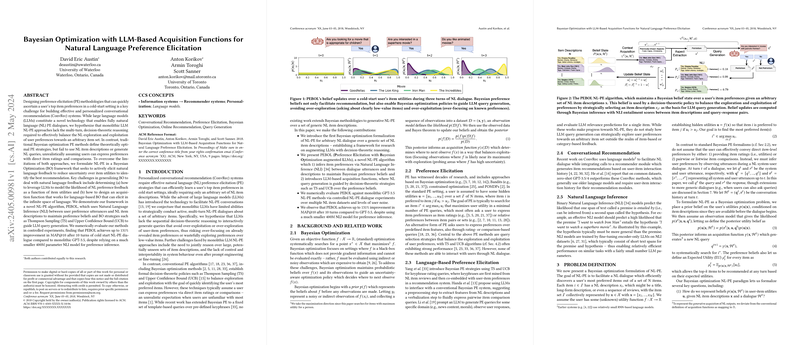

The paper under discussion combines Bayesian Optimization (BO) with large language models (LLMs) to improve Preference Elicitation (PE) in systems that engage users through natural language. The approach targets conversational recommendation systems operating in "cold-start" settings, where nothing is known about the user's preferences at the outset.

BO is well suited to discovering user preferences because it balances exploration (learning new information) against exploitation (using what is already known). On its own, however, it cannot understand or generate natural language dialogue. LLMs, conversely, handle language well but lack the strategic decision-making that BO provides. The proposed method, named PEBOL (Preference Elicitation with Bayesian Optimization augmented LLMs), combines these strengths for conversational recommendation.

Key Components of the Approach

Bayesian Optimization Framework

PEBOL applies BO to PE by maintaining a probabilistic model of user preferences in the form of Bayesian "beliefs". These beliefs about item utilities are updated after each piece of user feedback, which lets the system choose what to ask about next based on both its current estimates and its remaining uncertainty.
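
The belief update described above can be illustrated with a minimal sketch. It assumes each item's utility is modeled as a Beta distribution and that feedback arrives as a number between 0 (dislike) and 1 (like). Both are illustrative assumptions rather than details stated in this summary, and the names `BetaBelief` and `update` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BetaBelief:
    """Belief about one item's utility, modeled (by assumption) as Beta(alpha, beta)."""

    alpha: float = 1.0  # pseudo-count of positive evidence; 1.0 gives a uniform prior
    beta: float = 1.0   # pseudo-count of negative evidence

    def update(self, liked: float) -> None:
        """Fold in one observation; `liked` lies in [0, 1] and may be fractional."""
        if not 0.0 <= liked <= 1.0:
            raise ValueError("liked must be in [0, 1]")
        self.alpha += liked
        self.beta += 1.0 - liked

    @property
    def mean(self) -> float:
        """Current point estimate of the item's utility."""
        return self.alpha / (self.alpha + self.beta)

    @property
    def variance(self) -> float:
        """Remaining uncertainty about the item's utility."""
        n = self.alpha + self.beta
        return self.alpha * self.beta / (n * n * (n + 1.0))


# Cold start: every item begins with the same uninformative belief.
beliefs = {"item_a": BetaBelief(), "item_b": BetaBelief()}

# Hypothetical feedback: the user responds positively about item_a
# and negatively about item_b.
beliefs["item_a"].update(1.0)
beliefs["item_b"].update(0.0)
```

After one positive response, the mean for `item_a` rises from 0.5 to 2/3 and its variance shrinks, which is the sense in which the system tracks both "current knowledge and uncertainties".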

Natural Language Handling via LLMs

PEBOL uses LLMs to generate natural language queries and to interpret the user's responses. The generated queries prompt users to disclose their preferences in a conversational format, making the interaction more user-friendly and less rigid than traditional PE approaches.

Observations from Experiments

The system was tested against GPT-3.5 in various settings, such as different datasets and levels of user-response noise. Here are some notable findings:

- Effectiveness in Cold-Start Scenarios: PEBOL showed large gains on performance measures such as MAP@10, with improvements of up to 131% over GPT-3.5 alone early in the interaction (after 10 dialogue turns).

- Robustness to Noise: PEBOL maintained superior performance even when users' responses included noise, illustrating its effectiveness in less-than-ideal real-world scenarios.

- Comparisons of Different Strategies: Several acquisition strategies were explored within PEBOL, including Thompson Sampling and Upper Confidence Bound, to show how the choice of strategy affects the exploration-exploitation balance.
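
To make the two acquisition strategies named in the last finding concrete, here is a small sketch of how each might pick the next item to ask about. It assumes beliefs are stored as Beta parameters `(alpha, beta)` per item and that the Upper Confidence Bound score is the posterior mean plus `c` standard deviations. These choices, and the function names `thompson_select` and `ucb_select`, are illustrative assumptions, not the paper's exact formulation.

```python
import math
import random


def thompson_select(beliefs, rng):
    """Thompson Sampling: draw one utility sample per item, pick the largest draw."""
    samples = {item: rng.betavariate(a, b) for item, (a, b) in beliefs.items()}
    return max(samples, key=samples.get)


def ucb_select(beliefs, c=1.0):
    """Upper Confidence Bound: pick the item maximizing mean + c * std."""

    def score(params):
        a, b = params
        n = a + b
        mean = a / n
        std = math.sqrt(a * b / (n * n * (n + 1.0)))
        return mean + c * std

    return max(beliefs, key=lambda item: score(beliefs[item]))


# Hypothetical beliefs: item_a looks good and is well explored,
# item_b is unexplored, item_c looks poor.
beliefs = {
    "item_a": (8.0, 2.0),
    "item_b": (1.0, 1.0),
    "item_c": (2.0, 8.0),
}

greedy_choice = ucb_select(beliefs, c=0.0)       # pure exploitation
exploratory_choice = ucb_select(beliefs, c=2.0)  # uncertainty bonus dominates
sampled_choice = thompson_select(beliefs, random.Random(0))
```

With `c=0.0` the rule simply exploits the highest mean (`item_a`), while with `c=2.0` the larger uncertainty of the unexplored `item_b` outweighs its lower mean. Thompson Sampling reaches a similar balance through randomness: uncertain items occasionally produce high samples and get selected.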

Implications and Future Directions

Practical Applications

For businesses, this research points toward conversational recommendation systems that work well without extensive initial data about user preferences. This can be particularly valuable in e-commerce, content recommendation, and any service that benefits from personalized user engagement.

Theoretical Contributions

Theoretically, this work broadens the understanding of how decision-theoretic reasoning can be integrated with LLMs, a combination not often explored in machine learning. It lays groundwork for future studies on how different AI domains can be blended for enhanced user interaction.

Speculations on Future Developments

Looking ahead, the successful integration of BO and LLMs suggests extensions that incorporate more complex models of user behavior, for example by accounting for varying user moods, contexts, or indirect preference signals within longer dialogues.

Conclusion

Combining Bayesian Optimization and LLMs in the PEBOL framework is a significant step toward conversational systems that are responsive and effective from the very first turns. The combination draws on the strengths of both methods and opens new directions in personalized AI interaction, with gains in both user experience and system performance.