- The paper introduces an intermediate Representation of Logical Structure (RLS) that captures the core logical atoms of natural language (NL) arguments, so that reasoning over them is deterministic.

- The approach leverages a sequence-to-sequence transformer model, achieving high accuracy on datasets such as RuleTakers (99.6%) and CLUTRR (95%).

- The study demonstrates that symbolic reasoning on extracted RLS representations can match state-of-the-art transformer models while improving interpretability.

Detailing the Logical Framework: "Reasoning is about giving reasons"

This essay analyzes the paper "Reasoning is about giving reasons," which explores how the logical structure of natural language (NL) arguments can be extracted and used. The authors argue that current models fall short in interpretability and extensibility for reasoning tasks, and propose to address these limitations with an intermediate Representation of Logical Structure (RLS). RLS makes the core logical atoms of an argument explicit, so that reasoning over them becomes deterministic.

Introduction to RLS

RLS is the cornerstone of this research. Because it captures the logical structure inherent in an NL argument, it supports several forms of reasoning, including deduction, abduction, and contradiction detection. The approach is effective because it separates the extraction of logical structure from the variability of NL surface forms and defines the task-relevant logical atoms explicitly.

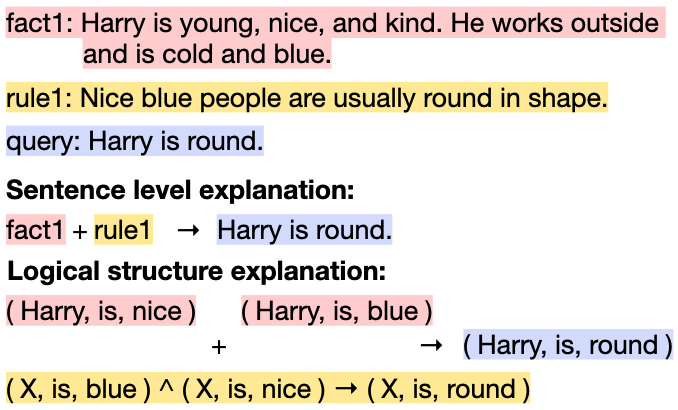

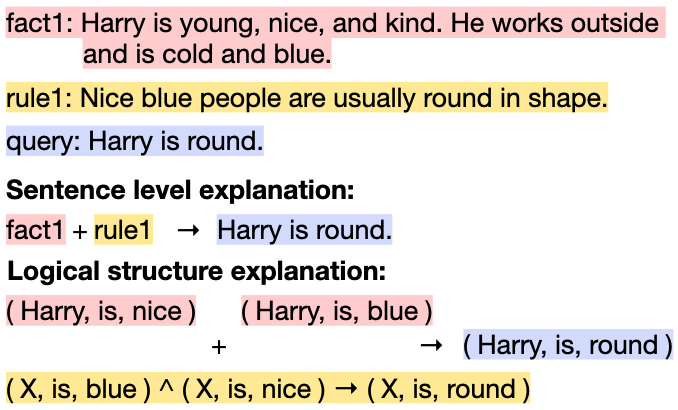

Figure 1: Visual simplification of an argument from the RuleTakers dataset, emphasizing logical structure and its role in reasoning.

The paper proposes using a sequence-to-sequence transformer model to convert NL statements into RLS, focusing on three datasets: RuleTakers, Leap-Of-Thought, and CLUTRR. The model is trained to map each sentence to a structured representation built from logical atoms and connectors such as ∧, which encodes the information needed for the reasoning task.

The table below summarizes the transformation of different NL sentences into their respective RLS formulations, showcasing the adaptability of the method across datasets:

| Dataset | Sentence | RLS |
| --- | --- | --- |
| RuleTakers | "Harry is young and nice." | (Harry, is, young, +) ∧ (Harry, is, nice, +) |
| CLUTRR | "Sol took her son Kent to the park." | (Sol, son, Kent) ∧ (Kent, mother, Sol) |
| Leap-Of-Thought | "A mustard is not capable of shade from sun." | (mustard, capable of, shade, -) |
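The tuples in the table can be made concrete by parsing them into typed records. The sketch below is an illustration only: the `Atom` type, its field names, and the `parse_atom` and `parse_rls` helpers are assumptions introduced here, not the paper's code. It also assumes that a missing sign (as in the CLUTRR row) should be stored as `None`.

```python
from typing import List, NamedTuple, Optional


class Atom(NamedTuple):
    """One logical atom; the field names are hypothetical."""
    subject: str
    relation: str
    obj: str
    polarity: Optional[bool] = None  # True for "+", False for "-", None if absent


def parse_atom(text: str) -> Atom:
    """Parse one parenthesized tuple such as '(Harry, is, young, +)'."""
    fields = [f.strip() for f in text.strip().strip("()").split(",")]
    if len(fields) == 3:
        return Atom(fields[0], fields[1], fields[2])
    if len(fields) == 4 and fields[3] in {"+", "-"}:
        return Atom(fields[0], fields[1], fields[2], fields[3] == "+")
    raise ValueError(f"unrecognized atom: {text!r}")


def parse_rls(text: str) -> List[Atom]:
    """Split a conjunction on the '∧' connector and parse each conjunct."""
    return [parse_atom(part) for part in text.split("∧")]


if __name__ == "__main__":
    for atom in parse_rls("(Harry, is, young, +) ∧ (Harry, is, nice, +)"):
        print(atom)
```

Keeping polarity as a separate field, rather than folding negation into the relation string, lets a downstream reasoner compare a positive and a negative atom over the same triple directly.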

Evaluating the RLS Approach

The method is evaluated extensively. For representation extraction, the model performs strongly across datasets, with accuracies of 99.6% and 95% on Leap-Of-Thought and CLUTRR, respectively. These results indicate that the model can recover logical structure despite the variability of NL input.

Because reasoning is carried out symbolically on the extracted RLS, complex tasks can be supported without extensive retraining. The reasoning system performs on par with state-of-the-art end-to-end transformer models on the RuleTakers dataset, which indicates that the extracted representations are reliable enough to reason over. The approach also generalizes to varying reasoning depths and to out-of-domain datasets.
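To illustrate why reasoning becomes deterministic once the structure is extracted, here is a minimal forward-chaining sketch over RLS-style tuples. It is not the paper's reasoner: the rule format (a list of body atoms plus one head atom), the single variable `?x`, and the example rules are all assumptions made for illustration. Negation and contradiction handling are left out.

```python
from typing import Dict, List, Set, Tuple

Atom = Tuple[str, str, str, str]   # (subject, relation, object, polarity)
Rule = Tuple[List[Atom], Atom]     # (body atoms, head atom); hypothetical format


def substitute(atom: Atom, binding: Dict[str, str]) -> Atom:
    """Replace any variable in the atom with the term it is bound to."""
    return tuple(binding.get(term, term) for term in atom)


def forward_chain(facts: Set[Atom], rules: List[Rule], variable: str = "?x"):
    """Apply rules until no new atom is derived.

    Returns the closed fact set and, for each derived atom, the grounded
    body that produced it (the "reasons" for that conclusion).
    """
    known = set(facts)
    entities = sorted({fact[0] for fact in facts})
    reasons: Dict[Atom, List[Atom]] = {}
    changed = True
    while changed:
        changed = False
        for body, head in rules:
            for entity in entities:
                binding = {variable: entity}
                grounded_body = [substitute(a, binding) for a in body]
                grounded_head = substitute(head, binding)
                if grounded_head not in known and all(a in known for a in grounded_body):
                    known.add(grounded_head)
                    reasons[grounded_head] = grounded_body
                    changed = True
    return known, reasons


# Invented example in the style of RuleTakers.
facts = {("Harry", "is", "young", "+")}
rules = [
    ([("?x", "is", "young", "+")], ("?x", "is", "nice", "+")),
    ([("?x", "is", "nice", "+")], ("?x", "is", "kind", "+")),
]

if __name__ == "__main__":
    closed, reasons = forward_chain(facts, rules)
    for conclusion, support in reasons.items():
        print(conclusion, "because", support)
```

The loop runs to a fixed point, so chain depth is not limited by training. The `reasons` map records which atoms justified each conclusion, which is the interpretability benefit discussed above.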

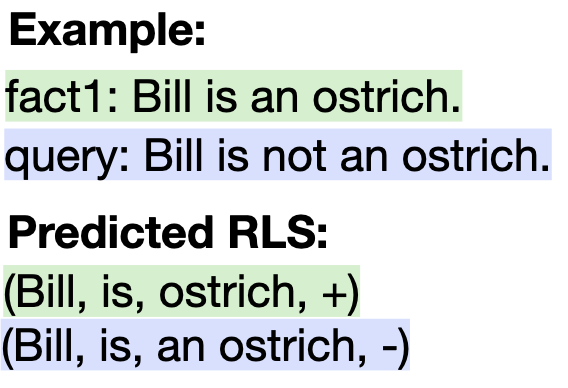

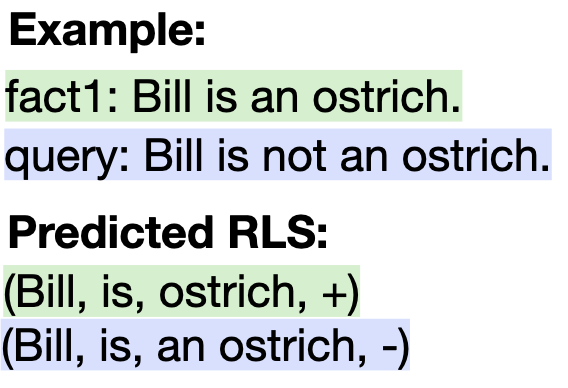

Figure 2: Example highlighting a reasoning limitation on the Birds dataset, advocating for enhanced unification operators.

Challenges and Solutions

The paper acknowledges the challenges of mapping NL to structured representations, including the trade-off that incidental information in the text, which does not affect the argument's logical structure, is lost. To handle linguistic variability, the authors recommend soft unification operators, which handle uncertainty and noise in NL by accepting weak semantic matches, and so improve the transparency of the reasoning process.
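The idea of soft unification can be sketched as follows: two atoms match if every pair of corresponding terms is similar enough, and the match score is reported alongside the result. Everything below is an assumption made for illustration: the toy two-dimensional vectors, the use of cosine similarity, the choice of the weakest term as the overall score, and the 0.9 threshold. The paper's own operator may differ.

```python
import math
from typing import Optional, Tuple

Atom = Tuple[str, str, str, str]  # (subject, relation, object, polarity)

# Toy vectors invented for this example; a real system would use learned embeddings.
TOY_VECTORS = {
    "young": (0.9, 0.1),
    "youthful": (0.85, 0.2),
    "nice": (0.1, 0.9),
}


def cosine(u, v) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def term_score(a: str, b: str) -> float:
    """1.0 for identical terms, cosine similarity if both have vectors, else 0.0."""
    if a == b:
        return 1.0
    if a in TOY_VECTORS and b in TOY_VECTORS:
        return cosine(TOY_VECTORS[a], TOY_VECTORS[b])
    return 0.0


def soft_unify(query: Atom, fact: Atom, threshold: float = 0.9) -> Optional[float]:
    """Return the weakest term-level score if it clears the threshold, else None."""
    if len(query) != len(fact):
        return None
    score = min(term_score(q, f) for q, f in zip(query, fact))
    return score if score >= threshold else None


if __name__ == "__main__":
    fact = ("Harry", "is", "young", "+")
    print(soft_unify(("Harry", "is", "youthful", "+"), fact))
    print(soft_unify(("Harry", "is", "nice", "+"), fact))
```

Returning the score rather than a bare boolean lets the reasoner report how weak a match was when it explains a conclusion.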

Conclusion and Future Work

The paper advances the discussion on extracting logical structure from NL arguments and lays the groundwork for more comprehensive and interpretable reasoning systems. While it offers an effective alternative to end-to-end systems, the approach leaves open how to extract representations of broader context and how to adapt to more diverse NL forms. Future research can refine these methods to widen their applicability and improve interpretability in AI reasoning tasks.