- The paper presents a hybrid Mamba-Transformer model that replaces most self-attention layers with Mamba-2 layers to speed up inference.

- It uses an FP8 pre-training recipe on 20 trillion tokens, followed by post-training with SFT, GRPO, DPO, and RLHF to improve adaptability across domains.

- The model achieves up to 6.3 times higher throughput than Qwen3-8B and supports inference over 128k-token contexts on a single A10G GPU.

Introduction to Nemotron Nano 2

The paper presents Nemotron-Nano-9B-v2, a hybrid Mamba-Transformer model designed to increase throughput on reasoning tasks while maintaining high accuracy. Its architecture replaces most self-attention layers with Mamba-2 layers, which speeds up inference, especially when generating long reasoning traces.

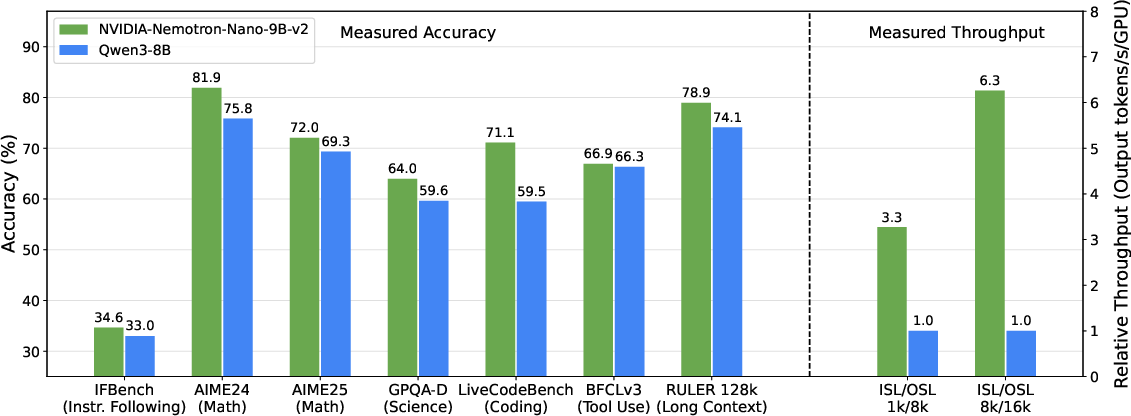

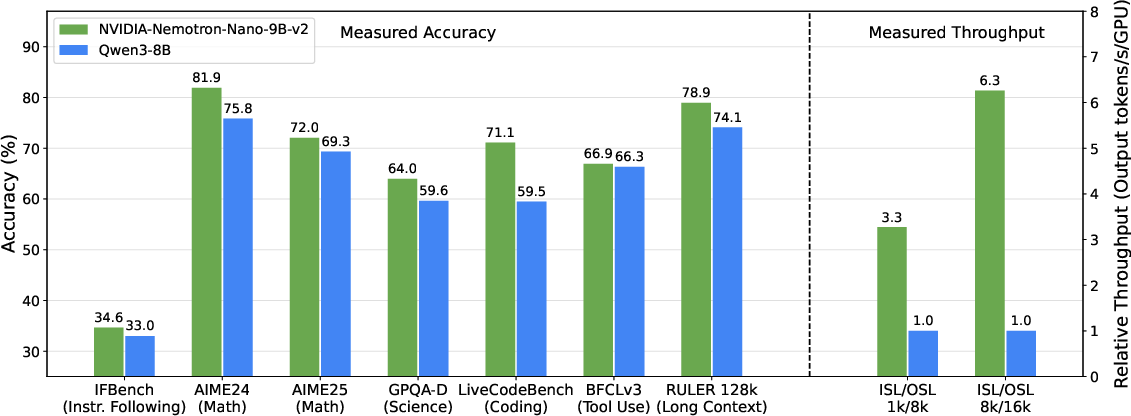

Figure 1: Comparison of Nemotron Nano 2 and Qwen3-8B in accuracy and throughput.

Model Architecture and Pre-Training

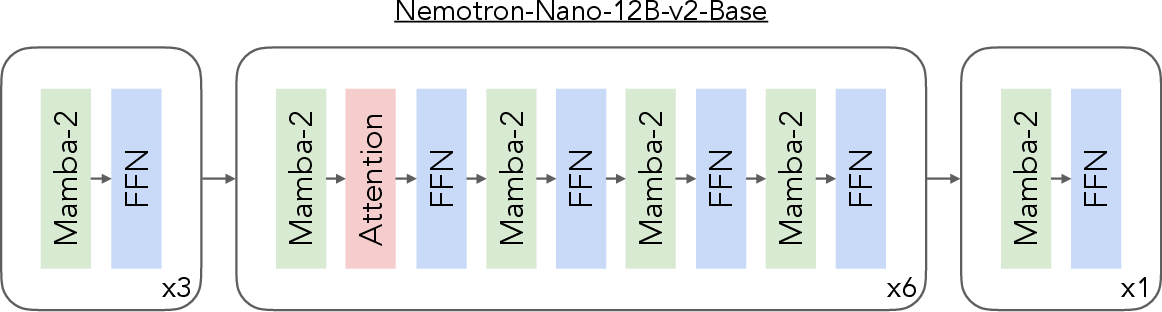

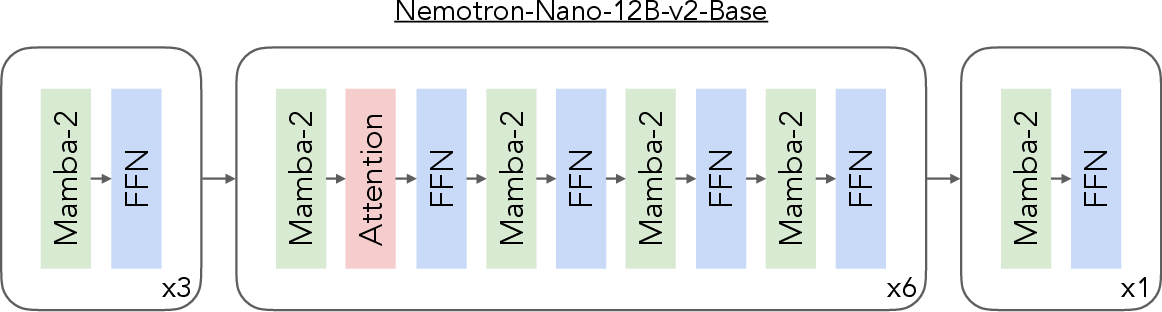

The base model, Nemotron-Nano-12B-v2-Base, was pre-trained on 20 trillion tokens using an FP8 training recipe. It has 62 layers, with the remaining self-attention layers distributed across the stack to support inference over long sequences.

Figure 2: Nemotron-Nano-12B-v2-Base layer pattern highlighting the distribution of self-attention layers.
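
The idea of spreading a few self-attention layers through a deeper stack can be illustrated with a minimal sketch. The function name `build_layer_pattern`, the even-spacing rule, and the count of six attention layers are assumptions made for illustration only; this summary does not state the actual counts or positions, and the sketch lumps every non-attention layer together under one symbol.

```python
def build_layer_pattern(total_layers: int, attention_layers: int) -> str:
    """Spread 'A' (self-attention) layers evenly among 'M' (non-attention) layers.

    Hypothetical helper: the even-spacing rule is an illustrative assumption,
    not the layout used by the model described in the paper.
    """
    if not 0 <= attention_layers <= total_layers:
        raise ValueError("attention_layers must be between 0 and total_layers")
    pattern = ["M"] * total_layers
    if attention_layers:
        stride = total_layers / attention_layers
        for i in range(attention_layers):
            # Place each attention layer at the midpoint of its stride window.
            pattern[int(i * stride + stride / 2)] = "A"
    return "".join(pattern)


# 62 layers comes from the text; 6 attention layers is an assumed value.
pattern = build_layer_pattern(total_layers=62, attention_layers=6)
print(pattern)
```

Printing the pattern makes it easy to see how sparse the attention layers are relative to the rest of the stack under these assumed settings.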

The model was pre-trained on a large corpus comprising curated web data, multilingual datasets, and specialized subsets such as mathematical and code data. This diverse mix contributed to strong performance on reasoning benchmarks relative to existing models.

Post-Training and Alignment

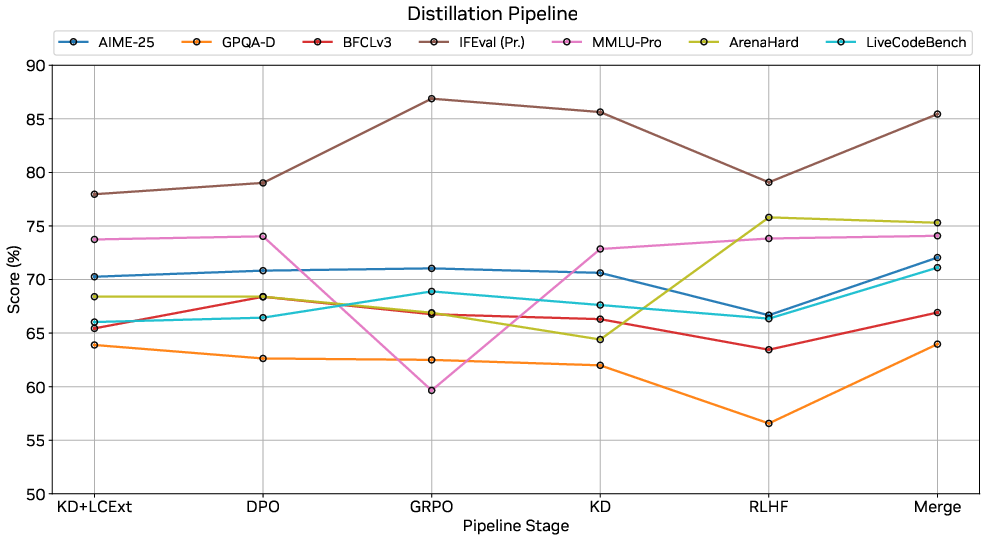

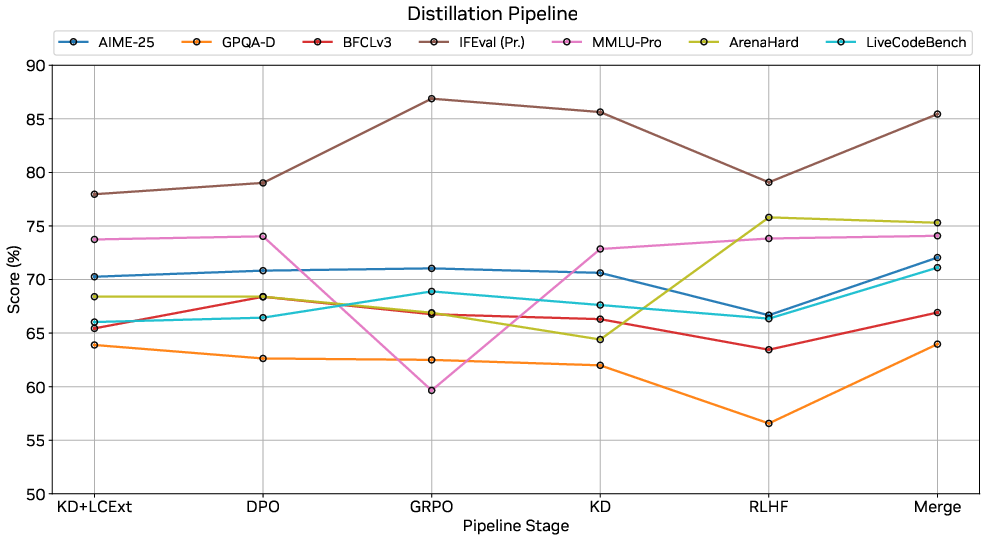

Post-training included Supervised Fine-Tuning (SFT), Group Relative Policy Optimization (GRPO), Direct Preference Optimization (DPO), and Reinforcement Learning from Human Feedback (RLHF). These stages improved the model's adaptability across domains, including long-context interactions and tool use.
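
As a rough illustration of the "group relative" part of GRPO, the sketch below normalizes the rewards of several responses sampled for the same prompt against their own group statistics. It shows only the commonly described advantage computation, not the paper's training setup; the function name, the use of the population standard deviation, and the `eps` constant are assumptions.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Normalize each reward against the mean and spread of its own group.

    Hypothetical helper: responses that score above the group average get a
    positive advantage, those below get a negative one.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; implementations may differ here
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four sampled responses to one prompt, scored 1.0 (correct) or 0.0 (incorrect).
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
print(advantages)
```

Because the baseline is computed from the group itself, this formulation does not require a separately trained value model.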

Compression and Distillation

To allow inference over 128k-token contexts on a single A10G GPU, the aligned 12B model was compressed into the 9B model using the Minitron pruning and distillation strategy. The goal was to shrink the architecture and parameter count without sacrificing accuracy.
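
One reason long contexts are hard to fit on a single GPU is the key-value (KV) cache, which grows linearly with sequence length for every self-attention layer, whereas Mamba-2 layers keep a fixed-size state. The back-of-the-envelope sketch below estimates KV cache size for one sequence. All configuration values (layer counts, 8 KV heads, head dimension 128, 2 bytes per value) are hypothetical and chosen only to show the arithmetic; they are not the configuration of any model named in this summary.

```python
def kv_cache_bytes(seq_len: int, attn_layers: int, kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Estimate KV cache size in bytes for a single sequence.

    The leading factor of 2 accounts for storing both keys and values.
    """
    return 2 * attn_layers * kv_heads * head_dim * seq_len * bytes_per_value


GIB = 1024 ** 3
CONTEXT = 128 * 1024  # 128k tokens

# Hypothetical hybrid stack: only a few layers use self-attention.
hybrid = kv_cache_bytes(CONTEXT, attn_layers=6, kv_heads=8, head_dim=128)
# Hypothetical dense Transformer: every layer uses self-attention.
dense = kv_cache_bytes(CONTEXT, attn_layers=32, kv_heads=8, head_dim=128)

print(f"hybrid: {hybrid / GIB:.1f} GiB, dense: {dense / GIB:.1f} GiB")
```

Under these assumed settings, the cache size scales directly with the number of attention layers, so removing most of them frees memory for weights, activations, and larger batches. The ratio here reflects only the assumed layer counts and is not a throughput measurement.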

Compared with Qwen3-8B, Nemotron Nano 2 delivers up to 6.3 times higher throughput on complex reasoning tasks while maintaining similar or superior accuracy. This matters for applications that require long reasoning, such as complex mathematical problem-solving or multilingual content understanding.

Figure 3: Task accuracy at different stages of the distillation pipeline for Nemotron Nano 2.

Conclusion

In summary, Nemotron-Nano-9B-v2 is an efficient reasoning model whose gains come from its hybrid architecture, FP8 pre-training, and pruning and distillation. Its ability to sustain high throughput on long reasoning tasks makes it suitable for applications that need fast reasoning in resource-constrained environments. Future work may explore further compression techniques and extensions to other domain-specific reasoning tasks.