An Empirical Study of Mamba-based LLMs

The authors present a controlled comparison between Mamba-based selective state-space models (SSMs) and Transformer architectures. The comparison spans several scales, including 8-billion-parameter models trained on up to 3.5 trillion tokens. The central question is whether Mamba-based models, specifically the Mamba and Mamba-2 architectures, can match or exceed Transformers on standard and long-context natural language processing tasks.

Key Findings

The paper reports several key findings from the empirical evaluations:

- Task and Training Comparisons:

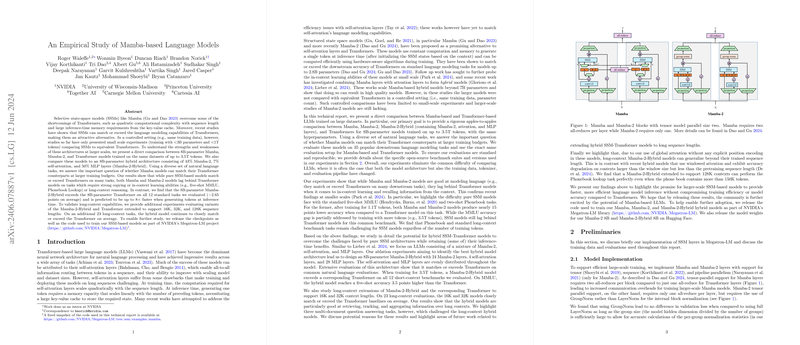

- Mamba and Mamba-2 models were evaluated against 8B-parameter Transformer models using the same datasets and hyperparameters, ensuring a controlled comparative analysis.

- The paper highlighted that pure Mamba-based models generally matched or exceeded Transformers on many downstream language tasks but fell short on tasks requiring in-context learning and long-context reasoning.

- Performance on Standard Tasks:

- On a benchmark suite of 12 standard tasks, including WinoGrande, PIQA, HellaSwag, ARC-Easy, and ARC-Challenge, pure Mamba-2 models achieved competitive or superior results compared to Transformers. However, they underperformed on MMLU and on the Phonebook task, which involve in-context learning and retrieval of information from long contexts.

- The MMLU gap was significant for models trained on the smaller budget of 1.1T tokens, indicating that pure SSM models need more training tokens than this to narrow it.

- Hybrid Architectures:

- The hybrid model combining Mamba-2, self-attention, and MLP layers (termed Mamba-2-Hybrid) was substantially more effective. At 8B parameters and trained on 3.5T tokens, Mamba-2-Hybrid outperformed the corresponding Transformer on all 12 standard tasks, with an average gain of 2.65 points.

- Mamba-2-Hybrid models offer a significant speedup during token generation at inference time (potentially up to 8 times faster for long sequences), because SSM layers carry a fixed-size state rather than a cache that grows with the sequence.

- Long-Context Capabilities:

- The extension of Mamba-2-Hybrid models to support sequence lengths of 16K, 32K, and even 128K maintained or improved accuracy on standard tasks and outperformed Transformers on synthetic long-context benchmarks like the Phonebook task.

- In long-context evaluations such as LongBench and RULER, Mamba-2-Hybrid models performed strongly on in-context learning and copying tasks, though certain multi-document question-answering settings favored Transformers.
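The Phonebook task mentioned above is a synthetic retrieval test: the model is shown a list of name and phone-number pairs and asked to recall one of them. The following is a minimal sketch of how such a prompt could be built and scored. The "name: number" layout, the question wording, the function names, and the containment-based scoring rule are all illustrative assumptions, not the paper's exact setup.

```python
import random
import string


def make_phonebook(num_entries: int, seed: int = 0) -> list[tuple[str, str]]:
    """Build (name, phone number) pairs with unique synthetic names."""
    rng = random.Random(seed)
    entries = []
    for i in range(num_entries):
        letters = "".join(rng.choices(string.ascii_lowercase, k=6))
        # Appending the index guarantees that every name is unique.
        name = f"{letters.capitalize()}{i}"
        number = f"{rng.randint(100, 999)}-{rng.randint(1000, 9999)}"
        entries.append((name, number))
    return entries


def make_prompt(entries: list[tuple[str, str]], query_index: int) -> tuple[str, str]:
    """Return (prompt, expected_answer) for a single lookup."""
    book = "\n".join(f"{name}: {number}" for name, number in entries)
    name, number = entries[query_index]
    prompt = f"{book}\n\nWhat is the phone number of {name}?\nAnswer:"
    return prompt, number


def contains_answer(model_output: str, expected: str) -> bool:
    """Hypothetical scoring rule: the expected number appears in the output."""
    return expected in model_output


if __name__ == "__main__":
    entries = make_phonebook(num_entries=5)
    prompt, answer = make_prompt(entries, query_index=2)
    print(prompt)
    print("expected:", answer)
```

Increasing `num_entries` lengthens the context, which is how a test of this kind can probe retrieval at 16K, 32K, or 128K tokens.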

Implications and Future Work

The paper underscores the potential of hybrid models incorporating Mamba-2 layers to achieve superior performance and inference efficiency compared to pure Transformer models. This has several practical implications for the future development and deployment of LLMs:

- Inference Efficiency: The reduced computational and memory overheads during inference make Mamba-2-Hybrid models attractive for applications requiring real-time or low-latency responses.

- Scalability: The successful extension of Mamba-2-Hybrid models to 128K context lengths indicates their potential for handling extensive and complex input data sequences, benefiting use cases in document understanding and long-form content generation.
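The memory argument behind the inference-efficiency point can be made concrete with simple arithmetic. An attention layer caches a key and a value vector for every past token, so its cache grows linearly with sequence length, while an SSM layer keeps a fixed-size recurrent state. The sketch below compares the two under stated assumptions: every layer count and dimension is a hypothetical placeholder rather than the paper's configuration, values are stored in 2 bytes, and the small convolution buffer that Mamba layers also keep is ignored.

```python
def kv_cache_bytes(seq_len, num_attn_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Keys plus values cached for every past token in every attention layer."""
    return 2 * seq_len * num_attn_layers * num_kv_heads * head_dim * bytes_per_value


def ssm_state_bytes(num_ssm_layers, d_inner, d_state, bytes_per_value=2):
    """Fixed-size recurrent state per SSM layer; independent of sequence length."""
    return num_ssm_layers * d_inner * d_state * bytes_per_value


# Hypothetical dimensions, chosen only for illustration.
ATTN_DIMS = dict(num_kv_heads=8, head_dim=128)
SSM_DIMS = dict(d_inner=8192, d_state=128)

for seq_len in (4096, 32768, 131072):
    # A pure Transformer: every sequence-mixing layer is attention.
    transformer = kv_cache_bytes(seq_len, num_attn_layers=32, **ATTN_DIMS)
    # A hybrid: a few attention layers plus many SSM layers.
    hybrid = (
        kv_cache_bytes(seq_len, num_attn_layers=4, **ATTN_DIMS)
        + ssm_state_bytes(num_ssm_layers=24, **SSM_DIMS)
    )
    print(f"{seq_len:>7} tokens: transformer {transformer / 2**20:9.1f} MiB, "
          f"hybrid {hybrid / 2**20:9.1f} MiB")
```

This only models per-sequence memory, not latency, but it shows why the gap between the two designs widens as the context grows: only the attention term scales with `seq_len`.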

Future research directions could involve:

- Optimization of Training Procedures: Exploring tailored training recipes for SSM-based models, especially for mixed long-document datasets, to further enhance their performance on natural long-context tasks.

- Fine-tuning and Prompt Techniques: Investigating more sophisticated prompt engineering strategies to improve the robustness of hybrid models in various knowledge retrieval and question-answering scenarios.

- Hybrid Model Architectures: Delving deeper into the architectural nuances, such as the ratio and placement of SSM, attention, and MLP layers, to optimize hybrid model performance for specific tasks.
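To make the "ratio and placement" question concrete, here is a minimal sketch of one way to lay out a hybrid stack: attention layers are spread as evenly as possible among the Mamba-2 layers, and each sequence-mixing layer is optionally followed by an MLP. The function names, the layer labels, the even-spacing rule, and the layer counts in the usage example are assumptions made for illustration; they are not the paper's layer allocation.

```python
def spread_evenly(total: int, count: int) -> set[int]:
    """Pick `count` distinct indices in range(total), spaced as evenly as possible."""
    if count > total:
        raise ValueError("count must not exceed total")
    # Place each index at the midpoint of one of `count` equal-width bins.
    return {(2 * i + 1) * total // (2 * count) for i in range(count)}


def build_mixer_pattern(num_ssm: int, num_attn: int) -> list[str]:
    """Order the sequence-mixing layers, scattering attention among Mamba-2."""
    total = num_ssm + num_attn
    attn_positions = spread_evenly(total, num_attn)
    return ["attention" if i in attn_positions else "mamba2" for i in range(total)]


def build_layers(num_ssm: int, num_attn: int, mlp_after_each_mixer: bool = True) -> list[str]:
    """Full layer list, optionally inserting an MLP after every mixing layer."""
    layers = []
    for mixer in build_mixer_pattern(num_ssm, num_attn):
        layers.append(mixer)
        if mlp_after_each_mixer:
            layers.append("mlp")
    return layers


if __name__ == "__main__":
    # Hypothetical small stack: 6 Mamba-2 layers and 2 attention layers.
    for index, kind in enumerate(build_layers(num_ssm=6, num_attn=2)):
        print(index, kind)
```

Varying `num_ssm`, `num_attn`, and the placement rule in a helper like this is one way to enumerate candidate layouts for an ablation study.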

In conclusion, the comparison provides evidence that combining selective state-space layers with attention is a promising direction for large-scale language models. The release of code and model checkpoints as part of NVIDIA's Megatron-LM project supports reproducibility and encourages further work in this area.