Prompt Orchestration Markup Language (2508.13948v1)

Abstract: LLMs require sophisticated prompting, yet current practices face challenges in structure, data integration, format sensitivity, and tooling. Existing methods lack comprehensive solutions for organizing complex prompts involving diverse data types (documents, tables, images) or managing presentation variations systematically. To address these gaps, we introduce POML (Prompt Orchestration Markup Language). POML employs component-based markup for logical structure (roles, tasks, examples), specialized tags for seamless data integration, and a CSS-like styling system to decouple content from presentation, reducing formatting sensitivity. It includes templating for dynamic prompts and a comprehensive developer toolkit (IDE support, SDKs) to improve version control and collaboration. We validate POML through two case studies demonstrating its impact on complex application integration (PomLink) and accuracy performance (TableQA), as well as a user study assessing its effectiveness in real-world development scenarios.

Summary

- The paper introduces POML, which standardizes prompt engineering with an HTML-like syntax for organizing roles, tasks, and examples.

- It integrates diverse data sources directly into prompts and uses a CSS-like styling system to reduce LLM sensitivity to formatting.

- Empirical case studies validate POML's effectiveness in enhancing LLM performance, rapid prototyping, and collaborative development.

Prompt Orchestration Markup Language: A Structured Paradigm for Advanced Prompt Engineering

Introduction and Motivation

The paper introduces Prompt Orchestration Markup Language (POML), a domain-specific, HTML-inspired markup language designed to address persistent challenges in prompt engineering for LLMs. The motivation stems from four critical pain points: lack of standardized prompt structure, complexity in integrating diverse data types, LLM sensitivity to formatting, and insufficient development tooling. POML is architected to provide a unified solution, combining semantic markup, specialized data components, a CSS-like styling system, and a comprehensive developer toolkit.

Language Design and Core Features

POML adopts a hierarchical, component-based syntax analogous to HTML, enabling logical organization of prompt elements such as roles, tasks, and examples. This structure supports modularity and maintainability, facilitating collaborative development and version control.

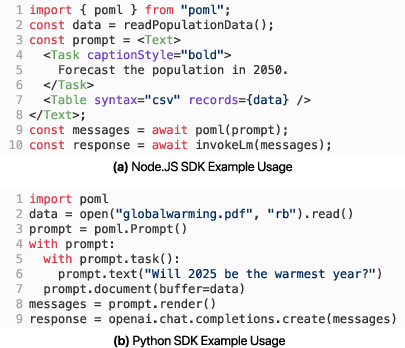

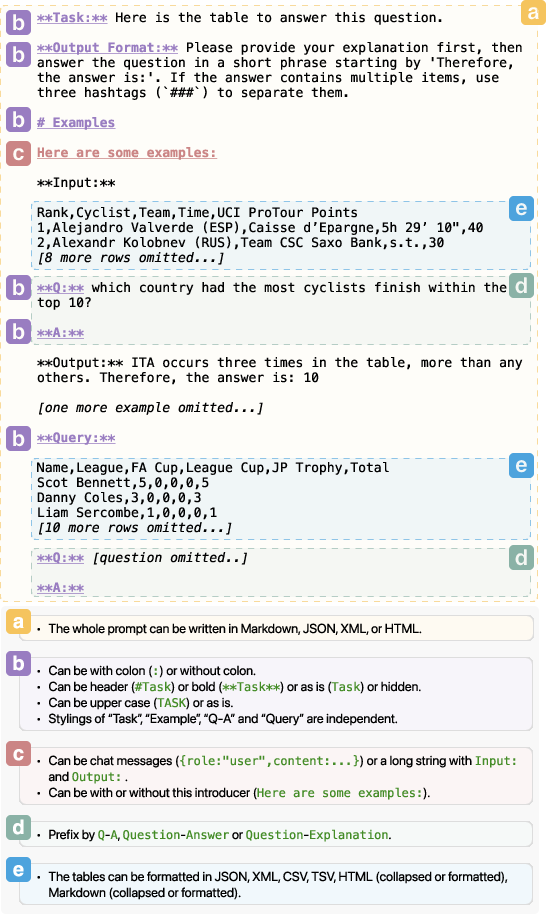

Figure 1: An example illustrating POML's structured markup and rendering, showing the mapping from semantic components to rendered prompt output.
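To make the idea of hierarchical, component-based markup concrete, here is a minimal Python sketch that walks a small nested markup document and renders each component as a Markdown heading whose level follows its depth. It is not the POML engine: the tag names echo the components named above (role, task, example), while the `<prompt>`, `<input>`, and `<output>` tags and the heading-based output are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical markup. The real POML schema and rendered output may differ.
MARKUP = """
<prompt>
  <role>You are a careful proofreader.</role>
  <task>Fix spelling errors in the user's sentence.</task>
  <example>
    <input>Teh cat sat.</input>
    <output>The cat sat.</output>
  </example>
</prompt>
"""

def render(element, depth=1):
    """Render an element as a heading at the given depth, then its children."""
    lines = [f"{'#' * depth} {element.tag.capitalize()}"]
    text = (element.text or "").strip()
    if text:
        lines.append(text)
    for child in element:
        lines.extend(render(child, depth + 1))
    return lines

rendered = "\n".join(render(ET.fromstring(MARKUP)))
print(rendered)
```

Because each component is a node in a tree, reordering or removing a section is a local change to the markup rather than a string-editing exercise, which is the maintainability argument the paragraph makes.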

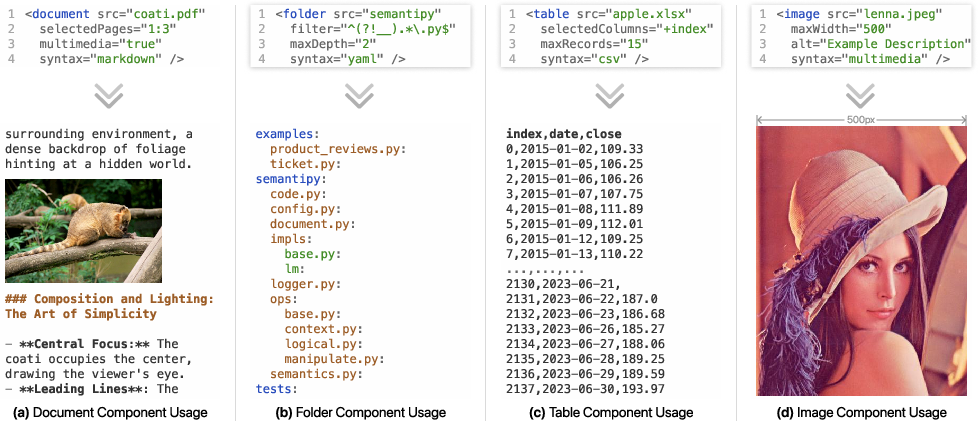

Data Integration

A distinguishing feature of POML is its suite of data components, which enable seamless embedding of external data sources (documents, tables, images, folders, audio, web pages) directly into prompts. These components support granular configuration (e.g., page selection for documents, format specification for tables, filtering for folders), abstracting away manual data preprocessing and reducing error-prone text manipulation.

Figure 2: Examples of POML data components demonstrating integration of diverse data types, including documents, tables, images, and folders.
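The folder filtering mentioned above can be pictured with a short Python sketch. It is not how POML implements its folder component: the helper `folder_component`, its `pattern` and `max_files` parameters, and the bullet-list output are all hypothetical names and choices used only to show what a data component takes off the prompt author's hands.

```python
import fnmatch
import os
import tempfile

def folder_component(root, pattern="*", max_files=20):
    """List files under root whose names match pattern, as a bullet list.

    Hypothetical helper: name, parameters, and output layout are
    illustrative assumptions, not part of POML.
    """
    matches = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if fnmatch.fnmatch(name, pattern):
                rel = os.path.relpath(os.path.join(dirpath, name), root)
                matches.append(rel.replace(os.sep, "/"))
    matches.sort()
    return "\n".join(f"- {path}" for path in matches[:max_files])

# Build a throwaway directory so the example is self-contained.
with tempfile.TemporaryDirectory() as demo:
    os.makedirs(os.path.join(demo, "src"))
    for rel in ("README.md", "src/main.py", "src/util.py", "src/notes.txt"):
        with open(os.path.join(demo, *rel.split("/")), "w") as fh:
            fh.write("placeholder\n")
    listing = folder_component(demo, pattern="*.py")

print(listing)
```

The point of the sketch is that selection and formatting live in one configurable place, so the prompt text itself never contains hand-pasted file listings.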

Styling System

POML introduces a CSS-like styling system that decouples content from presentation. Styles can be applied via inline attributes or external stylesheets, controlling aspects such as syntax format, layout, captioning, and verbosity. This separation enables systematic experimentation with prompt formatting, addressing LLMs' documented sensitivity to minor presentational changes.

Figure 3: Demonstrating POML styling capabilities, including inline attributes and global stylesheets for flexible presentation control.
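The separation of content from presentation can be sketched in a few lines of Python. In this illustration the table rows stay fixed while a stylesheet dictionary selects the syntax and caption. The stylesheet keys (`table`, `syntax`, `caption`) and the function names are assumptions for the sake of the example, not POML's actual style vocabulary.

```python
import json

# Content: fixed regardless of how it is presented (made-up sample rows).
ROWS = [
    {"item": "apple", "qty": 3},
    {"item": "pear", "qty": 5},
]

def render_table(rows, syntax):
    """Serialize rows in the requested syntax."""
    headers = list(rows[0])
    if syntax == "csv":
        lines = [",".join(headers)]
        lines += [",".join(str(row[h]) for h in headers) for row in rows]
        return "\n".join(lines)
    if syntax == "markdown":
        lines = ["| " + " | ".join(headers) + " |"]
        lines.append("| " + " | ".join("---" for _ in headers) + " |")
        lines += ["| " + " | ".join(str(row[h]) for h in headers) + " |" for row in rows]
        return "\n".join(lines)
    if syntax == "json":
        return json.dumps(rows, indent=2)
    raise ValueError(f"unknown table syntax: {syntax}")

def render_prompt(rows, stylesheet):
    """Apply presentation choices from a hypothetical stylesheet dict."""
    style = stylesheet.get("table", {})
    caption = style.get("caption", "Table")
    body = render_table(rows, style.get("syntax", "markdown"))
    return f"{caption}:\n{body}"

csv_prompt = render_prompt(ROWS, {"table": {"syntax": "csv", "caption": "Inventory"}})
md_prompt = render_prompt(ROWS, {"table": {"syntax": "markdown"}})
print(csv_prompt)
print(md_prompt)
```

Swapping the stylesheet changes the rendered prompt without touching the data or the template, which is what makes systematic formatting experiments cheap.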

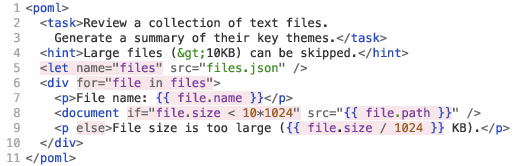

Templating Engine

The built-in templating engine supports variable substitution, iteration, and conditional rendering, allowing dynamic prompt generation without external scripting dependencies. This facilitates the creation of reusable, data-driven prompt templates, enhancing scalability and reducing redundancy.

Figure 4: Example of POML's templating engine, illustrating variable loading, iteration, and conditional component rendering.
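The three templating features named above (variable substitution, iteration, conditional rendering) can be illustrated with a toy expander in Python. This is only a sketch: the `{{ name }}` placeholder form and the `for` and `if` attributes are assumptions chosen for readability, and the real engine's syntax and expression support may differ.

```python
import re
import xml.etree.ElementTree as ET

PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")

# Hypothetical template syntax, used for illustration only.
TEMPLATE = """
<prompt>
  <task>Answer questions about {{ topic }}.</task>
  <hint if="show_hint">Keep answers under two sentences.</hint>
  <question for="q in questions">Q: {{ q }}</question>
</prompt>
"""

def substitute(text, ctx):
    """Replace each {{ name }} with the matching context value."""
    return PLACEHOLDER.sub(lambda m: str(ctx[m.group(1)]), text or "")

def render_one(element, ctx):
    """Render an element's own text, then its children, as a list of lines."""
    lines = []
    text = substitute(element.text, ctx).strip()
    if text:
        lines.append(text)
    for child in element:
        lines.extend(expand(child, ctx))
    return lines

def expand(element, ctx):
    """Honor the 'if' and 'for' attributes before rendering an element."""
    cond = element.get("if")
    if cond is not None and not ctx.get(cond):
        return []  # condition is falsy: skip the element entirely
    loop = element.get("for")
    if loop is not None:
        var, _, source = loop.partition(" in ")
        lines = []
        for value in ctx[source.strip()]:
            lines.extend(render_one(element, {**ctx, var.strip(): value}))
        return lines
    return render_one(element, ctx)

context = {
    "topic": "tides",
    "show_hint": False,
    "questions": ["What causes tides?", "How often do they occur?"],
}
prompt_text = "\n".join(expand(ET.fromstring(TEMPLATE), context))
print(prompt_text)
```

Setting `show_hint` to `True` or changing the `questions` list yields a different prompt from the same template, with no string concatenation in application code.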

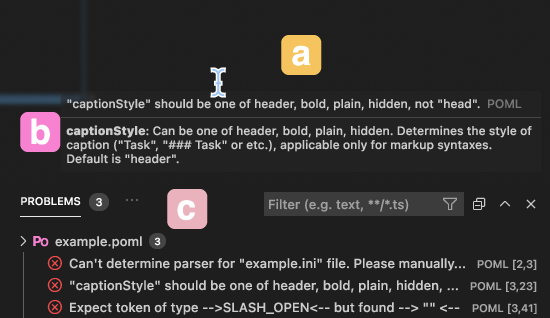

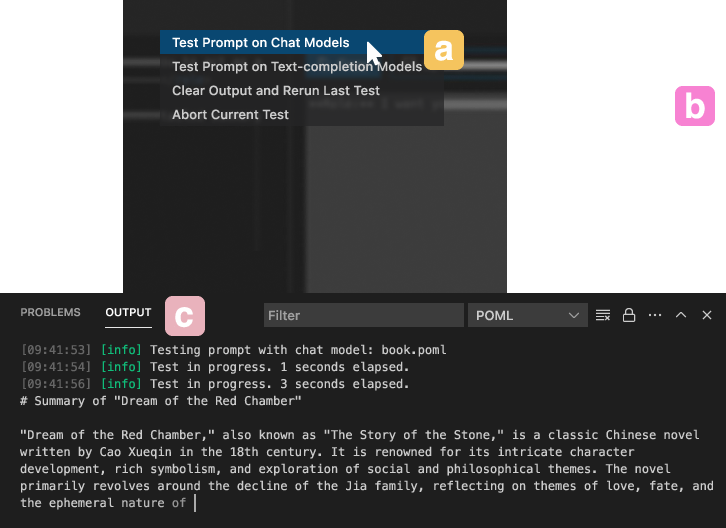

Development Toolkit and Integration

POML is complemented by a robust development toolkit, including a Visual Studio Code extension and SDKs for Node.js and Python. The IDE extension provides syntax highlighting, hover documentation, auto-completion, inline diagnostics, live preview, and integrated LLM testing, streamlining the prompt authoring and debugging workflow.

Figure 5: Real-time diagnostic feedback from the POML toolkit in the IDE, including inline error highlighting and detailed validation messages.

Figure 6: Integrated interactive prompt testing within the POML development environment, supporting real-time LLM response streaming.

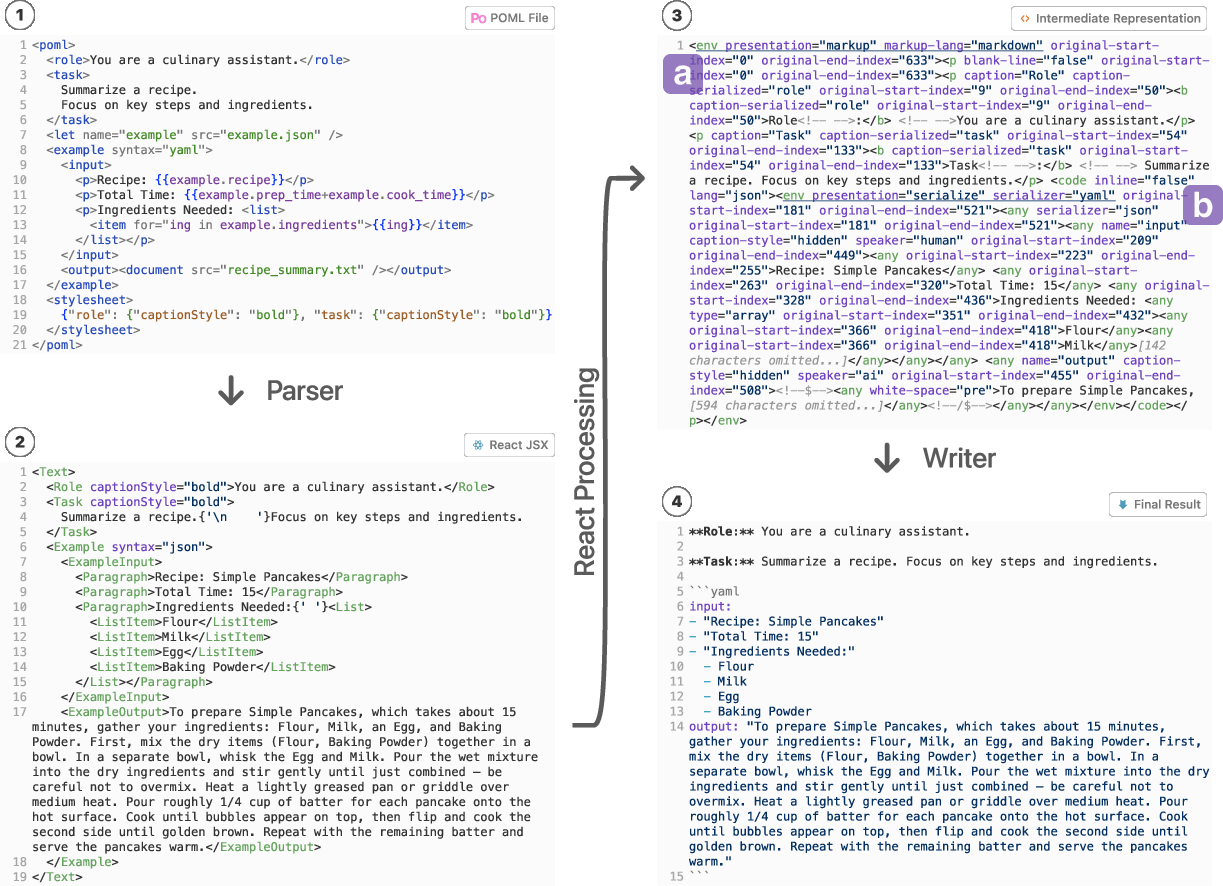

SDKs enable programmatic integration of POML into application backends, supporting both declarative and imperative prompt construction paradigms.

Figure 7: Using POML SDKs to integrate into programming workflows, with examples in JavaScript/TypeScript and Python.

Implementation Architecture

The POML engine is implemented in TypeScript atop React, employing a three-pass rendering architecture: parsing markup into JSX components, generating an intermediate representation (IR), and serializing to target formats (Markdown, JSON, plain text). This modular design supports extensibility (e.g., new output formats, alternative input syntaxes) and performance optimizations (e.g., IR caching).

Figure 8: The POML three-pass rendering architecture, illustrating the transformation from markup to IR to final output.
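The three passes can be mirrored in a compact Python sketch: parse markup into a tree, lower the tree to an intermediate representation, then serialize the IR to a chosen format. The real engine is written in TypeScript on React. The `IRNode` type, the function names, and the exact output shapes below are hypothetical and serve only to show why an IR makes it easy to add output formats and to reuse work.

```python
import json
import xml.etree.ElementTree as ET
from dataclasses import asdict, dataclass

@dataclass
class IRNode:
    """Hypothetical IR record: one entry per top-level component."""
    kind: str
    text: str

def parse(markup):
    """Pass 1: markup to component tree."""
    return ET.fromstring(markup)

def to_ir(tree):
    """Pass 2: component tree to a format-neutral IR."""
    return [IRNode(kind=child.tag, text=(child.text or "").strip()) for child in tree]

def serialize(ir, fmt):
    """Pass 3: IR to a target format."""
    if fmt == "markdown":
        return "\n\n".join(f"**{node.kind}**: {node.text}" for node in ir)
    if fmt == "json":
        return json.dumps([asdict(node) for node in ir])
    if fmt == "text":
        return "\n".join(node.text for node in ir)
    raise ValueError(f"unknown format: {fmt}")

MARKUP = "<prompt><role>Translator</role><task>Translate to French.</task></prompt>"

ir = to_ir(parse(MARKUP))  # computed once, reusable for every output format
outputs = {fmt: serialize(ir, fmt) for fmt in ("markdown", "json", "text")}
print(outputs["markdown"])
```

Since only the last pass depends on the output format, the IR can be cached and serialized several times, and a new format needs only a new branch in the final pass.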

Empirical Validation: Case Studies

PomLink iOS Agent Prototype

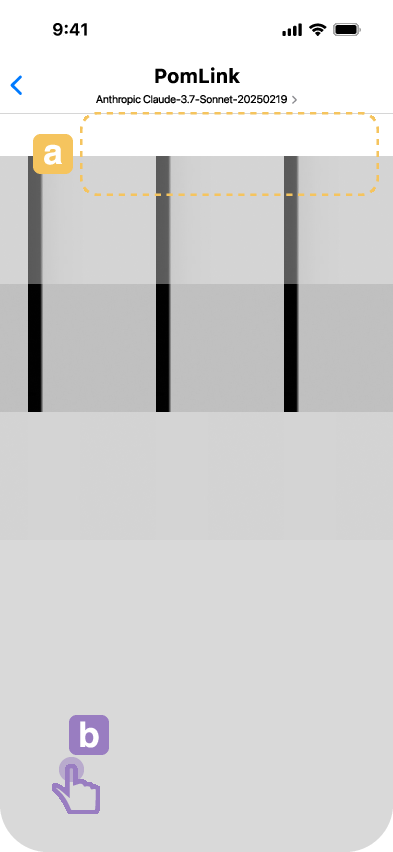

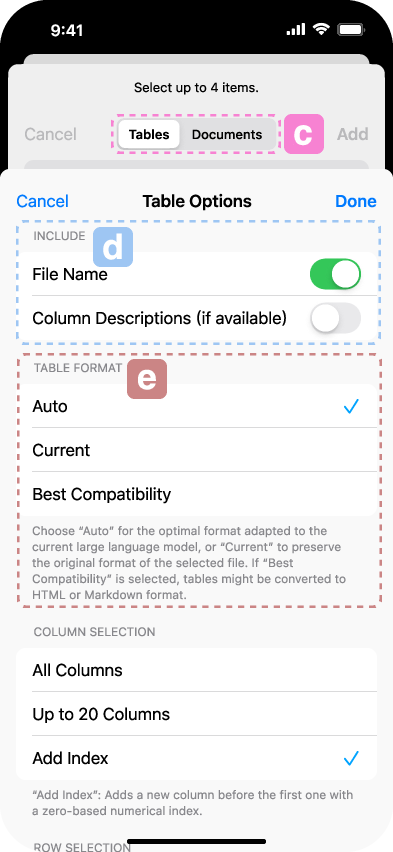

PomLink demonstrates POML's practical utility in a real-world, multimodal LLM application. The agent uses POML to structure prompts that integrate user-linked files (documents, tables, images, audio), conversational history, and task-specific instructions. POML's abstractions sped up development, enabling rapid prototyping with minimal code for prompt logic.

Figure 9: PomLink iOS interface, powered by POML, showing LLM interaction with linked Excel data and table configuration options.

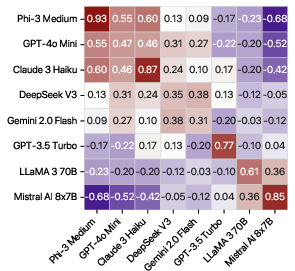

TableQA: Systematic Prompt Styling Exploration

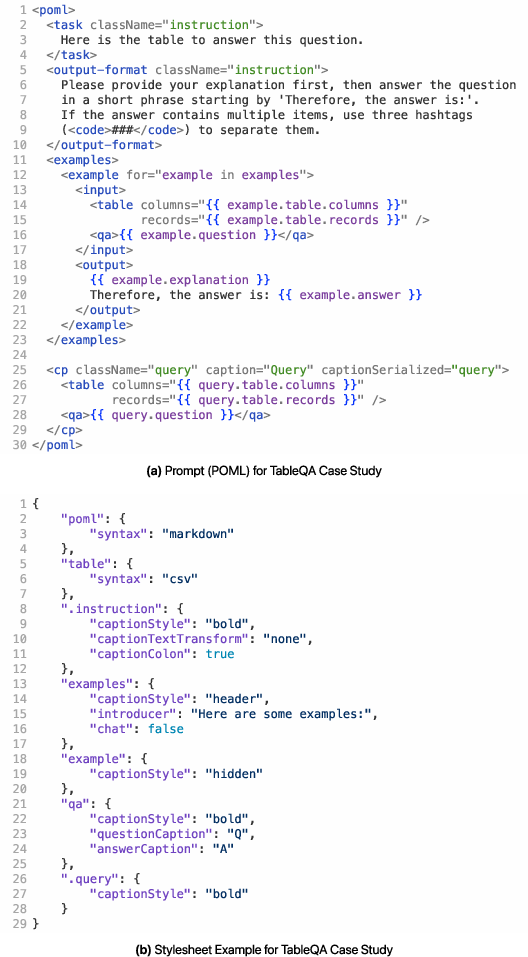

A comprehensive study of table-based question answering (TableQA) quantified the impact of prompt styling on LLM accuracy. Using a single base POML prompt and programmatically generated stylesheets, 100 distinct prompt styles were evaluated across 8 LLMs. Results revealed dramatic, model-specific sensitivity to formatting, with accuracy improvements exceeding 9x for some models. The optimal prompt style was found to be highly model-dependent, underscoring the necessity of adaptable styling mechanisms.
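Programmatic stylesheet generation of the kind described here can be sketched as enumerating a grid of style options and drawing a fixed-size sample from it. The dimensions and values below are invented for illustration and are much smaller than the search space in the paper. Only the sample size of 100 comes from the text.

```python
import itertools
import json
import random

# Hypothetical style dimensions; the study's real dimensions are not
# listed in this summary.
STYLE_SPACE = {
    "table_syntax": ["markdown", "csv", "tsv", "json", "html"],
    "caption_style": ["header", "bold", "plain", "hidden"],
    "heading_case": ["title", "upper", "lower"],
    "example_layout": ["chat", "inline", "block"],
    "blank_lines": [0, 1, 2],
}

def enumerate_styles(space):
    """Return every combination of style options as a dict."""
    keys = list(space)
    return [dict(zip(keys, combo)) for combo in itertools.product(*space.values())]

def sample_stylesheets(space, n, seed=0):
    """Draw n distinct styles; a fixed seed keeps the sample reproducible."""
    rng = random.Random(seed)
    return rng.sample(enumerate_styles(space), n)

styles = sample_stylesheets(STYLE_SPACE, n=100)
print(len(enumerate_styles(STYLE_SPACE)), "possible styles;", len(styles), "sampled")
print(json.dumps(styles[0]))
```

Each sampled dict would then be applied to the same base prompt and scored per model, so any accuracy difference is attributable to presentation rather than content.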

Figure 10: Visualization of the prompt styling search space explored in the TableQA experiment, encompassing 74k unique prompt styles.

Figure 11: Example of POML usage for the TableQA case study, showing the prompt template and corresponding stylesheet.

Figure 12: Correlation matrix showing relationships between LLMs based on their performance rankings across 100 prompt styles in TableQA.

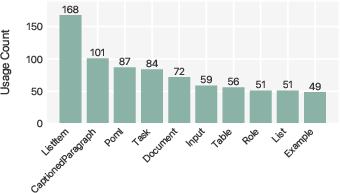

User Study: Usability and Developer Experience

A formal user study with seven participants evaluated POML's usability across five representative tasks. Participants consistently valued POML's data integration components and styling system, and reported that the IDE toolkit enhanced productivity. Live preview and documentation eased the learning curve, though some overhead was noted for simple prompts. Feedback highlighted the need for improved documentation, error messaging, and accessibility.

Figure 13: Frequency of POML component usage across user study sessions, indicating engagement with both structural and data components.

Implications and Future Directions

POML establishes a structured, maintainable, and extensible paradigm for prompt engineering, directly addressing challenges in data integration, format sensitivity, and development workflow. The empirical results substantiate the critical role of prompt structure and styling in LLM performance, with strong evidence for model-specific optimization. The separation of content and presentation in POML enables systematic experimentation and adaptation, facilitating both manual and automated prompt engineering.

Practically, POML's abstractions and tooling accelerate the development of complex, data-intensive LLM applications, supporting rapid prototyping, collaborative workflows, and robust version control. The extensible architecture positions POML for future integration with emerging modalities and automated prompt optimization techniques.

Theoretically, the findings reinforce the necessity of treating prompt engineering as a first-class software engineering discipline, with structured markup and tooling analogous to modern web development practices. The observed model-specific sensitivities suggest further research into adaptive, model-aware prompt generation and evaluation frameworks.

Conclusion

POML provides a comprehensive solution to the multifaceted challenges of prompt engineering for LLMs, combining semantic markup, data integration, decoupled styling, and developer-centric tooling. Empirical validation through case studies and user evaluation demonstrates its effectiveness in enhancing prompt maintainability, reusability, and performance. POML's structured approach and extensible ecosystem lay the groundwork for future advances in both practical LLM application development and automated prompt optimization.

Authors (4)
