Analysis of "CoPrompter: User-Centric Evaluation of LLM Instruction Alignment for Improved Prompt Engineering"

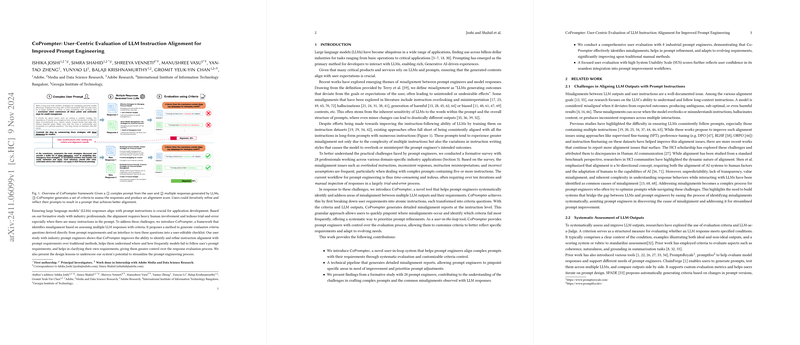

The paper "CoPrompter: User-Centric Evaluation of LLM Instruction Alignment for Improved Prompt Engineering" introduces a framework for evaluating and improving how well large language model (LLM) outputs follow user-defined instructions. CoPrompter takes a user-centered approach that lets prompt engineers systematically assess and refine this alignment against specified criteria, addressing the challenges posed by complex prompts that contain many instructions.

Key Contributions and Methodology

CoPrompter's design is informed by preliminary studies with industry professionals and prompt engineers. These studies identified misalignment between LLM outputs and instructions as a critical problem, especially for detailed, multifaceted prompts. The tool aims to streamline the iterative, labor-intensive process of manual prompt tuning by providing an interface that decomposes prompts into atomic criteria and evaluates LLM-generated outputs against them.

Key technical contributions of the work are:

- Atomic Instruction Decomposition: CoPrompter translates high-level user requirements into granular criteria questions, enabling detailed misalignment reporting at the instruction level. These atomic criteria are tagged with evaluation priorities, allowing users to focus on specific aspects of instruction adherence.

- User-Centric Evaluation Interface: The interface lets users refine and adapt the criteria as their requirements evolve, giving them direct control over what the evaluation checks.

- Automated Evaluation and Feedback Mechanism: CoPrompter generates feedback reports that give an alignment score for each criterion, together with the reasoning behind that score. This transparency helps users see which instructions a prompt fails to enforce and why.
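The per-criterion workflow described in these bullets can be sketched as a small data model: atomic criterion questions tagged with priorities, a judge that scores one output against each question, and a report that lists scores with their reasoning. This is a minimal illustration under stated assumptions, not CoPrompter's implementation. The `Criterion` and `CriterionResult` classes, the `high`/`medium`/`low` priority labels, the 0-to-1 score scale, the 0.5 pass threshold, and the rule-based `toy_judge` (a stand-in for what would realistically be an LLM-based evaluator) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical priority labels; a lower rank is reported first.
PRIORITY_RANK = {"high": 0, "medium": 1, "low": 2}


@dataclass
class Criterion:
    question: str  # one atomic yes/no criterion question derived from the prompt
    priority: str  # "high", "medium", or "low" (assumed labels)


@dataclass
class CriterionResult:
    criterion: Criterion
    score: float    # alignment score, assumed to lie in [0, 1]
    reasoning: str  # explanation shown alongside the score


# A judge takes (llm_output, criterion_question) and returns (score, reasoning).
Judge = Callable[[str, str], tuple[float, str]]


def evaluate(output: str, criteria: list[Criterion], judge: Judge) -> list[CriterionResult]:
    """Score one LLM output against every atomic criterion."""
    results = [CriterionResult(c, *judge(output, c.question)) for c in criteria]
    # Surface high-priority, low-scoring criteria first.
    results.sort(key=lambda r: (PRIORITY_RANK[r.criterion.priority], r.score))
    return results


def format_report(results: list[CriterionResult], threshold: float = 0.5) -> str:
    """Render one report line per criterion: priority, status, score, question, reasoning."""
    lines = []
    for r in results:
        status = "ALIGNED" if r.score >= threshold else "MISALIGNED"
        lines.append(
            f"[{r.criterion.priority}] {status} {r.score:.2f} "
            f"{r.criterion.question} ({r.reasoning})"
        )
    return "\n".join(lines)


def toy_judge(output: str, question: str) -> tuple[float, str]:
    """Rule-based stand-in for an LLM evaluator; it only knows the demo criteria."""
    checks = {
        "Is the response at most 20 words long?": lambda o: len(o.split()) <= 20,
        "Does the response mention the product name 'Acme'?": lambda o: "Acme" in o,
        "Does the response avoid exclamation marks?": lambda o: "!" not in o,
    }
    passed = checks[question](output)
    return (1.0, "rule satisfied") if passed else (0.0, "rule violated")


criteria = [
    Criterion("Is the response at most 20 words long?", "low"),
    Criterion("Does the response mention the product name 'Acme'?", "medium"),
    Criterion("Does the response avoid exclamation marks?", "high"),
]
results = evaluate("Acme keeps your files safe!", criteria, toy_judge)
report = format_report(results)
print(report)
```

Sorting by priority and then by score is one simple way to put the most important misalignments at the top of the report; a real system could equally filter by priority or let the user reorder criteria.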

The framework was evaluated in a user study with eight industry prompt engineers. Quantitative feedback was collected with the System Usability Scale (SUS), and participants' responses reflected high confidence in CoPrompter's ability to integrate into existing workflows.
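For readers unfamiliar with SUS: the questionnaire has ten items answered on a 1 to 5 scale. Odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. The sketch below implements this standard scoring rule; the function name `sus_score` and the two response sets are invented for illustration and are not data from the CoPrompter study.

```python
def sus_score(responses: list[int]) -> float:
    """Compute a System Usability Scale score (0-100) from ten 1-5 Likert responses."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for item_number, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item_number % 2 == 1 else (5 - r)
    return total * 2.5  # scale the 0-40 raw sum to 0-100


# Made-up responses for illustration only (not data from the CoPrompter study).
participants = [
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
]
scores = [sus_score(p) for p in participants]
mean_score = sum(scores) / len(scores)
print(scores, mean_score)  # [100.0, 50.0] 75.0
```

A study reports SUS results by averaging per-participant scores as above; individual item responses are not meaningful on their own.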

Implications and Future Directions

CoPrompter is a practical contribution to LLM instruction alignment, helping engineers who work with LLMs reduce manual trial and error. Its decomposition of prompts into atomic instructions is consistent with broader trends in human-AI collaboration that emphasize modularity and iterative refinement.

The paper points to several future research directions, including extending CoPrompter's approach to other modalities, such as text-to-image models, and examining its role in supporting interpretability and transparency in AI systems. In addition, by enabling a structured approach to prompt refinement, CoPrompter could contribute to a better understanding of model behavior and alignment dynamics, which might in turn inform improvements in LLM architectures and training paradigms.

In conclusion, the research presents CoPrompter not only as a tool for prompt engineering but also as a step toward more robust and reliable AI systems. Its emphasis on user control and iterative refinement makes it suitable for immediate use in industry settings, where it could change how complex prompt-based interactions are managed and optimized.