- The paper introduces a hierarchical, multi-agent AI system that autonomously executes end-to-end scientific workflows, including hypothesis generation and data analysis.

- It employs cognitive operators such as abstraction, metacognition, and dynamic retrieval to ensure rigorous methodological design and reproducible results.

- The system demonstrates significant efficiency gains by reducing research timelines to hours and leveraging multi-model collaboration for enhanced reliability.

Autonomous Agentic AI for Scientific Discovery: A Technical Analysis of "Virtuous Machines: Towards Artificial General Science"

Introduction

"Virtuous Machines: Towards Artificial General Science" presents a comprehensive framework for autonomous scientific discovery, integrating agentic AI architectures with human-inspired cognitive operators to execute end-to-end empirical research workflows. The system is validated in cognitive science, demonstrating the capacity to independently generate hypotheses, design and implement experiments, analyze data, and produce publication-ready manuscripts with minimal human intervention. This essay provides a technical summary of the system's architecture, operational mechanisms, empirical results, and implications for the future of AI-driven science.

System Architecture and Cognitive Operators

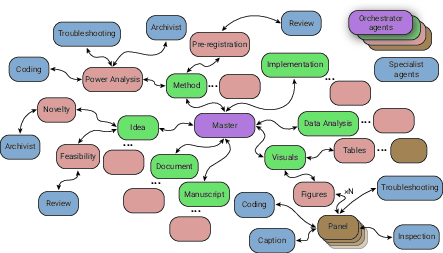

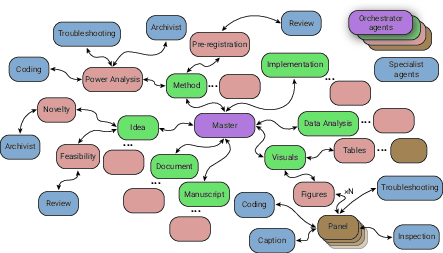

The core of the system is a hierarchical multi-agent architecture, orchestrated by a master agent that coordinates specialized sub-agents responsible for discrete scientific tasks. The architecture is modular, supporting both fully autonomous and human-in-the-loop operation modes. Each agent is capable of independent reasoning, tool use, and recursive task decomposition, enabling robust navigation of complex, multi-stage workflows.

Figure 1: Simplified network architecture of the autonomous scientific discovery system, illustrating agent coordination and distributed task execution.
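The coordination pattern described above can be sketched in a few lines. A master agent recursively decomposes a task until each piece matches a specialist, then delegates it. This is a minimal illustration under stated assumptions, not the paper's implementation. The `Task` type, the routing by a `kind` field, the depth limit and the stub specialist functions are all hypothetical stand-ins for LLM-backed sub-agents.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Task:
    """A unit of work: `kind` selects a specialist; `subtasks` holds a decomposition."""
    name: str
    kind: str = "composite"
    subtasks: List["Task"] = field(default_factory=list)


# Hypothetical specialists; in a real system each would be an LLM-backed sub-agent.
SPECIALISTS: Dict[str, Callable[[Task], str]] = {
    "literature": lambda t: f"reviewed literature for {t.name}",
    "coding": lambda t: f"wrote analysis code for {t.name}",
    "writing": lambda t: f"drafted text for {t.name}",
}


def master_agent(task: Task, depth: int = 0, max_depth: int = 5) -> List[str]:
    """Dispatch leaf tasks to specialists and recurse into composite tasks."""
    if depth > max_depth:
        raise RecursionError(f"decomposition too deep at {task.name!r}")
    if task.kind in SPECIALISTS:
        return [SPECIALISTS[task.kind](task)]
    if not task.subtasks:
        raise ValueError(f"no specialist and no decomposition for {task.name!r}")
    results: List[str] = []
    for sub in task.subtasks:
        results.extend(master_agent(sub, depth + 1, max_depth))
    return results


study = Task("study", subtasks=[
    Task("background", kind="literature"),
    Task("analysis", subtasks=[Task("stats", kind="coding")]),
    Task("report", kind="writing"),
])
for line in master_agent(study):
    print(line)
```

The depth limit is a simple guard against runaway decomposition. A production orchestrator would also need retries and shared state between agents.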

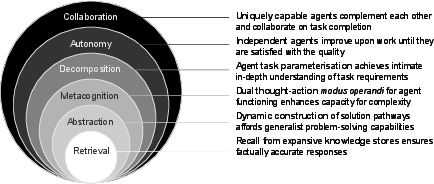

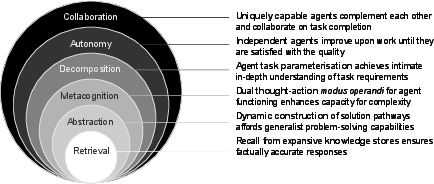

The agentic framework is augmented by cognitive operators derived from psychological science: abstraction, metacognition, decomposition, and autonomy. These operators are computational analogues of human executive functions, facilitating planning, self-monitoring, iterative refinement, and goal-directed behavior. The system also incorporates a dynamic Retrieval-Augmented Generation (d-RAG) mechanism, providing agents with context-sensitive access to external knowledge bases and enabling cognitive offloading analogous to human working memory and long-term memory systems.

Figure 2: Hierarchical framework of cognitive agency levels, from basic retrieval to collaborative multi-agent problem-solving.
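The retrieval idea can be made concrete with a toy example of dynamic retrieval. Agents write notes into an external store during a run and later pull back the most relevant entries before each step, a form of cognitive offloading. This is a sketch under loud assumptions. The `MemoryStore` class, the bag-of-words cosine scoring and the example notes are illustrative only; a production d-RAG system would more likely use learned embeddings and external knowledge bases.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def _vectorize(text: str) -> Counter:
    """Lowercased word counts; a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """External memory that agents append to and query while a run is in progress."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self._items.append((text, _vectorize(text)))

    def retrieve(self, query: str, k: int = 2) -> List[str]:
        """Return up to k entries with nonzero similarity, most similar first."""
        q = _vectorize(query)
        scored = [(_cosine(q, vec), text) for text, vec in self._items]
        scored.sort(key=lambda s: s[0], reverse=True)
        return [text for score, text in scored[:k] if score > 0]


def build_prompt(store: MemoryStore, task: str, k: int = 2) -> str:
    """Prepend retrieved context to the task, as a RAG step would."""
    context = "\n".join(f"- {c}" for c in store.retrieve(task, k))
    return f"Context:\n{context}\n\nTask: {task}"


memory = MemoryStore()
memory.add("Pilot data suggested a small effect of set size on recall accuracy.")
memory.add("Prolific participants must be screened for normal or corrected vision.")
memory.add("The preregistration specifies a two-sided alpha of 0.05.")
print(build_prompt(memory, "What alpha level did the preregistration specify?", k=1))
```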

The system also uses a Mixture of Agents (MoA) approach that draws on multiple frontier LLMs (Claude 4 Sonnet, OpenAI o3-mini/o1, Grok-3, Pixtral Large, Gemini 2.5 Pro) to mitigate model-specific biases and improve robustness across diverse scientific tasks.
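One simple way to realize the bias-mitigation idea is to pose the same question to several models independently. The answer a majority agrees on is kept, and weak agreement is flagged. The sketch uses stub functions in place of real model clients, so no vendor API is called. Majority voting is an assumed aggregation rule: Mixture-of-Agents setups often use an aggregator model that synthesizes the proposals instead.

```python
from collections import Counter
from typing import Callable, Dict, Tuple

ModelFn = Callable[[str], str]  # prompt -> answer; stands in for a real model client


def aggregate(prompt: str, models: Dict[str, ModelFn], quorum: float = 0.5) -> Tuple[str, bool]:
    """Majority vote over normalized answers; the flag says whether the winner clears the quorum."""
    if not models:
        raise ValueError("need at least one model")
    answers = [fn(prompt).strip().lower() for fn in models.values()]
    top, count = Counter(answers).most_common(1)[0]
    return top, count / len(answers) > quorum


# Hypothetical stubs; a real deployment would call different vendors' models here.
stub_models: Dict[str, ModelFn] = {
    "model_a": lambda p: "Within-subjects",
    "model_b": lambda p: "within-subjects ",
    "model_c": lambda p: "between-subjects",
}
answer, agreed = aggregate("Which design gives more power for this task?", stub_models)
print(answer, agreed)  # within-subjects True
```

In a design like this, a `False` flag could route the question to a further review round rather than accepting a minority answer.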

End-to-End Scientific Workflow

The system operationalizes the complete scientific workflow through a sequence of specialized agents:

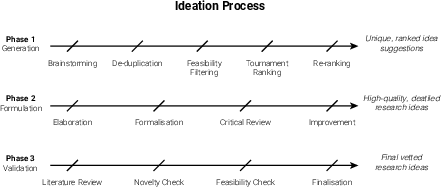

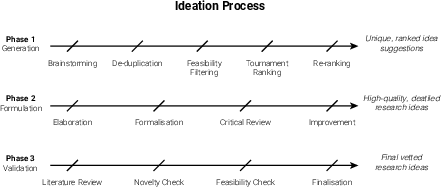

- Idea Generation: The idea agent, supported by review, novelty, and feasibility agents, formulates and validates research hypotheses using literature search APIs and multi-model tournament ranking.

- Methodological Design: The method agent develops experimental protocols, conducts power analyses via coding and archivist agents, and produces OSF-compliant pre-registration reports.

- Implementation: The implementation agent interfaces with online platforms (Pavlovia, Prolific) for participant recruitment and experiment deployment, with manual verification for ethical compliance.

- Data Analysis: The data analysis agent executes multi-stage pipelines, employing coding, troubleshooting, and validation agents to ensure statistical rigor and reproducibility.

- Experimental Re-evaluation: Bayesian and frequentist frameworks guide post-experiment decision-making, triggering theory refinement or follow-up studies as needed.

- Visualization: The visuals agent generates figures and tables by dispatching parallel panel and table agents, supported by coding, inspection, and caption agents.

- Manuscript Development: The manuscript agent synthesizes all research components, verifies citations, and iteratively refines the report with review agents.

- Peer Review and Document Construction: Specialist review agents emulate human peer review, and document agents assemble publication-ready files in LaTeX and Word formats.
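The power analyses performed during methodological design can be illustrated with the textbook normal-approximation formula for a two-sided, two-sample comparison of means. The required sample size per group is n = 2(z_(1-α/2) + z_(1-β))² / d², where d is Cohen's d. This is a generic sketch, not the method agent's actual code. Exact noncentral-t calculations (as in G*Power) give slightly larger values, for example 64 rather than 63 per group for d = 0.5, α = 0.05 and 80% power.

```python
import math
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group sample size for a two-sided two-sample test of means.

    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{power})**2 / d**2,
    which slightly underestimates the exact t-test requirement.
    """
    if d <= 0:
        raise ValueError("effect size d must be positive")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / d ** 2)


print(n_per_group(0.5))  # 63
print(n_per_group(0.2))  # 393
```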

Figure 3: Three-phase ideation process for hypothesis generation, detailing agentic workflows for idea generation, formulation, and validation.
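The multi-model tournament ranking used during ideation can be approximated with Elo-style updates over pairwise comparisons. Each "match" asks a judge which of two ideas is stronger, and ratings shift toward the winner. The summary does not specify the rating rule, so several choices here are assumptions: the Elo scheme itself, the K-factor of 32, the round-robin schedule and the `judge` function. The judge is a deterministic stub standing in for LLM judgments.

```python
from itertools import combinations
from typing import Callable, Dict, List


def expected(r_a: float, r_b: float) -> float:
    """Elo probability that A beats B."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def tournament(ideas: List[str], judge: Callable[[str, str], str],
               rounds: int = 3, k: float = 32.0) -> Dict[str, float]:
    """Round-robin Elo tournament; `judge` returns the preferred idea of a pair."""
    ratings = {idea: 1000.0 for idea in ideas}
    for _ in range(rounds):
        for a, b in combinations(ideas, 2):
            score_a = 1.0 if judge(a, b) == a else 0.0
            delta = k * (score_a - expected(ratings[a], ratings[b]))
            ratings[a] += delta  # zero-sum update: B loses what A gains
            ratings[b] -= delta
    return ratings


# Hypothetical judge: prefers the idea with the higher (made-up) feasibility score.
FEASIBILITY = {"idea A": 0.4, "idea B": 0.9, "idea C": 0.6}


def judge(a: str, b: str) -> str:
    return a if FEASIBILITY[a] >= FEASIBILITY[b] else b


ratings = tournament(list(FEASIBILITY), judge)
for idea in sorted(ratings, key=ratings.get, reverse=True):
    print(f"{idea}: {ratings[idea]:.0f}")
```

With several model judges, each match could be decided by a vote, which ties this step to the Mixture of Agents strategy described earlier.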

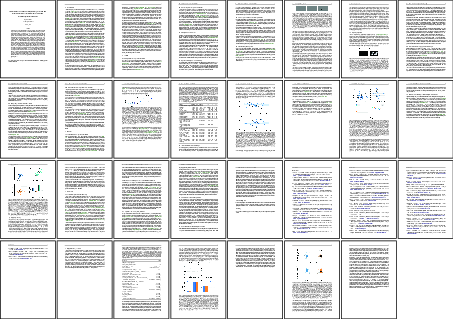

The system was tasked with three independent studies in cognitive psychology, each involving hypothesis generation, experimental design, data collection (288 participants), analysis, and manuscript production. The average runtime per paper was ~17 hours (excluding data collection), with marginal computational costs of ~US$114 per project. Over 50 agents contributed per paper, processing an average of 32.5 million tokens and reviewing 1000–3000 publications per literature review. Data analysis pipelines involved 7696 lines of code and 72 action-observation cycles on average, demonstrating temporal persistence and goal-directed behavior over extended periods.

Figure 4: Manuscript generated by the pipeline, exemplifying autonomous end-to-end research output.

Human expert evaluation of the AI-generated manuscripts identified strengths in methodological rigor, statistical sophistication, literature integration, and clarity of scientific writing. Limitations included occasional theoretical misrepresentations, statistical omissions, presentation issues, and internal contradictions. Notably, the system prioritized practical significance over statistical significance, demonstrating objectivity in interpreting small effect sizes.
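The post-experiment re-evaluation step combines Bayesian and frequentist evidence. The evaluators also noted that the system weighed practical against statistical significance. A minimal decision rule in that spirit is sketched here, with every threshold and label an illustrative choice rather than the system's actual rule:

- It assumes a one-sample or paired t statistic.
- It uses a BIC approximation to the Bayes factor, a deliberately simple stand-in for the default-prior Bayes factors usually reported.
- It sets the smallest effect size of interest at d = 0.2 and the Bayes-factor threshold at 3.
- It takes the p-value as an input rather than computing it.

```python
import math


def bf01_bic(t: float, n: int) -> float:
    """BIC approximation to the Bayes factor for H0 over H1 in a one-sample t-test.

    From Delta-BIC = ln(n) - n * ln(1 + t^2 / (n - 1)):
    BF01 ~= sqrt(n) * (1 + t^2 / (n - 1)) ** (-n / 2).
    """
    if n < 2:
        raise ValueError("need at least two observations")
    return math.sqrt(n) * (1 + t * t / (n - 1)) ** (-n / 2)


def next_step(t: float, n: int, p: float, alpha: float = 0.05,
              sesoi: float = 0.2, bf_threshold: float = 3.0) -> str:
    """Hypothetical rule combining p, a Bayes factor, and a smallest effect size of interest."""
    d = t / math.sqrt(n)  # Cohen's d for a one-sample or paired design
    bf01 = bf01_bic(t, n)
    if p < alpha and 1 / bf01 > bf_threshold:
        return "supported" if abs(d) >= sesoi else "reliable but negligible effect"
    if bf01 > bf_threshold:
        return "evidence for null: refine theory"
    return "inconclusive: plan follow-up study"


print(next_step(t=4.0, n=100, p=1e-4))    # supported
print(next_step(t=4.0, n=1000, p=7e-5))   # reliable but negligible effect
print(next_step(t=0.5, n=100, p=0.62))    # evidence for null: refine theory
```

The second case is the kind of outcome the evaluators highlighted. The effect is statistically reliable, but at d ≈ 0.13 it falls below the assumed threshold of practical relevance.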

Technical and Practical Implications

Efficiency and Scalability

The agentic system achieves substantial efficiency gains over traditional research workflows. It reduces the active project timeline from weeks or months to hours (about 17 hours per paper, excluding data collection) and lowers marginal costs. The modular architecture supports scalability across domains, with minimal adaptation required for new scientific fields given appropriate implementation interfaces.

Rigorous Reproducibility

Automated documentation of analytical decisions and the availability of raw data enhance reproducibility, addressing persistent concerns in the scientific literature. The system's conservative methodological choices and transparent reporting align with open science practices.
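Automated documentation of analytical decisions can be made tamper-evident with a hash-chained log, in which each entry stores the hash of its predecessor. Editing any earlier entry then breaks verification. The `DecisionLog` class, its fields and the example decisions are hypothetical; the summary does not describe how the system stores its audit trail.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: Dict[str, Any]) -> str:
    """SHA-256 over a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class DecisionLog:
    """Append-only log of analytical decisions; each entry hashes its predecessor."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    def record(self, agent: str, decision: str, rationale: str) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"agent": agent, "decision": decision, "rationale": rationale, "prev": prev}
        digest = _digest(body)
        self.entries.append({**body, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every hash and link; any edited entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


# Illustrative decisions only; not taken from the paper.
log = DecisionLog()
log.record("data_analysis", "exclude RTs < 200 ms", "preregistered exclusion rule")
log.record("data_analysis", "use Welch's t-test", "unequal group variances")
print(log.verify())  # True
log.entries[0]["decision"] = "exclude RTs < 150 ms"  # simulated tampering
print(log.verify())  # False
```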

Adaptability and Robustness

The framework demonstrates adaptability to unexpected outcomes and implementation challenges, dynamically modifying approaches and maintaining comprehensive audit trails. The MoA strategy and d-RAG memory system mitigate model-specific limitations and support long-duration reasoning.

Limitations

The current implementation is limited to online experiments; extending it to laboratory automation and robotics remains an engineering challenge. Visualizations occasionally require human refinement for aesthetic clarity. Sensitivity to early-stage errors (anchoring bias) persists, necessitating robust verification protocols during hypothesis generation and methodological design.

Safety and Security

The system incorporates multi-layered safety measures: code execution timeouts, memory and storage limits, package verification, isolated environments, semantic and entropy checks, API rate limiting, and activity logging. These safeguards are essential for autonomous operation and resource management.
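Two of the listed safeguards, execution timeouts and memory limits, can be sketched with Python's standard library by running generated code in a separate interpreter process. This sketch is POSIX-only: the memory cap relies on the `resource` module and `preexec_fn`, and is simply skipped where they are unavailable. The 5-second and 1 GiB limits are arbitrary assumptions. A subprocess on its own is not an isolated environment; containers or virtual machines would be needed for genuine isolation.

```python
import subprocess
import sys
from typing import Callable, Dict, Optional

try:
    import resource  # POSIX only; absent on Windows
except ImportError:
    resource = None


def _memory_cap(max_bytes: int) -> Callable[[], None]:
    """Build a pre-exec hook that caps the child's virtual address space."""
    def apply() -> None:
        _soft, hard = resource.getrlimit(resource.RLIMIT_AS)
        cap = max_bytes if hard == resource.RLIM_INFINITY else min(max_bytes, hard)
        resource.setrlimit(resource.RLIMIT_AS, (cap, hard))
    return apply


def run_untrusted(code: str, timeout_s: float = 5.0, max_bytes: int = 1 << 30) -> Dict[str, str]:
    """Run `code` in a fresh interpreter (-I ignores user env/site) with a timeout and memory cap."""
    hook: Optional[Callable[[], None]] = _memory_cap(max_bytes) if resource is not None else None
    try:
        proc = subprocess.run(
            [sys.executable, "-I", "-c", code],
            capture_output=True, text=True, timeout=timeout_s, preexec_fn=hook,
        )
    except subprocess.TimeoutExpired:  # run() kills the child before raising
        return {"status": "timeout", "stdout": "", "stderr": ""}
    status = "ok" if proc.returncode == 0 else "error"
    return {"status": status, "stdout": proc.stdout, "stderr": proc.stderr}


print(run_untrusted("print(6 * 7)")["stdout"].strip())             # 42
print(run_untrusted("while True: pass", timeout_s=1.0)["status"])  # timeout
```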

Theoretical and Societal Implications

The demonstration of autonomous empirical research challenges traditional epistemological frameworks, suggesting that valid scientific knowledge can be generated mechanistically without human-like understanding. The system's capacity for recursive hypothesis refinement and empirical validation points toward the development of Artificial General Science (AGS), where AI systems independently drive scientific inquiry across domains.

Societal implications include democratization of research capabilities, potential mitigation of publication bias through documentation of null results, and the need for new governance structures for attribution, accountability, and ethical oversight. The environmental impact of sustained LLM operation warrants further quantification.

Future Directions

Immediate extensions include application to other scientific domains, integration with laboratory automation, and enhancement of autonomous theory refinement mechanisms. Improving cognitive reasoning frameworks and error correction protocols will strengthen research quality. The recursive cycle of hypothesis generation, empirical testing, and knowledge updating embodied in the system provides a foundation for advancing AI capabilities beyond pattern recognition toward genuine scientific understanding.

Conclusion

"Virtuous Machines: Towards Artificial General Science" establishes a technical foundation for autonomous, agentic AI systems capable of executing complete scientific workflows, including real-world experimentation. The system demonstrates efficiency, rigor, and adaptability, with empirical validation in cognitive science. While limitations remain in conceptual nuance and physical implementation, the architecture and operational mechanisms provide a scalable pathway toward Artificial General Science. The work invites reconsideration of epistemological assumptions and underscores the need for robust ethical, safety, and governance frameworks as AI-driven scientific discovery becomes increasingly prevalent.