SSRL: Self-Search Reinforcement Learning (2508.10874v1)

Abstract: We investigate the potential of LLMs to serve as efficient simulators for agentic search tasks in reinforcement learning (RL), thereby reducing dependence on costly interactions with external search engines. To this end, we first quantify the intrinsic search capability of LLMs via structured prompting and repeated sampling, which we term Self-Search. Our results reveal that LLMs exhibit strong scaling behavior with respect to the inference budget, achieving high pass@k on question-answering benchmarks, including the challenging BrowseComp task. Building on these observations, we introduce Self-Search RL (SSRL), which enhances LLMs' Self-Search capability through format-based and rule-based rewards. SSRL enables models to iteratively refine their knowledge utilization internally, without requiring access to external tools. Empirical evaluations demonstrate that SSRL-trained policy models provide a cost-effective and stable environment for search-driven RL training, reducing reliance on external search engines and facilitating robust sim-to-real transfer. We draw the following conclusions: 1) LLMs possess world knowledge that can be effectively elicited to achieve high performance; 2) SSRL demonstrates the potential of leveraging internal knowledge to reduce hallucination; 3) SSRL-trained models integrate seamlessly with external search engines without additional effort. Our findings highlight the potential of LLMs to support more scalable RL agent training.

Summary

- The paper introduces SSRL, a framework that leverages LLMs’ intrinsic self-search capabilities to reduce dependency on external search engines.

- Experiments quantify self-search using metrics such as pass@k, showing that with enough repeated samples, smaller models approach the performance of much larger ones on QA tasks.

- SSRL incorporates techniques like information token masking and format-based rewards, enabling superior sim-to-real transfer and efficient reinforcement learning.

Self-Search Reinforcement Learning: Quantifying and Enhancing LLMs as World Knowledge Simulators

Introduction

This work introduces Self-Search Reinforcement Learning (SSRL), a framework for leveraging LLMs as efficient simulators for agentic search tasks in reinforcement learning (RL). The central hypothesis is that LLMs, by virtue of their pretraining on web-scale corpora, encode substantial world knowledge that can be elicited through structured prompting and reinforcement learning, thereby reducing or eliminating the need for costly interactions with external search engines during RL training. The paper systematically quantifies the intrinsic search capabilities of LLMs, proposes SSRL to enhance these capabilities, and demonstrates robust sim-to-real transfer, where skills acquired in a fully simulated environment generalize to real-world search scenarios.

Quantifying Intrinsic Self-Search Capabilities

The authors first formalize the "Self-Search" setting, where an LLM is prompted to simulate the entire search process—query generation, information retrieval, and reasoning—using only its internal parametric knowledge. The evaluation is conducted across a suite of knowledge-intensive QA benchmarks, including both single-hop and multi-hop tasks, as well as web browsing challenges.

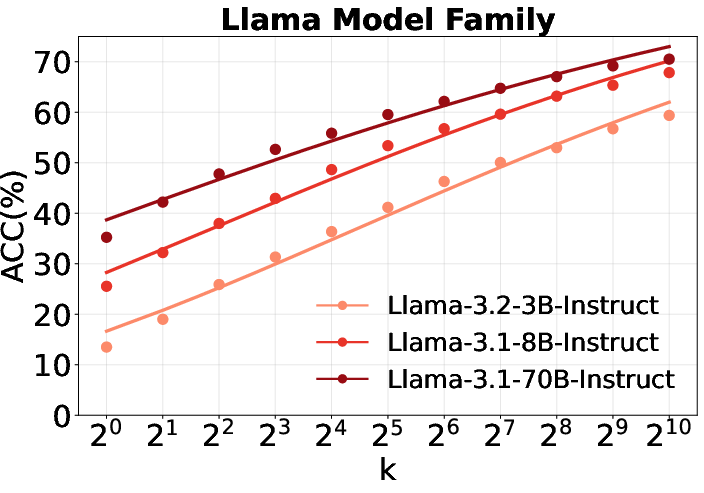

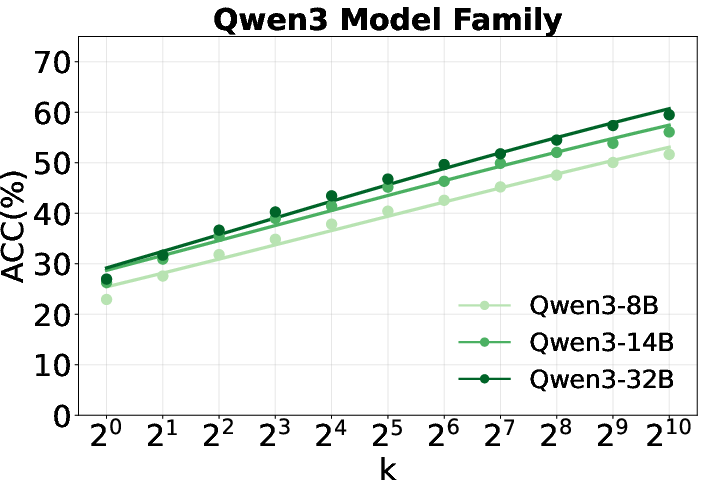

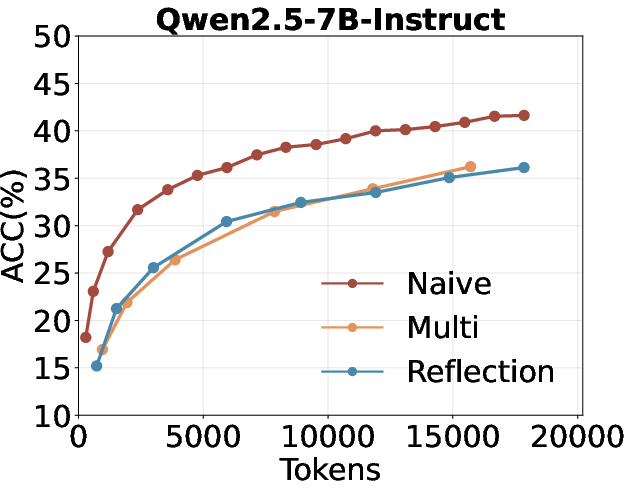

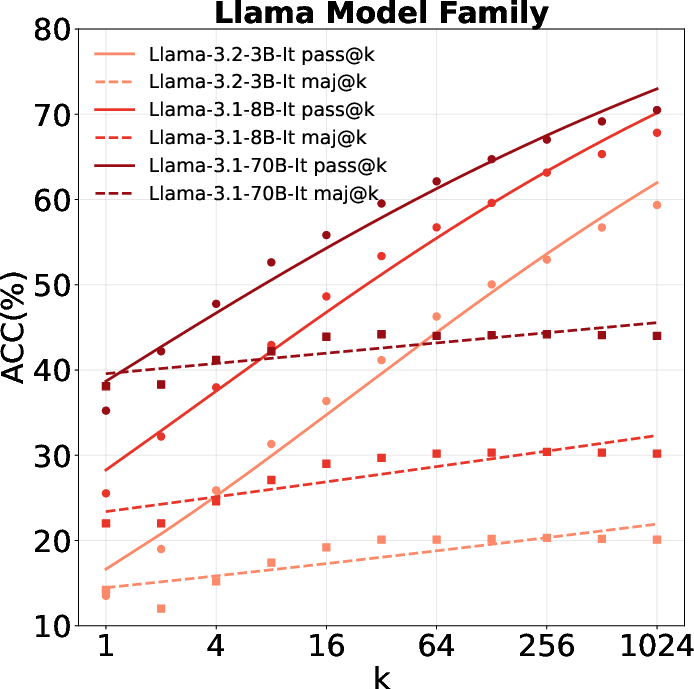

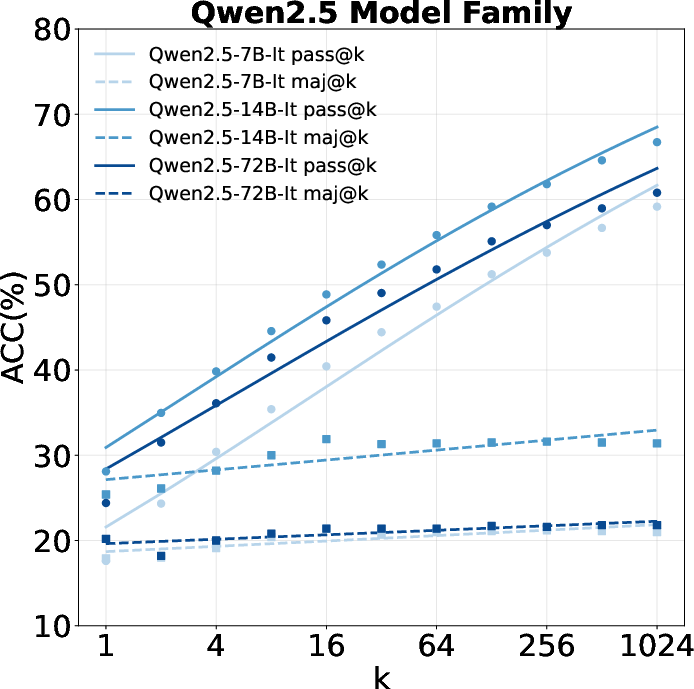

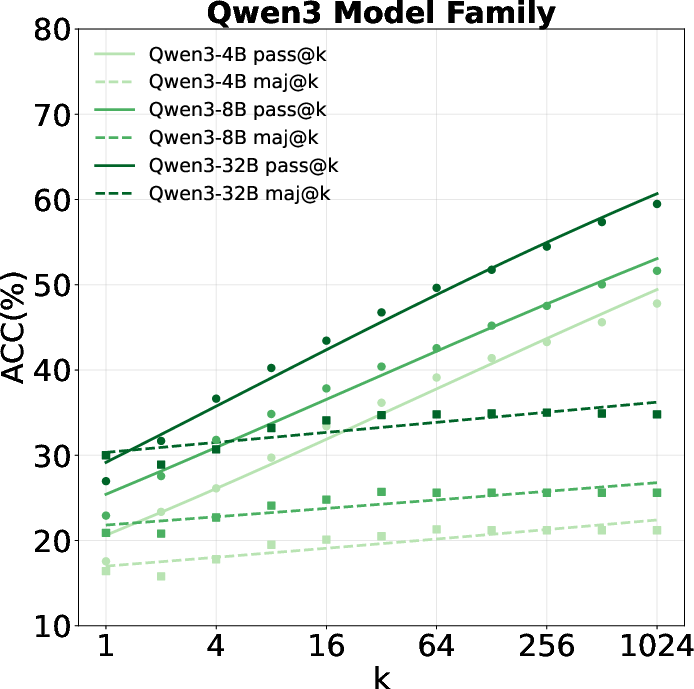

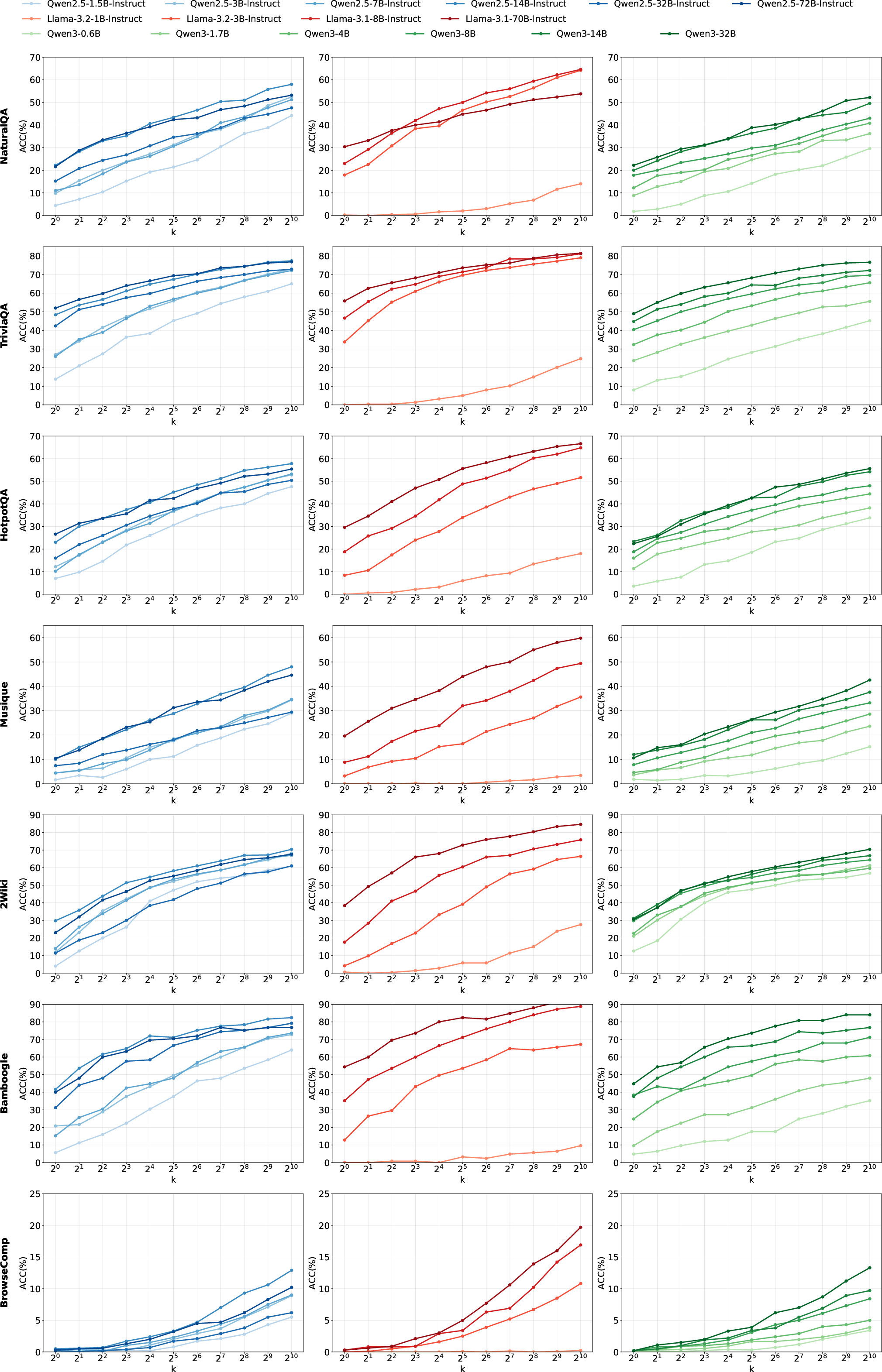

Performance is measured using pass@k, the probability that at least one of k sampled responses is correct. The paper empirically validates a scaling law for test-time self-search: as the number of samples increases, pass@k improves smoothly and predictably, following curves whose shape is specific to each model family.
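The overview does not spell out how pass@k is computed. A common choice, assumed here rather than taken from the paper, is the unbiased estimator popularized in code-generation evaluation: draw n ≥ k samples per question, count the c correct ones, and compute the probability that a random size-k subset contains at least one correct sample. A minimal Python sketch:

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples, c of which are correct.

    Computes 1 - C(n - c, k) / C(n, k), i.e. one minus the probability that
    a random size-k subset of the n samples contains no correct answer.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every size-k subset must include at least one correct sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Benchmark-level pass@k: average over questions given (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

For example, with 10 samples of which 3 are correct, `pass_at_k(10, 3, 1)` is 0.3, and any k above 7 yields 1.0 because every size-k subset must then include a correct sample.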

Figure 1: Scaling curves for repeated sampling across Qwen2.5, Llama, and Qwen3 families, showing consistent MAE improvements as sample size increases.

A key finding is that, with sufficient sampling, smaller models can approach the performance of much larger models, indicating that the gap between model sizes narrows under repeated sampling. Notably, Llama models outperform Qwen models in self-search settings, in contrast to prior results in mathematical reasoning, suggesting that reasoning priors and knowledge priors are not strongly correlated in this context.
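Why repeated sampling narrows the gap between model sizes can be seen in a toy model. Assume, purely for illustration, that every sample on a question is independently correct with a fixed probability p; then pass@k = 1 - (1 - p)^k, and the difference between a weaker and a stronger model shrinks toward zero as k grows. The per-sample accuracies below are hypothetical, not figures from the paper, and real benchmark curves flatten out because some questions have a per-sample success probability near zero for every model.

```python
def independent_pass_at_k(p: float, k: int) -> float:
    """pass@k for a question whose samples are i.i.d. correct with probability p."""
    return 1.0 - (1.0 - p) ** k


def pass_at_k_gap(p_small: float, p_large: float, k: int) -> float:
    """pass@k of the stronger model minus pass@k of the weaker model."""
    return independent_pass_at_k(p_large, k) - independent_pass_at_k(p_small, k)


# Hypothetical per-sample accuracies for a smaller (0.3) and larger (0.5) model.
for k in (1, 4, 16, 64):
    print(f"k={k:>2}  gap={pass_at_k_gap(0.3, 0.5, k):.4f}")
```

Under these assumptions the gap falls from 0.2 at k = 1 to about 0.18 at k = 4 and to well under 0.01 at k = 16.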

Analysis of Reasoning and Sampling Strategies

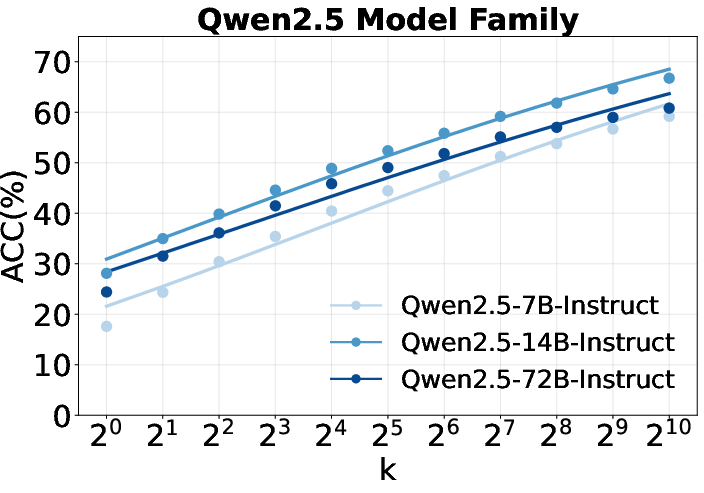

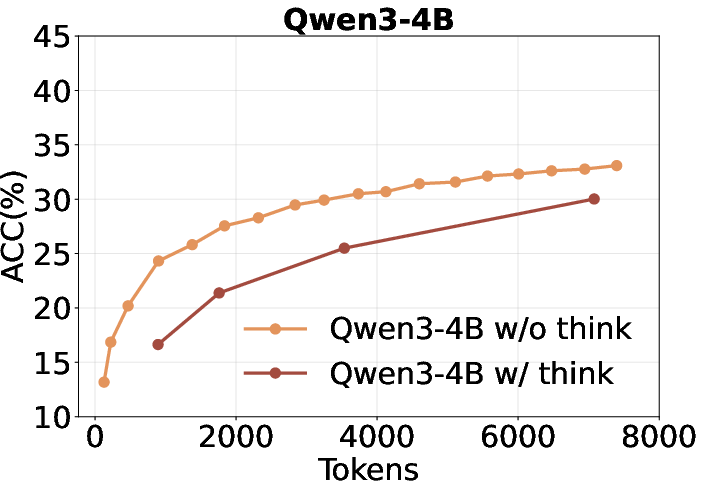

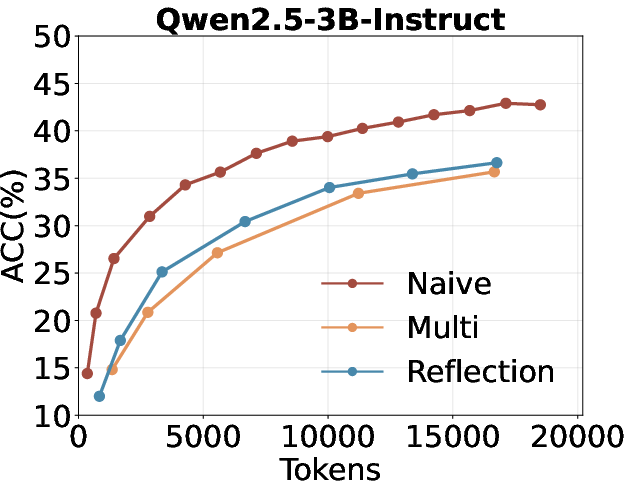

The paper further investigates the impact of reasoning token allocation, multi-turn search, and reflection on self-search performance. Contrary to trends in math and code tasks, increasing the number of "thinking" tokens or employing multi-turn/reflection-based strategies does not yield better results in self-search QA. Instead, short chain-of-thought (CoT) reasoning and naive repeated sampling are more effective and token-efficient.

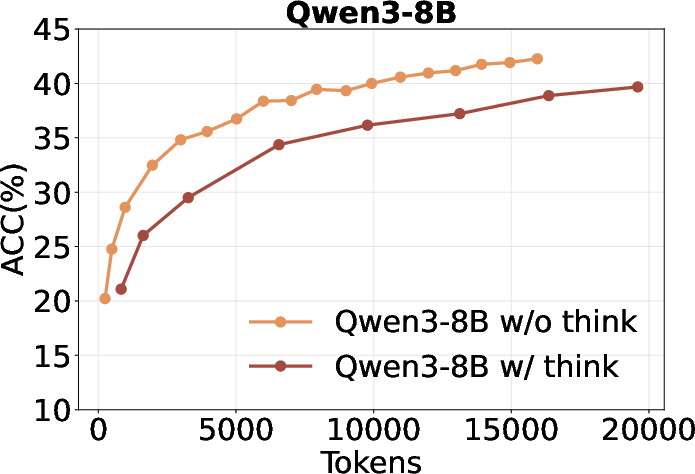

Figure 2: Qwen3 performance with and without forced thinking, showing diminishing returns from increased reasoning tokens.

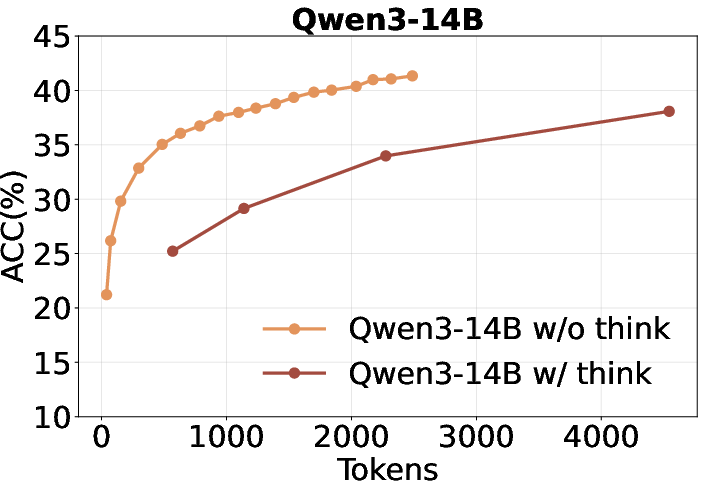

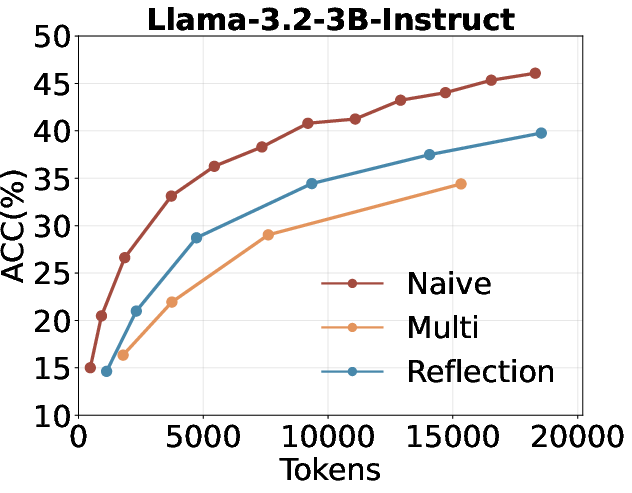

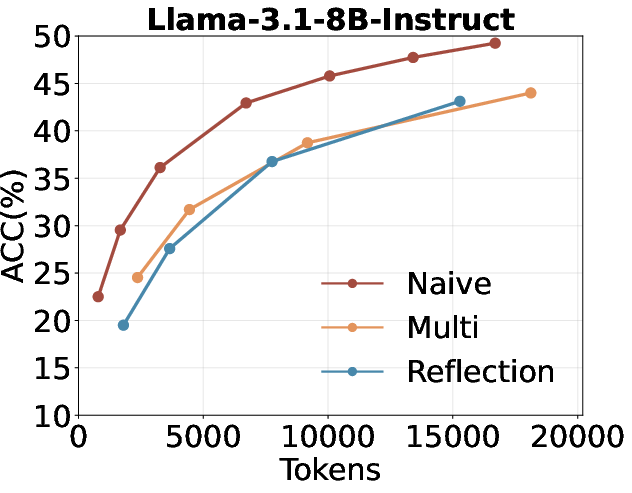

Figure 3: Comparison of repeated sampling, multi-turn self-search, and self-search with reflection under a fixed token budget.

Majority voting, a common test-time scaling strategy, provides only marginal gains in this setting, highlighting the challenge of reliably selecting the optimal answer from a set of plausible candidates when ground truth is unavailable.
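The difference between pass@k and majority voting is the difference between an oracle that recognizes any correct sample and a selector that must commit to one answer without ground truth. The sketch below contrasts the two on a single question; the normalization rule and the sample answers are illustrative assumptions.

```python
from collections import Counter


def normalize(answer: str) -> str:
    """Lowercase and collapse whitespace (real QA scripts often do more)."""
    return " ".join(answer.lower().split())


def majority_vote(answers: list[str]) -> str:
    """Most frequent normalized answer; ties go to the answer seen first."""
    return Counter(normalize(a) for a in answers).most_common(1)[0][0]


def oracle_hit(answers: list[str], gold: str) -> bool:
    """pass@k-style success: at least one sample matches the gold answer."""
    return any(normalize(a) == normalize(gold) for a in answers)


samples = ["Paris", "Lyon", "lyon", "Marseille"]
print(oracle_hit(samples, "Paris"))  # the correct answer is among the samples
print(majority_vote(samples))        # but the vote selects a wrong one
```

Here the correct answer is present, so pass@4 counts the question as solved, yet the vote picks "lyon" because a wrong answer happens to recur.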

Figure 4: Majority voting results across model families, indicating limited improvements over naive sampling.

Self-Search Reinforcement Learning (SSRL)

Building on the quantification of self-search capabilities, SSRL is introduced as a reinforcement learning framework that enhances LLMs' ability to extract and utilize their internal knowledge. The RL objective is formulated such that the policy model serves as both the reasoner and the internal search engine, with rewards based on both answer correctness and adherence to a structured reasoning format.
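The overview does not give the exact reward. As a hedged sketch, assume an exact-match outcome reward plus a small bonus for a well-formed think/search/information/answer trajectory; the tag rules in `format_ok` and the 0.1 weight are illustrative assumptions, not the paper's settings.

```python
import re

ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)
TAGS = ("think", "search", "information", "answer")


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace before exact-match comparison."""
    return " ".join(text.lower().split())


def format_ok(response: str) -> bool:
    """Hypothetical format rules for a structured self-search trajectory."""
    # Every opening tag needs a matching closing tag.
    if any(response.count(f"<{t}>") != response.count(f"</{t}>") for t in TAGS):
        return False
    # Searches and information blocks must come in equal numbers.
    if response.count("<search>") != response.count("<information>"):
        return False
    # Exactly one answer block, and it must end the response.
    return len(ANSWER_RE.findall(response)) == 1 and response.rstrip().endswith("</answer>")


def reward(response: str, gold: str, format_weight: float = 0.1) -> float:
    """Exact-match outcome reward plus a weighted format bonus (weights assumed)."""
    match = ANSWER_RE.search(response)
    correct = match is not None and normalize(match.group(1)) == normalize(gold)
    return float(correct) + format_weight * float(format_ok(response))
```

Keeping the format term small relative to correctness means a tidy but wrong trajectory never outscores a correct one.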

Key components of SSRL include:

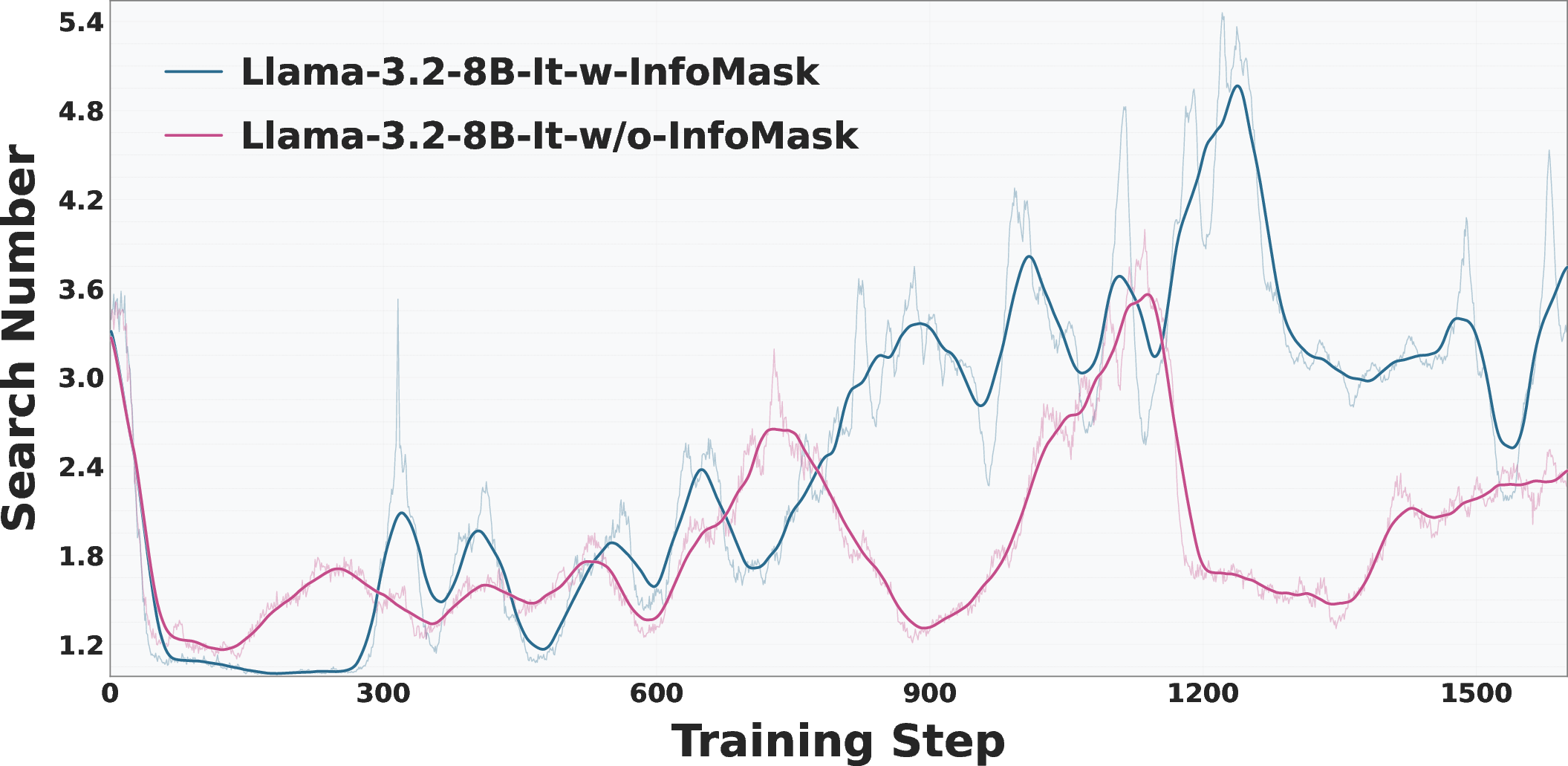

- Information Token Masking: Masking self-generated information tokens during training improves generalization and encourages deeper reasoning.

- Format-based Reward: Enforcing a structured output format (think/search/information/answer) stabilizes training and improves performance.

- On-policy Self-Search: Using the policy model itself as the information provider is critical; freezing the provider leads to rapid performance collapse.
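Information token masking can be sketched as a loss mask over the response tokens: positions inside a self-generated `<information>` block contribute nothing to the policy loss, so the model is optimized on its reasoning, queries, and answers rather than on reproducing its own simulated retrieval. Assumptions: tokens arrive as strings, each tag is a single token, and the tags themselves are masked too; a real implementation would operate on token ids and per-token log-probability terms.

```python
def information_mask(tokens: list[str]) -> list[int]:
    """Return 1 for tokens that enter the loss, 0 for masked tokens.

    Hypothetically assumes '<information>' and '</information>' are single
    tokens; they and everything between them are masked.
    """
    mask: list[int] = []
    inside = False
    for tok in tokens:
        if tok == "<information>":
            inside = True
            mask.append(0)
        elif tok == "</information>":
            inside = False
            mask.append(0)
        else:
            mask.append(0 if inside else 1)
    return mask


def masked_mean(values: list[float], mask: list[int]) -> float:
    """Average per-token loss terms over unmasked positions only."""
    kept = [v for v, m in zip(values, mask) if m]
    return sum(kept) / len(kept) if kept else 0.0
```

Averaging only over kept positions keeps the loss scale independent of how long the simulated information blocks happen to be.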

Empirical results demonstrate that SSRL-trained models consistently outperform baselines relying on external search engines or simulated search environments, both in terms of accuracy and training efficiency.

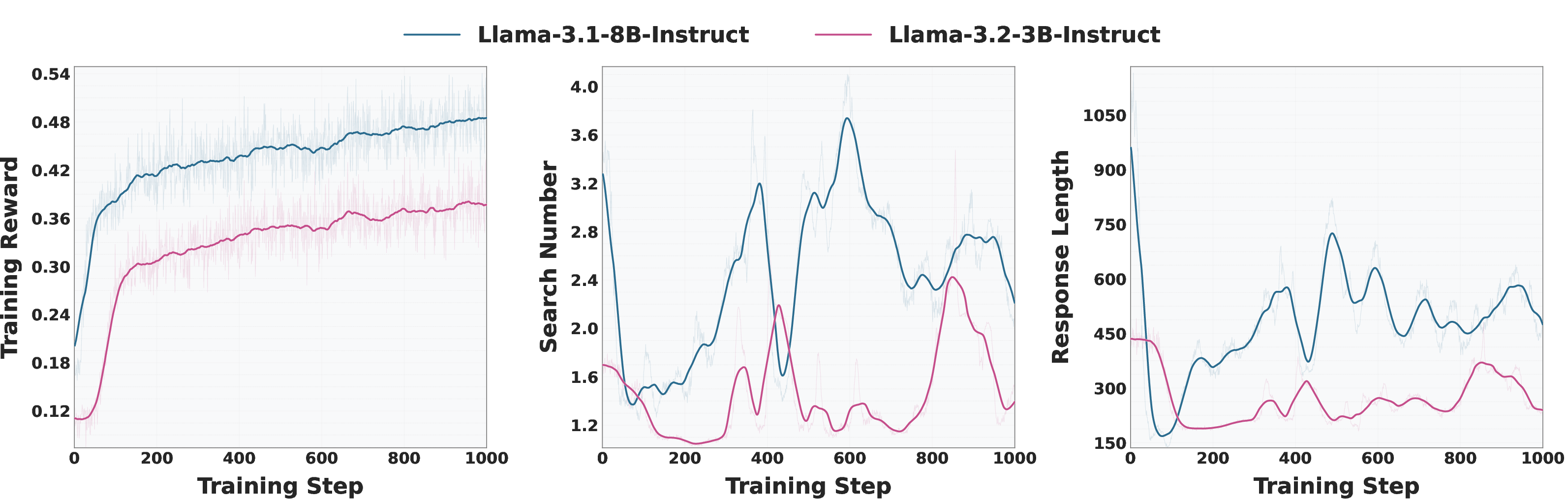

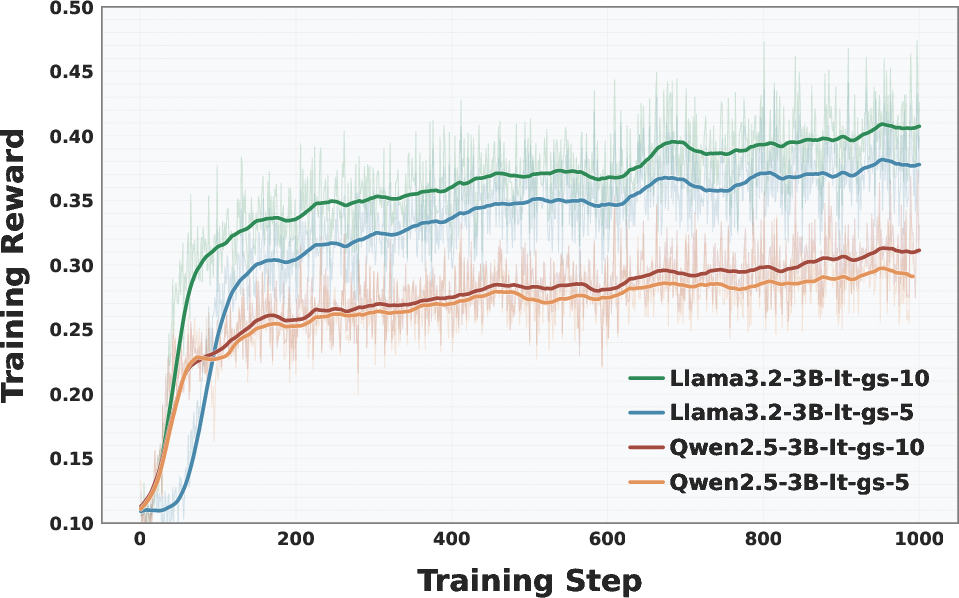

Figure 5: Training curves for Llama-3.2-3B-Instruct and Llama-3.1-8B-Instruct, showing reward, response length, and search count dynamics.

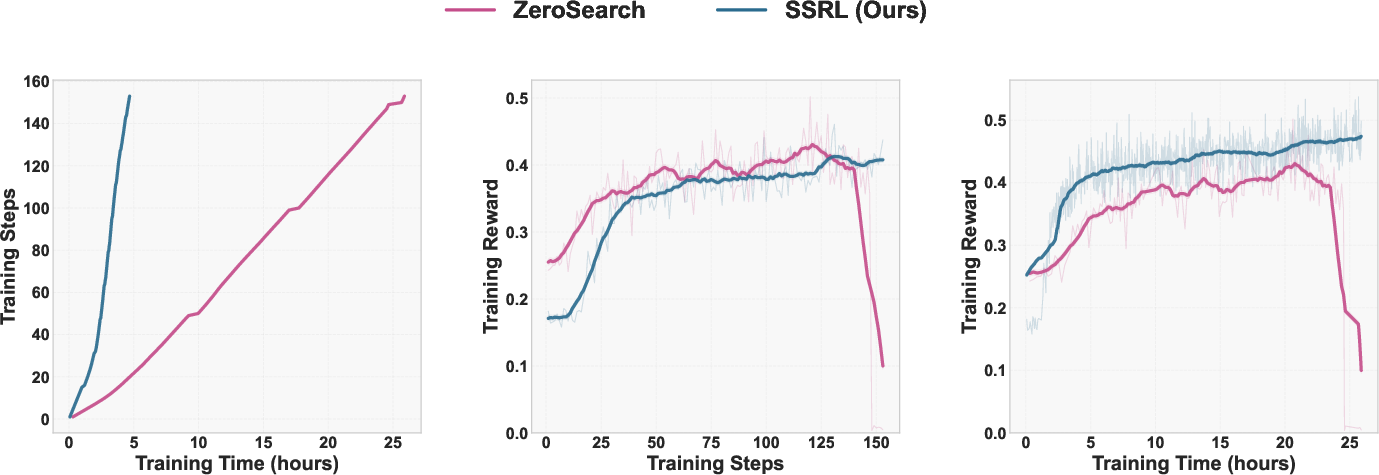

Figure 6: SSRL vs. ZeroSearch training efficiency and reward progression, with SSRL achieving faster convergence and greater robustness.

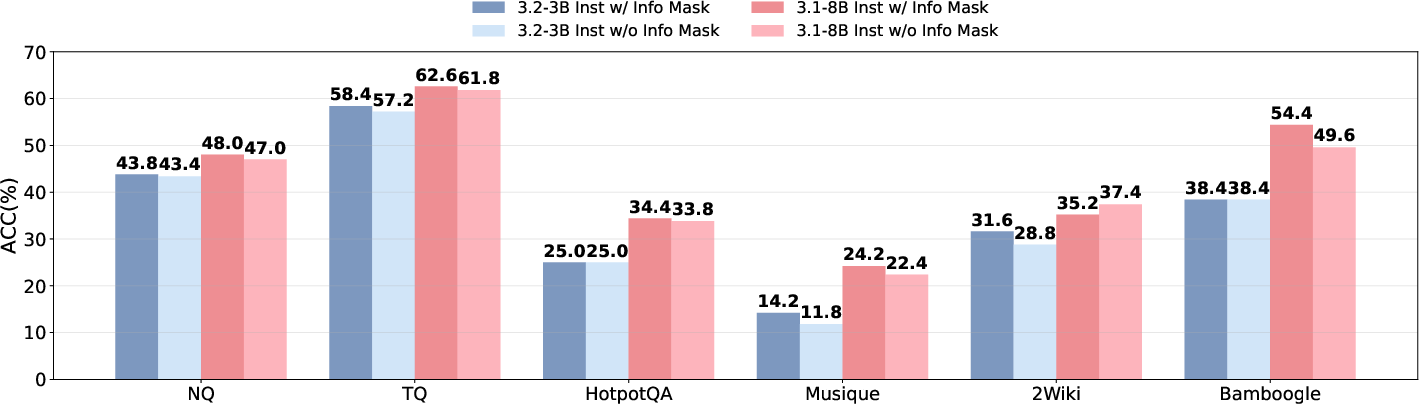

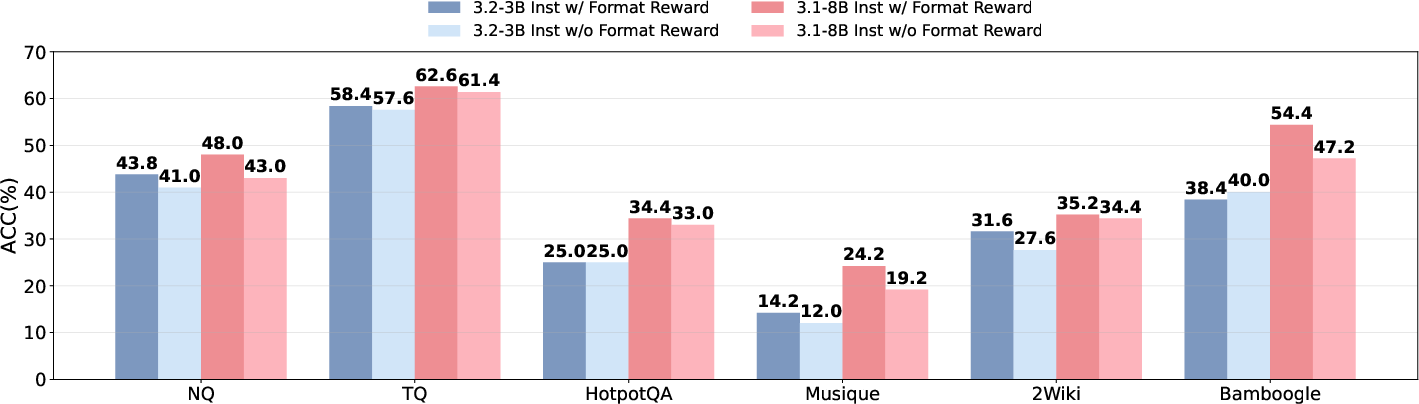

Ablation studies confirm the benefits of information masking and format reward, with both components contributing to improved generalization and more coherent reasoning trajectories.

Figure 7: Performance impact of information masking during training.

Figure 8: Performance impact of format-based reward, with and without information masking.

Sim-to-Real Generalization

A central claim of the paper is that SSRL-trained models, despite being trained entirely in a simulated (self-search) environment, can be seamlessly adapted to real search scenarios. This is validated by replacing self-generated information with results from actual search engines at inference time (Sim2Real). SSRL models demonstrate strong sim-to-real transfer, outperforming prior RL baselines and requiring fewer real search queries to achieve comparable or superior performance.
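The Sim2Real swap amounts to making the information provider pluggable while the policy and its output format stay unchanged. A minimal sketch, assuming a hypothetical `generate` callable that extends the trajectory until it emits `</search>` or `</answer>`, and a hypothetical `search` function that returns text snippets; neither is an API from the paper.

```python
import re
from typing import Callable

# Hypothetical interfaces for illustration only.
Generate = Callable[[str], str]       # continues a trajectory by one chunk
Provider = Callable[[str, str], str]  # (trajectory, query) -> information text

SEARCH_RE = re.compile(r"<search>(.*?)</search>\s*$", re.DOTALL)


def self_search_provider(generate: Generate) -> Provider:
    """Simulated setting: the policy itself writes the information block."""
    def provide(trajectory: str, query: str) -> str:
        # The query is already in the trajectory, so only the prefix is needed.
        text = generate(trajectory + "<information>")
        return text.split("</information>", 1)[0].strip()
    return provide


def search_engine_provider(search: Callable[[str], list[str]], top_k: int = 3) -> Provider:
    """Sim2Real setting: information comes from an external search function."""
    def provide(trajectory: str, query: str) -> str:
        return "\n".join(search(query)[:top_k])
    return provide


def rollout(generate: Generate, provider: Provider, prompt: str, max_turns: int = 5) -> str:
    """Alternate policy chunks and information blocks until an answer appears."""
    trajectory = prompt
    for _ in range(max_turns):
        chunk = generate(trajectory)
        trajectory += chunk
        if chunk.rstrip().endswith("</answer>"):
            break
        match = SEARCH_RE.search(chunk)
        if match is None:
            break  # neither a search nor an answer: stop this rollout
        info = provider(trajectory, match.group(1).strip())
        trajectory += f"<information>{info}</information>"
    return trajectory
```

Because only the provider argument changes, the same trained policy can be run in the simulated and the real setting without modification.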

Figure 9: Repeated sampling results across seven benchmarks, illustrating the scaling law and upper bounds of self-search.

Figure 10: Left: Search count comparison with and without information mask. Right: Group size ablation for GRPO.

An entropy-guided hybrid strategy is also proposed, where the model selectively invokes external search only when internal uncertainty is high, further reducing reliance on real search engines without sacrificing accuracy.
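The overview does not say exactly how uncertainty is measured in the hybrid strategy. One plausible sketch, with every specific assumed: average the Shannon entropy of the model's next-token distributions over the tokens of a generated search query, and send that query to a real search engine only when the average exceeds a hypothetical threshold.

```python
import math


def token_entropy(probs: list[float]) -> float:
    """Shannon entropy (in nats) of one next-token distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def mean_entropy(dists: list[list[float]]) -> float:
    """Average entropy over the distributions of a span of generated tokens."""
    if not dists:
        raise ValueError("need at least one token distribution")
    return sum(token_entropy(d) for d in dists) / len(dists)


def choose_provider(dists: list[list[float]], threshold: float = 1.0) -> str:
    """Route to 'external' search when uncertain, else keep 'self' search.

    The threshold value is a hypothetical placeholder, not from the paper.
    """
    return "external" if mean_entropy(dists) > threshold else "self"
```

A near-uniform distribution over four tokens has entropy ln 4 ≈ 1.39 nats and would trigger a real search under this threshold, while a sharply peaked one stays with self-search.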

Test-Time RL and Algorithmic Robustness

The paper explores applying unsupervised RL algorithms such as Test-Time Reinforcement Learning (TTRL) in the self-search setting and finds that TTRL can yield substantial performance gains, particularly for smaller models. However, TTRL-trained models may become biased toward over-relying on internal knowledge, which makes adaptation to real search environments more difficult.
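TTRL needs no ground-truth labels: it takes the majority answer across a group of rollouts as a pseudo-label and rewards rollouts that agree with it. The sketch below shows that idea with simple string normalization (an assumption). It also makes the bias risk concrete, since the reward only ever reinforces the answer the model already produces most often.

```python
from collections import Counter


def ttrl_rewards(answers: list[str]) -> list[float]:
    """Label-free rewards: 1.0 if a rollout matches the group's majority answer."""
    normalized = [" ".join(a.lower().split()) for a in answers]
    pseudo_label = Counter(normalized).most_common(1)[0][0]
    return [1.0 if a == pseudo_label else 0.0 for a in normalized]
```

If the majority answer is wrong, the correct minority rollouts are penalized, which is why such training can entrench whatever the model's internal knowledge already favors.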

A comprehensive comparison of RL algorithms (GRPO, PPO, DAPO, KL-Cov, REINFORCE++) shows that group-based policy optimization methods such as GRPO and DAPO are more effective than standard PPO or REINFORCE++ in this domain, likely because they efficiently exploit multiple rollouts per prompt in a fully simulated training regime that never queries an external search engine.
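Group-based methods such as GRPO score each rollout relative to the other rollouts sampled for the same prompt, which removes the need for a learned value function. A minimal sketch of the group-normalized advantage follows; using the population standard deviation and the `eps` value are assumptions, since implementations differ.

```python
import statistics


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Advantage of each rollout: (reward - group mean) / (group std + eps)."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]
```

A group in which every rollout receives the same reward yields all-zero advantages and therefore no learning signal; DAPO's dynamic sampling addresses this by filtering out such groups.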

Implications and Future Directions

The findings have several important implications:

- LLMs as World Models: LLMs can serve as effective simulators of world knowledge for agentic RL, enabling scalable, cost-effective training without external search dependencies.

- Limits of Internal Knowledge: While LLMs encode substantial factual and procedural knowledge, reliably extracting the optimal answer remains nontrivial, and naive selection strategies (e.g., majority voting) are insufficient.

- Sim-to-Real Transfer: Skills acquired via SSRL in a fully simulated environment generalize robustly to real-world search tasks, provided that output formats are aligned.

- Algorithmic Considerations: Structured rewards, information masking, and on-policy training are critical for stable and effective SSRL. Group-based RL algorithms are particularly well-suited for this setting.

Future research should address the remaining challenges in answer selection, explore more sophisticated verification and selection mechanisms, and investigate the integration of SSRL with other modalities and agentic capabilities. The potential for LLMs to serve as general-purpose world models in broader RL and planning contexts remains a promising avenue for further exploration.

Conclusion

This work establishes that LLMs possess significant untapped capacity as implicit world models for search-driven tasks. SSRL provides a principled framework for quantifying and enhancing this capacity, enabling LLMs to function as both reasoners and knowledge retrievers without external dependencies. The demonstrated sim-to-real transfer and algorithmic robustness position SSRL as a practical approach for scalable RL agent training, with broad implications for the development of autonomous, knowledge-intensive AI systems.

Related Papers

- On the Emergence of Thinking in LLMs I: Searching for the Right Intuition (2025)

- R1-Searcher: Incentivizing the Search Capability in LLMs via Reinforcement Learning (2025)

- Search-R1: Training LLMs to Reason and Leverage Search Engines with Reinforcement Learning (2025)

- ReSearch: Learning to Reason with Search for LLMs via Reinforcement Learning (2025)

- DeepResearcher: Scaling Deep Research via Reinforcement Learning in Real-world Environments (2025)

- ZeroSearch: Incentivize the Search Capability of LLMs without Searching (2025)

- An Empirical Study on Reinforcement Learning for Reasoning-Search Interleaved LLM Agents (2025)

- TreeRL: LLM Reinforcement Learning with On-Policy Tree Search (2025)

- MMSearch-R1: Incentivizing LMMs to Search (2025)

- Beyond Ten Turns: Unlocking Long-Horizon Agentic Search with Large-Scale Asynchronous RL (2025)

Authors (18)
