- The paper introduces DxDirector-7B as a novel LLM that directs the diagnostic process with minimal physician input.

- It employs a three-stage training methodology: continued pre-training, instruction-tuning, and step-level strategy preference optimization for refined clinical reasoning.

- Experimental results show superior diagnostic accuracy and parameter efficiency compared with existing medically adapted and commercial LLMs.

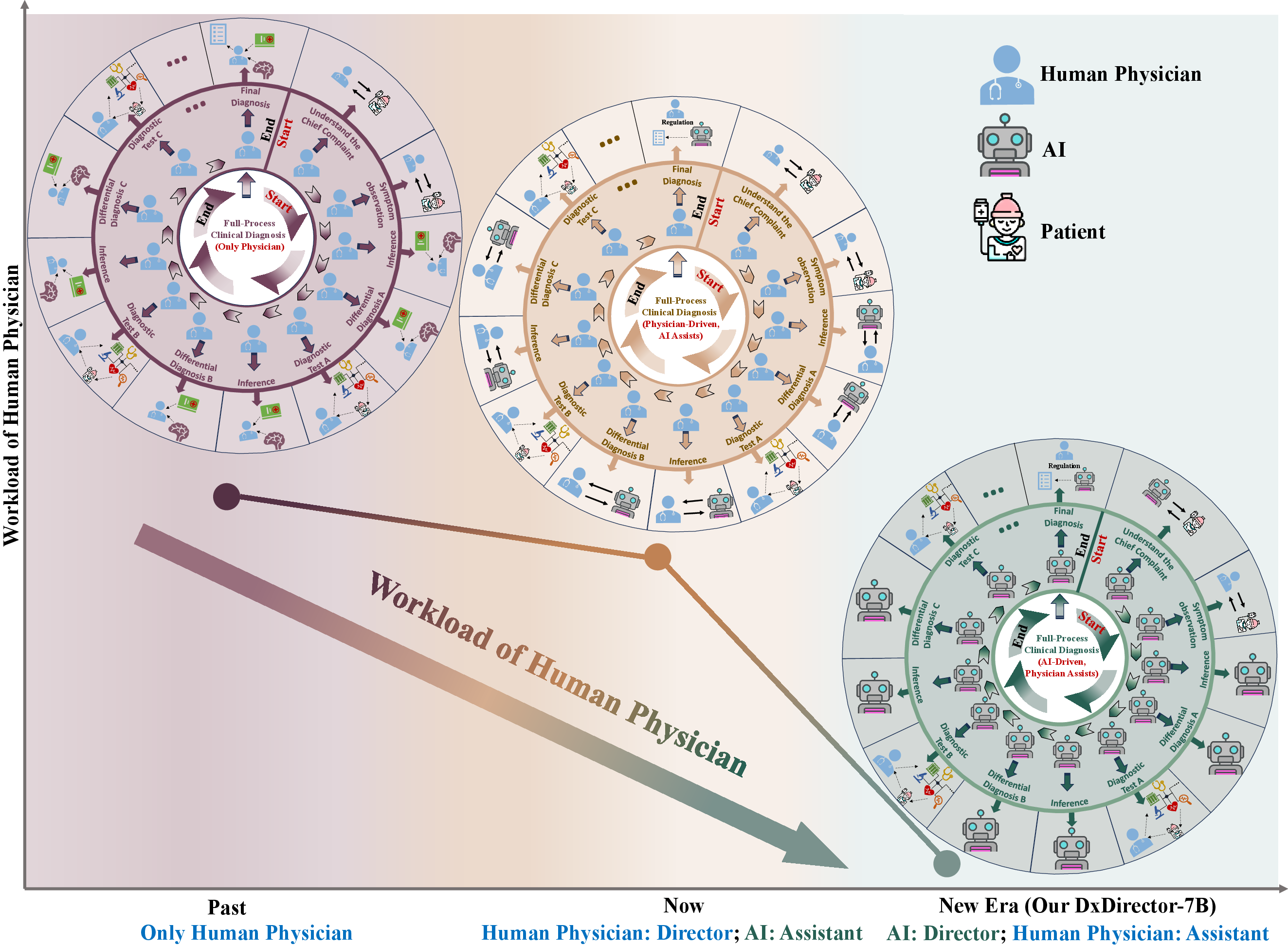

Reverse Physician-AI Relationship: Full-process Clinical Diagnosis Driven by a Large Language Model

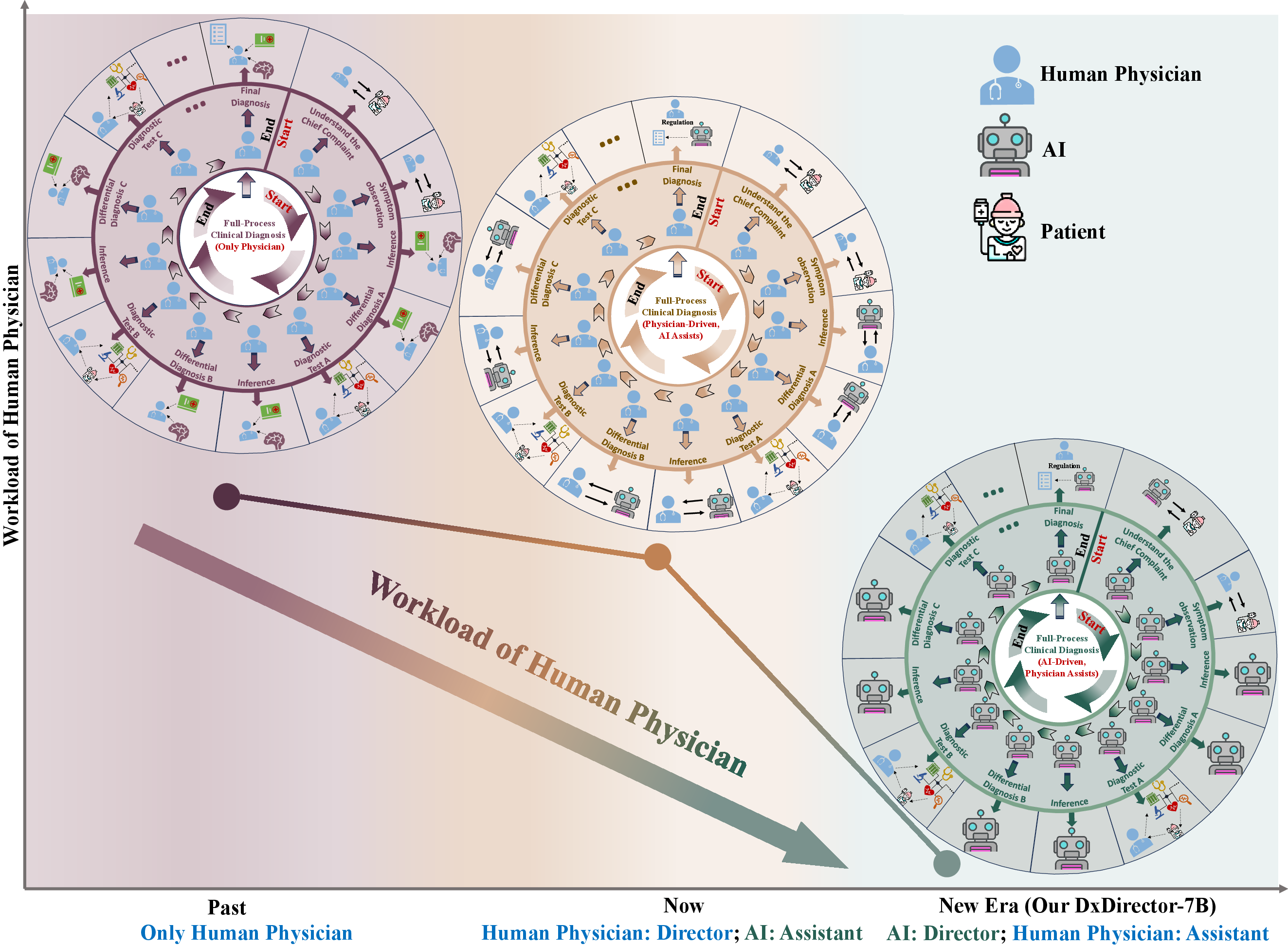

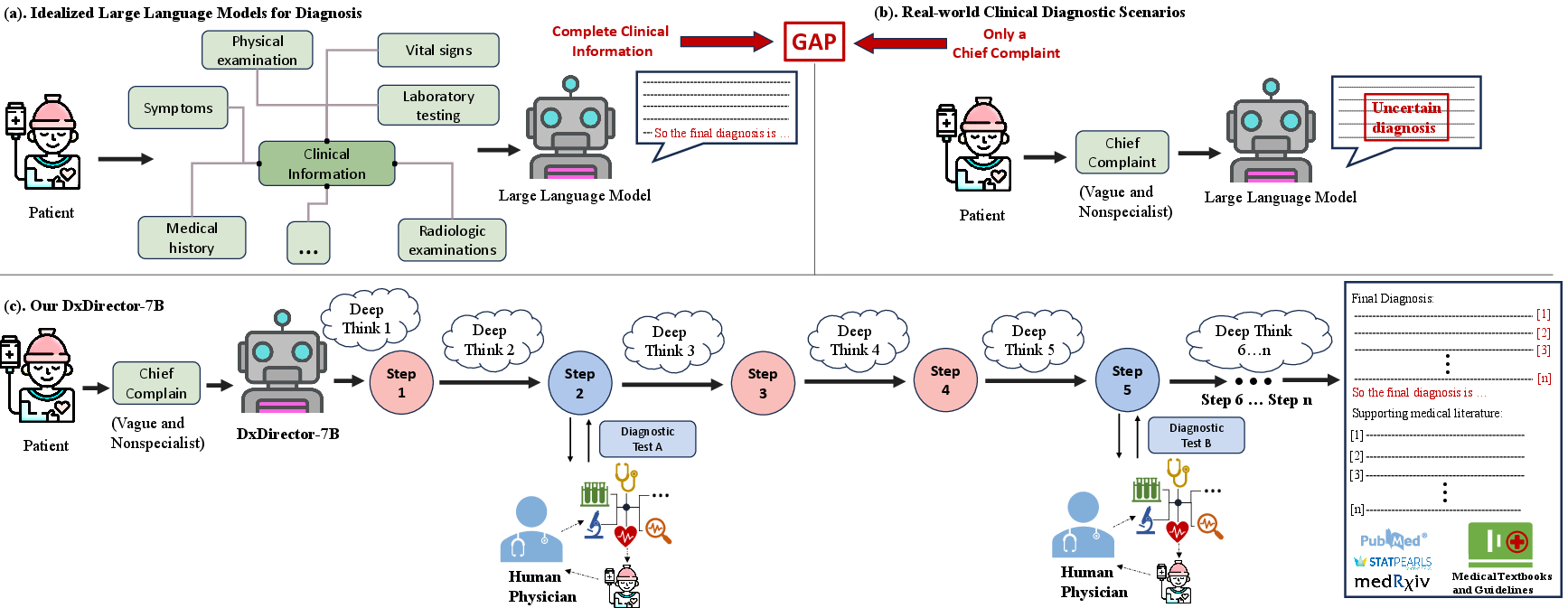

The paper "Reverse Physician-AI Relationship: Full-process Clinical Diagnosis Driven by a Large Language Model" (2508.10492) presents DxDirector-7B, an LLM for clinical diagnosis that inverts the conventional roles: the AI becomes the director of the diagnostic process, and human physicians become its assistants. This departs fundamentally from the existing paradigm, in which AI serves only as an adjunct to human decision-making. Using deep, step-by-step "slow thinking" reasoning, DxDirector-7B is designed to drive the entire diagnostic workflow, from an ambiguous chief complaint to a final diagnosis, involving the physician only when necessary in order to substantially reduce physician workload.

Figure 1: Workflow of full-process diagnosis in the past, at present, and in the new era. The inner circle represents directorship of the multi-step dynamic clinical diagnosis (deciding which clinical problem to address at each step), and the outer circle represents execution of the corresponding steps (solving those clinical problems).
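To make the split between directorship (inner circle) and execution (outer circle) concrete, here is a minimal Python sketch of such a loop, assuming a simple interface in which the director LLM at each step either poses a question it answers itself, delegates a question to the physician, or commits to a diagnosis. The names `run_diagnosis`, `Step`, `Trajectory`, and the action strings `"think"`, `"ask_physician"`, and `"diagnose"` are hypothetical illustrations, not the paper's actual interface; the model and the physician are stubbed out as plain functions.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Step:
    question: str   # clinical question chosen by the director (inner circle)
    executor: str   # who solves it (outer circle): "llm" or "physician"
    answer: str


@dataclass
class Trajectory:
    chief_complaint: str
    steps: List[Step] = field(default_factory=list)
    diagnosis: Optional[str] = None

    def physician_steps(self) -> int:
        # Number of steps delegated to the human physician.
        return sum(1 for s in self.steps if s.executor == "physician")


# Hypothetical interfaces standing in for the LLM and the human physician.
Director = Callable[[Trajectory], Tuple[str, str]]  # trajectory -> (action, text)
Solver = Callable[[str], str]                        # question -> answer


def run_diagnosis(complaint: str, director: Director, llm_solve: Solver,
                  physician_solve: Solver, max_steps: int = 20) -> Trajectory:
    """Director loop: the LLM decides every step; the physician only executes delegated ones."""
    traj = Trajectory(chief_complaint=complaint)
    for _ in range(max_steps):
        action, text = director(traj)
        if action == "diagnose":
            traj.diagnosis = text
            break
        if action == "ask_physician":
            traj.steps.append(Step(text, "physician", physician_solve(text)))
        else:  # "think": the LLM answers its own question
            traj.steps.append(Step(text, "llm", llm_solve(text)))
    return traj


# Toy usage with a scripted director (placeholder strings, not clinical content).
def scripted_director(traj: Trajectory) -> Tuple[str, str]:
    plan = [("think", "Which differentials fit the complaint?"),
            ("ask_physician", "Please report the examination findings."),
            ("diagnose", "diagnosis-X")]
    return plan[len(traj.steps)]


result = run_diagnosis("chest pain", scripted_director,
                       llm_solve=lambda q: "llm reasoning",
                       physician_solve=lambda q: "exam findings")
print(result.diagnosis, result.physician_steps())  # prints: diagnosis-X 1
```

The loop counts delegated steps explicitly because physician involvement is one of the quantities the approach aims to minimize.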

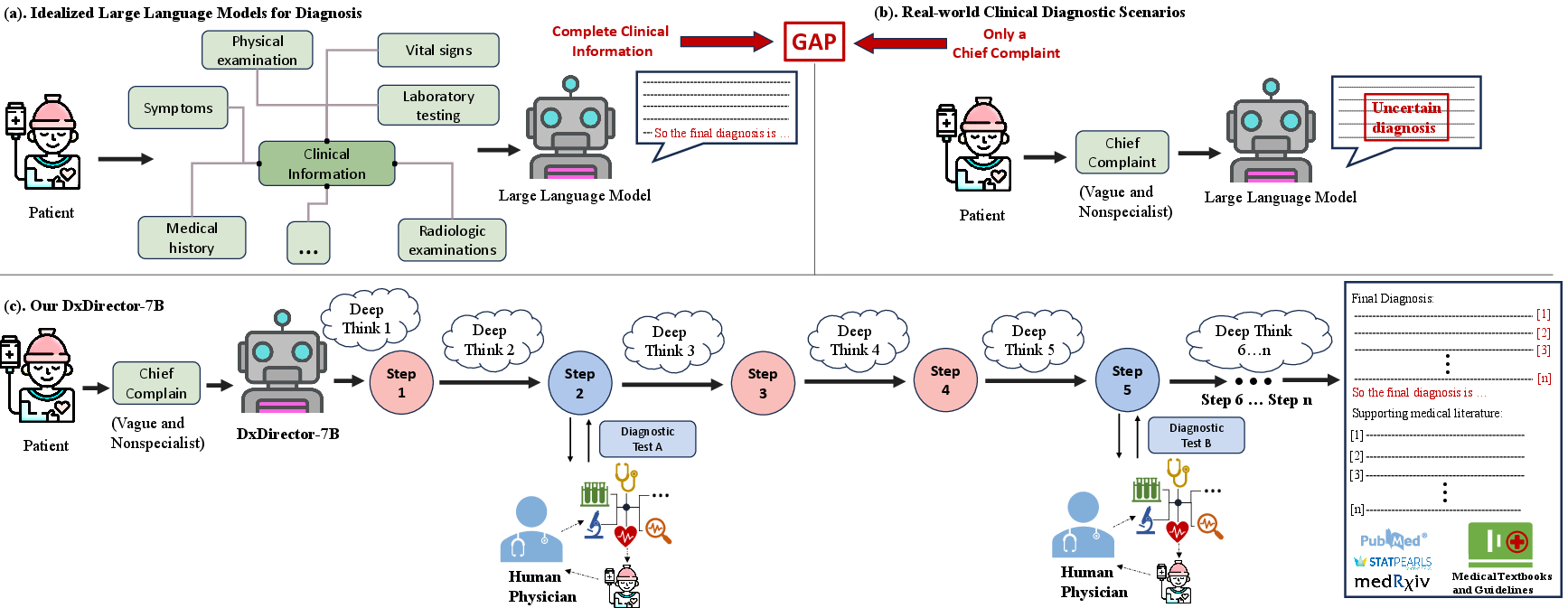

Methodology and System Architecture

The paper introduces DxDirector-7B, a 7-billion-parameter LLM optimized specifically for medical diagnosis. The authors train it in three stages: (1) continued pre-training on medical data, (2) instruction-tuning for full-process diagnosis starting from vague chief complaints, and (3) step-level strategy preference optimization.

Stage 1: Continued Pre-training

DxDirector-7B is initialized from Llama-2-7B and undergoes extensive continued pre-training on a corpus of medical literature, including clinical guidelines, PubMed abstracts, and full-text papers. This stage uses the standard next-token-prediction objective, a cross-entropy loss on each next token, which is self-supervised: the model acquires essential medical knowledge directly from the raw text, without additional labels.
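For a token sequence x_1, ..., x_T, the objective is L(θ) = -(1/(T-1)) Σ_t log p_θ(x_{t+1} | x_1, ..., x_t). The sketch below computes this loss from per-position logits in plain Python. It illustrates the standard objective only, with toy inputs in place of a real model and tokenizer, and is not the authors' training code.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def next_token_loss(logits: Sequence[Sequence[float]], tokens: Sequence[int]) -> float:
    """Mean cross-entropy of predicting tokens[t + 1] from logits[t].

    logits[t] is the model's score vector over the vocabulary after it has
    read tokens[0..t]; the last position has no next token and is skipped.
    """
    assert len(logits) == len(tokens) >= 2
    losses = [-log_softmax(logits[t])[tokens[t + 1]] for t in range(len(tokens) - 1)]
    return sum(losses) / len(losses)


# Uniform logits over a 3-token vocabulary: each prediction costs ln(3).
uniform = [[0.0, 0.0, 0.0]] * 3
print(next_token_loss(uniform, [0, 1, 2]))  # ≈ 1.0986 (ln 3)
```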

Stage 2: Instruction-Tuning

The instruction-tuning stage teaches DxDirector-7B to emulate human "slow thinking" and to carry out full-process clinical diagnosis. The training set, built from MedQA, comprises 10,178 high-quality instruction-response pairs. The authors use GPT-4o and o1-preview to transform the MedQA data into full-process diagnostic trajectories and to inject deep-thinking reasoning into them. Task-specific prompts guide this data construction, yielding stepwise reasoning traces that clearly delineate the roles of the LLM and the human physician. At each step, the model formulates a clinical question and then produces the corresponding answer, either through its own reasoning or, when necessary, by proactively requesting help from the physician. Training on these traces teaches DxDirector-7B both deep, nuanced clinical reasoning and when to involve the physician.
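One plausible way to turn such a trajectory into instruction-response pairs is sketched below. The serialization format (the `[llm]`/`[physician]` tags and the `Chief complaint:` prefix) and the helper `to_instruction_pairs` are assumptions for illustration only; the paper's actual data format may differ, for example by training on whole trajectories rather than per-step pairs.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Step:
    question: str
    executor: str  # "llm" or "physician" (hypothetical role tags)
    answer: str


def to_instruction_pairs(complaint: str, steps: List[Step],
                         diagnosis: str) -> List[Dict[str, str]]:
    """Hypothetical conversion: one (context -> next step) pair per diagnostic step."""
    pairs = []
    context = f"Chief complaint: {complaint}"
    for step in steps:
        if step.executor == "llm":
            # The model both poses and answers its own question ("slow thinking").
            response = f"[llm] Q: {step.question}\nA: {step.answer}"
        else:
            # The model only poses the question; the physician's answer is an
            # external observation, so it is not part of the training target.
            response = f"[physician] Q: {step.question}"
        pairs.append({"instruction": context, "response": response})
        # The full step, including any physician answer, becomes context for later steps.
        context += f"\n[{step.executor}] Q: {step.question}\nA: {step.answer}"
    pairs.append({"instruction": context, "response": f"Final diagnosis: {diagnosis}"})
    return pairs


pairs = to_instruction_pairs(
    "cough",
    [Step("Q1", "llm", "A1"), Step("Q2", "physician", "A2")],
    "D",
)
```

The design choice worth noting is that for physician-executed steps the target contains only the question: the model should learn to ask for the physician's input, not to predict it.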

Figure 2: Comparison between our DxDirector-7B and existing LLMs in full-process clinical diagnosis.

Stage 3: Step-Level Strategy Preference Optimization

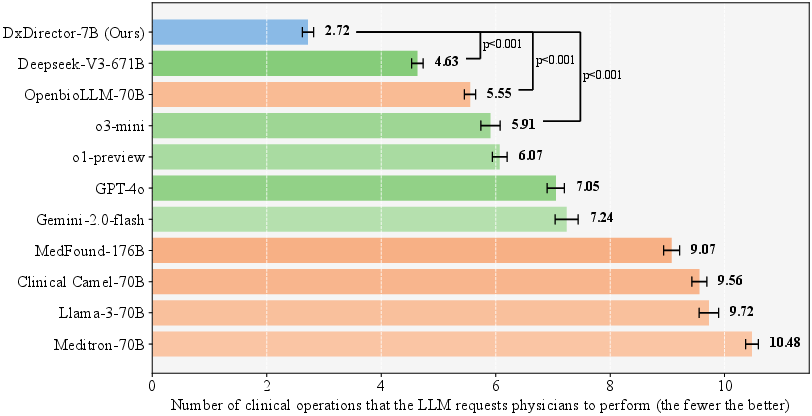

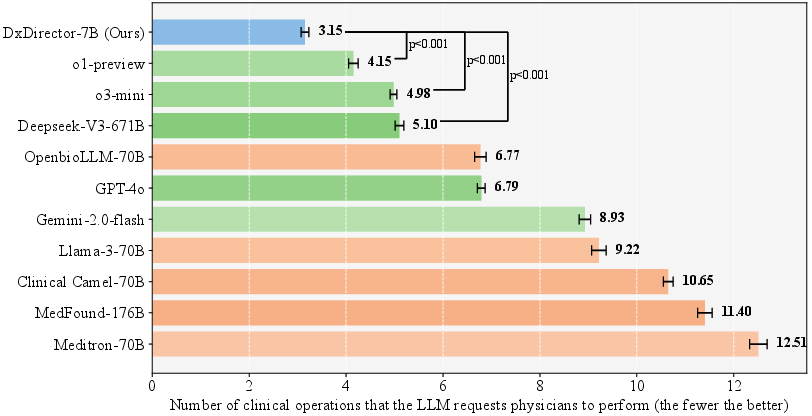

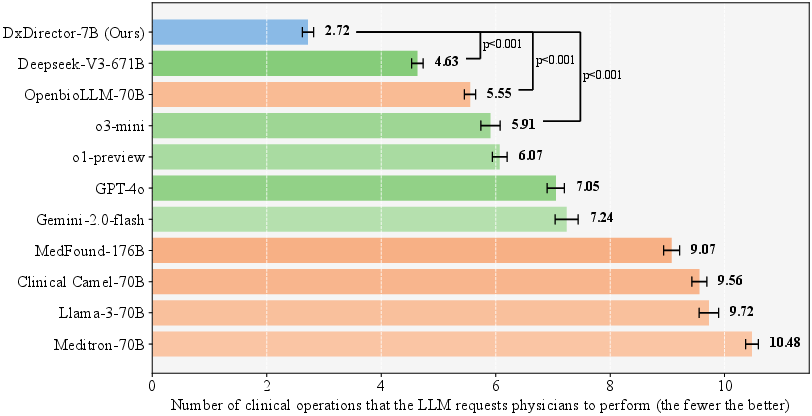

The authors introduce Step-Level Strategy Preference Optimization, a reinforcement-learning-inspired preference technique that pushes DxDirector-7B to select the best strategy at each diagnostic step. They construct datasets of multiple reasoning paths, each assigned a reward based on final diagnostic accuracy and the amount of physician involvement. The model is then trained with the Direct Preference Optimization (DPO) objective to prefer higher-reward strategies over lower-reward ones. According to the authors, this approach yields superior diagnostic accuracy with minimal physician assistance and also establishes a robust accountability mechanism.
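The sketch below illustrates the two ingredients of this stage under explicit assumptions. The reward function (1 for a correct diagnosis, minus a hypothetical penalty `lam` per physician-executed step) and the rule for picking the chosen and rejected candidates at a step are illustrative guesses, not the paper's exact definitions. The `dpo_loss` function is the standard per-pair DPO loss, taking sequence log-probabilities under the policy being trained and under a frozen reference model.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Rollout:
    step_text: str        # candidate strategy for the current step (same prefix)
    correct: bool         # did the completed rollout reach the right diagnosis?
    physician_steps: int  # how many steps were delegated to the physician


def reward(r: Rollout, lam: float = 0.1) -> float:
    # Hypothetical reward: accuracy first, minus a penalty per physician request.
    return (1.0 if r.correct else 0.0) - lam * r.physician_steps


def preference_pair(candidates: List[Rollout]) -> Tuple[str, str]:
    # Highest-reward candidate is "chosen", lowest-reward is "rejected".
    ranked = sorted(candidates, key=reward)
    return ranked[-1].step_text, ranked[0].step_text


def dpo_loss(logp_chosen: float, logp_rejected: float,
             ref_logp_chosen: float, ref_logp_rejected: float,
             beta: float = 0.1) -> float:
    """-log sigmoid(beta * (chosen log-ratio - rejected log-ratio))."""
    margin = beta * ((logp_chosen - ref_logp_chosen)
                     - (logp_rejected - ref_logp_rejected))
    # -log(sigmoid(m)) = softplus(-m), computed without overflow for large |m|.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


candidates = [Rollout("A", True, 2), Rollout("B", True, 0), Rollout("C", False, 0)]
chosen, rejected = preference_pair(candidates)  # "B" (reward 1.0) vs. "C" (reward 0.0)
```

When the policy matches the reference model the margin is zero and the loss is ln 2; raising the chosen strategy's log-probability relative to the rejected one drives the loss toward zero.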

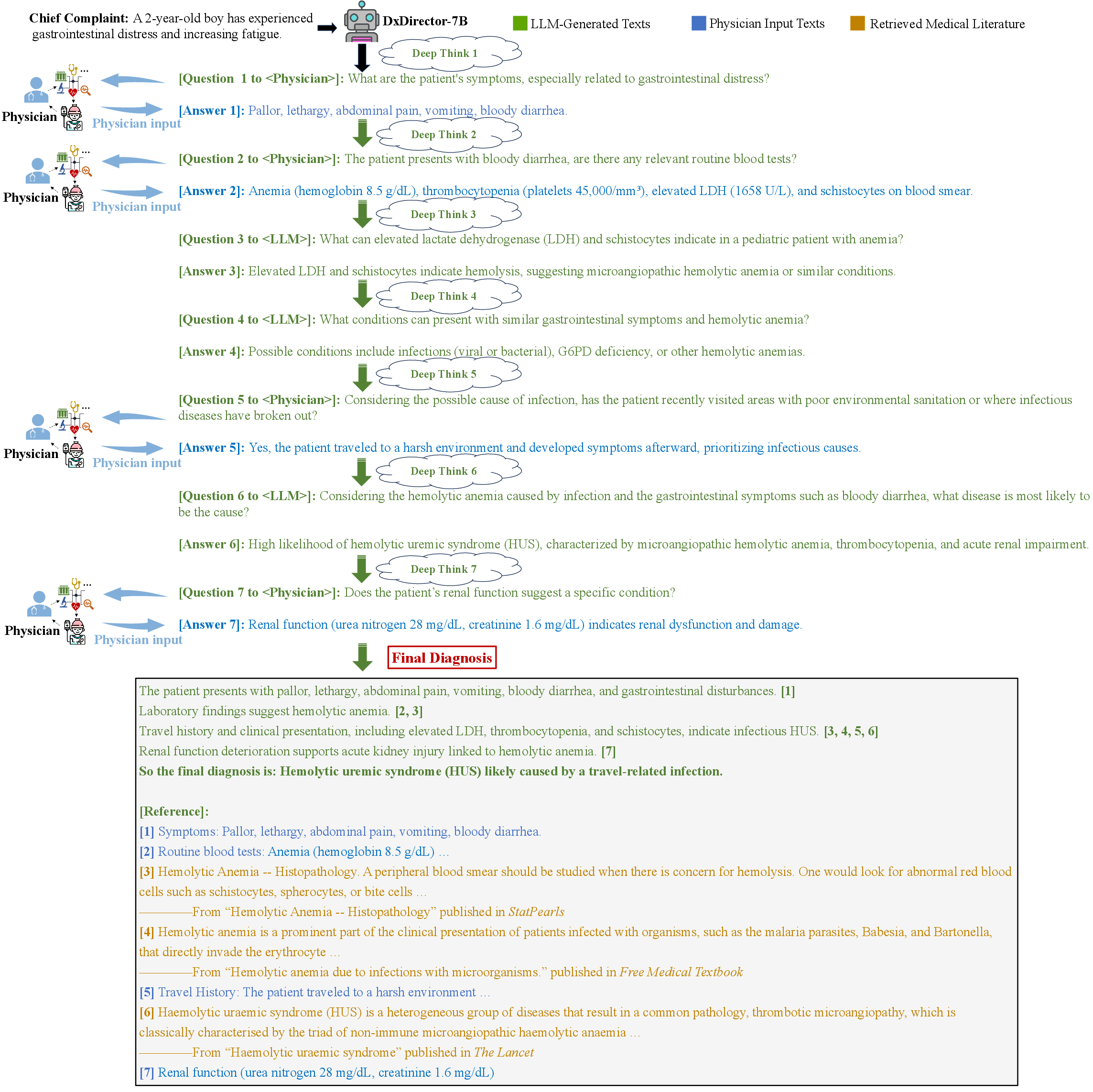

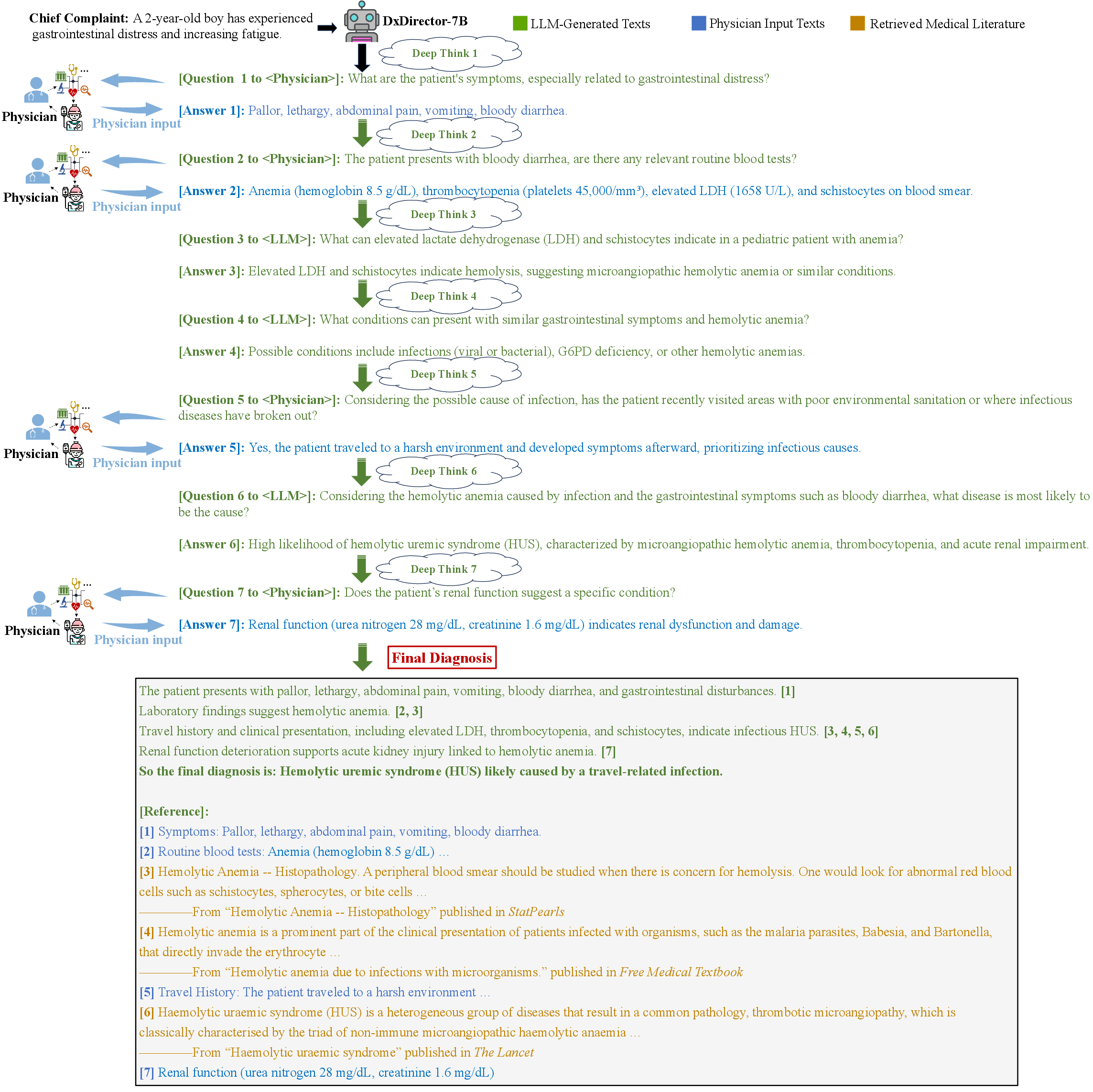

Figure 3: A case of DxDirector-7B performing the full-process diagnosis starting with only a chief complaint.

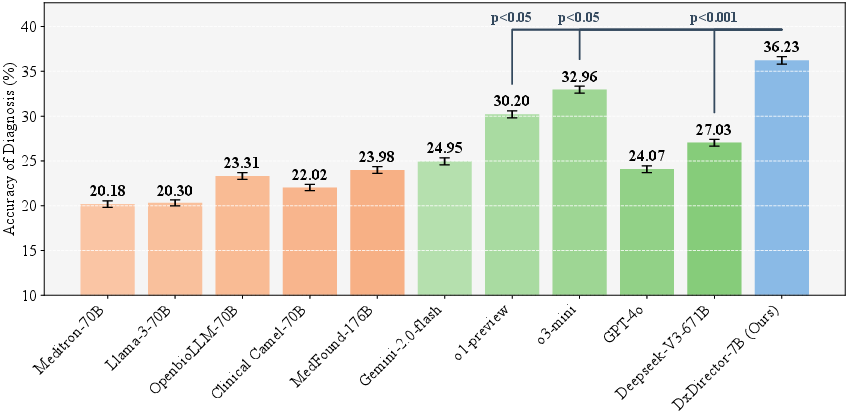

Experimental Evaluation

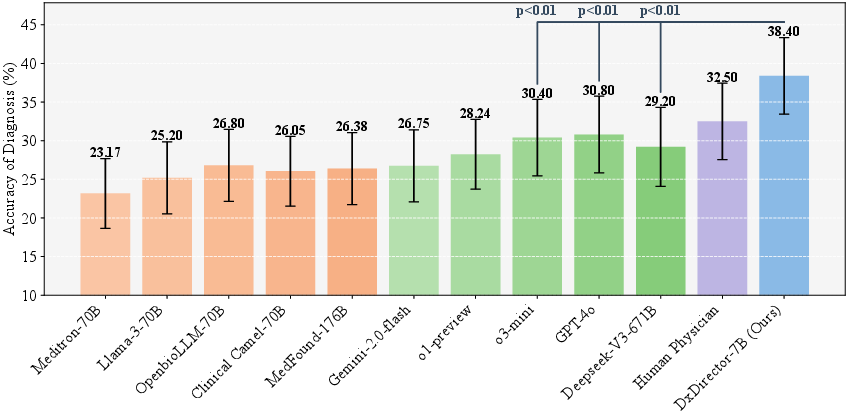

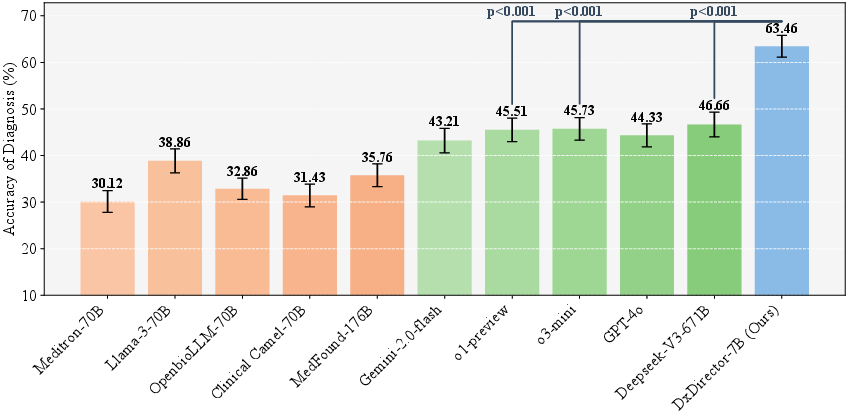

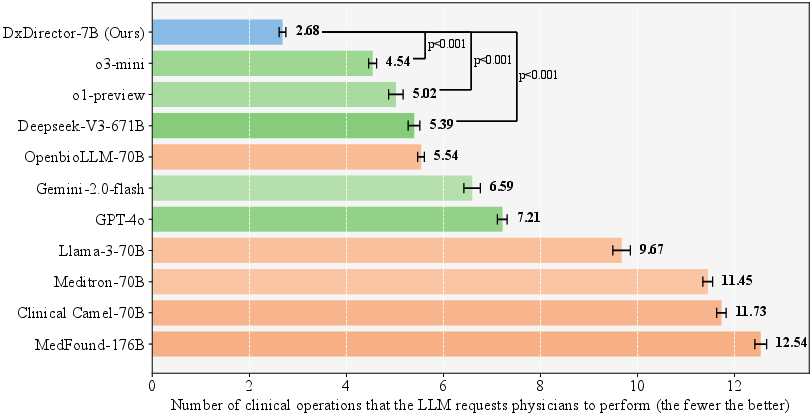

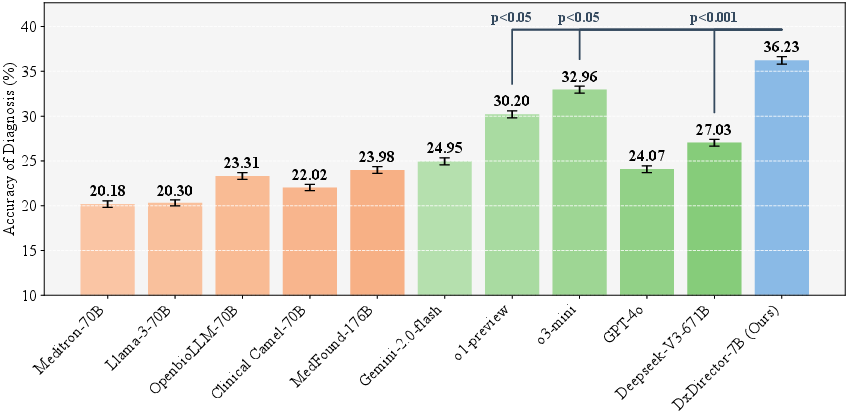

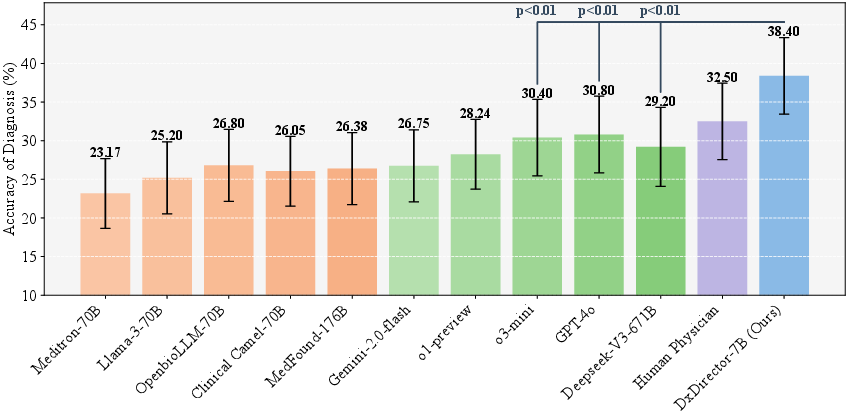

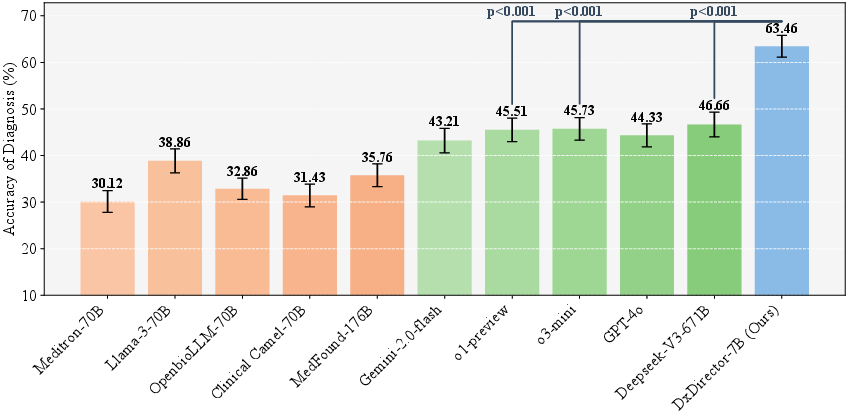

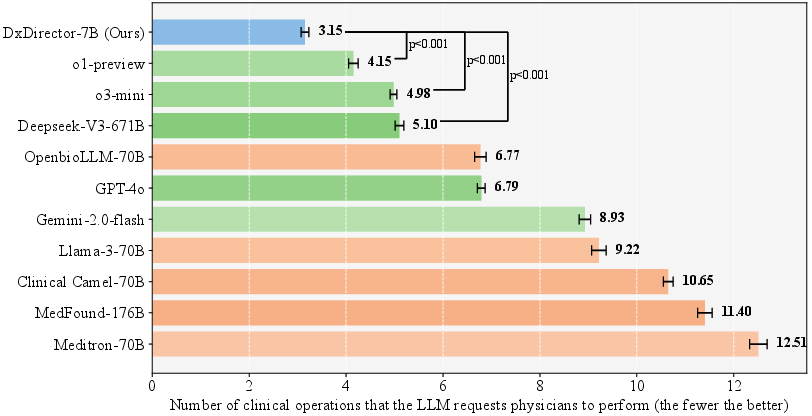

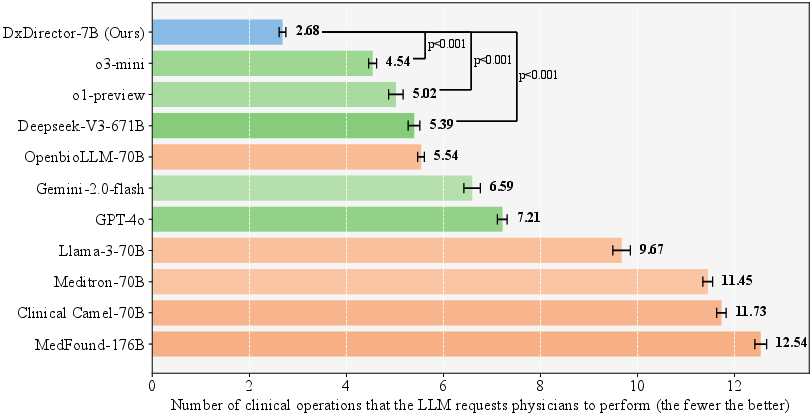

The authors systematically evaluate DxDirector-7B against both medically adapted LLMs and powerful commercial general-purpose LLMs on datasets including NEJM Clinicopathologic Cases, RareArena, and ClinicalBench. DxDirector-7B consistently achieves higher diagnostic accuracy than these competitors despite having far fewer parameters, demonstrating marked parameter efficiency. Fig. 4 shows the results on rare-disease cases (RareArena); across the rare-disease and real-world datasets, DxDirector-7B attains superior diagnostic accuracy while requiring substantially less physician involvement than the baseline LLMs.
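The two headline quantities, diagnostic accuracy and physician involvement, could be computed per benchmark as sketched below. The `CaseResult` record, exact string matching of diagnoses, and measuring involvement as the fraction of delegated steps are simplifying assumptions; the paper's actual scoring procedure may differ (for instance, judging diagnoses semantically rather than by exact match).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CaseResult:
    predicted: str        # model's final diagnosis
    gold: str             # reference diagnosis
    physician_steps: int  # steps delegated to the physician
    total_steps: int      # all steps in the trajectory


def accuracy(results: List[CaseResult]) -> float:
    # Simplification: exact string match between predicted and gold diagnosis.
    return sum(r.predicted == r.gold for r in results) / len(results)


def mean_physician_involvement(results: List[CaseResult]) -> float:
    # Fraction of steps delegated to the physician, averaged over cases.
    rates = [r.physician_steps / r.total_steps if r.total_steps else 0.0
             for r in results]
    return sum(rates) / len(rates)


results = [CaseResult("d1", "d1", 1, 4), CaseResult("d2", "d3", 2, 4)]
print(accuracy(results), mean_physician_involvement(results))  # prints: 0.5 0.375
```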

Figure 4: Rare Disease Cases (RareArena).

Figure 5: A comparative heatmap of diagnostic accuracy for DxDirector-7B vs. state-of-the-art medically adapted and commercial general-purpose LLMs across 17 clinical departments (1,500 samples in total) in ClinicalBench.

Implications and Future Work

DxDirector-7B marks a significant step at the intersection of AI and clinical medicine, showing that an LLM can direct the diagnostic process with minimal human intervention. Because the model can autonomously guide diagnosis from a vague chief complaint, it has the potential to substantially reduce physician workload and to provide scalable diagnostic support, which would be particularly valuable in resource-constrained settings. Open directions remain, such as refining the rules that govern physician involvement and integrating specialized AI models for pathology, to fully realize DxDirector-7B's potential.

Conclusion

Through rigorous experiments and methodological innovation, this work challenges existing paradigms in medical diagnosis. By repositioning AI as the primary director of diagnosis rather than an assistant, it points toward more efficient, accurate, and scalable diagnostic workflows. Further research and refinement could extend DxDirector-7B's capabilities and broaden its applicability across diverse clinical contexts.