Introduction to AMIE

In medicine, communication between physician and patient is a cornerstone of healthcare delivery. Successful treatment often depends on the quality of this interaction, which lays the foundation for diagnosis and patient care. Advances in AI have brought notable progress toward intelligent systems that can conduct such conversations. AMIE, short for Articulate Medical Intelligence Explorer, is one such system: it uses large language models (LLMs) to simulate diagnostic dialogues.

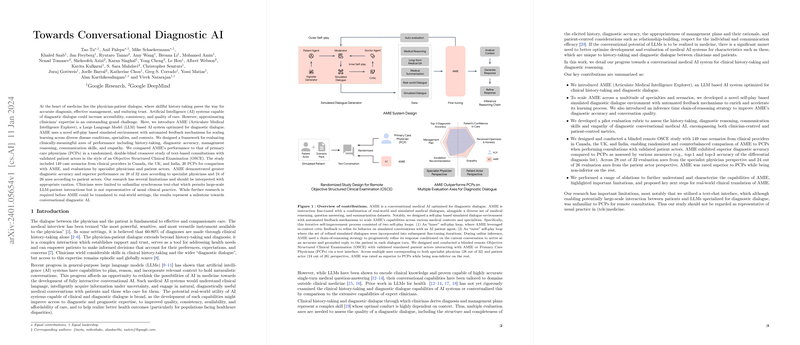

Training and Methodology Behind AMIE

AMIE's strength lies not only in its ability to converse but also in the learning environment used to train it, which simulates varied medical scenarios. Through what is known as "self-play" within this simulated environment, AMIE extends its learning across different diseases, specialties, and contexts. Its training also drew on real-world datasets comprising electronic health records, medical question answering, and transcribed medical conversations. When generating a reply, AMIE applies a "chain-of-reasoning" strategy, refining its response step by step so that its communication with the patient stays accurate and empathetic.
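The refinement idea described above can be pictured as a draft, critique, revise loop. The following is only a minimal sketch under stated assumptions, not AMIE's actual implementation: `refine_reply` and the three `toy_*` functions are hypothetical names, and the toy functions use simple string checks as stand-ins where a real system would call a language model.

```python
from typing import Callable, List

def refine_reply(
    history: List[str],
    draft_fn: Callable[[List[str]], str],
    critique_fn: Callable[[List[str], str], List[str]],
    revise_fn: Callable[[List[str], str, List[str]], str],
    max_rounds: int = 2,
) -> str:
    """Draft a reply, then revise it until the critic raises no issues.

    All three callables are hypothetical stand-ins for model calls.
    """
    reply = draft_fn(history)
    for _ in range(max_rounds):
        issues = critique_fn(history, reply)
        if not issues:  # nothing left to fix, stop early
            break
        reply = revise_fn(history, reply, issues)
    return reply

# Toy stand-ins (assumptions for illustration only).
def toy_draft(history: List[str]) -> str:
    return "This could be a tension headache."

def toy_critique(history: List[str], reply: str) -> List[str]:
    issues = []
    if "sorry" not in reply.lower():
        issues.append("acknowledge the patient's discomfort")
    if "?" not in reply:
        issues.append("ask a follow-up question")
    return issues

def toy_revise(history: List[str], reply: str, issues: List[str]) -> str:
    if "acknowledge the patient's discomfort" in issues:
        reply = "I'm sorry you're feeling unwell. " + reply
    if "ask a follow-up question" in issues:
        reply = reply + " How long has it lasted?"
    return reply

history = ["I have had a headache for days."]
final = refine_reply(history, toy_draft, toy_critique, toy_revise)
print(final)
```

In this toy run the first critique flags both a missing acknowledgement and a missing follow-up question, the revision addresses them, and the second critique finds nothing further, so the loop stops early.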

Evaluating AMIE’s Capabilities

To evaluate AMIE against the gold standard of primary care physicians, researchers conducted a rigorous, randomized, double-blind study. Both AMIE and the physicians held consultations with validated patient actors, in a format modeled on the Objective Structured Clinical Examination (OSCE), a common method in medical education for assessing clinical competence. AMIE’s performance across a wide range of diagnostic cases was judged by specialist physicians and by the patient actors. AMIE received superior ratings on most evaluation axes and showed higher diagnostic accuracy than the primary care physicians in multiple areas.
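To make "randomized, double-blind" concrete, here is a small sketch of how consultations could be assigned and masked. It assumes, purely for illustration, that every scenario is handled once by each arm and that raters see only neutral labels; the function name `blinded_schedule`, the scenario IDs, and the labels "A" and "B" are hypothetical and do not come from the study protocol.

```python
import random

def blinded_schedule(scenario_ids, seed=0):
    """Randomize which arm is labeled A or B for each scenario.

    The mapping from label to arm is the unblinding key and would be
    withheld from patient actors and raters (illustrative assumption).
    """
    rng = random.Random(seed)  # fixed seed keeps the schedule reproducible
    schedule = []
    for sid in scenario_ids:
        arms = ["AMIE", "PCP"]
        rng.shuffle(arms)  # random order per scenario
        schedule.append({"scenario": sid, "key": {"A": arms[0], "B": arms[1]}})
    return schedule

schedule = blinded_schedule(["case-1", "case-2", "case-3"])

# What a rater would see: scenario and neutral labels only, no arm names.
rater_view = [
    {"scenario": entry["scenario"], "consultations": sorted(entry["key"])}
    for entry in schedule
]
print(rater_view)
```

Keeping the key separate from the rater view is what lets the ratings be compared afterwards without the raters knowing which consultation came from which arm.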

Implications and Future Directions

While the results are promising, AMIE, despite its sophistication, is not yet ready to replace human clinicians. It was evaluated in a controlled study environment through a text-chat interface, which differs significantly from everyday clinical interactions. Deploying AMIE in real healthcare settings will require careful further research into its safety, reliability, and fairness, especially for diverse populations and multilingual settings.

AMIE, and AI systems like it, could alter the landscape of healthcare, especially where access to quality medical advice is limited. Such systems could support doctors by offering diagnostic suggestions, allowing healthcare providers to focus their skills where they are most needed. However, the path forward must be trodden with cautious optimism: any implementation should be underpinned by rigorous testing and an ethical framework, so that patient care improves without sacrificing the human touch and professional insight.