- The paper presents a distillation framework that reaches state-of-the-art performance on mathematical and code reasoning tasks without scaling up the training corpus, departing from the scaling-law paradigm.

- It employs targeted teacher selection, rigorous corpus filtering, and diversity enhancement to reduce data needs while maintaining high accuracy.

- Empirical results demonstrate significant gains on benchmarks like AIME, MATH, and LiveCodeBench, highlighting robust cross-domain generalization.

Data-Efficient Distillation for Reasoning: Challenging the Scaling Law Paradigm

Introduction

The paper "Beyond Scaling Law: A Data-Efficient Distillation Framework for Reasoning" (DED) introduces a principled approach to reasoning model distillation that departs from the prevailing scaling law paradigm. Instead of relying on ever-larger corpora and computational budgets, the DED framework leverages targeted teacher selection, corpus curation, and diversity enhancement to achieve state-of-the-art (SOTA) performance in mathematical and code reasoning tasks with minimal data. This essay provides a technical analysis of the framework, its empirical results, and its implications for future research in efficient LLM post-training.

Reasoning Scaling Laws and Their Limitations

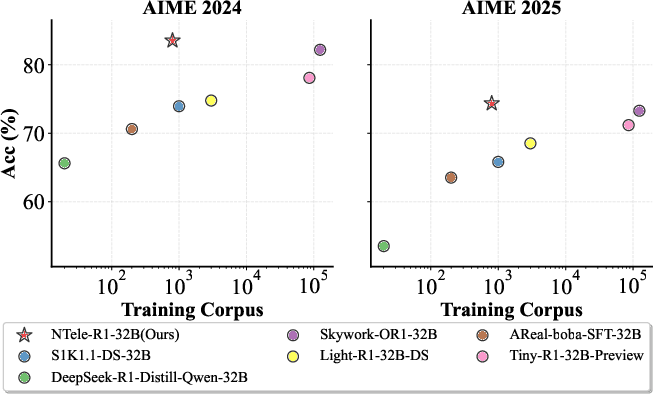

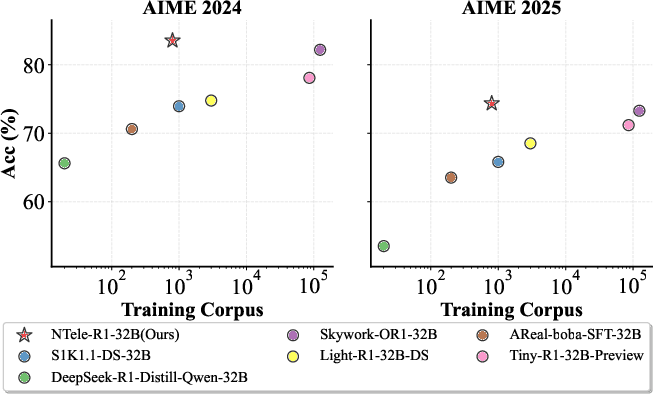

Recent advances in LLM reasoning have been driven by two main strategies: reinforcement learning with verifiable rewards (RLVR) and supervised fine-tuning (SFT) on distilled chain-of-thought (CoT) trajectories. The scaling law hypothesis posits that reasoning performance increases monotonically with corpus size and model scale, but with diminishing returns and substantial resource requirements. Figure 1 illustrates this trend: models fine-tuned from DeepSeek-R1-Distill-Qwen-32B follow a scaling curve, while the DED-trained NTele-R1-32B model breaks out of this regime and advances the Pareto frontier.

Figure 1: The performance on AIME 2024/2025 varies with the scale of the training corpus. Models fine-tuned from DeepSeek-R1-Distill-Qwen-32B exhibit a potential reasoning scaling law. Our model, NTele-R1-32B, breaks out of this trend and advances the Pareto frontier.

The DED Framework: Methodology

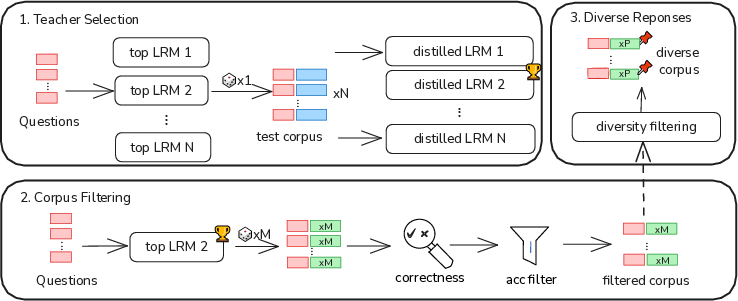

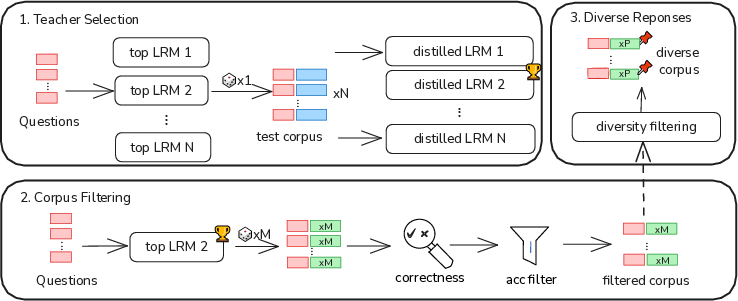

The DED framework is structured around three sequential stages, each designed to maximize reasoning gains under data constraints:

- Teacher Selection: Rather than defaulting to the highest-performing LLM on benchmarks, DED empirically evaluates candidate teacher models via smoke tests, distilling small corpora and measuring student performance. This process reveals that teaching ability is not strictly correlated with raw benchmark scores.

- Corpus Filtering and Compression: The framework applies rigorous quality checks (length, format, correctness) and compresses the question set by filtering out easy samples (high student pass rate). This ensures that only challenging, high-value examples are retained.

- Diversity Enhancement: Inspired by RL roll-out strategies, DED augments the corpus by keeping several reasoning trajectories per question and choosing ones that differ from each other, with dissimilarity measured by Levenshtein distance, to encourage robust student reasoning.

Figure 2: Overview of our data-efficient distillation framework.
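The diversity enhancement stage can be illustrated with a minimal sketch. The essay states only that trajectories are compared by Levenshtein distance; the greedy farthest-point selection below, the choice of the first trajectory as seed, and the function names `levenshtein` and `select_diverse` are assumptions made for illustration, not the paper's confirmed procedure.

```python
def levenshtein(a, b):
    """Edit distance between two sequences (strings or token lists)."""
    if len(a) < len(b):
        a, b = b, a  # keep the inner row as short as possible
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            cost = 0 if x == y else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def select_diverse(trajectories, k):
    """Greedily pick up to k trajectories that are far apart in edit distance.

    Assumption: the first trajectory seeds the selection; each later pick
    maximizes its minimum distance to the trajectories already chosen.
    """
    if k <= 0 or not trajectories:
        return []
    chosen = [0]
    while len(chosen) < min(k, len(trajectories)):
        best_idx, best_score = None, -1
        for i, t in enumerate(trajectories):
            if i in chosen:
                continue
            score = min(levenshtein(t, trajectories[c]) for c in chosen)
            if score > best_score:
                best_idx, best_score = i, score
        chosen.append(best_idx)
    return [trajectories[i] for i in chosen]
```

In practice the distance would likely be computed over token sequences rather than raw characters, and pairwise edit distance on long reasoning traces is quadratic in length, so some truncation or sampling may be needed.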

Empirical Results and Analysis

Mathematical Reasoning

DED achieves SOTA results on AIME 2024/2025 and MATH-500 benchmarks using only 0.8k curated examples, outperforming models trained on much larger corpora. Notably, NTele-R1-32B attains 81.87% and 77.29% accuracy on AIME 2024 and 2025, respectively, surpassing both its teacher models and other distillation baselines.

Teacher Model Specialization

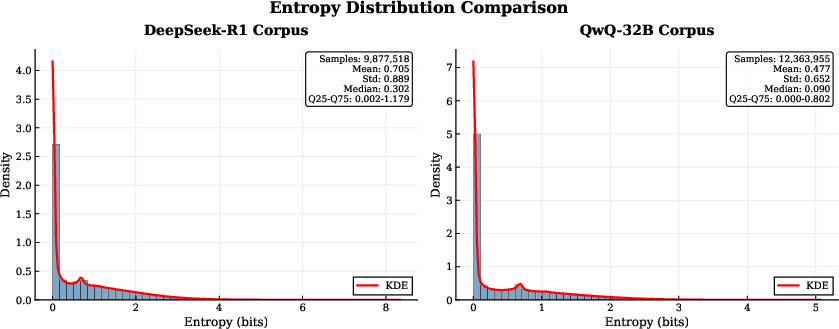

Experiments demonstrate that QwQ-32B serves as a more effective teacher than DeepSeek-R1, Qwen3-32B, and Qwen3-235B-A22B, despite not being the top performer on math benchmarks. This contradicts the assumption that the strongest large reasoning model (LRM) is always the optimal teacher, and it points to corpus affinity and token entropy as better indicators of teaching ability.
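The smoke-test idea behind teacher selection can be sketched as follows: rank candidate teachers by the accuracy of a student distilled from a small corpus of each, rather than by the teacher's own benchmark score. The `distill_and_eval` callable is a hypothetical stand-in for a real distill-then-evaluate run, and the teacher names and scores in the usage lines are placeholders, not results from the paper.

```python
def pick_teacher(candidates, distill_and_eval):
    """Rank teachers by student accuracy after a small distillation run.

    candidates: iterable of teacher names.
    distill_and_eval: hypothetical callable that distills a small corpus
        from the named teacher, fine-tunes the student on it, and returns
        the student's accuracy on a held-out benchmark.
    Returns (best_teacher, scores_by_teacher).
    """
    scores = {name: distill_and_eval(name) for name in candidates}
    best = max(scores, key=scores.get)
    return best, scores


# Usage with placeholder numbers (illustrative only).
placeholder_student_scores = {"teacher_a": 0.61, "teacher_b": 0.68}
best, scores = pick_teacher(placeholder_student_scores,
                            placeholder_student_scores.get)
print(best)
```

The point of the design is that the selection criterion is measured on the student, which is what allows a teacher with lower benchmark scores to win.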

Corpus Compression and Diversity

Quality filtering and hard-example compression reduce the corpus size by 75% with only a modest performance drop. Diversity augmentation restores and even surpasses full-corpus performance, indicating that data quality and diversity are more critical than sheer quantity.
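A minimal sketch of the filtering and compression step, under stated assumptions: the length cap, the `\boxed{...}` format check, and the pass-rate threshold of 0.5 are illustrative choices, and the `Sample` fields are hypothetical names rather than the paper's schema.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    question: str
    response: str             # teacher reasoning trajectory
    predicted: str            # final answer extracted from the response
    reference: str            # ground-truth answer
    student_pass_rate: float  # fraction of student attempts that were correct


def passes_quality(s, max_chars=32000):
    """Length, format, and correctness checks (thresholds are assumptions)."""
    length_ok = 0 < len(s.response) <= max_chars
    format_ok = "\\boxed{" in s.response  # assumed answer format
    correct = s.predicted.strip() == s.reference.strip()
    return length_ok and format_ok and correct


def compress(samples, max_pass_rate=0.5):
    """Keep high-quality samples that the student still finds hard."""
    return [s for s in samples
            if passes_quality(s) and s.student_pass_rate <= max_pass_rate]
```

Questions the student already solves most of the time are dropped, so the retained set concentrates on examples where the teacher's trajectory carries new information.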

Code Generation

DED generalizes to code reasoning tasks, achieving SOTA on LiveCodeBench (LCB) with only 230 hard samples, expanded to 925 through diversity augmentation. The largest gains are observed in the medium and hard subsets, confirming the framework's efficacy in the small-data fine-tuning regime across domains.

Cross-Domain Generalization

Mixed training on math and code corpora yields improvements in both domains and enhances out-of-domain (OOD) generalization, as evidenced by doubled scores on the Aider benchmark and consistent gains across MMLU, CMMLU, C-EVAL, BBH, MBPP, GSM8K, and MATH.

Deep Analysis: Length, Entropy, and Latent Shifts

Token Length

Contrary to prior work, corpus and response length are not dominant factors in distillation performance. Models trained on shorter QwQ-32B responses outperform those trained on longer DeepSeek-R1 responses, and accuracy remains stable across length variations.

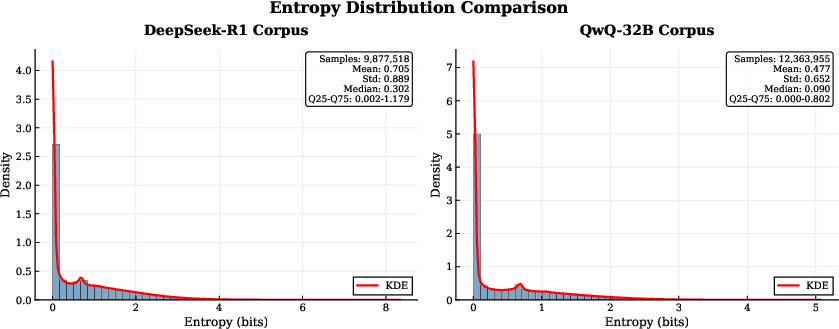

Token Entropy

Token entropy analysis reveals that QwQ-32B corpora exhibit lower entropy than DeepSeek-R1 corpora, meaning their token distributions are more predictable and structured. This facilitates student convergence and enhances OOD robustness.

Figure 3: Comparison of token entropy distribution of teacher models.
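As a reference for what token entropy measures, the sketch below computes the Shannon entropy of a next-token distribution and averages it over a sequence. How the paper obtains the distributions (which model scores the corpus, which tokens are included) is not specified here, so the inputs are assumed to be ready-made probability vectors.

```python
import math


def token_entropy(probs):
    """Shannon entropy, in nats, of one next-token probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def mean_entropy(distributions):
    """Average per-token entropy over a sequence of distributions."""
    return sum(token_entropy(d) for d in distributions) / len(distributions)
```

A distribution concentrated on one token has entropy 0, while a uniform distribution over n tokens has entropy log n, so a lower mean entropy corresponds to more predictable text.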

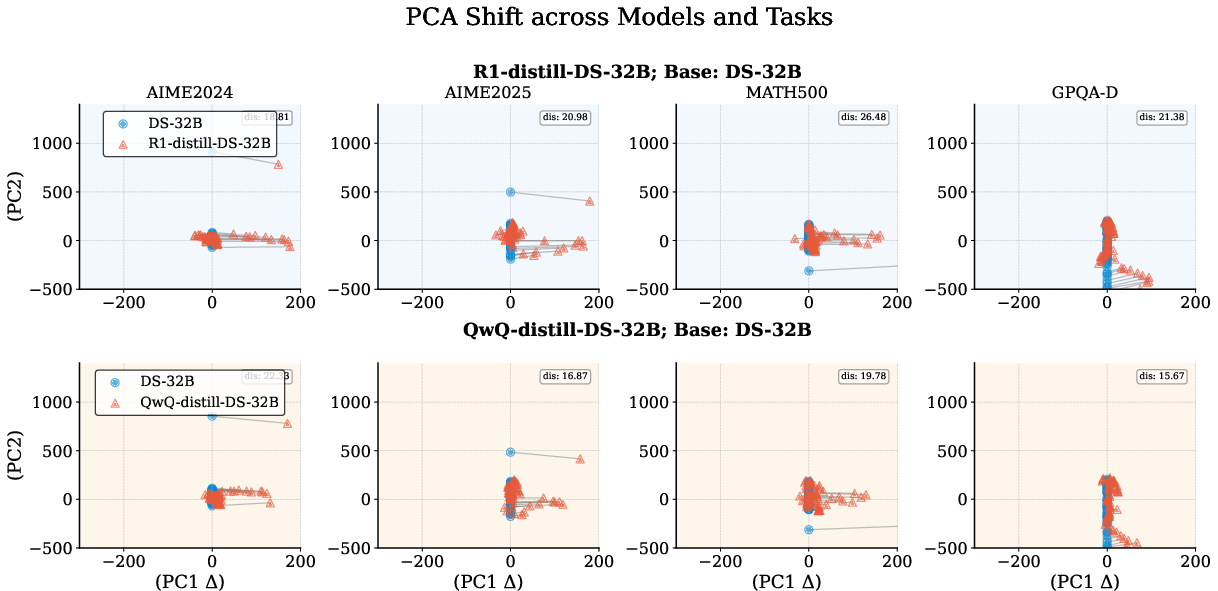

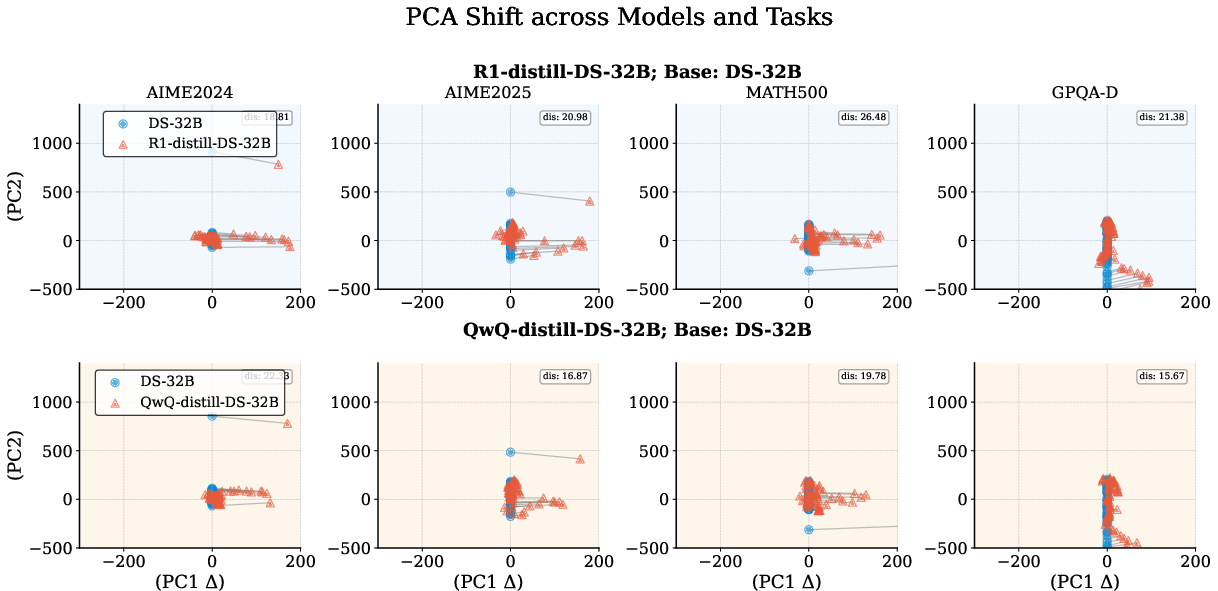

PCA Shift

PCA offset analysis shows that models distilled from QwQ-32B have smaller latent representation shifts than those distilled from DeepSeek-R1, indicating greater stability and generalization. This supports the hypothesis that corpus affinity and representational consistency are key to efficient distillation.

Figure 4: PCA offset of DS-32B across various teacher models and tasks. "dis" represents the Euclidean distance between the centroids of latent representations before and after training. Models trained on the QwQ-32B corpus exhibit smaller PCA offsets than models trained on the DeepSeek-R1 corpus across most tasks, indicating greater stability in their latent representations.
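The distance described in the caption can be sketched as a centroid shift. The code assumes the hidden states have already been projected to a low-dimensional space by PCA upstream; it covers only the final step, and the function names are illustrative.

```python
import math


def centroid(points):
    """Coordinate-wise mean of a non-empty list of equal-length vectors."""
    n = len(points)
    dims = len(points[0])
    return [sum(p[d] for p in points) / n for d in range(dims)]


def pca_offset(before, after):
    """Euclidean distance between the centroids of two point clouds.

    before/after: PCA-projected latent representations of the same inputs
    from the model before and after training (projection assumed done).
    """
    return math.dist(centroid(before), centroid(after))
```

For the comparison to be meaningful, both point clouds should be projected with the same PCA basis; fitting separate projections before and after training would make the distance depend on the basis rather than on the model shift.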

Implications and Future Directions

The DED framework demonstrates that reasoning scaling laws can be circumvented through principled teacher selection, corpus curation, and diversity enhancement. The findings challenge the reliance on superficial metrics such as teacher benchmark scores and token length, advocating for deeper analysis of token entropy and latent representation shifts. Practically, DED enables efficient reasoning model development in resource-constrained settings and provides a blueprint for cross-domain generalization.

Future research should explore the interpretability of DED-trained models, extend the framework to additional domains, and investigate the interplay between entropy, diversity, and latent stability in distillation. Theoretical work is needed to formalize the relationship between corpus affinity and generalization, and to develop automated methods for teacher and corpus selection.

Conclusion

The DED framework offers a data-efficient alternative to scaling-centric distillation, achieving SOTA reasoning performance with minimal data. By focusing on teacher specialization, corpus quality, and diversity, DED advances the Pareto frontier and provides robust generalization across domains. The results underscore the importance of token entropy and latent representation analysis in post-training, setting a new direction for efficient reasoning model development.