Genie Envisioner: A Unified World Foundation Platform for Robotic Manipulation (2508.05635v1)

Abstract: We introduce Genie Envisioner (GE), a unified world foundation platform for robotic manipulation that integrates policy learning, evaluation, and simulation within a single video-generative framework. At its core, GE-Base is a large-scale, instruction-conditioned video diffusion model that captures the spatial, temporal, and semantic dynamics of real-world robotic interactions in a structured latent space. Built upon this foundation, GE-Act maps latent representations to executable action trajectories through a lightweight, flow-matching decoder, enabling precise and generalizable policy inference across diverse embodiments with minimal supervision. To support scalable evaluation and training, GE-Sim serves as an action-conditioned neural simulator, producing high-fidelity rollouts for closed-loop policy development. The platform is further equipped with EWMBench, a standardized benchmark suite measuring visual fidelity, physical consistency, and instruction-action alignment. Together, these components establish Genie Envisioner as a scalable and practical foundation for instruction-driven, general-purpose embodied intelligence. All code, models, and benchmarks will be released publicly.

Summary

- The paper introduces a unified platform that collapses data collection, training, and evaluation into a closed-loop system for robotic manipulation.

- It employs a multi-stage training strategy using large-scale video-language data, achieving high spatial, temporal, and semantic consistency across tasks.

- The framework delivers real-time inference and robust evaluation through GE-Act and EWMBench, outperforming state-of-the-art baselines in diverse manipulation tasks.

Introduction and Motivation

Genie Envisioner (GE) presents a unified, scalable platform for robotic manipulation, integrating policy learning, simulation, and evaluation within a single video-generative framework. The platform is designed to address the fragmentation in existing robotic learning pipelines, which typically separate data collection, training, and evaluation, resulting in inefficiencies and limited reproducibility. GE collapses these stages into a closed-loop system, leveraging large-scale video-language paired data to model the spatial, temporal, and semantic dynamics of real-world robotic interactions.

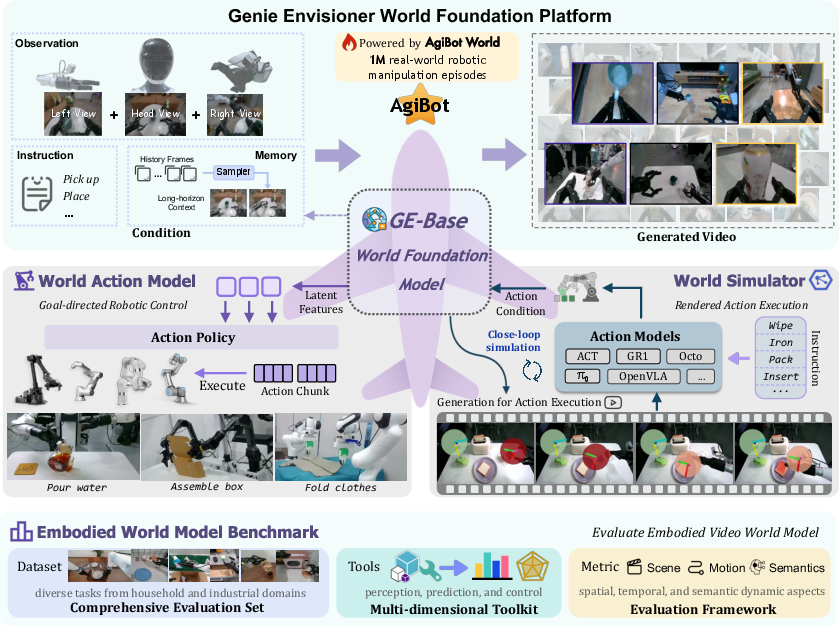

Figure 1: Overview of Genie Envisioner, integrating GE-Base, GE-Act, GE-Sim, and EWMBench into a unified world model for robotic manipulation.

GE-Base: World Foundation Model

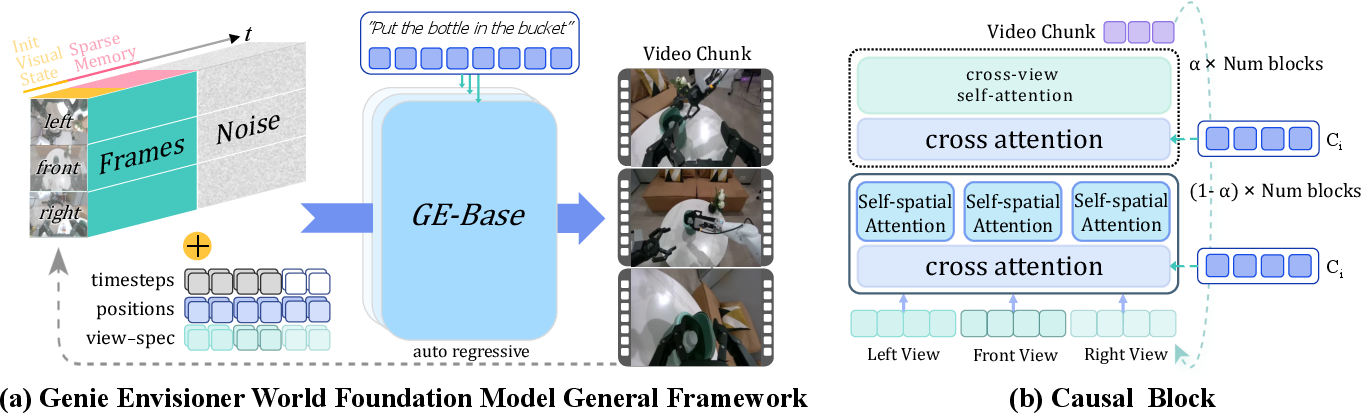

GE-Base is the core of Genie Envisioner, formulated as a multi-view, instruction-conditioned video diffusion transformer. It models robotic manipulation as a text-and-image-to-video generation problem, predicting future video segments conditioned on language instructions, initial observations, and sparse memory frames. The architecture supports multi-view synthesis, enabling spatial consistency across head-mounted and wrist-mounted cameras.

Key architectural features include:

- Autoregressive video chunk generation with sparse memory for long-term temporal reasoning.

- Cross-view self-attention for spatial consistency.

- Instruction integration via frozen T5-XXL embeddings and cross-attention.
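
The interplay between chunk-wise autoregressive generation and sparse memory can be sketched as a schedule. This is a minimal illustration rather than the authors' implementation: the helper names (`sparse_memory_indices`, `rollout_schedule`), the chunk length, and the evenly spaced memory selection are all assumptions, since this summary does not say how memory frames are chosen.

```python
from typing import Dict, List


def sparse_memory_indices(num_past_frames: int, num_memory: int) -> List[int]:
    """Pick up to `num_memory` evenly spaced indices from the frames seen so far.

    Even spacing is an assumption made for illustration only.
    """
    if num_past_frames <= 0 or num_memory <= 0:
        return []
    if num_past_frames <= num_memory:
        return list(range(num_past_frames))
    if num_memory == 1:
        return [num_past_frames - 1]
    last = num_past_frames - 1
    return [round(i * last / (num_memory - 1)) for i in range(num_memory)]


def rollout_schedule(total_frames: int, chunk_len: int, num_memory: int) -> List[Dict]:
    """For each chunk, list the frame range to generate and the memory frames it sees."""
    schedule = []
    start = 1  # frame 0 is the initial observation, so generation starts at frame 1
    while start < total_frames:
        end = min(start + chunk_len, total_frames)
        schedule.append({
            "generate": (start, end),  # half-open range [start, end)
            "memory": sparse_memory_indices(start, num_memory),
        })
        start = end
    return schedule


if __name__ == "__main__":
    for step in rollout_schedule(total_frames=9, chunk_len=4, num_memory=2):
        print(step)
```

Because each chunk conditions on a fixed number of memory frames, the conditioning cost in this sketch stays constant as the rollout grows, while early frames can still influence later chunks.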

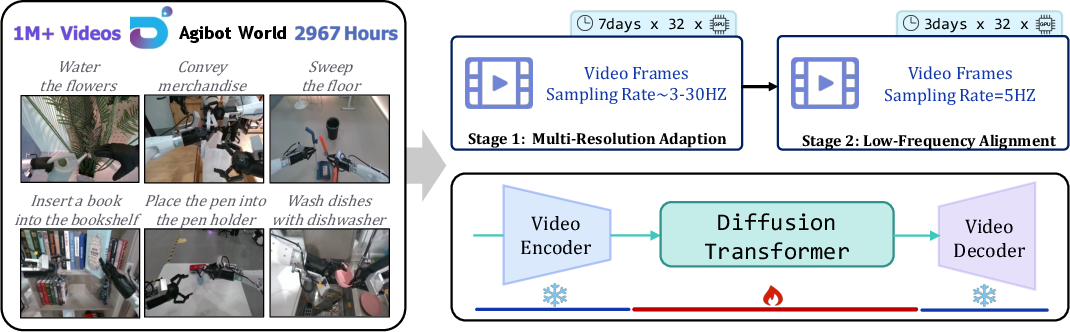

Pretraining is performed on the AgiBot-World-Beta dataset (1M episodes, 3,000 hours), using a two-stage process:

- Multi-Resolution Temporal Adaptation: Pretraining on variable frame rates to learn spatiotemporal invariance.

- Low-Frequency Policy Alignment: Fine-tuning for temporal abstraction matching downstream control.
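
To make the idea of variable frame rates concrete, the sketch below subsamples a recording at a chosen target rate. The function name `frame_indices` and the 30 fps source rate are hypothetical; the summary only states that the first stage varies frame rates and that the second stage matches the lower frequency used for control.

```python
from typing import List


def frame_indices(start: int, source_fps: float, target_fps: float, clip_len: int) -> List[int]:
    """Return source-frame indices that approximate a clip sampled at `target_fps`."""
    if target_fps <= 0 or target_fps > source_fps:
        raise ValueError("target_fps must be in (0, source_fps]")
    stride = source_fps / target_fps  # source frames skipped per sampled frame
    return [start + round(i * stride) for i in range(clip_len)]


if __name__ == "__main__":
    # Stage 1 (assumed): draw clips at several rates from the same recording.
    for fps in (30, 15, 10):
        print(fps, frame_indices(0, 30, fps, 4))
    # Stage 2 (assumed): a single low rate aligned with downstream control.
    print(5, frame_indices(0, 30, 5, 4))
```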

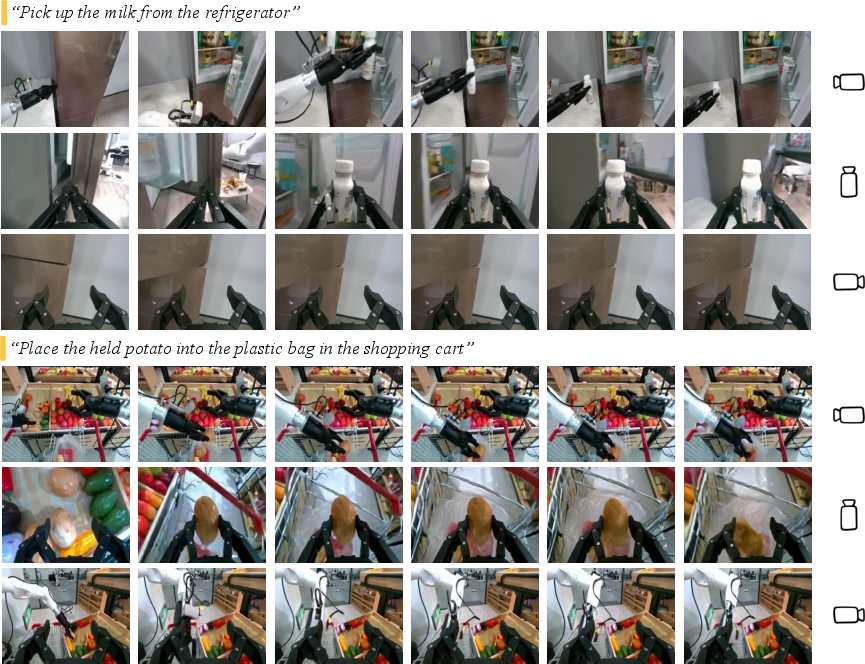

GE-Base demonstrates strong generalization in multi-view video generation, maintaining spatial and semantic alignment across diverse manipulation tasks.

Figure 2: GE-Base autoregressive video generation and cross-view causal block for spatial consistency.

Figure 3: GE-Base training pipeline leveraging domain adaptation and temporal abstraction.

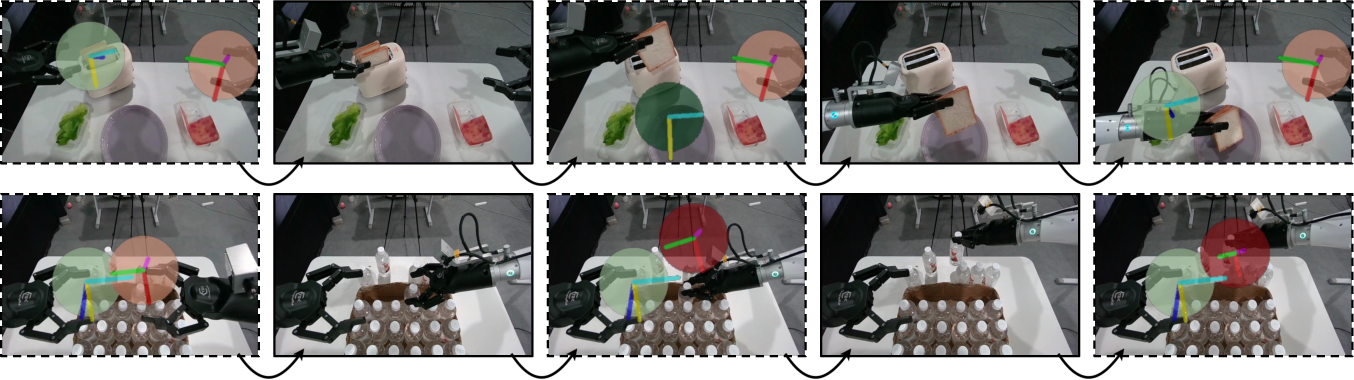

Figure 4: Multi-view robotic manipulation videos generated by GE-Base, showing spatial and semantic consistency.

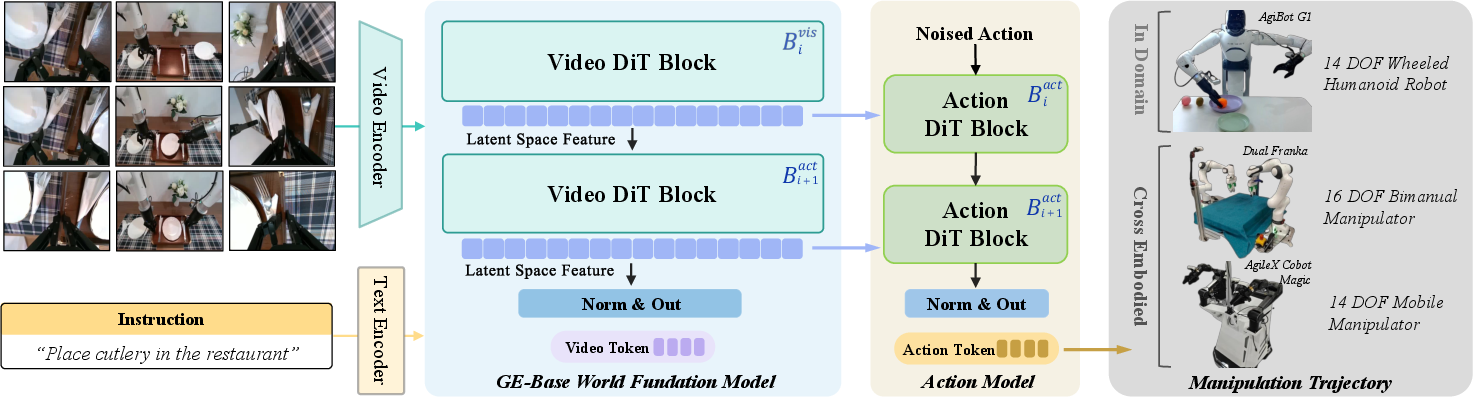

GE-Act: World Action Model

GE-Act extends GE-Base with a lightweight, parallel action branch that maps visual latent representations to structured action trajectories. The branch mirrors GE-Base's block design but uses smaller hidden dimensions for efficiency. Visual features enter the action pathway via cross-attention, and the final action predictions are produced by a flow-matching denoising pipeline.
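
As a rough illustration of how a flow-matching decoder turns noise into an action, the sketch below integrates a velocity field from t = 0 to t = 1 with Euler steps. It is not GE-Act's decoder: the learned network is replaced by a toy closed-form velocity (`make_toy_velocity`, a hypothetical helper) that points along the straight path to a known target, which is the field a flow-matching model would learn for a single target under linear interpolation.

```python
from typing import Callable, List

Vector = List[float]


def euler_flow_sample(velocity: Callable[[Vector, float], Vector],
                      x0: Vector, num_steps: int) -> Vector:
    """Integrate dx/dt = velocity(x, t) from t=0 to t=1 with fixed Euler steps."""
    x = list(x0)
    dt = 1.0 / num_steps
    for k in range(num_steps):
        t = k * dt  # t stays strictly below 1, so the toy field never divides by zero
        v = velocity(x, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


def make_toy_velocity(target: Vector) -> Callable[[Vector, float], Vector]:
    """Stand-in for a learned network: velocity of the straight path toward `target`."""
    def velocity(x: Vector, t: float) -> Vector:
        return [(ti - xi) / (1.0 - t) for ti, xi in zip(target, x)]
    return velocity


if __name__ == "__main__":
    target_action = [0.5, -0.2, 1.0]   # hypothetical 3-DoF action
    noise = [1.3, -0.7, 0.1]           # fixed "noise" sample for reproducibility
    action = euler_flow_sample(make_toy_velocity(target_action), noise, num_steps=8)
    print(action)
```

In a real decoder the velocity function would be a network conditioned on the visual latents and the instruction, and the number of integration steps trades accuracy against latency.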

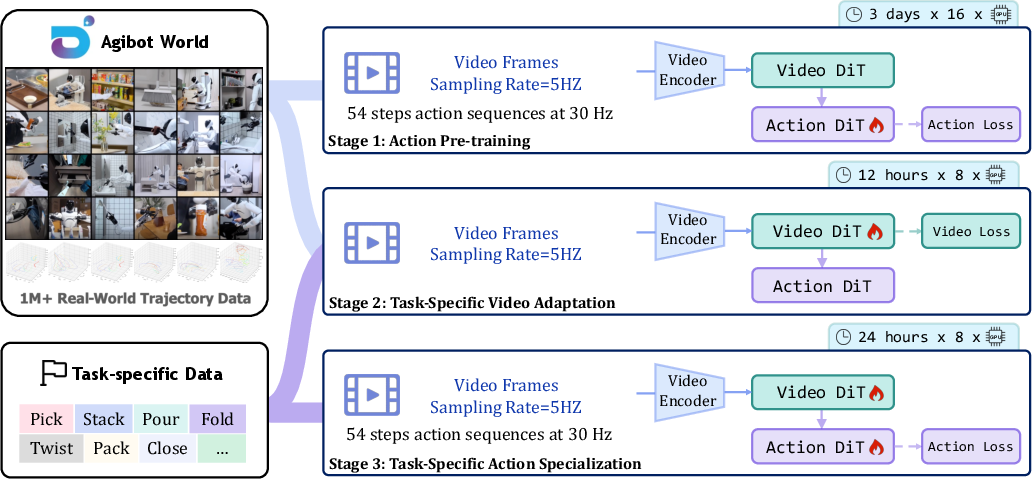

Training follows a three-stage protocol:

- Action-space pretraining: The visual backbone projects video sequences into a latent action space.

- Video adaptation: Fine-tuning visual components for task-specific domains.

- Action specialization: Fine-tuning the action head for control signals.

GE-Act runs in real time: it generates a 54-step torque trajectory in 200 ms on an RTX 4090 GPU. It also supports asynchronous inference, in which video and action denoising run at decoupled frequencies (video at 5 Hz, actions at 30 Hz).
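
The relationship between these numbers can be checked with a few lines of arithmetic. The sketch assumes the 54 predicted steps are executed at the 30 Hz action rate, which the summary does not state explicitly; `async_schedule` is a hypothetical helper.

```python
from typing import Dict


def async_schedule(video_hz: int, action_hz: int,
                   horizon_steps: int, inference_s: float) -> Dict[str, float]:
    """Relate video rate, action rate, trajectory length, and inference latency."""
    if action_hz % video_hz != 0:
        raise ValueError("action_hz is assumed to be a multiple of video_hz")
    actions_per_video_frame = action_hz // video_hz
    horizon_s = horizon_steps / action_hz  # duration covered by one trajectory
    return {
        "actions_per_video_frame": actions_per_video_frame,
        "video_frames_spanned": horizon_steps / actions_per_video_frame,
        "horizon_s": horizon_s,
        # Real time here means a new trajectory is ready before the old one runs out.
        "fits_real_time": inference_s < horizon_s,
    }


if __name__ == "__main__":
    print(async_schedule(video_hz=5, action_hz=30, horizon_steps=54, inference_s=0.2))
```

Under that assumption, each video frame corresponds to six action steps, and one trajectory covers roughly 1.8 s of motion, well above the 200 ms needed to compute it.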

Figure 5: GE-Act architecture with parallel action branch and cross-attention integration.

Figure 6: GE-Act training pipeline utilizing text-video-policy triplets for robust adaptation.

Real-World Performance and Cross-Embodiment Generalization

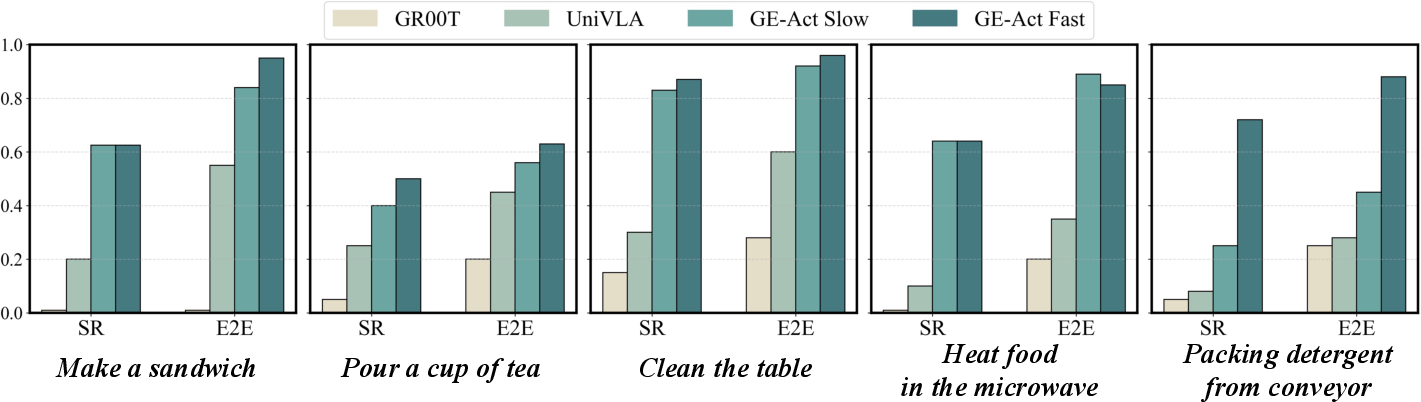

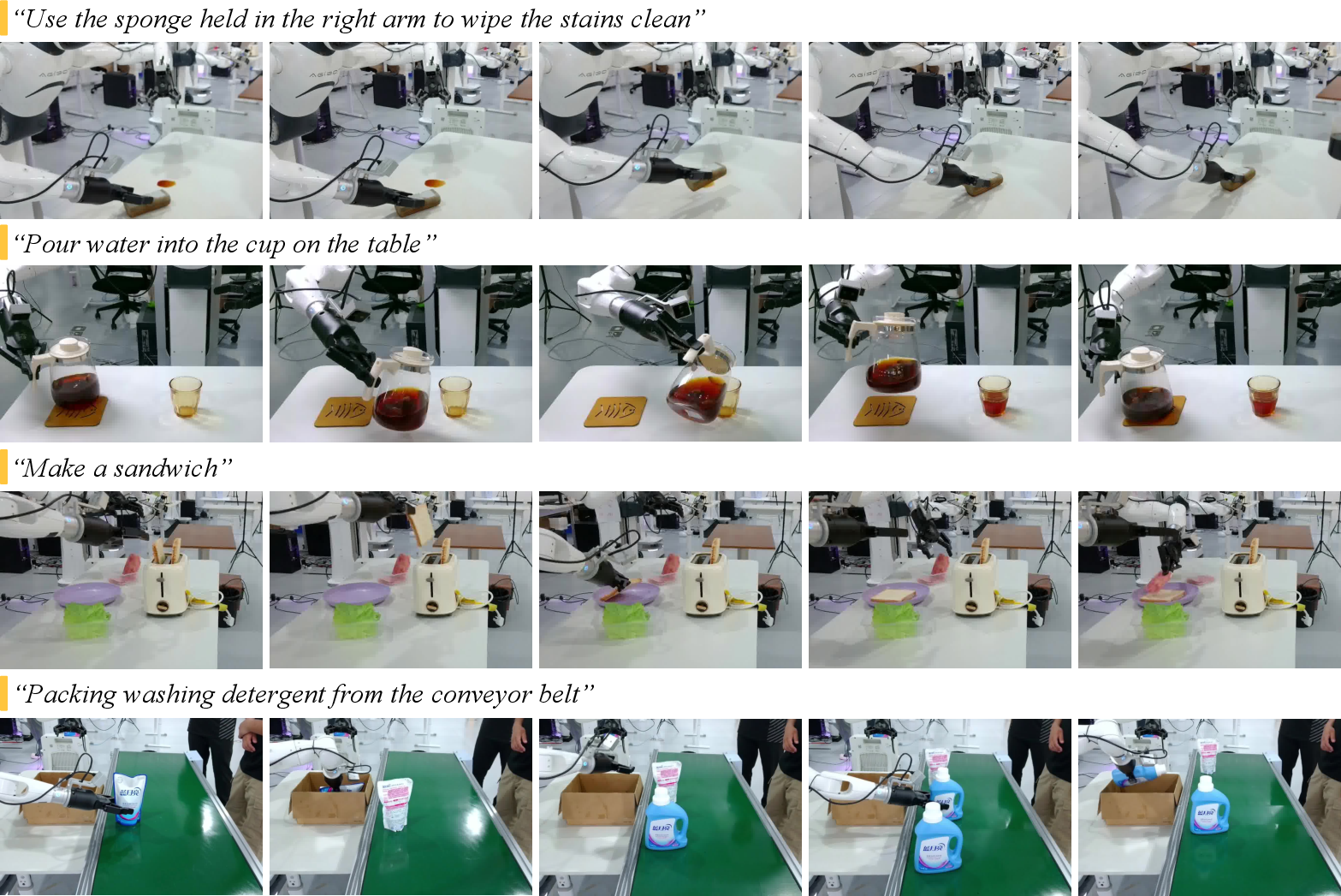

GE-Act is evaluated on five representative tasks (sandwich making, tea pouring, table cleaning, microwave operation, and conveyor packing) using step-wise success rate (SR) and end-to-end success rate (E2E). It consistently outperforms state-of-the-art VLA baselines (UniVLA, GR00T N1, π0) in both household and industrial settings.
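
The two metrics answer different questions, which a small example makes clear. The definitions below are assumptions, since the summary does not spell them out: step-wise success is taken as the fraction of sub-steps completed across all trials, and end-to-end success as the fraction of trials in which every sub-step succeeds. The trial data is invented for illustration.

```python
from typing import List

Trial = List[bool]  # one boolean per sub-step of a task attempt


def stepwise_success_rate(trials: List[Trial]) -> float:
    """Fraction of sub-steps completed, pooled over all trials (assumed definition)."""
    total_steps = sum(len(trial) for trial in trials)
    if total_steps == 0:
        raise ValueError("no steps to score")
    return sum(sum(trial) for trial in trials) / total_steps


def end_to_end_success_rate(trials: List[Trial]) -> float:
    """Fraction of trials in which every sub-step succeeded (assumed definition)."""
    if not trials:
        raise ValueError("no trials to score")
    return sum(all(trial) for trial in trials) / len(trials)


if __name__ == "__main__":
    # Hypothetical results for three attempts at a three-step task.
    trials = [
        [True, True, True],
        [True, False, False],
        [True, True, False],
    ]
    print(stepwise_success_rate(trials), end_to_end_success_rate(trials))
```

With these definitions a policy can score well step-wise while rarely finishing a whole task, which is why reporting both numbers is informative.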

Figure 7: Task-specific manipulation performance comparison on AgiBot G1, showing GE-Act's superior SR and E2E metrics.

Figure 8: Real-world manipulation on AgiBot G1 via GE-Act, demonstrating robust instruction-conditioned execution.

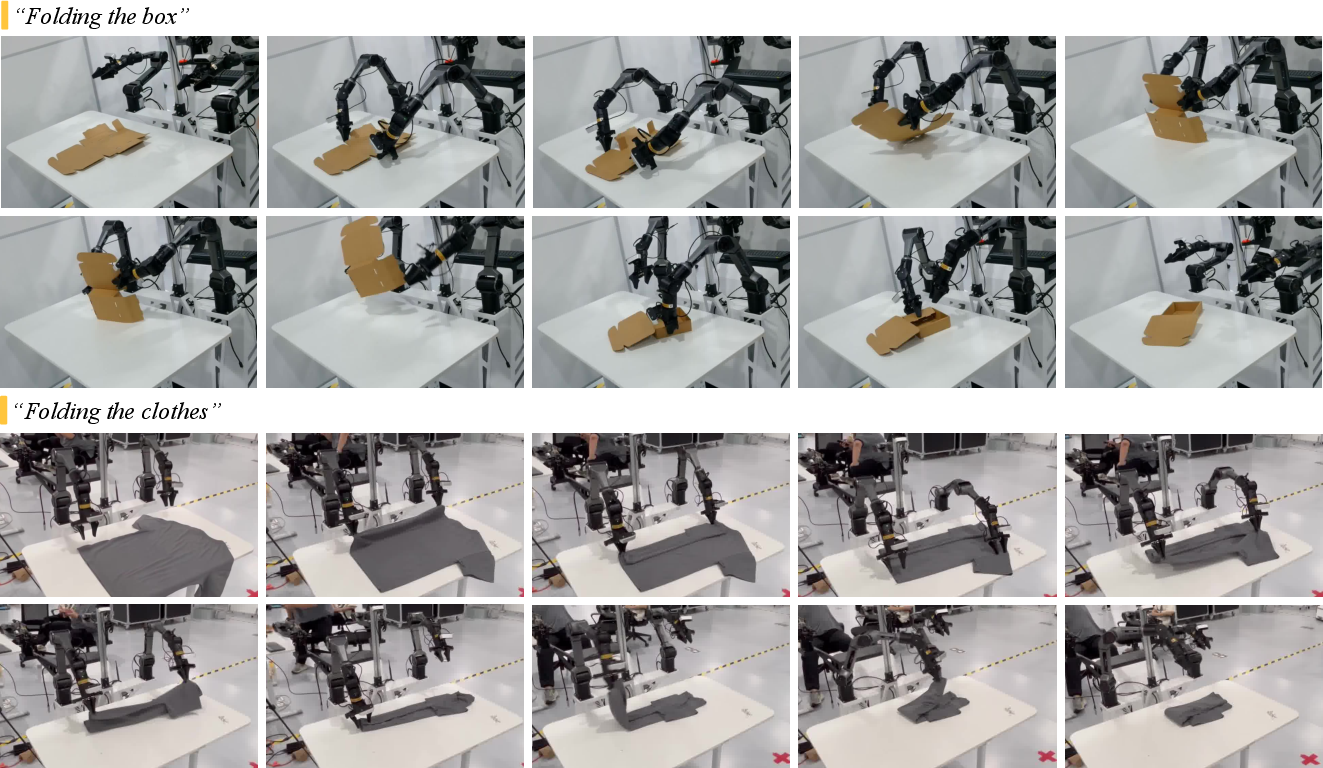

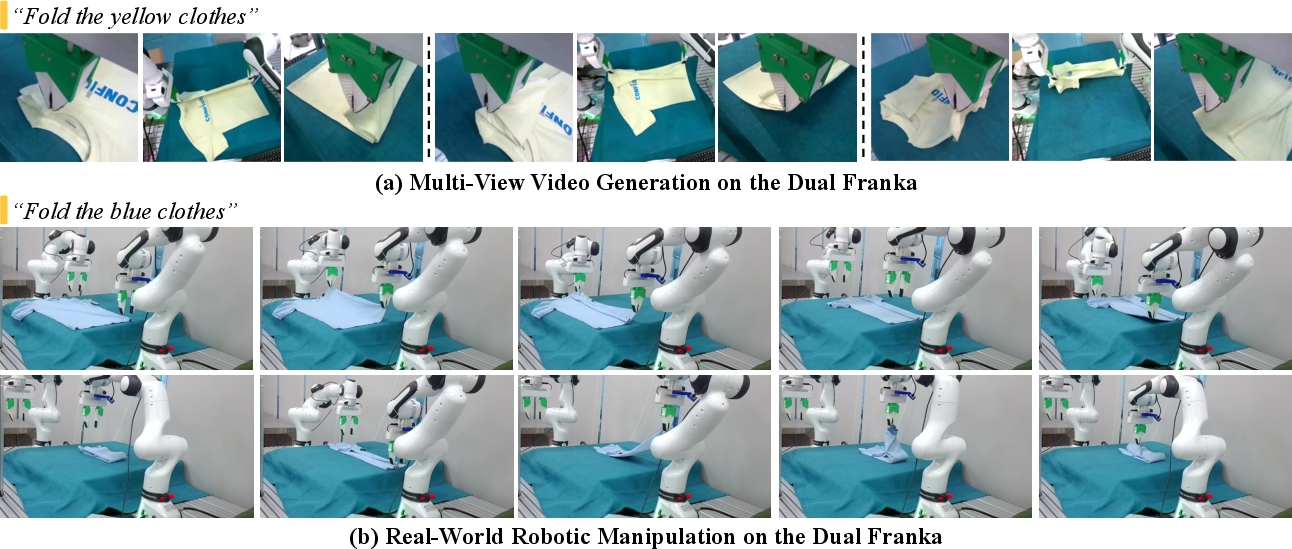

Cross-embodiment generalization is validated on Agilex Cobot Magic and Dual Franka platforms using few-shot adaptation (1 hour of teleoperated data). GE-Act outperforms baselines in complex deformable object tasks (cloth folding, box folding), achieving high precision and reliability with minimal data.

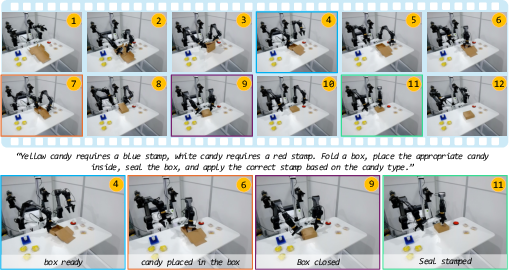

Figure 9: GE-Act demonstration on Agilex Cobot Magic, executing complex packaging with memory-based decision making.

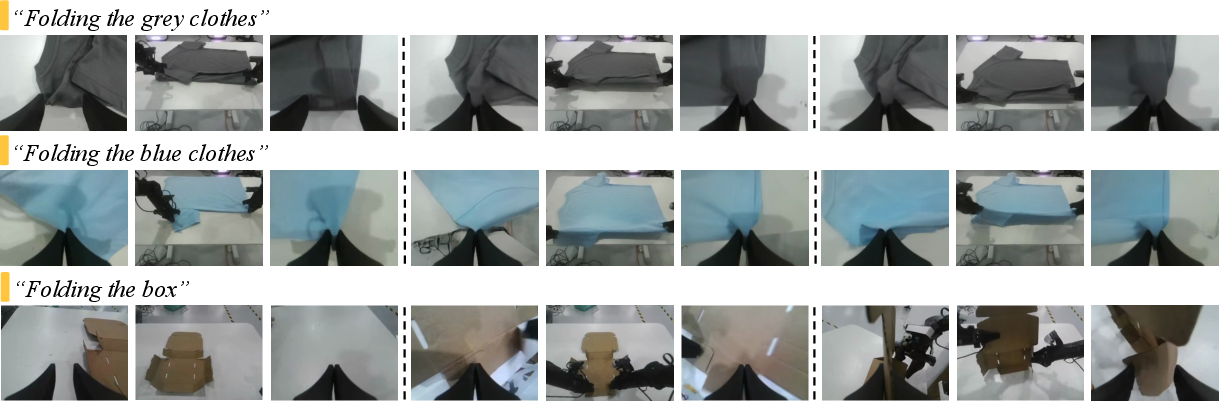

Figure 10: Multi-view video generation on Agilex Cobot Magic for folding tasks.

Figure 11: Real-world demonstrations of GE-Act on Agilex Cobot Magic, including cloth and box folding.

Figure 12: Robotic video generation and manipulation on Dual Franka via GE.

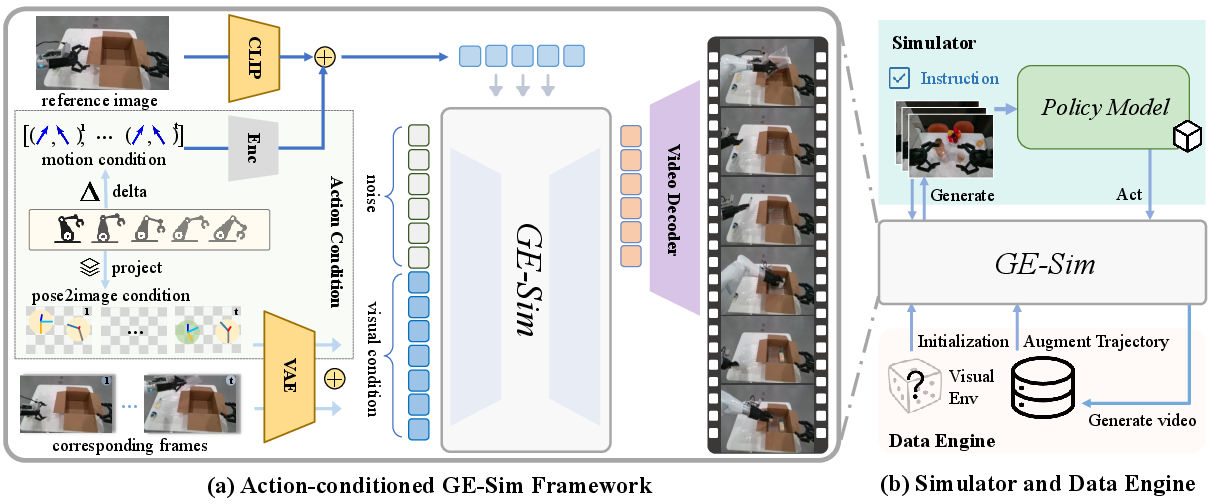

GE-Sim: World Simulator

GE-Sim repurposes GE-Base as an action-conditioned video generator, enabling closed-loop policy evaluation and controllable data generation. It incorporates hierarchical action-conditioning, fusing pose images and motion deltas into the token stream for precise spatial and temporal alignment.

Training uses high-temporal-resolution data augmented with failure cases to improve robustness. GE-Sim supports scalable simulation (thousands of episodes per hour), offering an alternative to physics engines for policy rollout and evaluation.
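
Closed-loop evaluation against a learned simulator follows a simple pattern: the policy proposes an action, the simulator predicts the next observation, and the loop repeats until success or a step budget runs out. The sketch below shows only that control flow. The policy, simulator, and success check are toy stand-ins on a one-dimensional state, not GE-Act or GE-Sim, and every name in it is hypothetical.

```python
from typing import Callable, List, Tuple

Obs = float
Action = float


def closed_loop_rollout(policy: Callable[[Obs], Action],
                        simulator: Callable[[Obs, Action], Obs],
                        is_success: Callable[[Obs], bool],
                        obs: Obs, max_steps: int) -> Tuple[bool, List[Obs]]:
    """Alternate policy and simulator calls until success or the step budget ends."""
    trajectory = [obs]
    for _ in range(max_steps):
        action = policy(obs)
        obs = simulator(obs, action)  # a neural simulator would render frames here
        trajectory.append(obs)
        if is_success(obs):
            return True, trajectory
    return False, trajectory


if __name__ == "__main__":
    goal = 1.0
    toy_policy = lambda o: max(-0.25, min(0.25, goal - o))  # bounded step toward goal
    toy_simulator = lambda o, a: o + a                      # trivial dynamics
    reached = lambda o: abs(o - goal) < 1e-9
    print(closed_loop_rollout(toy_policy, toy_simulator, reached, obs=0.0, max_steps=10))
```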

Figure 13: GE-Sim architecture for action-conditioned video generation and closed-loop simulation.

Figure 14: Visualization of action-conditioned video generation by GE-Sim, showing spatial alignment with control signals.

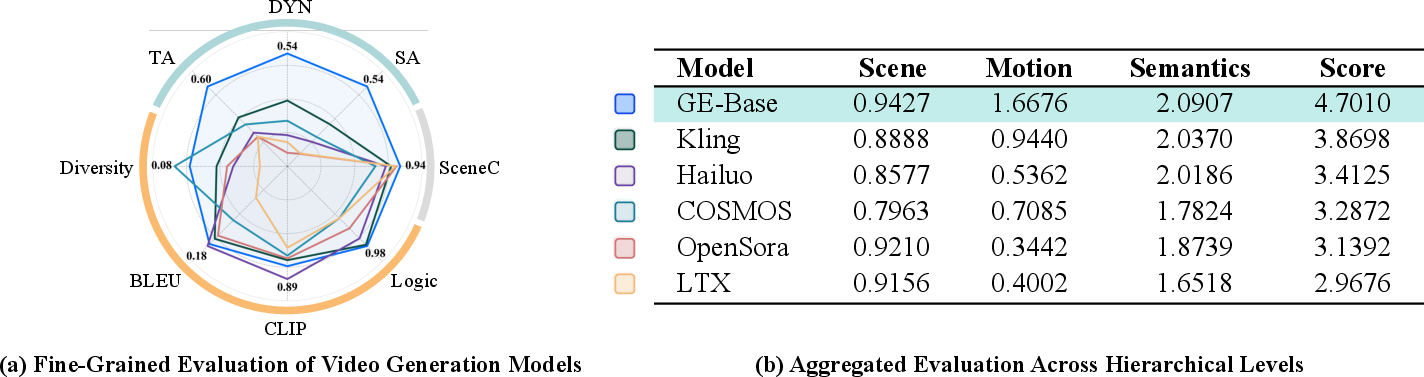

EWMBench: Embodied World Model Benchmark

EWMBench is a comprehensive evaluation suite measuring visual fidelity, physical consistency, and instruction-action alignment. It introduces domain-specific metrics:

- Scene Consistency: Patch-level feature similarity for spatial-temporal fidelity.

- Action Trajectory Quality: Symmetric Hausdorff distance, normalized dynamic time warping (NDTW), and dynamic consistency via Wasserstein distance.

- Motion Semantics: BLEU, CLIP similarity, logical correctness, and diversity.
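
Two of the trajectory metrics named above can be written down compactly. The sketch uses the textbook symmetric Hausdorff distance and a common NDTW form, exp(-DTW / (|reference| * threshold)); EWMBench's exact normalization and threshold are not given in this summary, so the `threshold` parameter and the normalization are assumptions.

```python
import math
from typing import List, Sequence

Point = Sequence[float]


def directed_hausdorff(a: List[Point], b: List[Point]) -> float:
    """Largest distance from a point in `a` to its nearest point in `b`."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def symmetric_hausdorff(a: List[Point], b: List[Point]) -> float:
    """Maximum of the two directed Hausdorff distances."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


def dtw(a: List[Point], b: List[Point]) -> float:
    """Dynamic time warping cost with Euclidean point distances."""
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def ndtw(pred: List[Point], ref: List[Point], threshold: float) -> float:
    """Map DTW cost to (0, 1]; 1 means the trajectories align perfectly."""
    return math.exp(-dtw(pred, ref) / (len(ref) * threshold))


if __name__ == "__main__":
    ref = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    shifted = [(0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]  # hypothetical generated path
    print(symmetric_hausdorff(shifted, ref), ndtw(shifted, ref, threshold=1.0))
```

Hausdorff distance captures the worst spatial deviation regardless of timing, while NDTW rewards trajectories that follow the reference in the right order, so the two are complementary.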

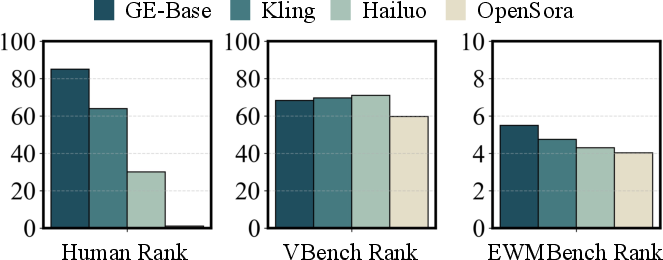

GE-Base and GE-Sim outperform baselines (OpenSora, Kling, Hailuo, COSMOS, LTX-Video) in temporal alignment, dynamic consistency, and control-aware generation fidelity. EWMBench rankings align closely with human preference, validating its reliability for embodied evaluation.

Figure 15: Comprehensive evaluation of video world models for robotic manipulation using EWMBench.

Figure 16: Consistency and validity analysis of EWMBench metrics versus human preference and VBench.

Limitations

Despite its strengths, Genie Envisioner is limited by:

- Data diversity: Reliance on a single real-world dataset restricts embodiment and scene variety.

- Embodiment scope: Focused on tabletop manipulation; lacks dexterous hand and full-body capabilities.

- Evaluation methodology: Proxy metrics and partial human validation; fully automated, reliable assessment remains an open challenge.

Conclusion

Genie Envisioner establishes a unified, scalable foundation for instruction-driven robotic manipulation, integrating high-fidelity video generation, efficient action policy inference, and robust simulation. GE-Base, GE-Act, and GE-Sim collectively enable precise, generalizable control and evaluation across diverse tasks and embodiments. EWMBench provides rigorous, task-relevant metrics, ensuring meaningful assessment of embodied world models. The platform's open-source release will facilitate further research in general-purpose embodied intelligence and scalable robotic learning.
