- The paper presents an open-source three-stage autoregressive model that predicts depth, trace, and action tokens for enhanced spatial reasoning in robotics.

- It builds on the Molmo vision-language backbone (a ViT-based encoder feeding a decoder-only LLM) with custom depth, trace, and action tokenization, and reports gains in both zero-shot simulation and real-world settings.

- The model demonstrates superior steerability and robust generalization, including a +23.3% improvement over baselines in real-world out-of-distribution settings.

MolmoAct: Action Reasoning Models that can Reason in Space

Introduction and Motivation

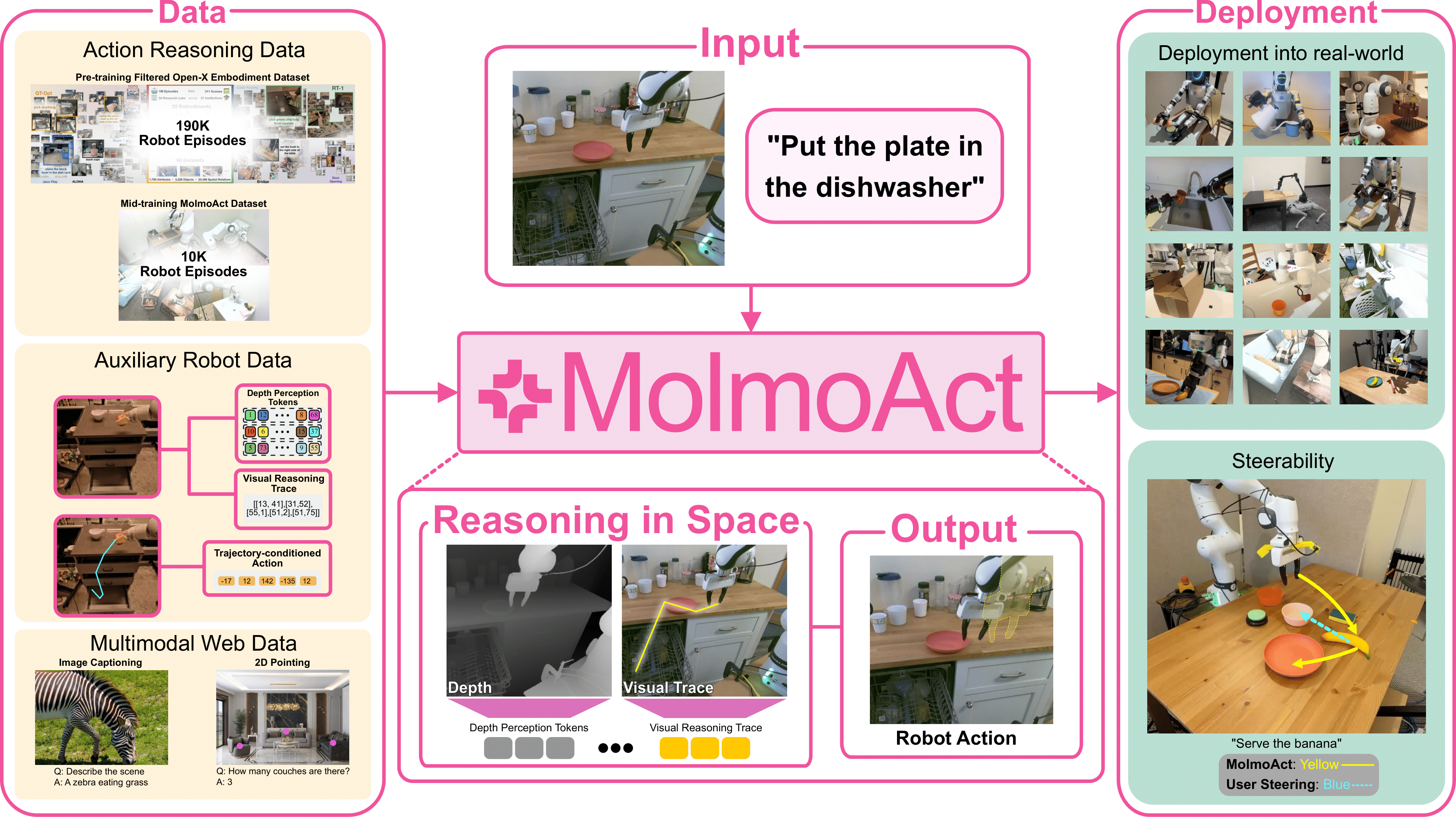

MolmoAct introduces a new class of open-source Action Reasoning Models (ARMs) for robotic manipulation, addressing the limitations of current Vision-Language-Action (VLA) models that map perception and instructions directly to control without explicit spatial reasoning. The core hypothesis is that explicit, structured spatial reasoning—grounded in depth perception and trajectory planning—enables more robust, generalizable, and explainable robotic behavior. MolmoAct operationalizes this by decomposing the action prediction pipeline into three autoregressive stages: depth perception token prediction, visual reasoning trace generation, and low-level action token prediction.

Figure 1: Overview of MolmoAct's three-stage reasoning pipeline: depth perception, visual reasoning trace, and action token prediction, each yielding interpretable outputs.

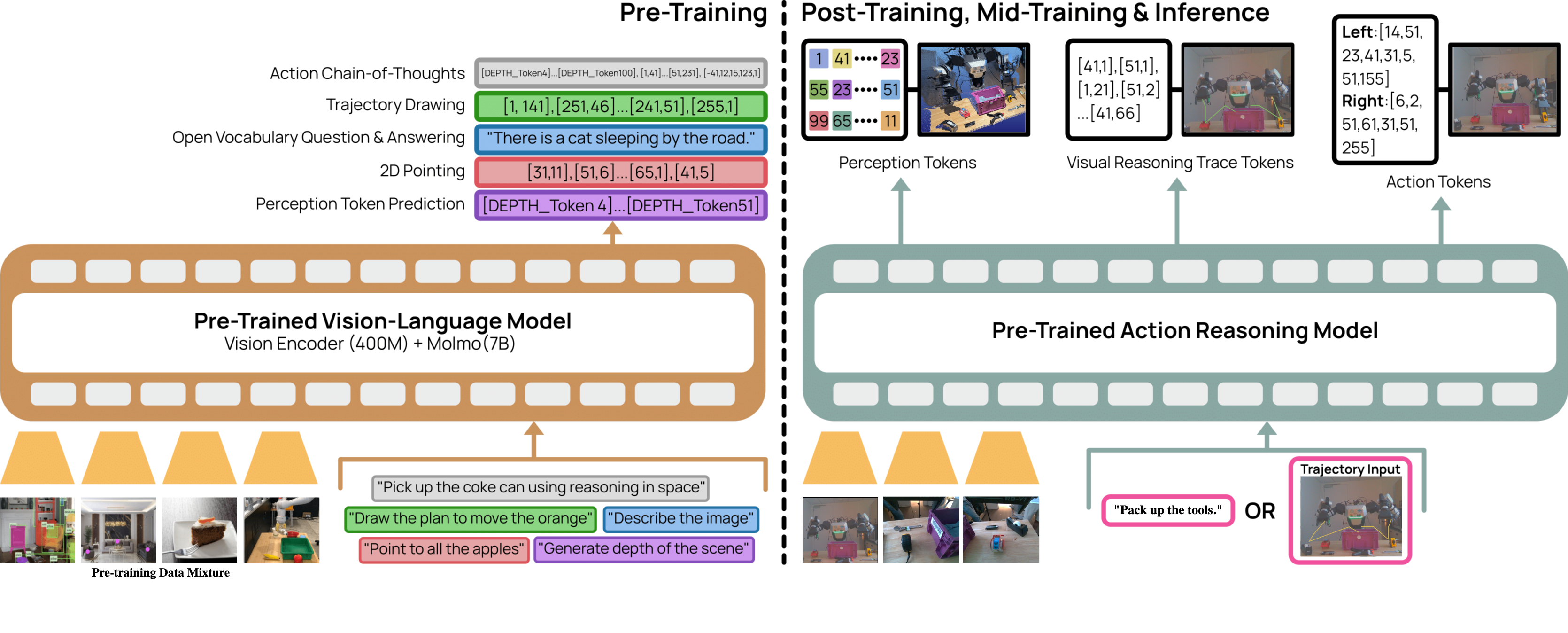

Model Architecture and Reasoning Pipeline

MolmoAct builds on the Molmo vision-language backbone, comprising a ViT-based visual encoder, a vision-language connector, and a decoder-only LLM. To support action reasoning, the model predicts two intermediate token representations before emitting the final action tokens:

- Depth Perception Tokens: Discrete tokens representing a quantized depth map of the scene, produced via VQVAE encoding of depth maps from a specialist estimator. This step grounds the model's spatial understanding in 2.5D geometry.

- Visual Reasoning Trace Tokens: A sequence of 2D waypoints (polylines) in the image plane, representing the planned end-effector trajectory, normalized to the image resolution.

- Action Tokens: Discretized, ordinal-structured tokens representing robot control commands, with a custom tokenization scheme that preserves local correlation in the action space.
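To make the first two token types concrete, here is a minimal pure-Python sketch, not MolmoAct's code. It assumes the VQVAE encoder has already turned the estimated depth map into one latent vector per patch, so only the vector-quantization lookup is shown. The toy codebook, the function names, and the 256-cell coordinate grid used for trace normalization are all illustrative assumptions.

```python
from typing import List, Sequence, Tuple

def quantize_latents(latents: Sequence[Sequence[float]],
                     codebook: Sequence[Sequence[float]]) -> List[int]:
    """VQ lookup: map each patch latent to the index of its nearest codebook vector."""
    tokens = []
    for z in latents:
        # squared Euclidean distance from this latent to every codebook entry
        dists = [sum((zi - ci) ** 2 for zi, ci in zip(z, c)) for c in codebook]
        tokens.append(min(range(len(codebook)), key=lambda j: dists[j]))
    return tokens

def normalize_trace(points_px: Sequence[Tuple[float, float]], width: int, height: int,
                    grid: int = 256) -> List[Tuple[int, int]]:
    """Rescale pixel waypoints to integer coordinates on an assumed grid x grid lattice."""
    out = []
    for x, y in points_px:
        u = min(grid - 1, max(0, round(x / width * (grid - 1))))
        v = min(grid - 1, max(0, round(y / height * (grid - 1))))
        out.append((u, v))
    return out

# toy 1-D codebook standing in for a learned VQVAE codebook
depth_tokens = quantize_latents([[0.1], [0.8], [0.45]], [[0.0], [0.5], [1.0]])
trace_tokens = normalize_trace([(0, 0), (320, 240), (640, 480)], width=640, height=480)
print(depth_tokens)  # [0, 2, 1]
print(trace_tokens)  # [(0, 0), (128, 128), (255, 255)]
```

In a real VQVAE the codebook is learned jointly with the encoder and decoder; the nearest-neighbour lookup is the step that turns continuous depth features into the discrete tokens the LLM can predict.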

The model predicts these three token sequences autoregressively, conditioning each stage on the outputs of all previous stages, thereby enforcing explicit spatial grounding at every step.
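In probabilistic terms, the staged decoding is a chain-rule factorization. Writing $I$ for the image observation, $T$ for the instruction, and $d$, $\tau$, $a$ for the depth, trace, and action token sequences (the notation of the factorization cited under Technical Contributions), a worked form is:

```latex
p(d, \tau, a \mid I, T) = p(d \mid I, T)\; p(\tau \mid d, I, T)\; p(a \mid \tau, d, I, T),
\qquad
p(d \mid I, T) = \prod_{i=1}^{|d|} p(d_i \mid d_{<i}, I, T)
```

and likewise token by token within $\tau$ and $a$. Because the action factor conditions on $\tau$, substituting a user-drawn trace for the predicted one changes the distribution over actions, which is what makes trace-based steering possible.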

Figure 2: MolmoAct's training process, showing pre-training on diverse multimodal and robot data, and post-training with multi-view images and either language or visual trajectory inputs.

Data Curation and Training Regime

MolmoAct is trained on a mixture of action reasoning data, auxiliary robot data, and multimodal web data. The action reasoning data is constructed by augmenting standard robot datasets (e.g., RT-1, BridgeData V2, BC-Z) with ground-truth depth tokens and visual traces: depth tokens come from VQVAE quantization of depth maps produced by a specialist estimator, and traces come from gripper positions localized by a vision-language model. The MolmoAct Dataset, collected in-house, provides over 10,000 high-quality trajectories across 93 manipulation tasks in both home and tabletop environments, with a long-tailed verb distribution.
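A hypothetical sketch of how a trace label could be derived from the localized gripper positions: assuming a static camera and that `gripper_px[k]` holds the gripper's pixel location in frame k, the label at step t is the remaining path, subsampled to a few waypoints. The function name and the `max_points` cap are illustrative, not taken from the MolmoAct pipeline.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def trace_target(gripper_px: Sequence[Point], t: int, max_points: int = 8) -> List[Point]:
    """Hypothetical trace label for timestep t from per-frame gripper pixel positions.

    Keeps the gripper path from frame t to the end of the episode and subsamples it
    evenly to at most `max_points` waypoints, always keeping the first and last point.
    Assumes a static camera, so positions from later frames are valid in frame t's image.
    """
    if max_points < 2:
        raise ValueError("max_points must be at least 2")
    future = list(gripper_px[t:])
    if len(future) <= max_points:
        return future
    step = (len(future) - 1) / (max_points - 1)
    return [future[round(i * step)] for i in range(max_points)]

path = [(float(i), 0.0) for i in range(10)]
print(trace_target(path, t=0, max_points=4))  # [(0.0, 0.0), (3.0, 0.0), (6.0, 0.0), (9.0, 0.0)]
```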

Figure 3: Data mixture distribution for pre-training, highlighting the increased proportion of auxiliary depth and trace data in the sampled subset.

Figure 4: Example tasks and verb frequency in the MolmoAct Dataset, illustrating task diversity and the long-tail action distribution.

The training pipeline consists of three stages:

- Pre-training: 26.3M samples from an Open X-Embodiment (OXE) subset, auxiliary data, and web data.

- Mid-training: 2M samples from the MolmoAct Dataset, focusing on household manipulation.

- Post-training: Task-specific fine-tuning using LoRA adapters, with action chunking for efficient adaptation.
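Action chunking means the model predicts several future actions per step rather than one. A minimal sketch of how chunked training targets might be built from an episode, assuming chunks that run past the episode end are padded with the final action; the horizon and padding rule used in MolmoAct's post-training are not stated here, and `chunk_targets` is a hypothetical name.

```python
from typing import List, Sequence

Action = Sequence[float]

def chunk_targets(actions: Sequence[Action], horizon: int) -> List[List[Action]]:
    """For each timestep t, return the next `horizon` actions as the training target.

    Chunks that would run past the end of the episode repeat the final action
    (an assumed padding convention).
    """
    if horizon < 1:
        raise ValueError("horizon must be >= 1")
    n = len(actions)
    return [[actions[min(t + k, n - 1)] for k in range(horizon)] for t in range(n)]

episode = [[0.0], [1.0], [2.0]]
print(chunk_targets(episode, horizon=2))  # [[[0.0], [1.0]], [[1.0], [2.0]], [[2.0], [2.0]]]
```

Predicting a chunk per forward pass lets the policy run fewer decoding steps per second of robot motion, which is the usual motivation for chunking.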

Experimental Evaluation

MolmoAct-7B-D achieves 70.5% zero-shot accuracy on SimplerEnv Visual Matching tasks, outperforming closed-source baselines despite using an order of magnitude less pre-training data. Fine-tuning improves performance further, making the model a strong initialization for downstream deployment.

Fast Adaptation and Real-World Transfer

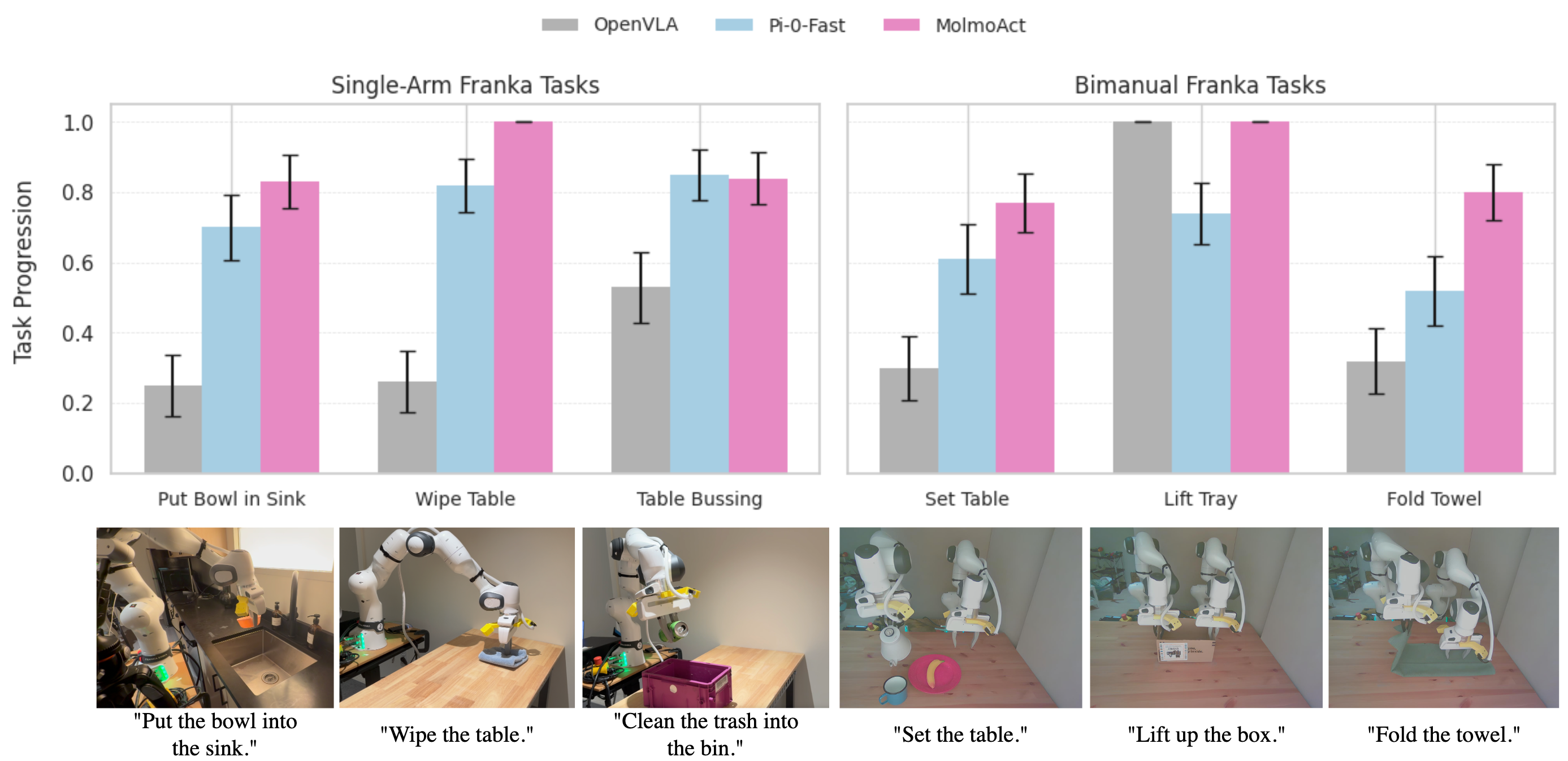

On the LIBERO benchmark, MolmoAct-7B-D attains an 86.6% average success rate, with a +6.3% gain over ThinkAct on long-horizon tasks. In real-world single-arm and bimanual Franka setups, MolmoAct outperforms baselines by +10% (single-arm) and +22.7% (bimanual) in task progression.

Figure 5: Real-world evaluation on Franka tasks, showing MolmoAct's superior task progression across both single-arm and bimanual settings.

Out-of-Distribution Generalization

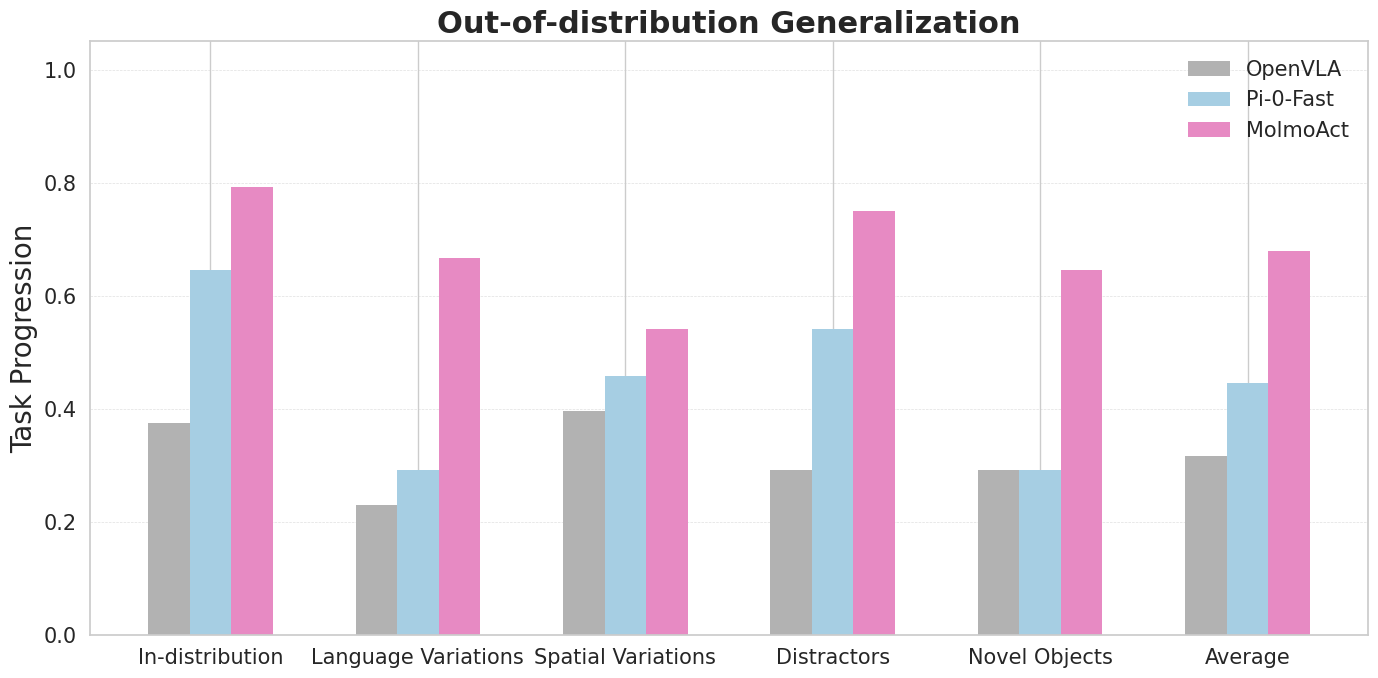

MolmoAct demonstrates strong robustness to distribution shifts, achieving a +23.3% improvement over baselines in real-world OOD generalization, and maintaining high performance under language, spatial, distractor, and novel object perturbations.

Figure 6: MolmoAct's generalization beyond training distributions, with consistent gains across OOD conditions.

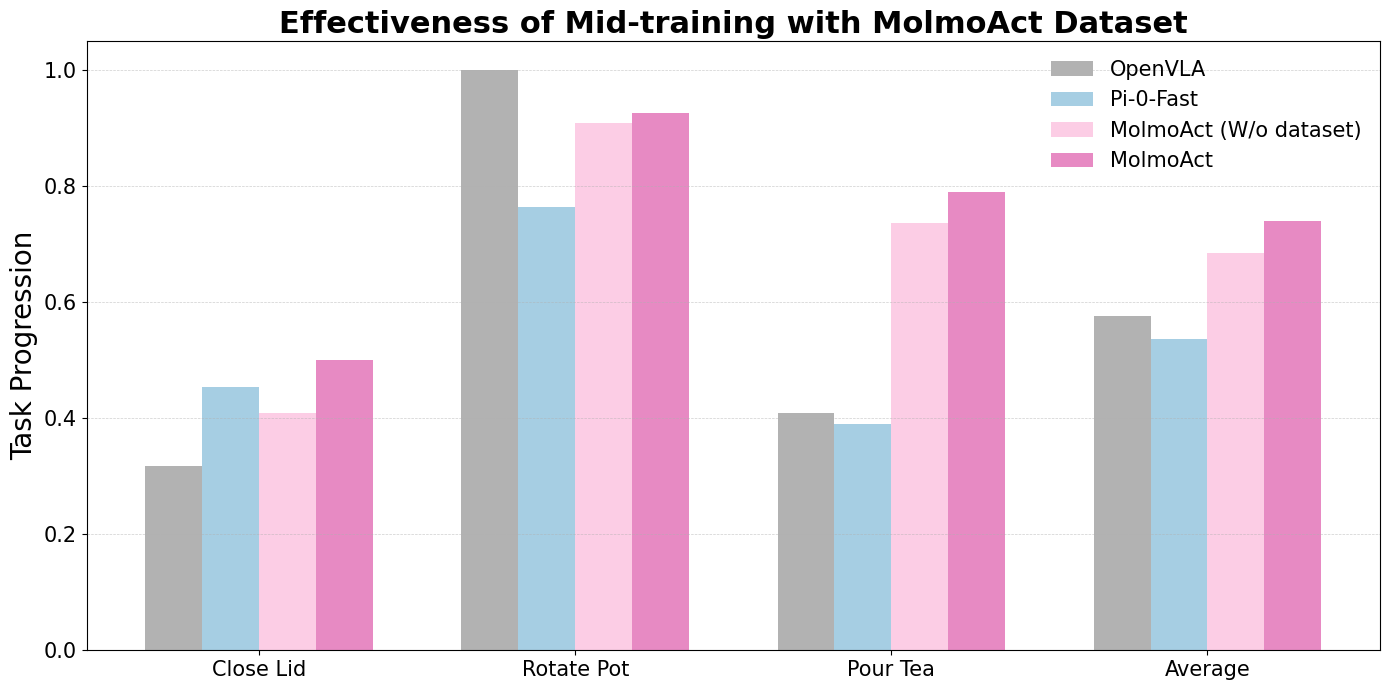

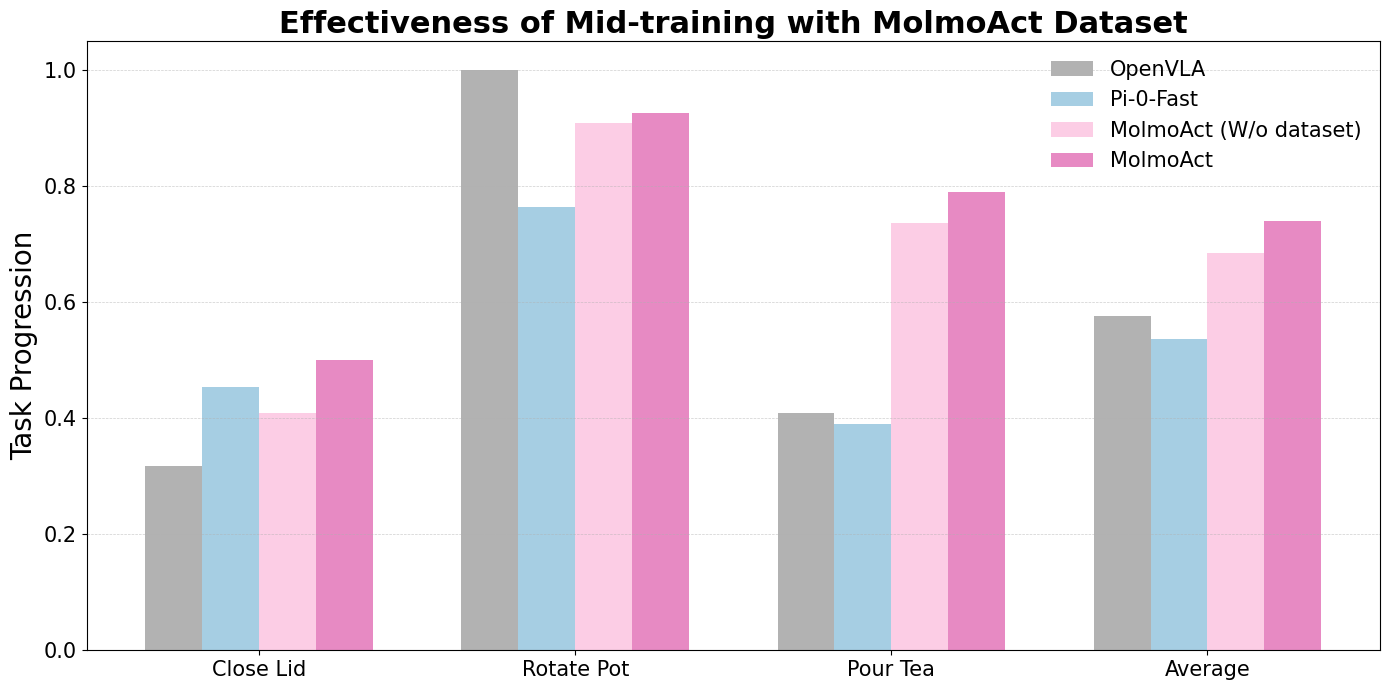

Impact of the MolmoAct Dataset

Mid-training on the MolmoAct Dataset yields a 5.5% average improvement in real-world task performance, confirming the value of high-quality, spatially annotated data for generalist manipulation.

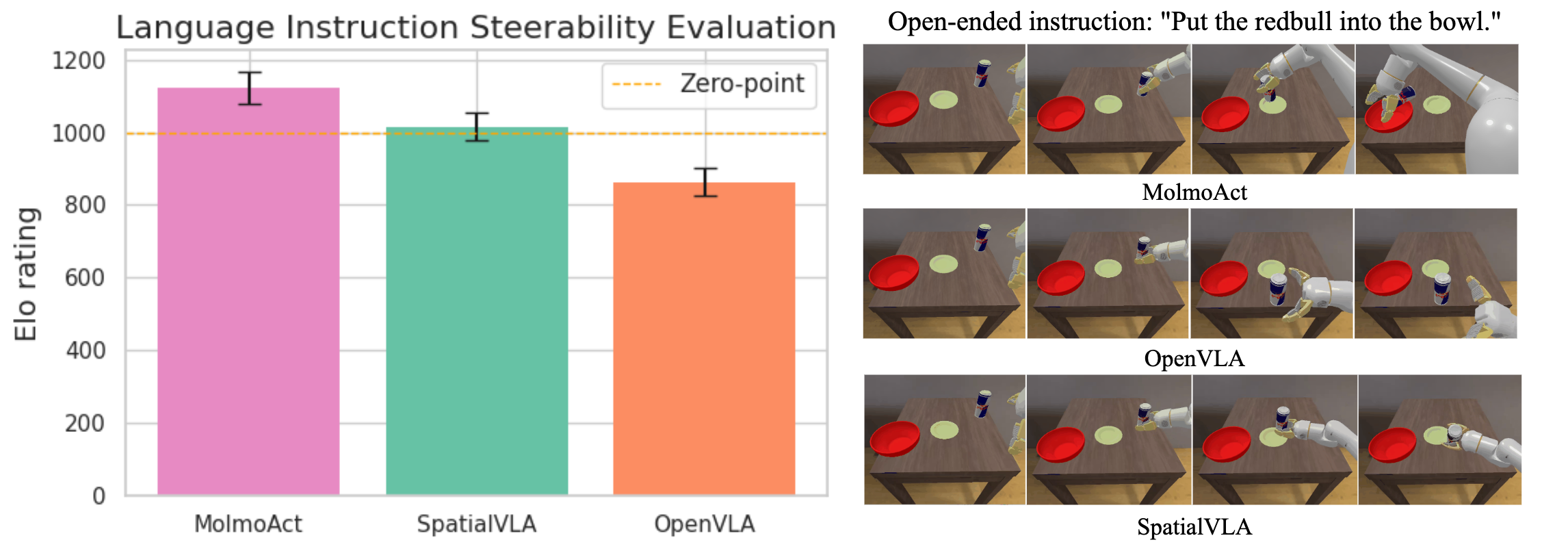

Instruction Following and Steerability

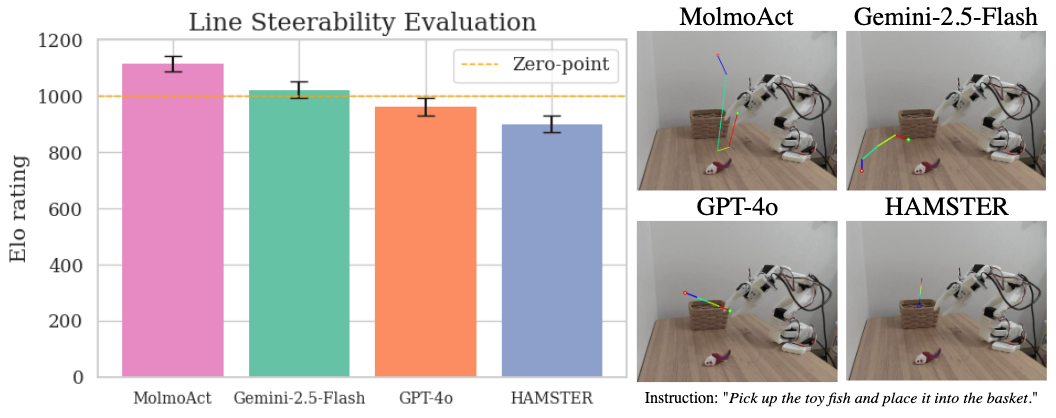

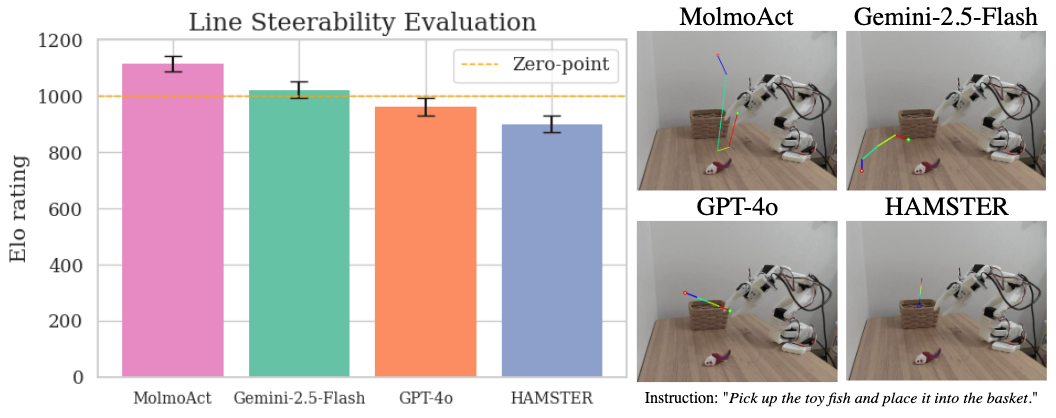

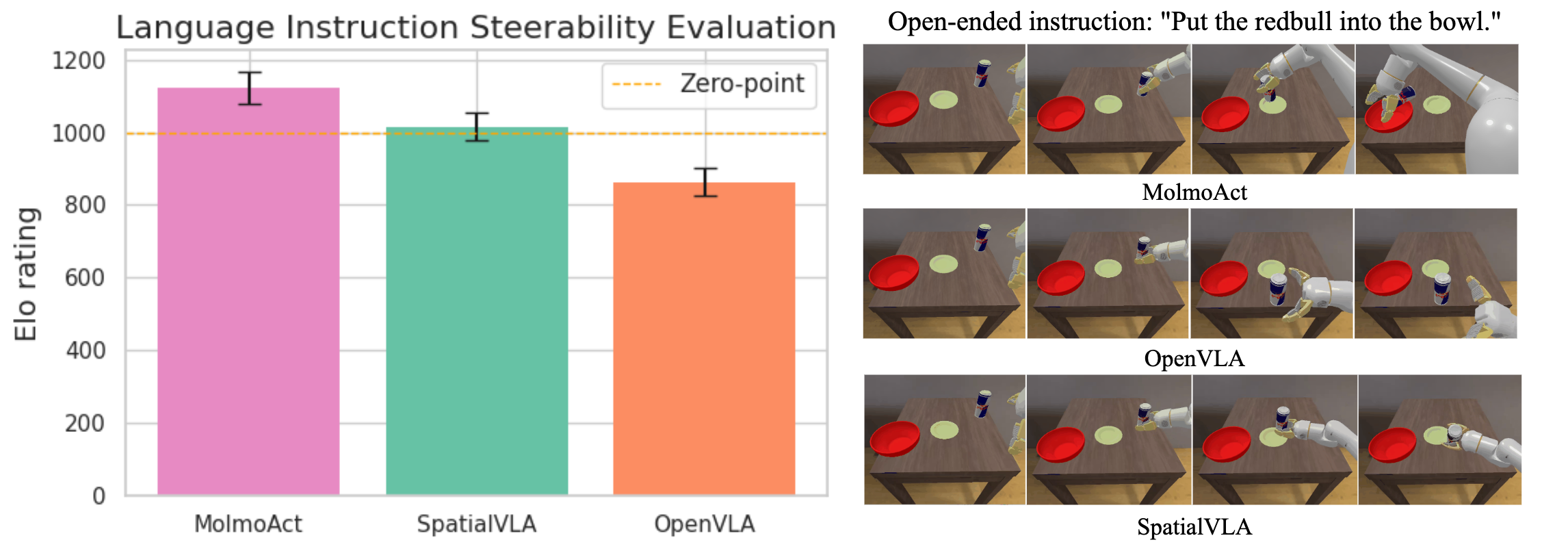

MolmoAct achieves top human-preference Elo ratings for open-ended instruction following and visual trace generation, outperforming Gemini-2.5-Flash, GPT-4o, HAMSTER, SpatialVLA, and OpenVLA.

Figure 7: Line steerability evaluation, with MolmoAct achieving the highest Elo ratings and superior qualitative trace alignment.

Figure 8: Language instruction following, with MolmoAct's execution traces more closely matching intended instructions.
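Elo ratings turn pairwise human judgments ("which output follows the instruction better?") into a single score per model. The sketch below is the standard sequential Elo update; the K-factor of 32, the starting rating of 1000, and the placeholder model names are assumptions, since the exact rating procedure behind these figures is not specified here.

```python
from typing import Dict, Iterable, Tuple

def elo_ratings(matches: Iterable[Tuple[str, str, float]],
                k: float = 32.0, init: float = 1000.0) -> Dict[str, float]:
    """Sequential Elo updates from pairwise judgments.

    Each match is (model_a, model_b, score_a): 1.0 if raters preferred A,
    0.0 if they preferred B, 0.5 for a tie.
    """
    ratings: Dict[str, float] = {}
    for a, b, score_a in matches:
        ra = ratings.setdefault(a, init)
        rb = ratings.setdefault(b, init)
        # probability that A wins under the logistic Elo model
        expected_a = 1.0 / (1.0 + 10 ** ((rb - ra) / 400.0))
        ratings[a] = ra + k * (score_a - expected_a)
        ratings[b] = rb + k * ((1.0 - score_a) - (1.0 - expected_a))
    return ratings

print(elo_ratings([("model_a", "model_b", 1.0)]))  # {'model_a': 1016.0, 'model_b': 984.0}
```

Sequential Elo depends on the order of comparisons; evaluations often fit a Bradley-Terry model over all judgments instead, which yields order-independent scores on the same scale.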

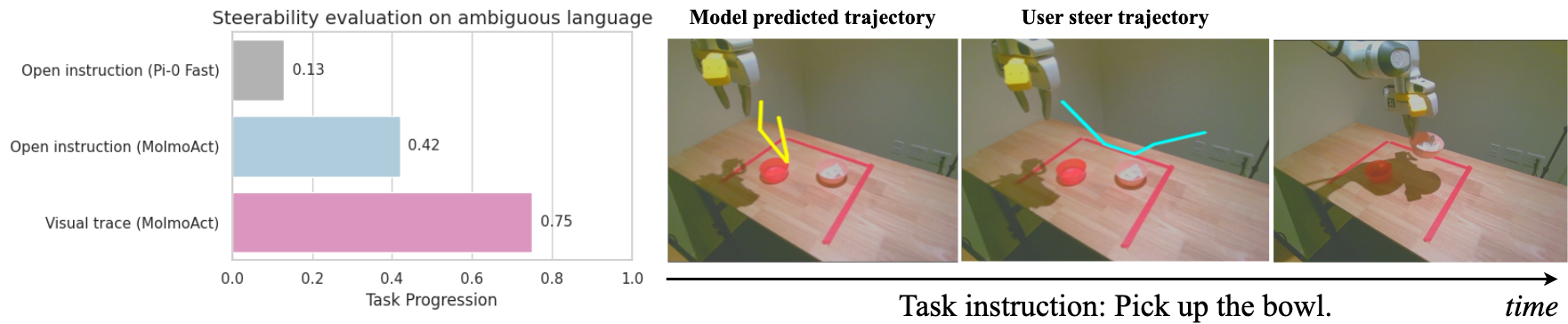

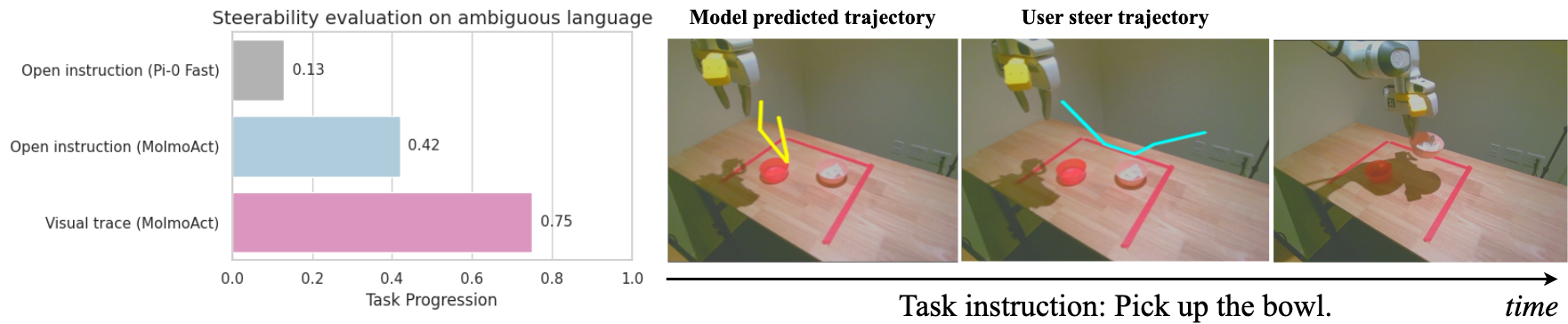

The model's steerability is further validated by interactive experiments: visual trace steering achieves a 0.75 success rate, outperforming both open-instruction variants and language-only steering by significant margins.

Figure 9: Steerability evaluation, showing the effectiveness of visual trace steering in correcting and guiding robot actions.
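Before a user-drawn trace can condition the model, the raw stroke (often many unevenly spaced mouse or touch samples) has to be reduced to a short waypoint polyline like the ones the model is trained on. One plausible way to do that, offered as an assumption rather than the interface MolmoAct ships, is to resample the stroke at evenly spaced arc-length positions; `resample_stroke` is a hypothetical helper.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def resample_stroke(stroke: Sequence[Point], n: int) -> List[Point]:
    """Resample a hand-drawn polyline to n points evenly spaced along its arc length."""
    if n < 2 or len(stroke) < 2:
        raise ValueError("need n >= 2 and at least two stroke points")
    # cumulative arc length at each input vertex
    cum = [0.0]
    for (x0, y0), (x1, y1) in zip(stroke, stroke[1:]):
        cum.append(cum[-1] + math.hypot(x1 - x0, y1 - y0))
    total = cum[-1]
    if total == 0.0:
        return [(stroke[0][0], stroke[0][1])] * n
    out: List[Point] = []
    seg = 0
    for i in range(n):
        target = total * i / (n - 1)
        # advance to the segment that contains the target distance
        while seg < len(stroke) - 2 and cum[seg + 1] < target:
            seg += 1
        seg_len = cum[seg + 1] - cum[seg]
        frac = 0.0 if seg_len == 0.0 else (target - cum[seg]) / seg_len
        (x0, y0), (x1, y1) = stroke[seg], stroke[seg + 1]
        out.append((x0 + frac * (x1 - x0), y0 + frac * (y1 - y0)))
    return out

print(resample_stroke([(0, 0), (10, 0), (10, 10)], n=5))
# [(0.0, 0.0), (5.0, 0.0), (10.0, 0.0), (10.0, 5.0), (10.0, 10.0)]
```

The resampled points could then be normalized to the image resolution in the same way as the training traces.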

Technical Contributions and Implementation Details

- Action Tokenization: The use of ordinal-structured, similarity-preserving action tokens reduces training time by >5x compared to GR00T N1, with improved optimization stability.

- Depth and Trace Token Generation: VQVAE-based quantization of depth maps and VLM-based gripper localization enable efficient, scalable annotation of spatial reasoning targets.

- Multi-Stage Autoregressive Decoding: The explicit factorization of p(d,τ,a∣I,T) into depth, trace, and action stages ensures that each action is conditioned on both the inferred scene depth and the planned 2D trajectory.

- Steerability Interface: Direct conditioning on user-drawn visual traces in the image plane provides a robust, unambiguous mechanism for interactive policy steering, circumventing the ambiguity and brittleness of language-only control.
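The ordinal idea behind the action tokens can be illustrated with plain uniform binning: each action dimension is clipped to an assumed range and mapped to one of `n_bins` ordered bins, so nearby actions land in nearby bins. This is a minimal sketch; the 256-bin count and the per-dimension ranges are assumptions, and it does not reproduce how MolmoAct maps bins onto vocabulary tokens to make their embeddings similarity-preserving.

```python
from typing import List, Sequence

def action_to_bins(action: Sequence[float], low: Sequence[float], high: Sequence[float],
                   n_bins: int = 256) -> List[int]:
    """Uniformly discretize each action dimension into an ordinal bin index."""
    bins = []
    for a, lo, hi in zip(action, low, high):
        if hi <= lo:
            raise ValueError("each dimension needs high > low")
        x = (min(max(a, lo), hi) - lo) / (hi - lo)   # clip, then scale to [0, 1]
        bins.append(min(n_bins - 1, int(x * n_bins)))
    return bins

def bins_to_action(bins: Sequence[int], low: Sequence[float], high: Sequence[float],
                   n_bins: int = 256) -> List[float]:
    """Decode bin indices back to bin-center values."""
    return [lo + (b + 0.5) / n_bins * (hi - lo) for b, lo, hi in zip(bins, low, high)]

low, high = [-1.0] * 3, [1.0] * 3
bins = action_to_bins([-1.0, 0.0, 1.0], low, high)
print(bins)                              # [0, 128, 255]
print(bins_to_action(bins, low, high))   # bin centers, within half a bin of the inputs
```

Because bin indices preserve order, a small change in a continuous action moves the target by at most a few bins rather than to an unrelated token, which is the property the ordinal tokenization is meant to exploit.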

Implications and Future Directions

MolmoAct demonstrates that explicit spatial reasoning—via depth and trajectory tokenization—substantially improves the generalization, explainability, and steerability of robotic foundation models. The open release of model weights, code, and the MolmoAct Dataset establishes a reproducible blueprint for future ARMs. The results suggest that further gains may be realized by:

- Extending spatial reasoning to full 3D trajectory planning, potentially leveraging predicted depth tokens for 3D trace lifting.

- Improving the resolution and precision of depth and trace tokenization for fine-grained manipulation.

- Optimizing inference speed and model size for real-time, edge deployment.

- Integrating temporal reasoning and memory architectures for long-horizon, multi-stage tasks.
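The first direction, lifting the 2D trace into 3D with predicted depth, comes down to standard pinhole back-projection. A minimal sketch, assuming the trace has already been converted from normalized coordinates back to pixels, that the depth tokens have been decoded to a metric depth map exposed through a hypothetical `depth_at(u, v)` lookup, and that camera intrinsics `fx, fy, cx, cy` are known:

```python
from typing import Callable, List, Sequence, Tuple

def lift_trace(trace_px: Sequence[Tuple[float, float]],
               depth_at: Callable[[float, float], float],
               fx: float, fy: float, cx: float, cy: float) -> List[Tuple[float, float, float]]:
    """Back-project 2D waypoints (u, v) into camera-frame 3D points with a pinhole model."""
    points = []
    for u, v in trace_px:
        z = depth_at(u, v)  # metric depth at this pixel (assumed available)
        points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points

# flat scene 2 m from the camera, 500 px focal length, principal point at image center
print(lift_trace([(320, 240), (420, 240)], lambda u, v: 2.0, 500, 500, 320, 240))
# [(0.0, 0.0, 2.0), (0.4, 0.0, 2.0)]
```

If the depth estimator only provides relative depth, the lifted trace is correct up to scale, and a metric calibration step would be needed before using it for 3D planning.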

Conclusion

MolmoAct advances the state of the art in generalist robotic manipulation by introducing a structured, spatially grounded action reasoning pipeline. The model achieves strong numerical results across simulation and real-world benchmarks, with robust generalization and interactive steerability. The open-source release of all components positions MolmoAct as a foundation for future research in embodied AI, emphasizing the importance of explicit spatial reasoning in bridging perception and purposeful action.