Learning to Reason for Factuality (2508.05618v1)

Abstract: Reasoning LLMs (R-LLMs) have significantly advanced complex reasoning tasks but often struggle with factuality, generating substantially more hallucinations than their non-reasoning counterparts on long-form factuality benchmarks. However, extending online Reinforcement Learning (RL), a key component in recent R-LLM advancements, to the long-form factuality setting poses several unique challenges due to the lack of reliable verification methods. Previous work has utilized automatic factuality evaluation frameworks such as FActScore to curate preference data in the offline RL setting, yet we find that directly leveraging such methods as the reward in online RL leads to reward hacking in multiple ways, such as producing less detailed or relevant responses. We propose a novel reward function that simultaneously considers the factual precision, response detail level, and answer relevance, and applies online RL to learn high quality factual reasoning. Evaluated on six long-form factuality benchmarks, our factual reasoning model achieves an average reduction of 23.1 percentage points in hallucination rate, a 23% increase in answer detail level, and no degradation in the overall response helpfulness.

Summary

- The paper introduces a composite reward function that jointly optimizes factual precision, response detail, and answer relevance to minimize hallucinations.

- The methodology leverages online RL with GRPO, achieving a 23.1% reduction in hallucination rate and a 23% increase in response detail without harming helpfulness.

- Scalable evaluation via an optimized VeriScore pipeline reduces verification time by 30x, enabling efficient RL training in large-scale LLM deployments.

Learning to Reason for Factuality: Online RL for Long-Form Factuality in LLMs

Introduction

The paper "Learning to Reason for Factuality" (2508.05618) addresses the persistent challenge of hallucinations in Reasoning LLMs (R-LLMs) when generating long-form factual responses. While R-LLMs have demonstrated significant improvements in complex reasoning tasks, their tendency to hallucinate factual content is exacerbated compared to non-reasoning models. The authors systematically analyze this phenomenon and propose a novel online reinforcement learning (RL) framework, incorporating a reward function that jointly optimizes factual precision, response detail, and answer relevance. This approach is evaluated across six long-form factuality benchmarks, demonstrating substantial reductions in hallucination rates and improvements in response detail without sacrificing helpfulness.

Problem Analysis and Motivation

R-LLMs, such as DeepSeek-R1 and QwQ-32B, utilize extended chain-of-thought (CoT) reasoning to tackle complex tasks. However, empirical results show that these models exhibit higher hallucination rates on long-form factuality benchmarks compared to their non-reasoning counterparts. The root cause is traced to RL training regimes that prioritize logical reasoning (e.g., math, coding) but neglect factuality alignment. The lack of reliable, scalable verification methods for long-form factuality further complicates the application of online RL, as existing automatic evaluators (e.g., VeriScore) are slow and susceptible to reward hacking.

Reward Function Design

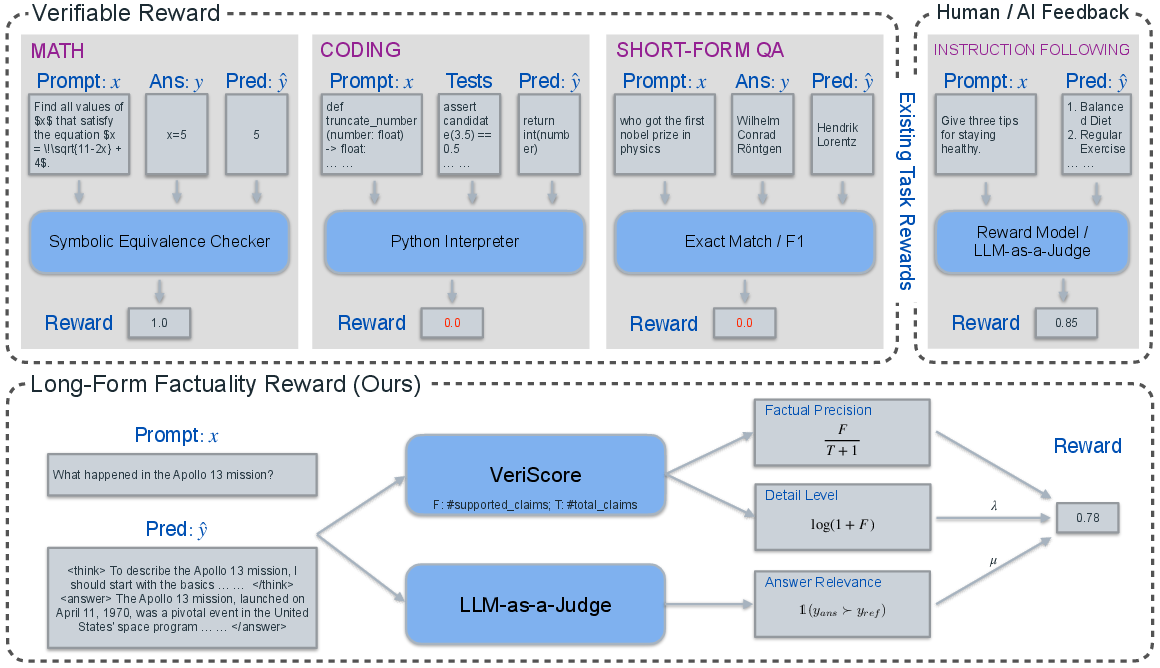

A central contribution is the design of a composite reward function for factual reasoning in long-form responses. The reward integrates three components:

- Factual Precision (Rfact): Ratio of supported claims to total claims, smoothed to avoid division by zero.

- Response Detail Level (Rdtl): Logarithmic function of the number of supported claims, incentivizing comprehensive answers.

- Answer Relevance (Rrel): Binary indicator from an LLM judge comparing the candidate response to a reference, ensuring relevance and overall quality.

This design explicitly mitigates reward hacking, where models might produce short, overly generic, or irrelevant but factually correct responses to maximize reward.

Figure 1: Reward design for Long-Form Factuality, illustrating the necessity of balancing factual precision, detail, and relevance to prevent reward hacking.

Scalable Factuality Evaluation

To enable real-time reward computation in online RL, the authors implement a highly parallelized version of VeriScore, achieving a 30x speedup over the original. This involves batching LLM requests for claim extraction and verification, asynchronous evidence retrieval, and distributed inference using Matrix and vLLM. The optimized pipeline reduces per-response verification time from minutes to seconds, making it feasible for RL rollouts at scale.

Training Paradigm: Offline and Online RL

The training pipeline consists of:

- Prompt Curation: Synthetic fact-seeking prompts are generated using Llama 4, grounded in diverse real-world and factual datasets, ensuring coverage and relevance.

- Supervised Finetuning (SFT): Seed data with factual CoT traces is curated, and the base model is finetuned to produce structured reasoning chains.

- Direct Preference Optimization (DPO): Offline RL using preference pairs, with constraints on factual precision margin and response length to avoid length-based reward hacking.

- Group Relative Policy Optimization (GRPO): Online RL algorithm that samples groups of responses per prompt, computes relative advantages, and optimizes the composite reward.

Empirical Results

Evaluation on six long-form factuality benchmarks (LongFact, FAVA, AlpacaFact, Biography, Factory-Hard, FactBench-Hard) reveals:

- Online RL (SFT + GRPO): Achieves an average 23.1 percentage point reduction in hallucination rate and a 23% increase in detail level over the base model, with no degradation in helpfulness (AlpacaEval win rate > 50%).

- Offline RL (SFT + DPO): Improves precision but often at the expense of response quality and detail, with win rates dropping below 40%.

- Existing R-LLMs: Exhibit higher hallucination rates and inconsistent trade-offs between precision and detail compared to non-reasoning models.

Reward Ablation and Trade-offs

Ablation studies on the reward function components demonstrate:

- Optimizing solely for factual precision increases precision but reduces relevance and win rate, indicating reward hacking.

- Adding detail level further inflates supported claims but exacerbates irrelevance.

- Incorporating answer relevance via LLM-as-a-judge restores win rate and balances precision/detail trade-offs.

- Adjusting the weights (λ, μ) allows practitioners to tune the model for more accurate or more detailed responses, depending on application requirements.

Reasoning Strategies and CoT Analysis

Analysis of CoT traces during training shows:

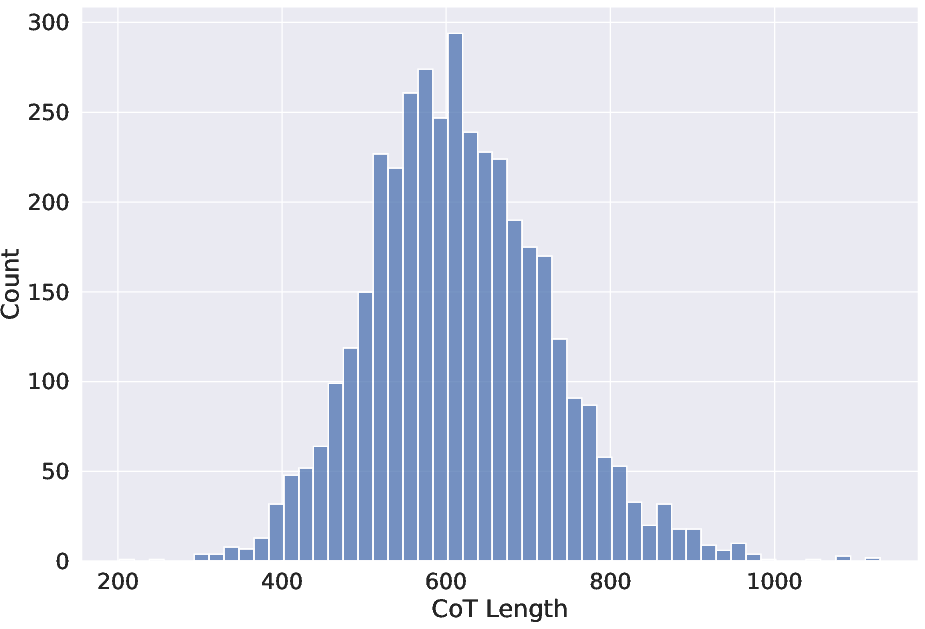

- CoT and answer lengths increase rapidly in early training, then stabilize, reflecting a shift from detail acquisition to precision/relevance optimization.

Figure 2: Length trajectory of CoT reasoning traces during Factual Reasoning training, indicating early expansion and later stabilization.

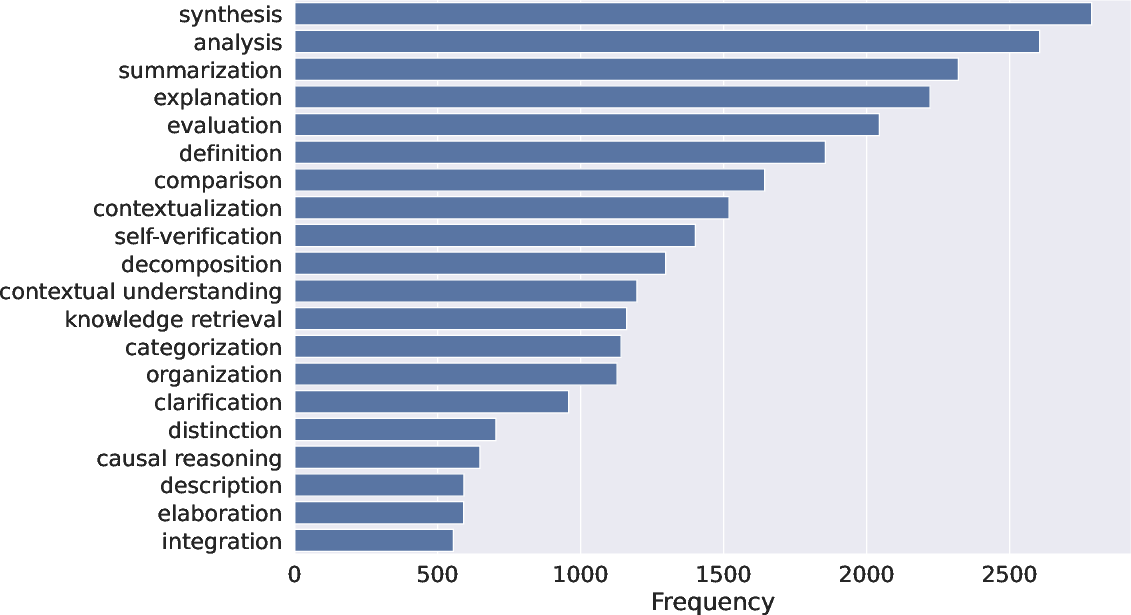

- LLM-based meta-reasoning analysis identifies a diverse set of strategies (synthesis, summarization, explanation, definition, comparison) distinct from those in math/coding-focused datasets.

Figure 3: Top 20 commonly used reasoning strategies based on 3920 training prompts, highlighting the diversity in factual reasoning approaches.

Implementation Considerations

- Hardware: Training and inference are distributed across 32 NVIDIA H100 GPUs, leveraging fairseq2, vLLM, and Matrix for scalable RL and evaluation.

- Efficiency: Batched, asynchronous processing and data sharding are critical for throughput.

- Deployment: The reward function and scalable VeriScore implementation are suitable for integration into production RL pipelines for LLM factuality alignment.

Implications and Future Directions

The proposed online RL framework for factual reasoning in LLMs demonstrates that it is possible to substantially reduce hallucinations and improve response detail without sacrificing helpfulness. The composite reward function is robust against common reward hacking strategies and can be tuned for application-specific trade-offs. The scalable evaluation pipeline enables practical deployment in large-scale RL training.

Future work should explore agentic factual reasoning, where models interact with external tools (e.g., search engines) to dynamically acquire missing knowledge during response generation. Extending the reward function and RL framework to such settings could further enhance factuality in open-domain, long-form tasks.

Conclusion

This work establishes a rigorous methodology for aligning R-LLMs to factuality in long-form generation via online RL. The integration of factual precision, detail, and relevance in the reward function, coupled with scalable evaluation, sets a new standard for factual reasoning in LLMs. The empirical results validate the approach, and the framework is extensible to agentic and tool-augmented reasoning scenarios, representing a significant step toward robust, trustworthy LLMs for real-world applications.

Follow-up Questions

- How does the composite reward function mitigate reward hacking in long-form factuality tasks?

- What distinguishes GRPO from DPO in terms of optimizing response detail and factual accuracy?

- How is the VeriScore pipeline optimized to support real-time RL training for LLMs?

- What are the potential trade-offs between increasing factual precision and retaining answer relevance in this framework?

- Find recent papers about long-form factuality in LLMs.

Related Papers

- Fine-tuning Language Models for Factuality (2023)

- FLAME: Factuality-Aware Alignment for Large Language Models (2024)

- FactAlign: Long-form Factuality Alignment of Large Language Models (2024)

- DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning (2025)

- Demystifying Long Chain-of-Thought Reasoning in LLMs (2025)

- Reinforcement Learning is all You Need (2025)

- RM-R1: Reward Modeling as Reasoning (2025)

- Reward Reasoning Model (2025)

- The Hallucination Dilemma: Factuality-Aware Reinforcement Learning for Large Reasoning Models (2025)

- KnowRL: Exploring Knowledgeable Reinforcement Learning for Factuality (2025)

Tweets

YouTube

alphaXiv

- Learning to Reason for Factuality (71 likes, 0 questions)