Understanding Factuality in LLM Alignment

The Challenge of Factuality in LLMs

Large language models (LLMs) are widely used in applications that require understanding and generating natural language. A critical step in using these models effectively is "alignment": fine-tuning them to follow instructions accurately and helpfully. Despite this progress, a persistent challenge remains: these models often "hallucinate," producing information that is not factually correct. This problem not only persists after alignment but can be exacerbated by standard alignment techniques, including Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF).

Key Insights from Recent Research

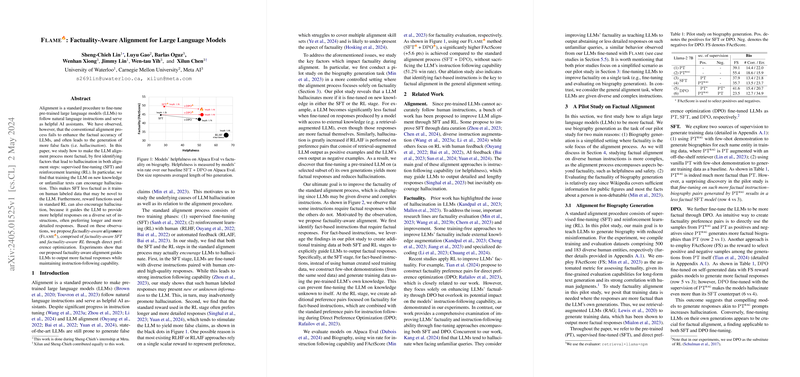

The paper summarized here identifies specific issues in the alignment process that reduce factuality:

- Supervised Fine-Tuning (SFT): Typically involves fine-tuning the LLM on human-labeled data. However, if this data contains information that is new or unknown to the LLM, it can increase the rate of hallucination.

- Reinforcement Learning (RL): This method often favors longer, more detailed responses that cover an instruction comprehensively. Unfortunately, this preference can reduce factual accuracy, because the model may "invent" details to meet the length and detail criteria.
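The length effect described above can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the `Response` fields, the `length_biased_reward` function, and the numbers are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class Response:
    n_words: int           # response length
    supported_claims: int  # claims a fact-checker could verify (toy label)
    total_claims: int      # all claims the response makes


def length_biased_reward(r: Response) -> float:
    """Hypothetical reward that grows with length and ignores correctness."""
    return float(r.n_words)


def factual_precision(r: Response) -> float:
    """Fraction of a response's claims that are supported."""
    return r.supported_claims / r.total_claims if r.total_claims else 0.0


# Toy numbers: the detailed answer adds four claims, only one of them supported.
concise = Response(n_words=40, supported_claims=4, total_claims=4)
detailed = Response(n_words=120, supported_claims=5, total_claims=8)

# The length-biased reward prefers the detailed answer ...
preferred = max([concise, detailed], key=length_biased_reward)
# ... even though a smaller share of its claims is supported.
print(preferred is detailed, factual_precision(concise), factual_precision(detailed))
```

A reward like this never sees the unsupported claims, so optimizing against it can push the model toward longer but less accurate answers.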

The researchers proposed a novel approach, termed "Factuality-Aware Alignment," focusing on maintaining or improving the factuality of responses during the alignment process without compromising the model's ability to follow instructions.

Factuality-Aware Alignment: A Closer Look

Factuality-Aware Alignment modifies both the SFT and RL phases:

- Supervised Fine-Tuning (SFT) Adjustments:

  - For fact-based instructions, the model leverages its existing knowledge to generate training data, thus minimizing the incorporation of unfamiliar information.

  - The training examples for SFT are generated using controlled few-shot prompts, ensuring that the information used has been previously "seen" by the model.

- Reinforcement Learning (RL) Tweaks:

  - The model's reward function is adjusted to include a direct preference for factuality, especially for fact-based instructions.

  - With this adjustment, the model learns to produce responses that are not only helpful but also factually correct.
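The two modifications above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: the `Example` type, the `generate_few_shot`, `helpfulness`, and `factuality` callables, the fallback to human responses for non-fact-based instructions, and the weighted-sum scoring are all hypothetical choices made here.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Example:
    instruction: str
    human_response: str
    is_fact_based: bool  # assumed to come from some instruction classifier


def build_sft_targets(
    examples: List[Example],
    generate_few_shot: Callable[[str], str],
) -> List[Tuple[str, str]]:
    """Pick the SFT target for each instruction.

    Fact-based instructions use the model's own few-shot generation, so the
    target only contains knowledge the model already has. Using the human
    response for everything else is an assumption of this sketch.
    """
    targets = []
    for ex in examples:
        if ex.is_fact_based:
            response = generate_few_shot(ex.instruction)
        else:
            response = ex.human_response
        targets.append((ex.instruction, response))
    return targets


def pick_preference_pair(
    candidates: List[str],
    helpfulness: Callable[[str], float],
    factuality: Callable[[str], float],
    is_fact_based: bool,
    weight: float = 1.0,
) -> Tuple[str, str]:
    """Return (chosen, rejected) from at least two candidate responses.

    The factuality term is only added for fact-based instructions. The
    weighted sum is an illustrative choice, not the paper's formulation.
    """
    def score(candidate: str) -> float:
        total = helpfulness(candidate)
        if is_fact_based:
            total += weight * factuality(candidate)
        return total

    ranked = sorted(candidates, key=score, reverse=True)
    return ranked[0], ranked[-1]
```

In practice `generate_few_shot` would wrap a call to the pre-trained model with a fixed few-shot prompt, and the two scorers would wrap a reward model and a fact-checking pipeline; stubs are enough to exercise the logic.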

Impressive Results and Practical Implications

The paper reports the following results:

- The Factuality-Aware Alignment method produced more factual outputs than the standard alignment process, including a 5.6-point increase in FActScore, a metric for measuring factuality.

- Importantly, these gains in factuality did not come at the expense of the model's ability to follow instructions, with instruction-following capability measured at a 51.2% win rate.

These results suggest that it's possible to fine-tune LLMs in a way that significantly reduces the generation of incorrect information without sacrificing the utility of the model for practical applications.
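To make the two reported metrics concrete, the sketch below shows how a FActScore-style number and a win rate can be computed from already-labeled data. It assumes each response has been split into atomic facts and each fact marked as supported or not; that labeling step, the function names, and the toy inputs are hypothetical and do not reproduce the paper's evaluation.

```python
from typing import List


def fact_score(support_labels: List[List[bool]]) -> float:
    """FActScore-style metric, in percent.

    Each inner list holds one response's atomic facts, True if supported by
    the knowledge source. The score is the mean per-response supported share.
    Responses with no facts are skipped (an assumption of this sketch).
    """
    per_response = [sum(labels) / len(labels) for labels in support_labels if labels]
    if not per_response:
        return 0.0
    return 100.0 * sum(per_response) / len(per_response)


def win_rate(wins: List[bool]) -> float:
    """Percentage of pairwise comparisons that the evaluated model wins."""
    return 100.0 * sum(wins) / len(wins) if wins else 0.0


# Toy labels for two responses and four pairwise judgments.
toy_score = fact_score([[True, True, False, False], [True, True, True, True]])
toy_win_rate = win_rate([True, False, True, True])
print(toy_score, toy_win_rate)
```

A difference such as the reported 5.6 points would then be the gap between two `fact_score` values computed for two differently aligned models on the same prompts.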

Future Directions

While Factuality-Aware Alignment shows promise, LLMs and alignment strategies continue to evolve, leaving ample room for further research. Future studies could explore:

- Multiple Alignment Skill Sets: Extending this approach to other necessary skills like logical reasoning or ethical alignment could provide more robust LLMs.

- Adapting to New and Emerging Data: As new information becomes available, models need to be updated continually to maintain factual accuracy.

- Scalability and Efficiency: Implementing these strategies efficiently at scale is another challenge, particularly for the larger, more complex models used in real-world applications.

In summary, while significant challenges remain in the effective and factual use of LLMs, the advances outlined in this paper offer a promising way forward, with both immediate improvements and a roadmap for further enhancement.