Fine-Tuning LLMs for Factuality: An Expert Overview

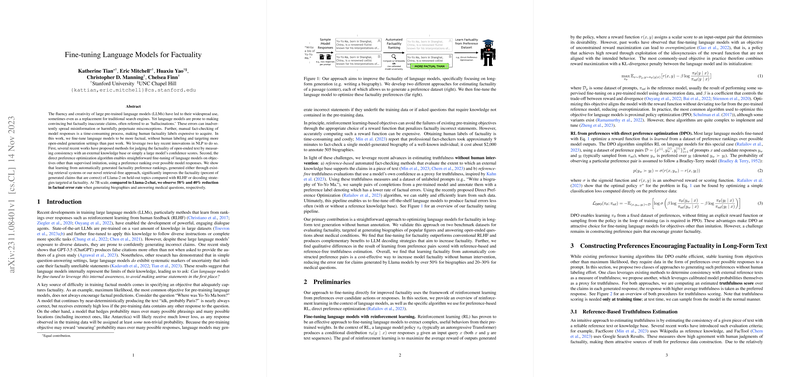

The paper "Fine-tuning Language Models for Factuality," by Katherine Tian, Eric Mitchell, Huaxiu Yao, Christopher D. Manning, and Chelsea Finn, addresses factual inaccuracy, often called "hallucination," in the outputs of large pre-trained language models. It reduces these errors through targeted fine-tuning on automatically generated preference data, so no human annotation is needed. The authors also compare two ways of estimating the truthfulness of model-generated text: a reference-based approach and a reference-free one.

Introduction

Despite considerable advances and widespread adoption, LLMs still struggle to generate factually accurate content. Such errors can spread misinformation, which is especially concerning as people increasingly rely on LLMs for tasks traditionally handled by search engines. To address this, the authors fine-tune models for factuality using preference rankings that are generated automatically, without human labels, by either of two methods.
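Preference rankings become training data once several responses have been sampled for the same prompt and each has received a factuality score. The sketch below assumes that every pair of responses whose scores differ becomes one (preferred, rejected) pair; the function name `build_preference_pairs` and its `min_gap` threshold are hypothetical illustrations, not details taken from the paper.

```python
from itertools import combinations


def build_preference_pairs(responses, scores, min_gap=0.0):
    """Turn scored responses to one prompt into (preferred, rejected) pairs.

    responses: responses sampled for the same prompt
    scores:    factuality scores for those responses, higher = more factual
    min_gap:   hypothetical threshold; pairs whose scores differ by no more
               than this are skipped because they carry little signal
    """
    if len(responses) != len(scores):
        raise ValueError("responses and scores must have the same length")
    pairs = []
    for i, j in combinations(range(len(responses)), 2):
        if abs(scores[i] - scores[j]) <= min_gap:
            continue  # ties (or near-ties) express no preference
        if scores[i] > scores[j]:
            pairs.append((responses[i], responses[j]))
        else:
            pairs.append((responses[j], responses[i]))
    return pairs


samples = ["bio A", "bio B", "bio C"]
factuality = [0.9, 0.5, 0.9]
for chosen, rejected in build_preference_pairs(samples, factuality):
    print(chosen, ">", rejected)  # prints "bio A > bio B", then "bio C > bio B"
```

Skipping ties keeps "bio A" and "bio C" from being compared, since neither is more factual than the other under this score.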

Methodology

The paper considers two ways of automatically scoring the truthfulness of sampled responses, and these scores are used to rank the responses into factuality preferences:

- Reference-Based Truthfulness Estimation: This method measures how consistent a generated text is with an external knowledge source. The authors use the FactScore metric, which judges the claims in a text against Wikipedia: claims are first extracted with GPT-3.5, and each one is then checked for support by a fine-tuned Llama model.

- Reference-Free Confidence-Based Truthfulness Estimation: This approach uses the model's own uncertainty to estimate factuality. Claims are extracted from the response and converted into specific questions; the model's confidence in its answers to those questions, estimated from the distribution of its sampled answers, then serves as a proxy for truthfulness. Because it needs no external knowledge source such as Wikipedia, this method scales more easily to domains that lack a reliable reference corpus.
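The two scoring schemes can be sketched in a few lines of Python. This is a simplified illustration under loudly stated assumptions: `is_supported` is a hypothetical callable standing in for the FactScore retrieval-and-verification pipeline, agreement among sampled answers is measured by exact string match after normalization (a real system would need a semantic-equivalence check or answer probabilities instead), and a response's reference-free score is assumed to be the mean confidence over its questions.

```python
from collections import Counter
from typing import Callable, Sequence


def reference_based_score(claims: Sequence[str],
                          is_supported: Callable[[str], bool]) -> float:
    """Fraction of a response's extracted claims judged supported by a reference.

    `is_supported` is a hypothetical stand-in for a FactScore-style verifier
    (retrieve reference passages, then ask a judge model about the claim).
    """
    if not claims:
        return 0.0
    return sum(bool(is_supported(c)) for c in claims) / len(claims)


def answer_confidence(sampled_answers: Sequence[str]) -> float:
    """Share of sampled answers that agree with the most common answer.

    Agreement is exact match after lowercasing and stripping whitespace,
    a crude simplification of checking semantic equivalence.
    """
    if not sampled_answers:
        return 0.0
    normalized = [a.strip().lower() for a in sampled_answers]
    _, top_count = Counter(normalized).most_common(1)[0]
    return top_count / len(normalized)


def reference_free_score(answers_per_question: Sequence[Sequence[str]]) -> float:
    """Mean confidence over the questions derived from one response's claims."""
    if not answers_per_question:
        return 0.0
    total = sum(answer_confidence(a) for a in answers_per_question)
    return total / len(answers_per_question)


# Toy reference: a set of known-true claims replaces Wikipedia retrieval.
known_facts = {"Marie Curie won two Nobel Prizes", "Marie Curie was born in Warsaw"}
claims = ["Marie Curie won two Nobel Prizes", "Marie Curie was born in Paris"]
print(reference_based_score(claims, lambda c: c in known_facts))  # 0.5

# Answers sampled from the model for two questions built from its own claims.
sampled = [["Warsaw", "warsaw", "Paris", "Warsaw"], ["1867", "1867"]]
print(reference_free_score(sampled))  # 0.875
```

The reference-based scorer needs an external source to call; the reference-free scorer only needs repeated samples from the model itself, which is what makes it easier to apply to new domains.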

Direct Preference Optimization (DPO)

The authors use Direct Preference Optimization (DPO), a simpler alternative to RL-based preference learning. Instead of training a separate reward model and optimizing against it with an RL algorithm such as PPO, DPO trains the model directly on preference pairs with a classification-style (logistic) loss, which makes it a natural fit for the automatically generated pairs.

Experimental Validation

The authors validate their approach on two long-form generation tasks: biography generation and medical question answering. The paper reports detailed statistics for the generated data, which includes several questions per entity. The fine-tuned models are compared against standard supervised fine-tuning (SFT), RLHF-trained models such as Llama-2-Chat, and inference-time factuality methods, namely Inference-Time Intervention (ITI) and Decoding by Contrasting Layers (DOLA).

Results

The experiments show that factuality fine-tuning is effective. In particular, fine-tuning on pairs ranked by the reference-based FactScore (FactTune-FS) consistently improves factual accuracy across tasks, reducing the number of incorrect facts and, in some cases, increasing the number of correct facts.

- For Llama-2 on biography generation, FactTune-FS produces an average of 17.06 correct facts and only 2.00 incorrect facts per response.

- In medical QA, factuality tuning also yields a clear improvement, reducing error rates by up to 23% compared to the standard baselines.
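The biography counts above translate directly into the share of generated facts that are correct. A minimal sketch with a hypothetical helper `fact_precision`, using the FactTune-FS numbers reported above; because these are averaged counts, the result is a ratio of averages, which need not equal an average of per-response percentages.

```python
def fact_precision(num_correct: float, num_incorrect: float) -> float:
    """Fraction of generated facts that are correct: correct / (correct + incorrect)."""
    total = num_correct + num_incorrect
    return num_correct / total if total else 0.0


# Average per-response counts for Llama-2 FactTune-FS on biographies.
precision = fact_precision(17.06, 2.00)
print(f"{precision:.1%} correct, {1 - precision:.1%} incorrect")
# prints: 89.5% correct, 10.5% incorrect
```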

Factuality fine-tuning also combines well with existing decoding-time methods: applying DOLA on top of a factuality-tuned model remains effective, which indicates that the two approaches offer complementary benefits.

Theoretical and Practical Implications

The methods have clear practical value. The reference-free approach in particular improves factuality without human labeling or an external reference corpus, which makes it cheaper to run and easier to apply in domains where no reliable reference source exists.

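From a theoretical standpoint, the success of fine-tuning on automatically generated preference pairs, and of the reference-free variant in particular, is consistent with observations that language models are reasonably well calibrated: their own confidence carries a useful signal about whether a claim is correct. This suggests that a model's uncertainty can serve as a training signal, a direction relevant to future work on self-supervised learning and more robust AI systems.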
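Calibration here means that a model's stated confidence tracks how often it is actually right, which is what makes confidence usable as a proxy for truthfulness. A common way to quantify it is expected calibration error (ECE). The sketch below is a generic, hypothetical implementation with equal-width bins, included only to make the notion concrete; it is not a measurement reported in the paper, and the data in the usage lines are made up.

```python
def expected_calibration_error(confidences, correct, num_bins=10):
    """Bin predictions by confidence, then average |mean confidence - accuracy|
    across bins, weighting each bin by the fraction of predictions it holds."""
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        return 0.0
    bins = [[] for _ in range(num_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * num_bins), num_bins - 1)  # conf == 1.0 goes in the top bin
        bins[idx].append((conf, ok))
    ece = 0.0
    for b in bins:
        if b:
            avg_conf = sum(c for c, _ in b) / len(b)
            accuracy = sum(1 for _, ok in b if ok) / len(b)
            ece += len(b) / n * abs(avg_conf - accuracy)
    return ece


# Made-up example: confident answers are all right, unconfident ones are right 1 in 4.
confidences = [0.95, 0.95, 0.95, 0.95, 0.25, 0.25, 0.25, 0.25]
correct = [True, True, True, True, False, False, False, True]
print(round(expected_calibration_error(confidences, correct), 3))  # 0.025
```

A low ECE means high-confidence answers are usually right and low-confidence ones usually wrong, which is the property a confidence-based truthfulness score relies on.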

Future Directions

Future research may explore:

- Scaling the methods to larger models and more diverse datasets.

- Integrating factuality-preference tuning more closely with general-purpose RLHF pipelines.

- Extending the evaluation to additional long-form generation tasks to test how well the approach generalizes.

Conclusion

The paper shows that factual errors in LLM outputs can be reduced by fine-tuning on automatically generated factuality preferences, with no human annotation required. It demonstrates that preference-based learning is a feasible and effective route to better factuality, a useful contribution to natural language processing and to the development of more reliable AI systems.