- The paper introduces a novel multi-agent framework that condenses codebases by approximately 80% to enable more efficient bug localization using LLMs.

- It employs natural language summaries to represent code structures, allowing the system to navigate large codebases and classify code elements for precise debugging.

- Experiments on the SWE-bench Lite dataset show state-of-the-art results with 84.67% file-level and 53.0% function-level accuracy, along with significant cost reduction.

Introduction

The paper "Meta-RAG on Large Codebases Using Code Summarization" (2508.02611) explores the use of large language models (LLMs) and Retrieval Augmented Generation (RAG) for bug localization in large software codebases. The authors introduce Meta-RAG, a multi-agent system that condenses a codebase into natural language summaries so that bugs can be localized in large-scale repositories. The summaries reduce codebase size by 79.8% on average, which makes the codebase far more manageable for an LLM to process.

Methodology

Code Summarization

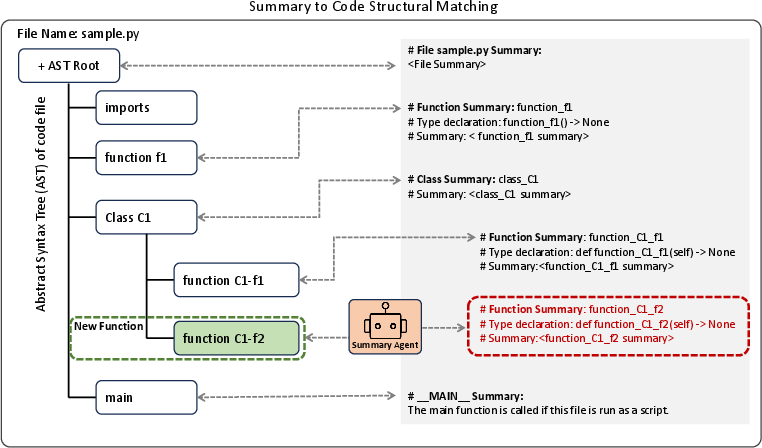

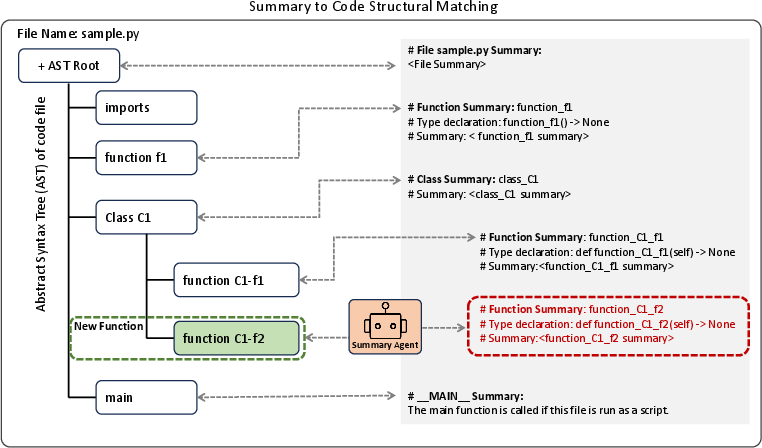

The core of Meta-RAG's approach is generating concise summaries of a codebase. These summaries provide a structured, natural language representation of the code that tells the LLM how the codebase is organized and what each part does. The system generates and stores one condensed summary per code file, describing the file, its classes, and its functions, along with the relationships between them. The summaries reduce average codebase size by approximately 80%, which eases two LLM limitations: the finite context window and the loss of attention quality over very long inputs.

Figure 1: Example of a summary template (right) and a summary update using the AST.
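
The file, class, and function hierarchy described above can be illustrated with a small sketch. It is not the paper's implementation: Meta-RAG's summaries are natural language descriptions, whereas this example uses Python's standard `ast` module to extract only the structural skeleton and substitutes each element's first docstring line for the description. The names `summarize_source` and `reduction_percent` are hypothetical, and measuring reduction in characters rather than tokens is also an assumption.

```python
import ast


def _first_doc_line(node) -> str:
    """Return the first docstring line, standing in for an LLM-written description."""
    doc = ast.get_docstring(node)
    return doc.splitlines()[0] if doc else "(no docstring)"


def _arg_names(fn) -> str:
    """Comma-separated positional argument names of a function node."""
    return ", ".join(a.arg for a in fn.args.args)


def summarize_source(source: str, path: str = "<memory>") -> str:
    """Build a plain-text skeleton: file -> classes -> methods, plus top-level functions."""
    tree = ast.parse(source)
    func_types = (ast.FunctionDef, ast.AsyncFunctionDef)
    lines = [f"File: {path}"]
    for node in tree.body:
        if isinstance(node, ast.ClassDef):
            lines.append(f"  Class {node.name}: {_first_doc_line(node)}")
            for item in node.body:
                if isinstance(item, func_types):
                    lines.append(
                        f"    Method {item.name}({_arg_names(item)}): {_first_doc_line(item)}"
                    )
        elif isinstance(node, func_types):
            lines.append(
                f"  Function {node.name}({_arg_names(node)}): {_first_doc_line(node)}"
            )
    return "\n".join(lines)


def reduction_percent(original: str, summary: str) -> float:
    """Size reduction in percent, measured in characters (an assumption, not tokens)."""
    if not original:
        raise ValueError("original text must be non-empty")
    return 100.0 * (1 - len(summary) / len(original))
```

Because the skeleton is keyed by file, class, and function name, a change to one function only requires regenerating that entry, which is the kind of AST-driven update the figure caption refers to.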

Meta-RAG employs a multi-agent system consisting of a Control Agent and a Code Agent that work together to localize bugs. The system first passes the code summaries to the LLM and prompts it to identify the relevant code components, which are then classified into a Read-list, a Write-list, and a New-list. Traversing the summaries hierarchically in this way allows precise bug localization without overwhelming the LLM's context window.

Summaries also let the system handle codebases whose token counts far exceed what a typical LLM context window can hold.

Figure 2: Meta-RAG architecture diagram.
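
To make the three-way classification concrete, here is a minimal sketch of how an LLM reply could be parsed into the three lists. Everything in it is an assumption for illustration: the JSON keys `read`, `write`, and `new`, the `path::symbol` identifier format, and the names `Classification`, `parse_classification`, and `candidate_files` are hypothetical and are not taken from the paper. The sketch also assumes, from the names alone, that the Write-list holds the elements most likely to need changes.

```python
import json
from dataclasses import dataclass, field
from typing import List


@dataclass
class Classification:
    """Hypothetical container for the three lists named in the text."""
    read_list: List[str] = field(default_factory=list)
    write_list: List[str] = field(default_factory=list)
    new_list: List[str] = field(default_factory=list)


def parse_classification(reply: str) -> Classification:
    """Parse an LLM reply, assuming it is JSON with 'read', 'write', and 'new' keys."""
    data = json.loads(reply)
    return Classification(
        read_list=list(data.get("read", [])),
        write_list=list(data.get("write", [])),
        new_list=list(data.get("new", [])),
    )


def candidate_files(c: Classification) -> List[str]:
    """Unique file paths in the Write-list, assuming 'path::symbol' identifiers."""
    seen: List[str] = []
    for item in c.write_list:
        path = item.split("::", 1)[0]
        if path not in seen:
            seen.append(path)
    return seen
```

Under these assumptions, file-level candidates fall out of the function-level entries by stripping the symbol part, so one classification step can serve both granularities.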

Experimental Setup and Results

Meta-RAG is benchmarked on the SWE-bench Lite dataset, which consists of 300 instances across diverse repositories. The framework achieved state-of-the-art bug localization performance, with an 84.67% correctness rate at the file level and a 53.0% correctness rate at the function level. These results indicate that the system can accurately pinpoint bug locations in real repositories.
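
As a rough illustration of how such a correctness rate can be computed, the sketch below counts an instance as correct when every ground-truth location appears among the predicted ones. That criterion and the function name `localization_accuracy` are assumptions; the summary does not state the paper's exact scoring rule. On 300 instances, the reported rates are consistent with about 254 correct instances at the file level and 159 at the function level.

```python
from typing import List, Sequence


def localization_accuracy(
    predictions: Sequence[List[str]], gold: Sequence[List[str]]
) -> float:
    """Percentage of instances whose predicted locations cover all gold locations."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    if not gold:
        raise ValueError("at least one instance is required")
    # An instance is a hit when the gold set is a subset of the predicted set.
    hits = sum(1 for p, g in zip(predictions, gold) if set(g) <= set(p))
    return 100.0 * hits / len(gold)


# Arithmetic check against the reported figures (counts are inferred, not quoted).
file_level = round(100 * 254 / 300, 2)      # 84.67
function_level = round(100 * 159 / 300, 1)  # 53.0
```

The same function works at either granularity: pass file paths for the file-level rate, or function identifiers for the function-level rate.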

The study also reports on computational efficiency, noting a considerable reduction in both token usage and cost when Meta-RAG is used in a continuous development environment. The average cost of processing one instance was below 1 USD, which is notable given the size of the codebases involved.

Discussion

The findings highlight Meta-RAG's potential to substantially improve bug localization and software maintenance. By working from natural language summaries, the approach reduces the computational burden of processing extensive codebases and also enhances security by minimizing the need to expose proprietary code. Because the summaries act as a form of dynamic documentation, they also give developers insight into the code architecture, which can streamline collaboration.

Meta-RAG's superior performance compared to current methodologies underlines the transformative potential of integrating advanced retrieval and summarization techniques within LLM-based systems. Additionally, its capability to efficiently localize bugs paves the way for further exploration into using similar frameworks for other tasks within software development, such as feature implementation and optimization.

Conclusion

"Meta-RAG on Large Codebases Using Code Summarization" (2508.02611) presents a robust framework for utilizing LLMs in the task of bug localization, offering significant advancements over existing methods. The reduction in token consumption and cost, alongside superior localization accuracy, marks a pivotal stride in employing AI for automated software engineering. Future research may extend this approach to other aspects of the software development lifecycle, further establishing the relevance and applicability of the Meta-RAG framework.