- The paper presents a novel memristive SNN architecture that incorporates a supervised in-situ STDP algorithm for efficient neural training on hardware.

- The architecture leverages a 1T1M crossbar design with lateral inhibition and dual switch control to enable ultra-low-energy pattern recognition and classification.

- Robustness is demonstrated by achieving 93.4% accuracy under 20% input noise and maintaining performance despite various memristor faults and threshold variations.

Efficient Memristive Spiking Neural Networks Architecture with Supervised In-Situ STDP Method

Introduction

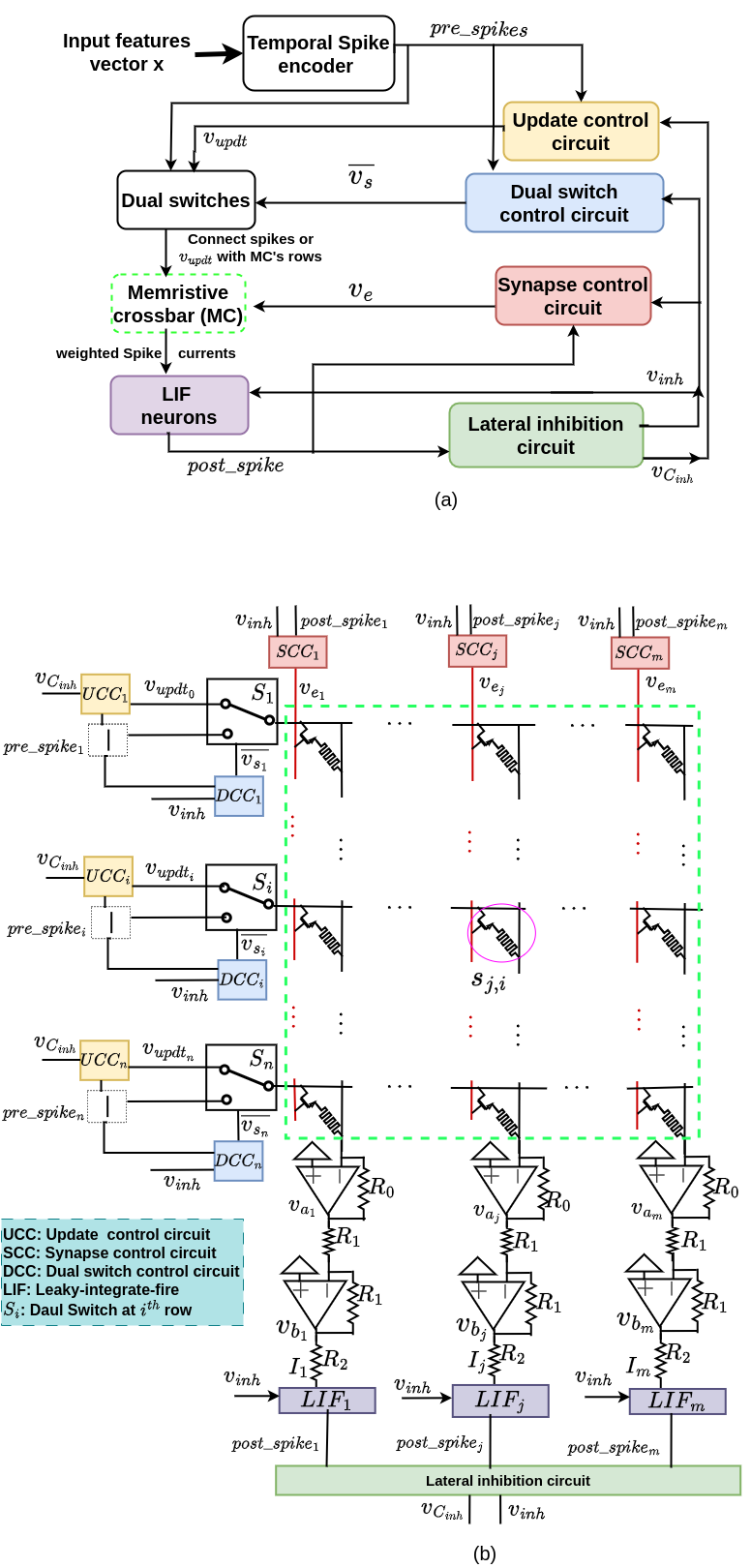

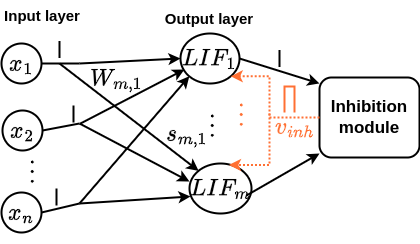

The paper discusses a memristor-based Spiking Neural Network (SNN) architecture designed for ultra-low-energy computation, which makes it suitable for battery-powered intelligent devices. The architecture utilizes a novel supervised in-situ learning algorithm inspired by spike-timing-dependent plasticity (STDP). Key features include lateral inhibition, a refractory period, and parallel synapse updating. These elements are incorporated to improve training efficiency and eliminate the need for external control hardware, thus facilitating scalability in terms of input and output data dimensions.

Architecture Overview

The architecture centers on memristive devices within an SNN framework, offering high-density integration and low power consumption. Memristors suit this role because their resistance can be set to a range of states, much as the strength of a biological synapse varies. The proposed SNN architecture uses a 1T1M (one-transistor, one-memristor) crossbar design that supports both pattern recognition and classification tasks without requiring external microcontrollers or ancillary hardware.
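As a rough illustration of why a crossbar is a natural fit for synaptic weighting, the sketch below computes the column currents of an idealized crossbar, where each output current is the sum of input voltages multiplied by the memristor conductances in that column. The function name, the 3×2 array size, the conductance values, and the 1 V read voltage are all assumptions made for illustration; the selector transistors, wire resistance, and device nonlinearity of a real 1T1M array are ignored.

```python
from typing import List


def crossbar_currents(voltages: List[float],
                      conductances: List[List[float]]) -> List[float]:
    """Column currents of an ideal crossbar: I_j = sum_i V_i * G_ij."""
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("one input voltage per crossbar row is required")
    return [
        sum(voltages[i] * conductances[i][j] for i in range(n_rows))
        for j in range(n_cols)
    ]


# Hypothetical 3-input, 2-output crossbar; conductances in siemens.
G = [
    [1e-6, 5e-6],
    [2e-6, 1e-6],
    [4e-6, 3e-6],
]
# Assumed encoding: 1.0 V on rows that carry a spike, 0.0 V otherwise.
V = [1.0, 0.0, 1.0]
I = crossbar_currents(V, G)  # one current per output neuron
```

Each output neuron would integrate its column current, so the weighted sum happens in the analog domain rather than in a separate processor.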

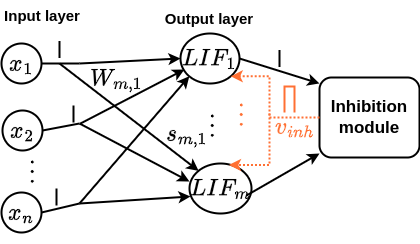

Figure 1: Spiking neural network model. The features at the input layer are encoded into spikes and via synapses they travel to post-synaptic neurons at the output layer.

The architecture comprises several key components: a 1T1M memristor crossbar, lateral inhibition, a refractory period, and dual switch control.

Training Mechanism

The memristive SNN uses a hardware-friendly supervised in-situ STDP algorithm. Training is performed directly on the neuromorphic hardware to harness memristor fault tolerance and efficiency. The novel algorithm supervises weight changes based on spike-timing differences following STDP rules.

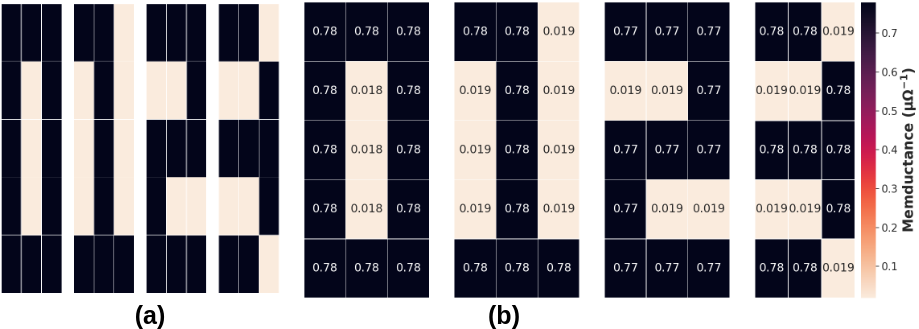

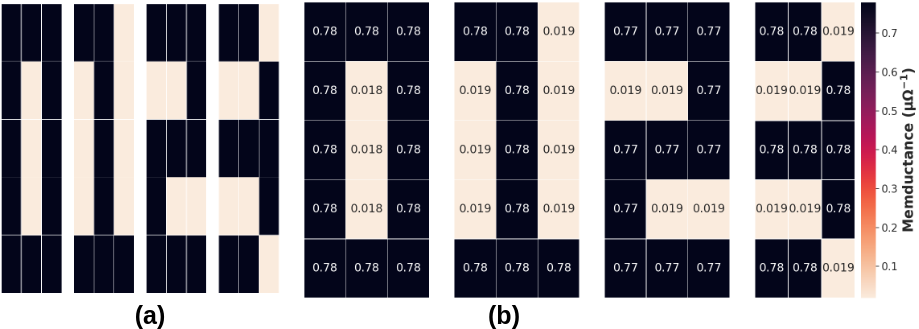

During training, a bias current ensures that a neuron associated with the correct label becomes the "winner," leading to a distinct weight adjustment pattern. Training outcomes are visualized using heat maps to demonstrate synaptic weight consistency post-training.

Figure 3: (a) Binary training patterns, (b) heat map of final synaptic weights in μΩ⁻¹.
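The training procedure described above can be sketched in software under some strong simplifications. In the sketch below, a large bias current forces the neuron with the correct label to win, lateral inhibition is modeled as winner-take-all, and only the winner's synapses are updated in a single step: synapses whose input was active are strengthened and the rest are weakened. This potentiate/depress rule is a simplified stand-in for the paper's STDP rule, not a reproduction of it, and the constants `G_MIN`, `G_MAX`, `STEP`, and `BIAS`, the function names, and the toy patterns are all hypothetical.

```python
from typing import List

G_MIN, G_MAX = 1.0, 10.0  # assumed conductance bounds (arbitrary units)
STEP = 1.0                # assumed conductance change per update
BIAS = 1000.0             # assumed bias current, large enough to force the winner


def column_currents(weights: List[List[float]], pattern: List[int]) -> List[float]:
    """Input current of each output neuron for a binary input pattern."""
    n_out = len(weights[0])
    return [
        sum(pattern[i] * weights[i][j] for i in range(len(pattern)))
        for j in range(n_out)
    ]


def train_step(weights: List[List[float]], pattern: List[int], label: int) -> int:
    """Apply one supervised update in place and return the winning neuron."""
    currents = column_currents(weights, pattern)
    currents[label] += BIAS  # supervision: bias current on the correct neuron
    # Lateral inhibition modeled as winner-take-all.
    winner = max(range(len(currents)), key=lambda j: currents[j])
    # Update every synapse of the winner in one pass.
    for i, bit in enumerate(pattern):
        delta = STEP if bit else -STEP  # active input: potentiate; else depress
        weights[i][winner] = min(G_MAX, max(G_MIN, weights[i][winner] + delta))
    return winner


def classify(weights: List[List[float]], pattern: List[int]) -> int:
    """Inference without bias: the neuron with the largest current wins."""
    currents = column_currents(weights, pattern)
    return max(range(len(currents)), key=lambda j: currents[j])


# Toy data: two 4-pixel binary patterns, one class each.
patterns = [[1, 1, 0, 0], [0, 0, 1, 1]]
labels = [0, 1]
weights = [[5.0, 5.0] for _ in range(4)]
for _ in range(10):
    for p, y in zip(patterns, labels):
        train_step(weights, p, y)
```

After training on this toy data, each column of `weights` saturates toward the pattern of its class, which is the kind of structure a weight heat map would make visible.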

Robustness and Fault Analysis

The architecture demonstrates robustness against various noise levels, fault conditions, and device variations:

- Noise Robustness: Achieves 93.4% accuracy even with 20% input noise.

- Stuck-at-conductance Faults: Maintains high accuracy when some memristors are stuck at a fixed conductance and can no longer be programmed.

- Resistance and Threshold Variations: Analysis under varied boundary resistance and threshold voltage conditions indicates substantial resilience, although accuracy remains sensitive to excessive threshold variation.
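The robustness experiments listed above could be approximated in simulation along the following lines. This is a minimal sketch that assumes "input noise" means flipping each binary input pixel independently with a given probability, and that a stuck-at fault pins a randomly chosen cell to one fixed conductance; the paper's exact noise and fault models may differ. The function names, fault rate, and sample values are hypothetical.

```python
import random
from typing import List


def add_input_noise(pattern: List[int], noise_level: float,
                    rng: random.Random) -> List[int]:
    """Flip each binary input independently with probability noise_level."""
    return [1 - bit if rng.random() < noise_level else bit for bit in pattern]


def inject_stuck_faults(weights: List[List[float]], fault_rate: float,
                        stuck_value: float, rng: random.Random) -> List[List[float]]:
    """Return a copy of weights in which each cell is stuck with probability fault_rate."""
    return [
        [stuck_value if rng.random() < fault_rate else w for w in row]
        for row in weights
    ]


rng = random.Random(0)  # fixed seed so repeated runs are comparable
clean = [1, 0, 1, 1, 0, 0, 1, 0]
noisy = add_input_noise(clean, 0.2, rng)  # 20% noise, as in the reported test
faulty = inject_stuck_faults([[10.0, 1.0], [1.0, 10.0]], 0.5, 1.0, rng)
```

Accuracy under noise would then be estimated by classifying many noisy copies of each pattern and counting how often the predicted label matches the true one.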

Scalability and Practical Implications

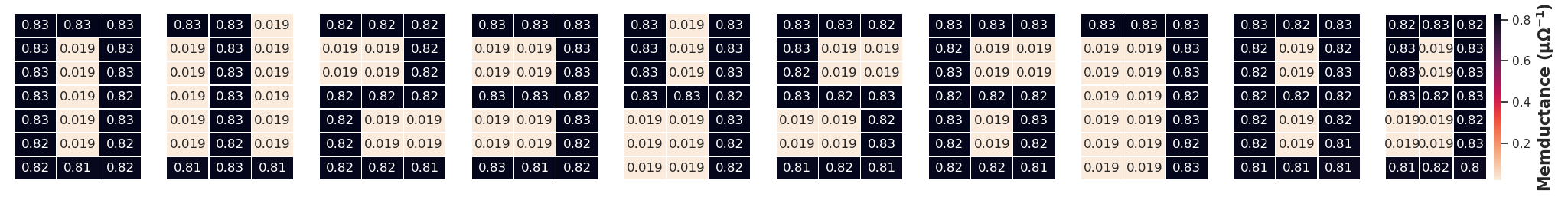

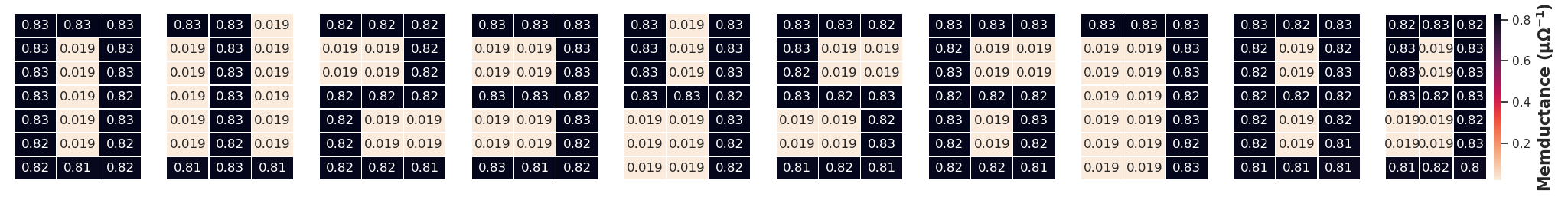

The architecture scales effectively for larger pattern sizes and more classes without compromising accuracy. By employing modular and scalable design principles, the architecture can be adapted to handle more complex datasets, exemplified by training on scaled binary patterns (digits 0-9) while maintaining efficient resource use.

Figure 4: The final weights after training consist of digits 0 to 9 of size 7×3.

Future Directions

Future work aims to explore the practical deployment of the proposed memristive SNN in edge devices, assess detailed energy consumption characteristics, and evaluate the architecture with extensive datasets for real-world applications.

Conclusion

The paper presents a memristive SNN architecture optimized for both classification and pattern recognition tasks, achieving high accuracy with low energy use. Its design scales to larger inputs and more classes and tolerates noise and device variations, offering promise for future AI applications in energy-constrained environments.