Automatic Prompt Optimization with "Gradient Descent" and Beam Search

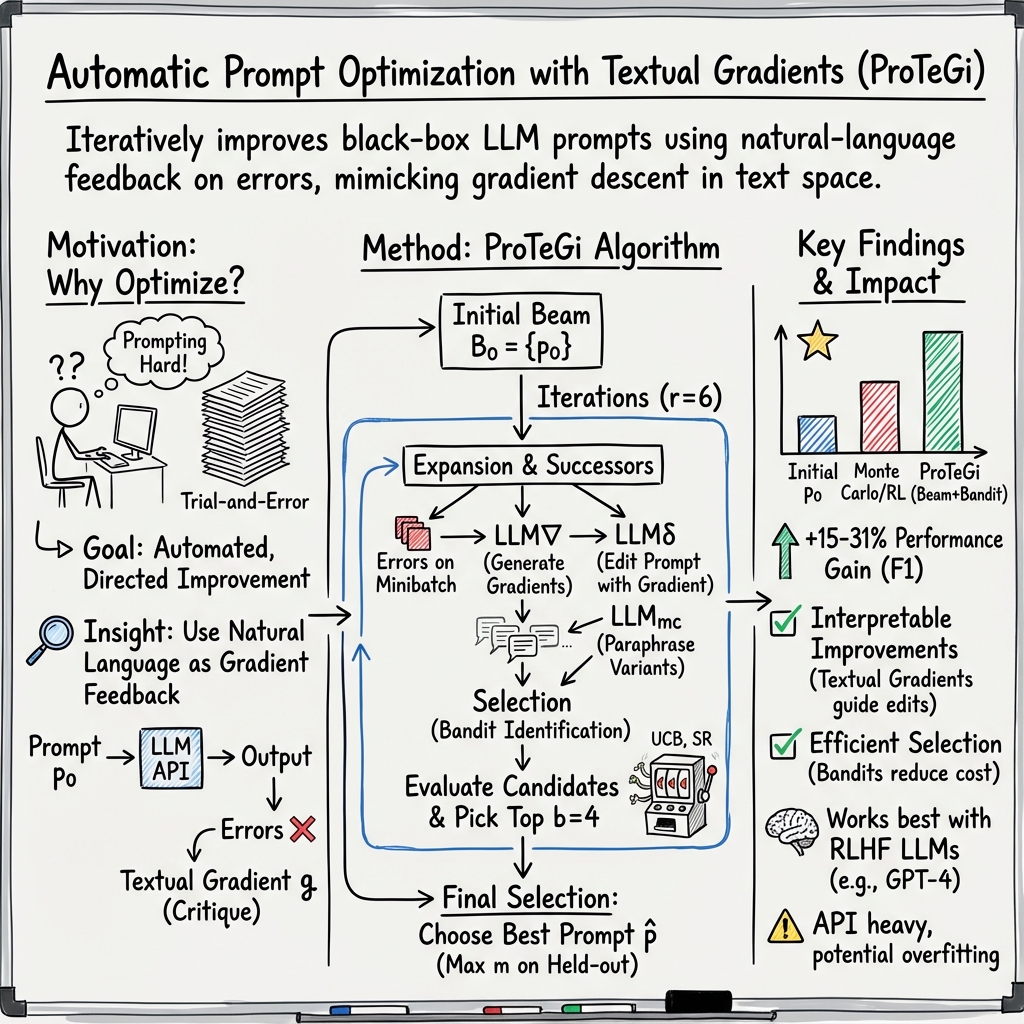

Abstract: LLMs have shown impressive performance as general purpose agents, but their abilities remain highly dependent on prompts which are hand written with onerous trial-and-error effort. We propose a simple and nonparametric solution to this problem, Automatic Prompt Optimization (APO), which is inspired by numerical gradient descent to automatically improve prompts, assuming access to training data and an LLM API. The algorithm uses minibatches of data to form natural language "gradients" that criticize the current prompt. The gradients are then "propagated" into the prompt by editing the prompt in the opposite semantic direction of the gradient. These gradient descent steps are guided by a beam search and bandit selection procedure which significantly improves algorithmic efficiency. Preliminary results across three benchmark NLP tasks and the novel problem of LLM jailbreak detection suggest that Automatic Prompt Optimization can outperform prior prompt editing techniques and improve an initial prompt's performance by up to 31%, by using data to rewrite vague task descriptions into more precise annotation instructions.

Explain it Like I'm 14

Overview

This paper is about helping people write better instructions (called “prompts”) for LLMs like ChatGPT. Good prompts make LLMs perform tasks more accurately, but writing them is often slow and involves a lot of trial and error. The authors propose a simple way to automatically improve prompts using feedback from the LLM itself, so the instructions get clearer and more helpful over time.

What questions does the paper try to answer?

- Can we automatically rewrite a prompt to make an LLM perform better on a task?

- Can we do this using only the LLM’s public API (no special access to its internal parts)?

- Can the method be efficient (not too many costly API calls) and work across different tasks?

How does the method work?

Think of the process like improving a recipe with taste tests:

- Test the current recipe (prompt) on a small plate of dishes (a minibatch of examples).

- Ask a food critic (the LLM) to explain what went wrong in plain language—these comments are “textual gradients.” In standard machine learning, a gradient points uphill toward more error. Here, the “textual gradient” is a short written critique explaining what made the prompt produce mistakes.

- Use those comments to rewrite the recipe (edit the prompt) in the opposite direction—fix the problems the critic mentioned.

- Don’t just make one rewrite—make several versions. Keep the best few and repeat the cycle.
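The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the `LLM` callable, the wording of the requests sent to it, and the `toy_llm` stand-in are all assumptions made up for this example. A real run would replace `toy_llm` with calls to an actual LLM API.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]   # (input text, correct label)
LLM = Callable[[str], str]  # hypothetical: takes a request string, returns the reply


def find_errors(prompt: str, batch: List[Example], llm: LLM) -> List[Example]:
    """Test the prompt on a minibatch and collect the examples it gets wrong."""
    errors = []
    for text, label in batch:
        prediction = llm(f"{prompt}\nInput: {text}\nAnswer:").strip()
        if prediction != label:
            errors.append((text, label))
    return errors


def textual_gradient(prompt: str, errors: List[Example], llm: LLM) -> str:
    """Ask the LLM for a plain-language critique of the prompt."""
    shown = "\n".join(f"- input: {t!r}, expected: {y}" for t, y in errors)
    return llm(
        "The prompt below made mistakes on these examples.\n"
        f"Prompt: {prompt}\nMistakes:\n{shown}\n"
        "Explain briefly what is wrong with the prompt."
    )


def rewrite(prompt: str, critique: str, llm: LLM, n: int) -> List[str]:
    """Ask for n rewrites that fix the problems named in the critique."""
    return [
        llm(f"Prompt: {prompt}\nCritique: {critique}\n"
            f"Rewrite the prompt to fix this critique (variant {i + 1}).")
        for i in range(n)
    ]


def expand(prompt: str, batch: List[Example], llm: LLM, n_rewrites: int = 3) -> List[str]:
    """One step: test, critique, rewrite. Returns the candidate prompts."""
    errors = find_errors(prompt, batch, llm)
    if not errors:
        return [prompt]  # nothing to criticize on this minibatch
    critique = textual_gradient(prompt, errors, llm)
    return rewrite(prompt, critique, llm, n_rewrites)


def toy_llm(request: str) -> str:
    """Stand-in for a real LLM so the sketch runs offline (made up for this example)."""
    if request.startswith("The prompt below"):
        return "The prompt does not say what counts as sarcasm."
    if request.startswith("Prompt:"):
        return "Label the text as sarcastic (yes) or not (no). [revised]"
    return "no"  # the toy model always answers "no"


batch = [("Oh great, another Monday.", "yes"), ("The sky is blue.", "no")]
candidates = expand("Is this sarcastic?", batch, toy_llm, n_rewrites=2)
print(candidates)
```

Because the toy model always answers "no", it misses the sarcastic example, which triggers one critique and two rewrites. With a real LLM, each rewrite would differ, giving the next stage several candidates to choose from.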

Two ideas help this process:

- Beam search: Imagine a talent show where only the top few contestants move on each round. At each step, the method creates many candidate prompts and keeps only the best handful to continue improving.

- Bandit selection: Testing every candidate on every example would be expensive. Instead, the method uses a “multi-armed bandit” strategy (like trying different slot machines with limited coins) to quickly estimate which prompts are most promising without fully testing them all. This saves time and money.
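The two ideas can be combined in a short sketch. The summary does not say which bandit rule is used, so this example assumes UCB1, one common choice: each candidate prompt is a "slot machine", and each "coin" is one evaluation on a randomly drawn example. The names `ucb_select`, `beam_search`, `toy_expand`, and `toy_score` are hypothetical, and the toy scorer is deterministic only to keep the demo predictable.

```python
import math
import random
from typing import Callable, List, Tuple

Example = Tuple[str, str]
Scorer = Callable[[str, Example], float]  # hypothetical: 1.0 if correct, else 0.0


def ucb_select(candidates: List[str], data: List[Example], score: Scorer,
               keep: int, budget: int, rng: random.Random, c: float = 1.0) -> List[str]:
    """Spend `budget` single-example evaluations, then keep the best `keep` prompts."""
    pulls = [0] * len(candidates)
    total = [0.0] * len(candidates)

    def upper_bound(i: int, t: int) -> float:
        if pulls[i] == 0:
            return math.inf  # try every candidate at least once
        return total[i] / pulls[i] + c * math.sqrt(math.log(t) / pulls[i])

    for t in range(1, budget + 1):
        arm = max(range(len(candidates)), key=lambda i: upper_bound(i, t))
        total[arm] += score(candidates[arm], rng.choice(data))
        pulls[arm] += 1

    def mean(i: int) -> float:
        return total[i] / pulls[i] if pulls[i] else 0.0

    ranked = sorted(range(len(candidates)), key=mean, reverse=True)
    return [candidates[i] for i in ranked[:keep]]


def beam_search(start: str, expand: Callable[[str], List[str]], data: List[Example],
                score: Scorer, rounds: int = 3, beam_width: int = 2,
                budget: int = 60, seed: int = 0) -> List[str]:
    """Each round: expand every prompt in the beam, then keep only the top few."""
    rng = random.Random(seed)
    beam = [start]
    for _ in range(rounds):
        pool = beam + [cand for p in beam for cand in expand(p)]
        pool = list(dict.fromkeys(pool))  # drop duplicates, keep order
        beam = ucb_select(pool, data, score, min(beam_width, len(pool)), budget, rng)
    return beam


def toy_expand(prompt: str) -> List[str]:
    """Stand-in for LLM rewrites (made up for this example)."""
    return [prompt + " Be specific.", prompt + " Answer yes or no."]


def toy_score(prompt: str, example: Example) -> float:
    """Toy rule: prompts that ask for a yes/no answer always succeed."""
    return 1.0 if "yes or no" in prompt else 0.0


data = [("Oh great, another Monday.", "yes"), ("The sky is blue.", "no")]
beam = beam_search("Is this sarcastic?", toy_expand, data, toy_score)
print(beam[0])
```

The budget caps the number of evaluations per round no matter how many candidates exist, which is where the cost saving comes from. With a real, noisy scorer, UCB1 spends more of that budget on prompts that look promising and less on clear losers.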

In everyday terms: the system repeatedly asks the LLM “what was wrong with these answers?” and “please rewrite the instructions to fix that,” then keeps the most promising rewrites, testing them efficiently until it finds a better prompt.

What did they find?

The authors tested their method, called ProTeGi (the abstract above uses the name Automatic Prompt Optimization, or APO, for it), on four tasks:

- Detecting “jailbreak” attempts (messages trying to trick an AI into breaking its rules)

- Identifying hate speech (Ethos)

- Detecting fake news (Liar)

- Spotting sarcasm (Arabic sarcasm dataset)

Key results:

- ProTeGi improved the initial prompt's performance by up to 31%.

- It beat other prompt-improvement methods (like random edits and some reinforcement learning approaches) by about 4–8% on average.

- It needed fewer API calls than some competitors, making it more efficient.

- RLHF-tuned models (like ChatGPT and GPT-4) worked better with this method than older models.

- Most improvements happened within a few rounds (around three), suggesting you don’t need very long optimization runs.

Why is this important? Because it shows we can use the LLM’s own feedback to systematically refine prompts, turning vague instructions into clear, step-by-step guidelines that help the model perform better.

What does this mean going forward?

If you want an AI to do a job well, good instructions matter. This research shows a practical way to:

- Reduce the guesswork and manual effort in writing prompts

- Get clearer, more precise instructions that improve accuracy

- Do it using only an LLM’s API—no special access needed

Potential impact:

- Faster development of reliable AI workflows (e.g., moderation, fact-checking, safety checks like jailbreak detection)

- More interpretable prompting, because the “textual gradients” are human-readable critiques

- A foundation for future improvements, like adjusting how big each edit should be, trying more types of tasks (summarization, chatbots), and tuning the selection strategies even further

In short, ProTeGi is like a smart editor for AI instructions: it learns from mistakes, rewrites the prompt, and keeps the best versions—making LLMs more accurate and easier to use.
