An Analysis of "Mixtures of In-Context Learners"

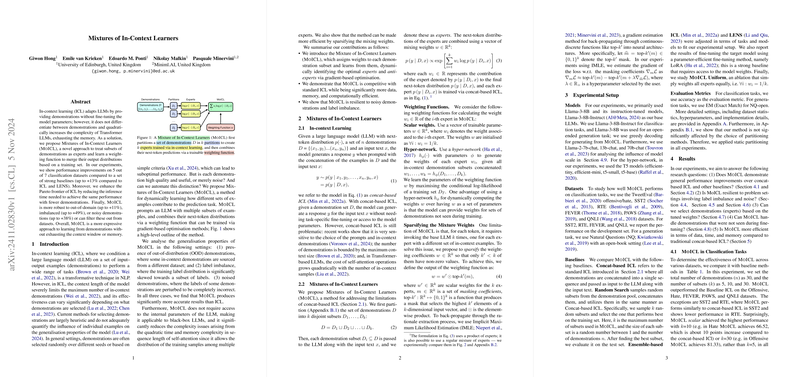

The paper "Mixtures of In-Context Learners" introduces a method for improving in-context learning (ICL) in large language models (LLMs). Its primary contribution is the Mixtures of In-Context Learners (MOICL) framework. MOICL treats subsets of demonstrations as experts and learns a weighting function that merges the experts' output distributions, fitting it by gradient-based optimization on a training set. Standard ICL concatenates every demonstration into a single prompt. As a result, the cost of Transformer self-attention grows quadratically with the number of demonstrations and can exhaust memory, and every demonstration is treated as equally useful. MOICL addresses both problems: each expert conditions on a shorter prompt, and the learned weights let the model adapt to demonstrations of uneven quality or from a shifted distribution.

Key Methodological Insights

MOICL partitions the demonstration set into several smaller subsets, called "experts". Each expert prompts the LLM with its own subset followed by the input. The resulting next-token predictions are then combined according to learned weights, instead of conditioning on a single concatenated prompt. This gives finer-grained control over how much each group of demonstrations counts, and it scales better to large demonstration pools. The weights are trained with gradient descent while the LLM stays frozen. Training therefore needs only the model's output distributions, not updates to its internal parameters.
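The expert construction and weighting described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation. It assumes the pool is split into k contiguous, near-equal subsets, and that the experts' label distributions are merged as a convex mixture with softmax-normalized weights; the paper's exact partitioning and combination rule may differ. `partition`, `softmax`, and `mix` are hypothetical helpers, and the per-expert probabilities are placeholder numbers standing in for frozen-LLM outputs.

```python
import math
from typing import Dict, List, Sequence


def partition(demos: Sequence[str], k: int) -> List[List[str]]:
    """Split the demonstration pool into k disjoint, near-equal subsets (experts)."""
    if not 1 <= k <= len(demos):
        raise ValueError("k must be between 1 and the number of demonstrations")
    size, extra = divmod(len(demos), k)
    subsets, start = [], 0
    for i in range(k):
        # The first `extra` subsets take one additional demonstration.
        end = start + size + (1 if i < extra else 0)
        subsets.append(list(demos[start:end]))
        start = end
    return subsets


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mix(expert_probs: List[Dict[str, float]], theta: Sequence[float]) -> Dict[str, float]:
    """Assumed combination rule: p(y | x) = sum_i w_i * p_i(y | subset_i, x), w = softmax(theta)."""
    weights = softmax(theta)
    labels = expert_probs[0].keys()
    return {y: sum(w * p[y] for w, p in zip(weights, expert_probs)) for y in labels}


demos = [f"demo {i}" for i in range(10)]
experts = partition(demos, 3)  # subset sizes 4, 3, 3
# In practice each distribution would come from prompting the frozen LLM with
# experts[i] plus the test input; these numbers are made up for illustration.
expert_probs = [
    {"positive": 0.9, "negative": 0.1},
    {"positive": 0.7, "negative": 0.3},
    {"positive": 0.2, "negative": 0.8},
]
print(mix(expert_probs, theta=[0.0, 0.0, 0.0]))
```

With all-zero scores every expert gets weight 1/3. Training the scores (`theta`) is what lets the mixture favour some subsets over others.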

The paper investigates several scenarios where MOICL shows distinct advantages:

- Out-of-Distribution (OOD), Imbalanced, and Noisy Data: Compared with standard ICL, MOICL is more robust to OOD demonstrations (up to +11%), label-imbalanced demonstrations (up to +49%), and noisy demonstrations (up to +38%).

- Efficiency: Because each expert processes only its own subset of demonstrations, MOICL avoids the quadratic attention cost of a single long concatenated prompt. The paper reports that it improves the Pareto frontier of ICL, reaching the same performance with fewer demonstrations and less inference time.
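The efficiency argument can be made concrete with back-of-the-envelope arithmetic. This is an idealization, not a figure from the paper. Assume attention cost is proportional to the square of prompt length. Splitting n demonstrations of L tokens each across k experts then reduces the cost from (nL)^2 to k·(nL/k)^2 = (nL)^2/k. The sketch ignores the test input (which every expert prompt must repeat), feed-forward layers, and constant factors. The function names are hypothetical.

```python
def attention_cost(prompt_tokens: int) -> int:
    """Idealized cost of full self-attention: proportional to the squared prompt length."""
    return prompt_tokens ** 2


def icl_cost(num_demos: int, tokens_per_demo: int) -> int:
    # Standard ICL: one prompt that concatenates every demonstration.
    return attention_cost(num_demos * tokens_per_demo)


def moicl_cost(num_demos: int, tokens_per_demo: int, k: int) -> int:
    # Partitioned: k separate prompts, each holding num_demos / k demonstrations.
    if num_demos % k:
        raise ValueError("this sketch assumes k divides num_demos evenly")
    per_expert = num_demos // k
    return k * attention_cost(per_expert * tokens_per_demo)


n, length, k = 64, 50, 8
print(icl_cost(n, length))        # 10240000  (3200 ** 2)
print(moicl_cost(n, length, k))   # 1280000   (8 * 400 ** 2)
print(icl_cost(n, length) / moicl_cost(n, length, k))  # 8.0
```

Under these assumptions the saving equals k. Because the k expert prompts are independent, they can also be run in parallel or in separate batches.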

Empirical Results

MOICL is evaluated on seven classification datasets. It outperforms strong baselines such as standard ICL and LENS on five of them, with gains of up to +13%. It also remains accurate under adverse conditions such as noisy labels and highly imbalanced demonstration sets.
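To make the idea of down-weighting noisy demonstrations concrete, here is a toy sketch. It uses made-up probabilities, not the paper's data or code. It assumes experts are combined as a convex mixture with softmax-normalized weights, and it trains those weights by plain gradient descent on the negative log-likelihood of the gold labels. The paper's exact objective, combination rule, and optimizer may differ. `train_weights` and the probability table are hypothetical.

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train_weights(gold_probs: List[List[float]], steps: int = 200, lr: float = 0.5) -> List[float]:
    """Fit mixture weights w = softmax(theta) by gradient descent on the mean of
    -log(sum_i w_i * p_i(gold | x)) over training examples.

    gold_probs[t][i] is expert i's probability for the gold label of example t.
    Only these probabilities are needed; the LLM itself is never updated.
    """
    k = len(gold_probs[0])
    theta = [0.0] * k
    for _ in range(steps):
        w = softmax(theta)
        grad = [0.0] * k
        for probs in gold_probs:
            mixed = sum(wi * pi for wi, pi in zip(w, probs))
            for j in range(k):
                # d/d theta_j of -log(mixed) equals w_j * (1 - p_j / mixed)
                grad[j] += w[j] * (1.0 - probs[j] / mixed)
        theta = [t - lr * g / len(gold_probs) for t, g in zip(theta, grad)]
    return softmax(theta)


# Toy setting: experts 0 and 1 hold clean demonstrations, while expert 2 holds
# demonstrations with flipped labels, so it rarely favours the gold label.
gold_probs = [
    [0.85, 0.80, 0.30],
    [0.75, 0.90, 0.25],
    [0.80, 0.70, 0.40],
]
weights = train_weights(gold_probs)
print([round(w, 3) for w in weights])  # the noisy expert ends with the smallest weight
```

Any expert whose gold-label probability is below the current mixture's gets a positive gradient on its score, so its weight shrinks. This is one simple mechanism by which learned weights can filter out unhelpful demonstrations.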

The paper also examines scalability. With standard ICL, adding demonstrations generally improves accuracy but raises inference cost. MOICL, by contrast, can achieve better results with smaller, well-weighted demonstration subsets, which points to its data efficiency. In addition, the hyper-network variant of MOICL produces expert weights with an auxiliary network rather than learning one fixed weight per subset. This variant suggests the method can scale without depending on the specific demonstrations used during training.

Theoretical and Practical Implications

Conceptually, MOICL treats demonstrations as unequal contributors: rather than assuming every example in the prompt helps equally, it learns how much each subset should count. Practically, it offers a way to make LLM inference more computationally efficient on limited hardware or under context-window constraints while remaining competitive with, and often better than, standard ICL.
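As an illustration of the context-window point, the sketch below shows one way a demonstration pool too large for a single prompt could be grouped into expert subsets that each fit the window. This packing step is an assumption for illustration and not part of the paper, which partitions demonstrations into a chosen number of subsets. The helper `pack_demonstrations` is hypothetical, and it uses a simple next-fit greedy strategy over precomputed token lengths.

```python
from typing import List, Sequence


def pack_demonstrations(demo_lengths: Sequence[int], context_limit: int,
                        input_length: int) -> List[List[int]]:
    """Next-fit greedy grouping of demonstration indices so that each expert
    prompt (its demonstrations plus the test input) fits in context_limit tokens."""
    budget = context_limit - input_length
    subsets: List[List[int]] = []
    current: List[int] = []
    used = 0
    for idx, length in enumerate(demo_lengths):
        if length > budget:
            raise ValueError(f"demonstration {idx} alone exceeds the context budget")
        if used + length > budget:
            # Close the current expert and start a new one.
            subsets.append(current)
            current, used = [], 0
        current.append(idx)
        used += length
    if current:
        subsets.append(current)
    return subsets


print(pack_demonstrations([300, 500, 400, 200, 600], context_limit=1024, input_length=100))
# [[0, 1], [2, 3], [4]]
```

Next-fit keeps demonstrations in their original order and runs in one pass. A bin-packing heuristic such as first-fit decreasing would usually produce fewer experts, at the cost of reordering.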

These results suggest that modular, expert-style approaches can retain the strengths of in-context learning while mitigating its limitations, and that such approaches may be worth adopting in other AI tasks. Promising directions for future work include improving the interpretability of the learned subset weights and extending the method beyond classification to a broader range of generative tasks.

Overall, the MOICL framework enriches the methodological tools available for leveraging demonstrations in machine learning, promoting both efficiency and robustness in LLM applications.