AlphaGo Moment for Model Architecture Discovery (2507.18074v1)

Abstract: While AI systems demonstrate exponentially improving capabilities, the pace of AI research itself remains linearly bounded by human cognitive capacity, creating an increasingly severe development bottleneck. We present ASI-Arch, the first demonstration of Artificial Superintelligence for AI research (ASI4AI) in the critical domain of neural architecture discovery--a fully autonomous system that shatters this fundamental constraint by enabling AI to conduct its own architectural innovation. Moving beyond traditional Neural Architecture Search (NAS), which is fundamentally limited to exploring human-defined spaces, we introduce a paradigm shift from automated optimization to automated innovation. ASI-Arch can conduct end-to-end scientific research in the domain of architecture discovery, autonomously hypothesizing novel architectural concepts, implementing them as executable code, training and empirically validating their performance through rigorous experimentation and past experience. ASI-Arch conducted 1,773 autonomous experiments over 20,000 GPU hours, culminating in the discovery of 106 innovative, state-of-the-art (SOTA) linear attention architectures. Like AlphaGo's Move 37 that revealed unexpected strategic insights invisible to human players, our AI-discovered architectures demonstrate emergent design principles that systematically surpass human-designed baselines and illuminate previously unknown pathways for architectural innovation. Crucially, we establish the first empirical scaling law for scientific discovery itself--demonstrating that architectural breakthroughs can be scaled computationally, transforming research progress from a human-limited to a computation-scalable process. We provide comprehensive analysis of the emergent design patterns and autonomous research capabilities that enabled these breakthroughs, establishing a blueprint for self-accelerating AI systems.

Summary

- The paper introduces ASI-Arch, a fully autonomous system that leverages large language models to iteratively propose, implement, and validate novel neural architectures.

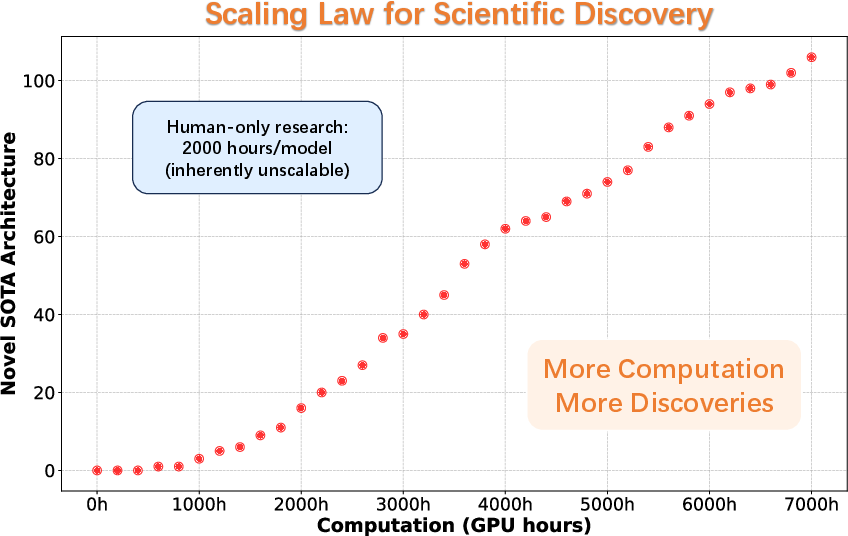

- It demonstrates a linear scaling law, showing that increased compute leads to a proportional rise in state-of-the-art architecture discoveries.

- The study analyzes the design components that recur in the discovered architectures, evaluates them empirically against human-designed baselines, and positions autonomous discovery as a paradigm shift in AI research.

Autonomous Neural Architecture Discovery with ASI-Arch

Introduction and Motivation

The paper "AlphaGo Moment for Model Architecture Discovery" (2507.18074) presents ASI-Arch, a fully autonomous, multi-agent system for neural architecture discovery. The work addresses the bottleneck in AI progress imposed by the linear scaling of human research capacity, contrasting it with the exponential growth in AI system capabilities. By leveraging LLMs as autonomous agents, ASI-Arch is designed to hypothesize, implement, train, and empirically validate novel neural architectures without human intervention, thus operationalizing the concept of Artificial Superintelligence for AI research (ASI4AI).

The system is evaluated in the context of linear attention architectures, a domain of active research due to the computational inefficiency of standard quadratic attention mechanisms. The authors claim that ASI-Arch not only surpasses traditional Neural Architecture Search (NAS) by escaping human-defined search spaces, but also demonstrates a computational scaling law for scientific discovery: the cumulative number of architectural breakthroughs grows linearly with compute expenditure.

System Architecture and Methodology

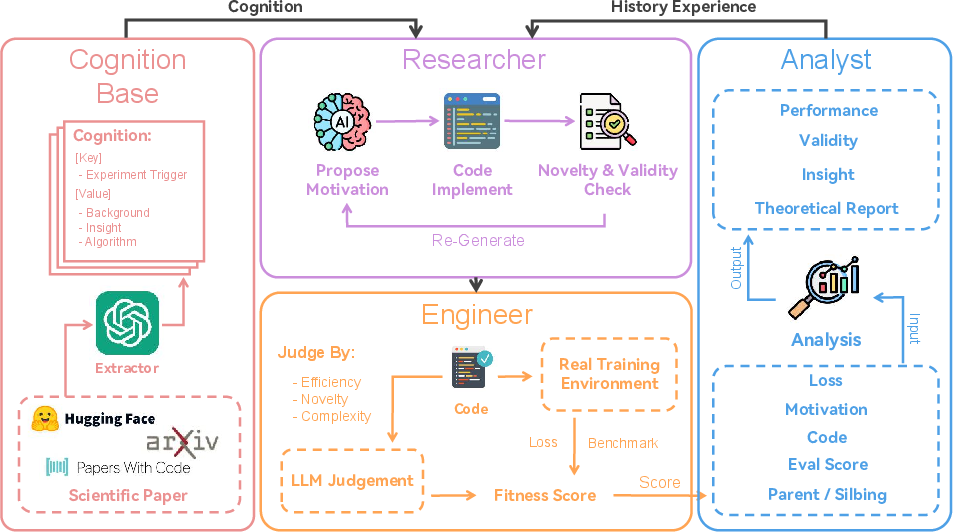

ASI-Arch is structured as a closed-loop, modular multi-agent system comprising four primary modules: Researcher, Engineer, Analyst, and Cognition. The system operates in iterative cycles, with each module fulfilling a distinct role in the autonomous research process.

Figure 1: Overview of the four-module ASI-Arch framework, illustrating the closed evolutionary loop for autonomous architecture discovery.
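The closed loop can be pictured as a single iteration that passes through all four modules and appends its result to a shared history. The sketch below is a minimal illustration under stated assumptions, not ASI-Arch's code: each module is a plain Python callable rather than an LLM agent, and the names `ResearchLoop`, `Experiment`, and `max_revisions` are hypothetical. The Engineer step retries a failed run with a revised attempt instead of discarding it; the finite retry budget is an assumption of this sketch.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Experiment:
    # One entry in the shared experiment history (hypothetical schema).
    design: str
    fitness: float
    analysis: str


@dataclass
class ResearchLoop:
    # Each module is a plain callable here; in ASI-Arch they are LLM-driven agents.
    propose: Callable[[List[Experiment], List[str]], str]       # Researcher
    implement_and_train: Callable[[str, int], Optional[float]]  # Engineer: fitness, or None if the run failed
    analyze: Callable[[str, float], str]                        # Analyst
    retrieve: Callable[[str], List[str]]                        # Cognition
    max_revisions: int = 3                                      # hypothetical retry budget
    history: List[Experiment] = field(default_factory=list)

    def step(self) -> Optional[Experiment]:
        # Cognition: fetch literature insights relevant to the latest analysis.
        context = self.history[-1].analysis if self.history else ""
        insights = self.retrieve(context)
        # Researcher: propose a design from past experiments plus retrieved insights.
        design = self.propose(self.history, insights)
        # Engineer: revise and retry a failing run instead of discarding it.
        # The attempt index stands in for the error feedback a real agent would read.
        fitness: Optional[float] = None
        for attempt in range(self.max_revisions):
            fitness = self.implement_and_train(design, attempt)
            if fitness is not None:
                break
        if fitness is None:
            return None  # retry budget exhausted; nothing is recorded
        # Analyst: summarize the result; the record feeds the next cycle.
        record = Experiment(design, fitness, self.analyze(design, fitness))
        self.history.append(record)
        return record


# Toy stubs: the Engineer fails on its first attempt and succeeds after one revision.
loop = ResearchLoop(
    propose=lambda history, insights: f"design-{len(history)}",
    implement_and_train=lambda design, attempt: None if attempt == 0 else 0.5,
    analyze=lambda design, fitness: f"{design} reached fitness {fitness}",
    retrieve=lambda context: ["gating helps"],
)
for _ in range(3):
    loop.step()
print([e.design for e in loop.history])  # ['design-0', 'design-1', 'design-2']
```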

- Researcher: Proposes new architectures by synthesizing historical experimental data and human expert knowledge. It employs a two-level seed selection strategy to balance exploitation of high-performing designs and exploration of novel directions. Summarization of historical context is performed dynamically to encourage diversity in proposals.

- Engineer: Implements and trains the proposed architectures in a real code environment. A robust self-revision mechanism ensures that the agent iteratively debugs and corrects its own code until successful training is achieved, rather than discarding failed attempts.

- Analyst: Synthesizes experimental results, performing both quantitative and qualitative analysis. The Analyst leverages both empirical data and a curated cognition base (extracted from the literature) to inform subsequent design cycles.

- Cognition: Maintains a knowledge base of distilled insights from seminal papers, enabling retrieval-augmented generation and targeted problem-solving.
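The Researcher's two-level seed selection can be sketched as drawing a parent from a small elite tier (exploitation) and reference designs from a wider tier (exploration). This is a minimal sketch assuming a fitness-ranked candidate list; the tier sizes `elite_size` and `pool_size`, the reference count, and the function name are hypothetical choices, not values confirmed by the summary.

```python
import random
from typing import List, Optional, Sequence, Tuple


def two_level_seed_selection(
    ranked: Sequence[str],               # candidate designs sorted best-first by fitness
    elite_size: int = 10,                # hypothetical size of the exploitation tier
    pool_size: int = 50,                 # hypothetical size of the wider candidate pool
    n_references: int = 4,               # hypothetical number of references for the prompt
    rng: Optional[random.Random] = None,
) -> Tuple[str, List[str]]:
    rng = rng or random.Random()
    elite = list(ranked[:elite_size])
    wider = list(ranked[elite_size:pool_size])
    if not elite:
        raise ValueError("need at least one ranked candidate")
    # Level 1 (exploit): the parent to modify is always a top performer.
    parent = rng.choice(elite)
    # Level 2 (explore): references come from the broader tier, without repeats.
    references = rng.sample(wider, min(n_references, len(wider)))
    return parent, references


ranked = [f"arch-{i}" for i in range(60)]
parent, refs = two_level_seed_selection(ranked, rng=random.Random(0))
print(parent, refs)
```

Sampling the parent only from the elite keeps each proposal anchored to a strong design, while the references expose the Researcher to more varied ideas.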

The evolutionary process is governed by a composite fitness function that integrates quantitative metrics (loss, benchmark scores) and qualitative assessments (LLM-based architectural evaluation). A two-stage exploration-then-verification protocol is adopted: initial broad exploration with small models, followed by rigorous validation of promising candidates at larger scales.
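A composite fitness of this kind can be sketched as squashing the quantitative improvements over a baseline through a sigmoid and averaging them with a qualitative LLM score. This is a minimal sketch under assumptions: the equal weighting, the `scale` sharpness parameter, and the use of baseline differences are illustrative choices, not the paper's confirmed formula.

```python
import math


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def composite_fitness(
    loss: float,
    baseline_loss: float,
    bench: float,
    baseline_bench: float,
    judge_score: float,   # LLM-judged architectural quality, assumed to lie in [0, 1]
    scale: float = 10.0,  # hypothetical sigmoid sharpness
) -> float:
    # Quantitative terms: improvement over the baseline, squashed into (0, 1).
    loss_term = sigmoid(scale * (baseline_loss - loss))     # lower loss -> above 0.5
    bench_term = sigmoid(scale * (bench - baseline_bench))  # higher score -> above 0.5
    # Qualitative term: clip the judge's rating into [0, 1].
    judge_term = min(max(judge_score, 0.0), 1.0)
    # Equal weighting of the three terms (an assumption of this sketch).
    return (loss_term + bench_term + judge_term) / 3.0


# Matching the baseline with a neutral judge gives 0.5.
print(composite_fitness(2.0, 2.0, 0.5, 0.5, 0.5))  # 0.5
# Doubling an already large loss improvement barely raises the score.
print(composite_fitness(0.0, 2.0, 0.5, 0.5, 0.5) - composite_fitness(1.0, 2.0, 0.5, 0.5, 0.5))
```

Because each quantitative term is bounded, gains far beyond the baseline saturate, which is one way a sigmoid-based fitness can flatten out as candidates improve.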

Empirical Results and Scaling Law

ASI-Arch conducted 1,773 autonomous experiments over 20,000 GPU hours, resulting in the discovery of 106 novel, state-of-the-art (SOTA) linear attention architectures. The system demonstrates a strong linear relationship between compute expenditure and the cumulative count of SOTA architectures discovered.

Figure 2: Linear scaling of SOTA architecture discovery with compute hours, establishing an empirical scaling law for automated scientific discovery.
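The reported totals imply an average discovery rate of 106 / 20,000 = 0.0053 SOTA architectures per GPU hour, or about 5.3 per 1,000 GPU hours. Checking a linear relationship like the one in Figure 2 amounts to an ordinary least-squares fit of cumulative count against compute. The sketch below assumes that framing; `fit_line` is a hypothetical helper, and the checkpoint values are made up for illustration, not data from the paper.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    # Ordinary least squares for y ~= slope * x + intercept.
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Average rate from the reported totals: 106 SOTA designs over 20,000 GPU hours.
avg_rate = 106 / 20_000
print(avg_rate)  # 0.0053

# Made-up checkpoints (GPU hours, cumulative SOTA count), for illustration only.
hours = [0, 5_000, 10_000, 15_000, 20_000]
counts = [0, 25, 50, 75, 100]
slope, intercept = fit_line(hours, counts)
print(slope, intercept)
```

The fitted slope is the marginal number of SOTA discoveries per GPU hour; a near-constant slope across the run is what "linear scaling" means here.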

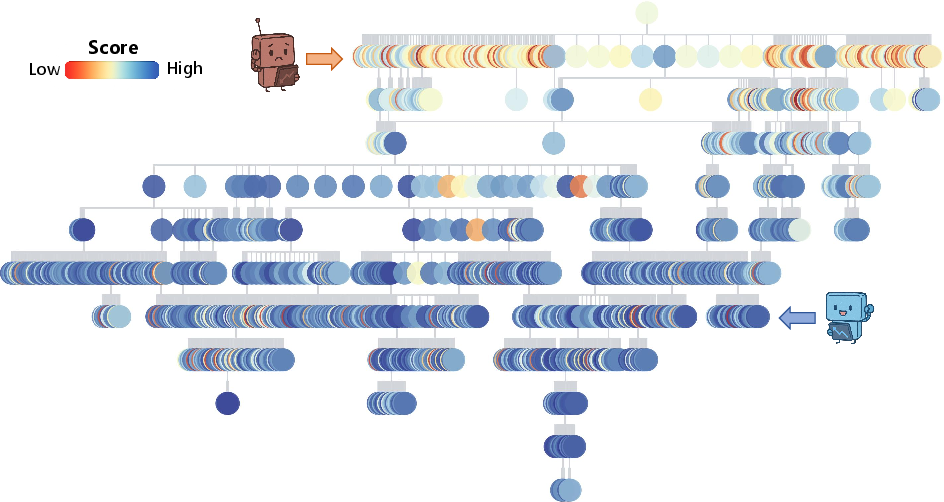

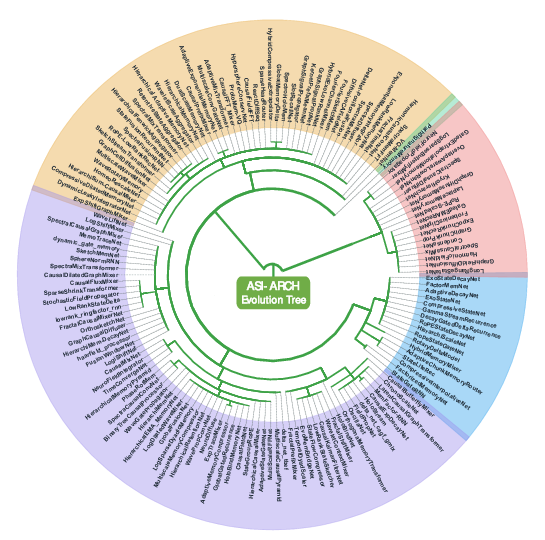

The evolutionary trajectory and phylogenetic relationships among architectures are visualized, revealing the system's capacity for both incremental improvement and radical innovation.

Figure 3: Exploration trajectory tree of the first-stage architecture search, with nodes colored by performance.

Figure 4: Architectural phylogenetic tree, illustrating parent-child relationships and evolutionary branching.

Performance evaluation on language modeling and reasoning benchmarks shows that the top AI-discovered architectures consistently outperform strong human-designed baselines (e.g., DeltaNet, Gated DeltaNet, Mamba2) across a range of tasks, including commonsense reasoning, reading comprehension, and factual question answering.

Analysis of Emergent Design Patterns

The system's design choices and search dynamics are analyzed in detail:

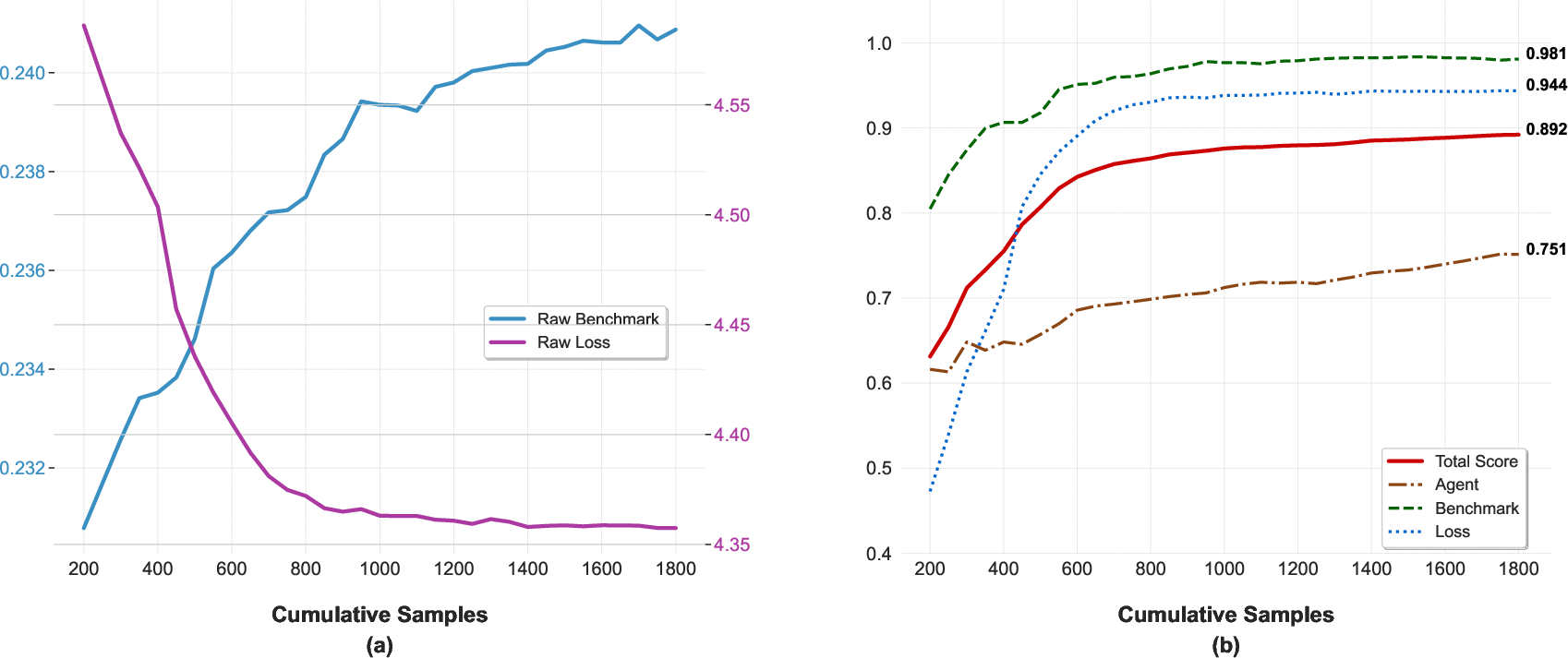

- Fitness Score Dynamics: The average fitness of top candidates improves rapidly at first and then plateaus. The plateau follows from the sigmoid transformation in the fitness function, which bounds each score component and is intended to guard against reward hacking and over-optimization.

Figure 5: Key performance indicators and fitness score components over cumulative samples, showing steady improvement and plateauing due to fitness function design.

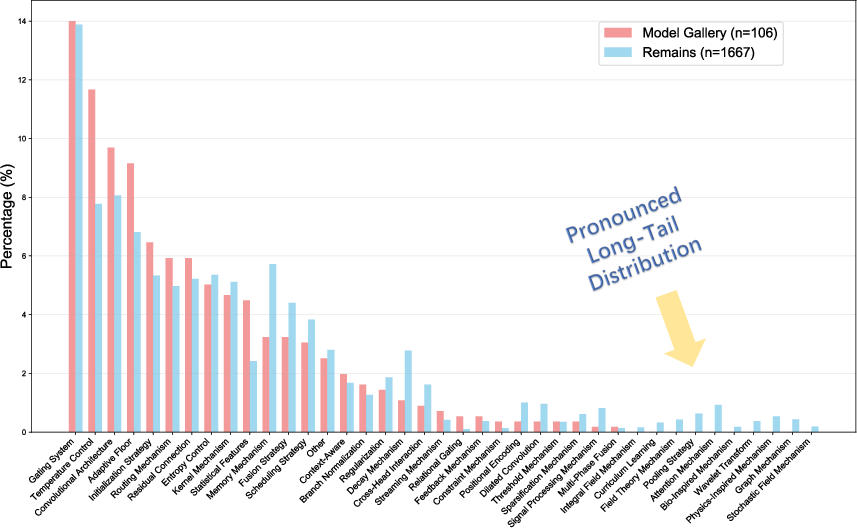

- Component Usage: Statistical analysis reveals a system-wide preference for established components such as gating mechanisms and convolutions. SOTA models exhibit a more focused component distribution, while non-SOTA models display a long tail of less effective novel components.

Figure 6: Statistical breakdown of architectural component usage, highlighting focused strategies in SOTA models versus broader, less effective exploration in others.
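One way to quantify a "focused" versus "long-tail" component distribution is to count component occurrences per group and compare their Shannon entropy, where lower entropy means usage is concentrated on fewer components. This is a minimal sketch; entropy is an illustrative metric chosen here, not necessarily the statistic used in the paper, and the component lists are invented toy data.

```python
import math
from collections import Counter
from typing import Dict, Iterable, List


def component_distribution(designs: Iterable[List[str]]) -> Dict[str, float]:
    # Share of all component occurrences taken by each component, most common first.
    counts = Counter(c for design in designs for c in design)
    total = sum(counts.values())
    return {c: n / total for c, n in counts.most_common()}


def entropy_bits(dist: Dict[str, float]) -> float:
    # Shannon entropy in bits: lower means usage is concentrated on fewer components.
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


# Toy component lists, invented for illustration.
sota = [["gating", "conv"], ["gating", "conv"], ["gating"]]
others = [["gating", "fourier"], ["conv", "wavelet"], ["spline", "gating"]]
sota_dist = component_distribution(sota)
other_dist = component_distribution(others)
print(sota_dist)  # {'gating': 0.6, 'conv': 0.4}
print(entropy_bits(sota_dist) < entropy_bits(other_dist))  # True
```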

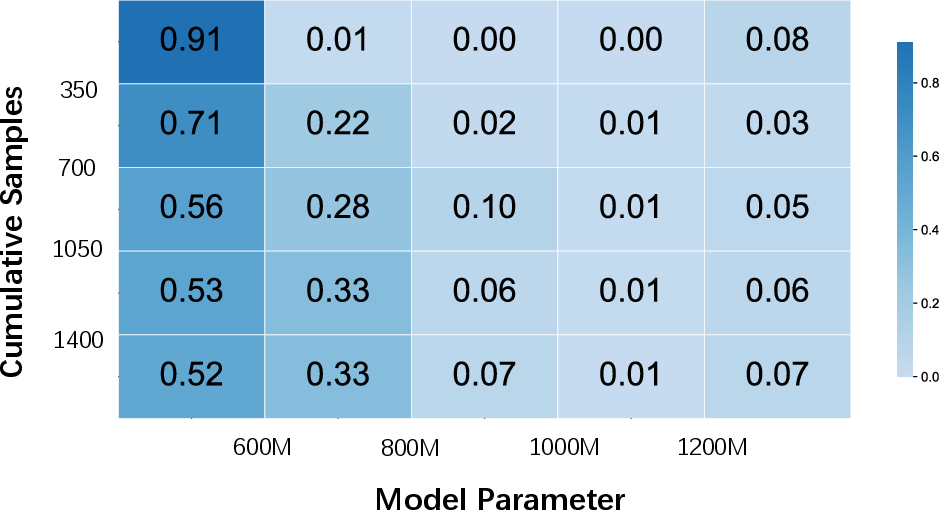

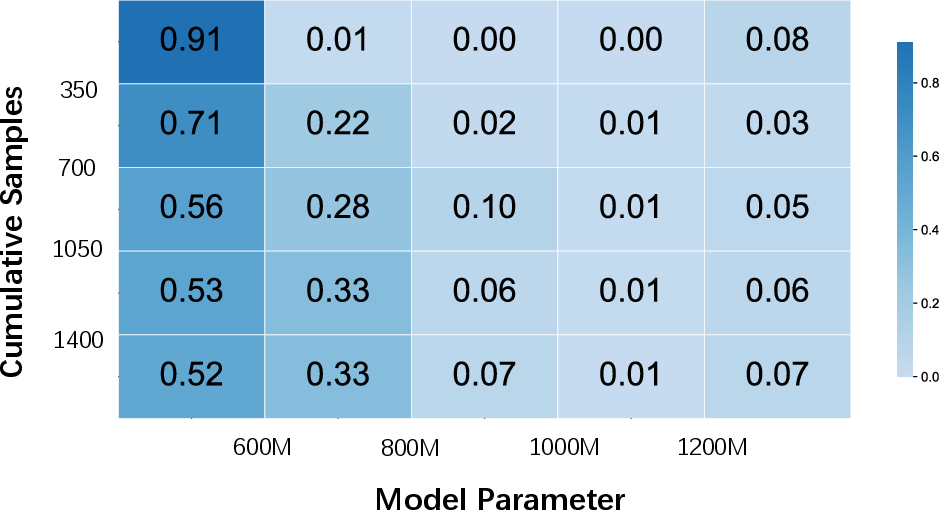

- Parameter Stability: The distribution of model parameter counts remains stable after initial diversification, indicating that performance gains are not driven by increasing model complexity.

Figure 7: Parameter distribution over the exploration stage, demonstrating stability in model complexity.

- Source of Innovation: Analysis of design provenance shows that while the majority of ideas originate from the cognition base (human literature), the highest-performing architectures increasingly rely on empirical analysis of experimental results, rather than direct imitation or random novelty.

Figure 7: Comparison of the influence of pipeline components on SOTA versus other model designs, showing higher dependency on empirical analysis for SOTA architectures.

Architectural Innovations

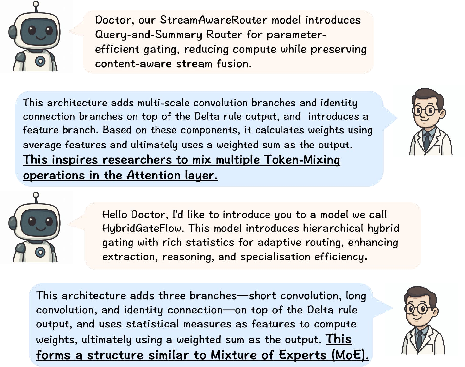

The top-performing architectures discovered by ASI-Arch introduce several novel mechanisms:

- Hierarchical Path-Aware Gating: Multi-stage routers for balancing local and global reasoning.

- Content-Aware Sharpness Gating: Dynamic, content-driven gating with learnable temperature parameters.

- Parallel Sigmoid Fusion: Independent sigmoid gates for each path, breaking softmax-induced trade-offs.

- Hierarchical Gating with Dynamic Floors: Learnable minimum allocations to prevent path collapse.

- Adaptive Multi-Path Gating: Token-level control with persistent entropy penalties to maintain diversity.

These innovations are not mere recombinations of existing components but represent emergent design principles that systematically outperform human baselines.

Figure 8: AI-discovered architectures challenge established design assumptions, analogous to AlphaGo's "Move 37" in Go.
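The contrast between softmax-based path fusion and parallel sigmoid fusion, together with a minimum allocation per path, can be illustrated on scalar gate logits. This is a minimal sketch, not code from the discovered architectures: the function names are hypothetical, the floor is a constant rather than a learned parameter, and the content-aware temperature is modelled as a plain argument instead of being computed from token content.

```python
import math
from typing import List, Sequence


def _sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def softmax_weights(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    # Softmax couples the paths: weights sum to 1, so raising one lowers the rest.
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def parallel_sigmoid_weights(
    logits: Sequence[float], temperature: float = 1.0, floor: float = 0.0
) -> List[float]:
    # One independent sigmoid gate per path, so several paths can be open at once.
    # `floor` is a minimum allocation per path (constant here; learnable in the
    # "dynamic floors" variant). A lower temperature gives sharper gates.
    return [floor + (1.0 - floor) * _sigmoid(z / temperature) for z in logits]


def fuse(path_outputs: Sequence[Sequence[float]], weights: Sequence[float]) -> List[float]:
    # Weighted sum of per-path output vectors.
    dim = len(path_outputs[0])
    return [sum(w * out[i] for w, out in zip(weights, path_outputs)) for i in range(dim)]


logits = [3.0, 3.0]  # two paths the router rates equally well
print(softmax_weights(logits))           # [0.5, 0.5]
print(parallel_sigmoid_weights(logits))  # both roughly 0.95
print(parallel_sigmoid_weights([-10.0], floor=0.1)[0] >= 0.1)  # True
print(fuse([[1.0, 2.0], [3.0, 4.0]], [0.5, 0.5]))  # [2.0, 3.0]
```

With softmax, two equally useful paths must split a fixed budget; independent sigmoid gates let both stay nearly fully open, which is the trade-off the parallel fusion design removes, while the floor keeps a weak path from collapsing to zero.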

Implications and Future Directions

The empirical scaling law demonstrated by ASI-Arch has significant implications for the future of AI research. By decoupling the rate of scientific discovery from human cognitive limits and tying it to computational resources, the work provides a concrete pathway toward self-accelerating AI systems. The open-sourcing of the framework, discovered architectures, and cognitive traces further democratizes access to AI-driven research.

The authors identify several avenues for future work:

- Multi-Architecture Initialization: Expanding the search to multiple diverse baselines could uncover entirely new architectural families.

- Component-wise Ablation: Systematic ablation studies are needed to isolate the contributions of individual modules (e.g., cognition vs. analysis).

- Engineering Optimization: Implementing custom accelerated kernels for the discovered architectures would enable direct benchmarking of computational efficiency and facilitate deployment in production environments.

Conclusion

ASI-Arch represents a significant advance in autonomous scientific discovery, operationalizing the concept of ASI4AI in the domain of neural architecture design. The system demonstrates that architectural innovation can be computationally scaled, with emergent design principles systematically surpassing human intuition. The work establishes a blueprint for future self-improving AI systems and provides a foundation for further research into fully autonomous, computation-driven scientific progress.


Authors (7)
