- The paper introduces a unified framework and taxonomy for self-evolving agents, breaking down evolution into what, when, how, and where to evolve.

- It details methodologies including reward-based, imitation, and evolutionary strategies to enable adaptive, self-improving systems.

- The survey highlights challenges such as personalization, generalization, safety, and dynamic evaluation to advance robust, adaptive AI architectures.

A Comprehensive Survey of Self-Evolving Agents: Foundations, Taxonomy, and Pathways Toward Artificial Super Intelligence

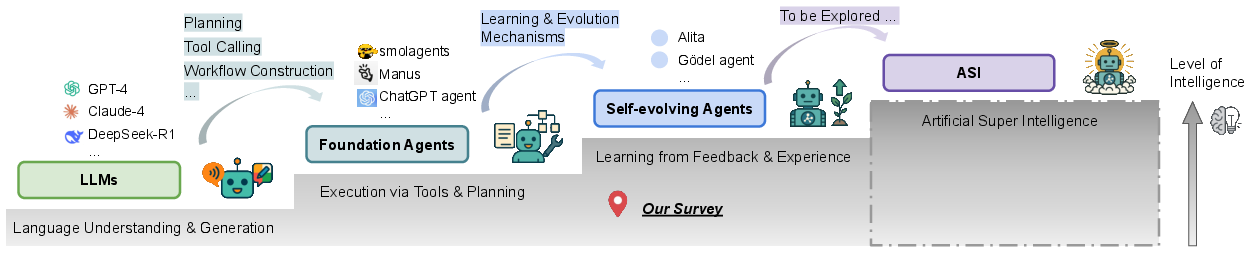

The paper "A Survey of Self-Evolving Agents: On Path to Artificial Super Intelligence" (2507.21046) provides a systematic and in-depth review of the emerging paradigm of self-evolving agents, positioning them as a critical step toward the realization of Artificial Super Intelligence (ASI). The survey introduces a unified theoretical and practical framework for understanding, designing, and evaluating self-evolving agents, dissecting the field along the axes of what, when, how, and where to evolve, and establishing a roadmap for future research and deployment.

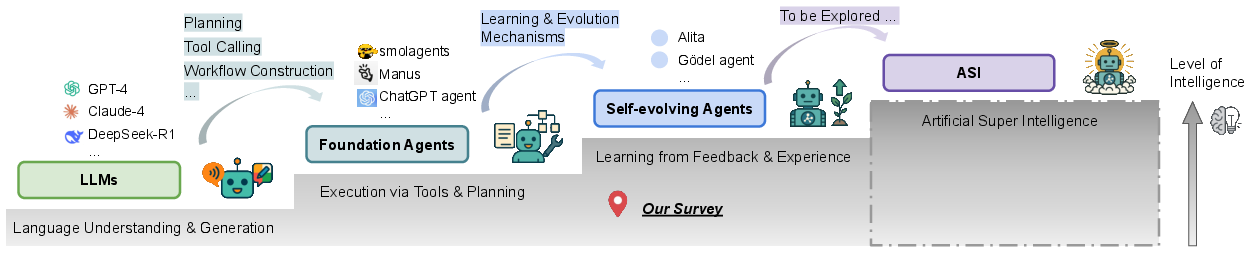

Figure 1: A conceptual trajectory illustrating the progression from LLMs to foundation agents, self-evolving agents, and ultimately toward hypothetical ASI, with increasing intelligence and adaptivity.

Motivation and Conceptual Foundations

The survey identifies the static nature of current LLMs, which cannot adapt their internal parameters or workflows to novel tasks or dynamic environments, as a fundamental bottleneck for deploying robust, general-purpose AI agents. It formalizes self-evolving agents as systems capable of continual, autonomous adaptation: they modify not only their model parameters but also their context, toolset, and architecture in response to real-world feedback and experience.

The paper provides a formal definition of self-evolving agents within a POMDP framework, introducing the self-evolving strategy as a transformation f that maps the current agent system to a new state, conditioned on observed trajectories and feedback. The objective is to maximize cumulative utility across a sequence of tasks, generalizing beyond traditional curriculum learning, lifelong learning, and model editing paradigms by enabling active exploration, structural modification, and self-reflection.
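
The update rule and objective described above can be written compactly. The symbols are assumed notation chosen for illustration, not quoted from the paper: $\Pi_j$ is the agent system before task $\mathcal{T}_j$, $\tau_j$ is the trajectory observed on that task, $r_j$ is the feedback received, and $U$ is a utility function scoring the agent on a task.

```latex
% Self-evolving strategy f: maps the current agent system to the next one,
% conditioned on the observed trajectory and feedback.
\Pi_{j+1} = f(\Pi_j, \tau_j, r_j)

% Objective: choose f to maximize cumulative utility over the task sequence.
\max_{f} \; \sum_{j=0}^{n} U(\Pi_j, \mathcal{T}_j)
```

Read this way, the strategy $f$ itself is the object being designed, rather than any single set of model parameters.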

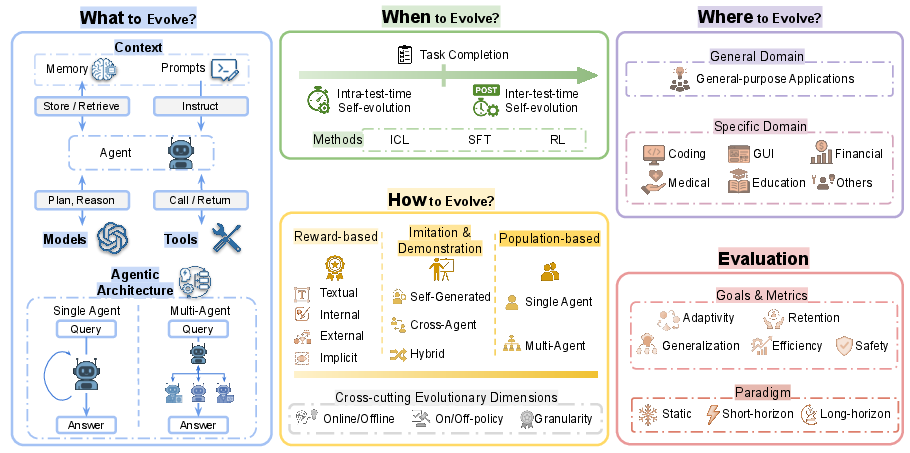

Taxonomy: What, When, How, and Where to Evolve

The survey organizes the field around four orthogonal dimensions, each illustrated with representative methods and systems.

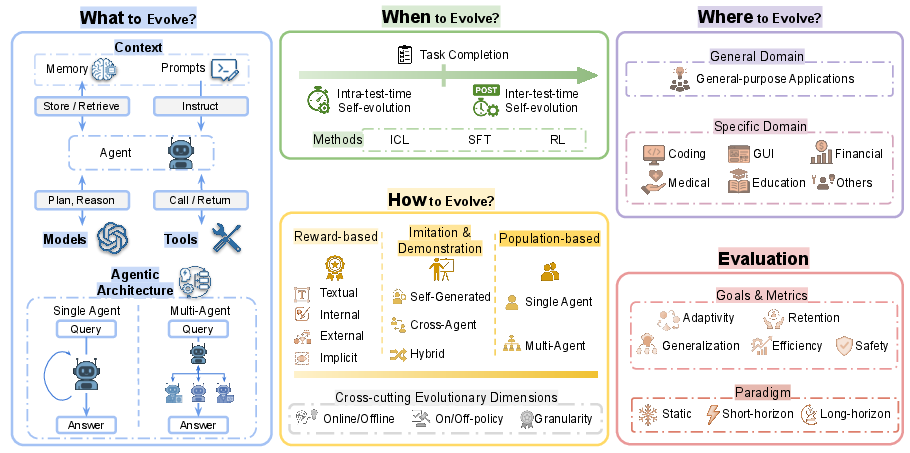

Figure 2: A comprehensive overview of self-evolving agents across key dimensions: what, when, how, and where to evolve, with evaluation goals and paradigms.

What to Evolve

- Model: Includes both policy (parameter) evolution and experience-driven adaptation. Methods such as SCA, SELF, and RAGEN enable agents to generate their own training data, refine parameters via self-generated supervision, and leverage environmental feedback for continual improvement.

- Context: Encompasses prompt optimization and memory evolution. Techniques like PromptBreeder, DSPy, and Mem0 allow agents to refine instructions and manage long-term memory, supporting both in-context adaptation and knowledge retention.

- Tool: Covers autonomous tool creation, mastery, and scalable management. Systems such as Voyager, Alita, and ToolGen enable agents to discover, synthesize, and select tools, moving from tool users to tool makers.

- Architecture: Involves both single-agent and multi-agent system optimization. Approaches like AgentSquare, Darwin Gödel Machine, and AFlow demonstrate the evolution of agentic workflows, modular design, and even self-rewriting code.
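
One way to picture the four evolvable components is as fields of a single agent record, each of which can be updated independently. The sketch below is a hypothetical illustration: `AgentSystem`, its field names, and the two `evolve_*` helpers are assumptions made for this example and do not come from the survey or any of the systems it cites.

```python
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class AgentSystem:
    """Hypothetical container for the four evolvable components."""
    model_version: int          # stands in for model parameters
    context: Tuple[str, ...]    # prompts and memory entries
    tools: Tuple[str, ...]      # names of available tools
    architecture: str           # e.g. "single-agent" or a workflow identifier


def evolve_context(agent: AgentSystem, lesson: str) -> AgentSystem:
    """Context evolution: append a memory entry and leave the rest untouched."""
    return replace(agent, context=agent.context + (lesson,))


def evolve_tools(agent: AgentSystem, tool_name: str) -> AgentSystem:
    """Tool evolution: register a newly created tool if it is not already known."""
    if tool_name in agent.tools:
        return agent
    return replace(agent, tools=agent.tools + (tool_name,))


base = AgentSystem(
    model_version=1,
    context=("You are a helpful agent.",),
    tools=("search",),
    architecture="single-agent",
)
evolved = evolve_tools(
    evolve_context(base, "Check units before answering."),
    "unit_converter",
)
```

Keeping the record immutable means every evolution step yields a new agent version, so earlier versions remain available for comparison or rollback.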

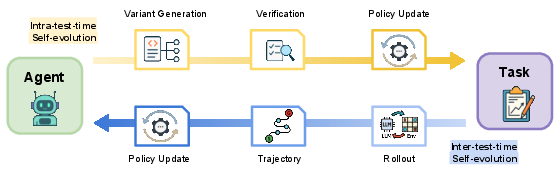

When to Evolve

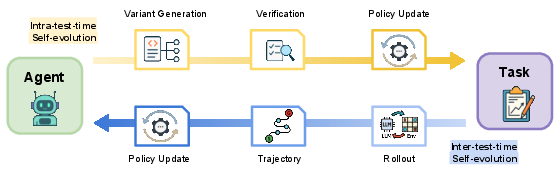

Figure 3: An overview of when to evolve, contrasting intra-test-time (online, within-task) and inter-test-time (offline, between-task) self-evolution.

- Intra-test-time: Adaptation occurs during task execution, leveraging in-context learning, test-time supervised fine-tuning, or reinforcement learning. Reflexion and AdaPlanner exemplify real-time self-reflection and plan revision.

- Inter-test-time: Learning happens retrospectively, using accumulated trajectories for offline SFT or RL. SELF, STaR, and RAGEN illustrate iterative self-improvement and curriculum-driven policy updates.
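
The difference between the two timings can be sketched on a toy problem. In the example below, a hypothetical number-guessing task stands in for a real environment. `solve_task` adapts within one episode using feedback (intra-test-time), and `update_start` changes the agent's persistent starting point between episodes (inter-test-time). Both functions and the task are assumptions for illustration, not methods from the survey.

```python
from typing import List


def solve_task(target: int, start: int, max_steps: int = 100) -> int:
    """Intra-test-time adaptation: revise the guess within a single task.

    The "too low / too high" signal is used only during this episode.
    Returns the number of steps used (max_steps if the target was not reached).
    """
    guess = start
    for step in range(1, max_steps + 1):
        if guess == target:
            return step
        guess += 1 if guess < target else -1  # move one unit toward the target
    return max_steps


def update_start(solved_targets: List[int]) -> int:
    """Inter-test-time adaptation: learn a better starting guess between tasks."""
    return round(sum(solved_targets) / len(solved_targets))


targets = [70, 72, 71, 69]
start = 50                       # initial, uninformed starting guess
solved_targets: List[int] = []
steps_per_task: List[int] = []
for target in targets:
    steps_per_task.append(solve_task(target, start))  # adapt during the task
    solved_targets.append(target)
    start = update_start(solved_targets)              # adapt between tasks

print(steps_per_task)  # [21, 3, 1, 3]
```

The first task is solved by within-task adaptation alone. Later tasks are cheaper because the between-task update carries experience forward.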

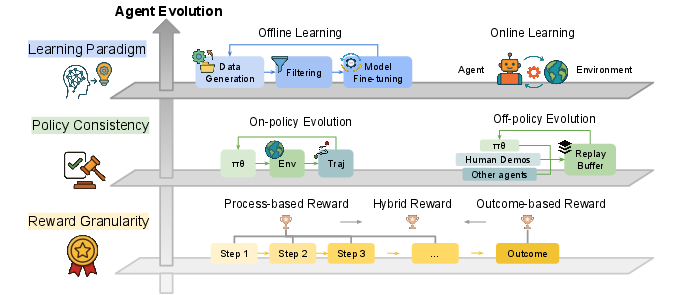

How to Evolve

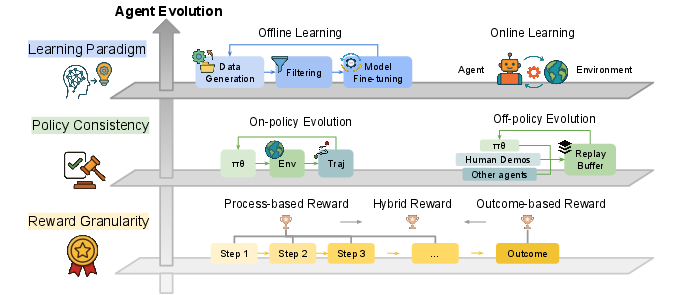

Figure 4: Overview of reward-based self-evolution strategies, categorized into textual, implicit, internal, and external rewards.

- Reward-based: Utilizes scalar rewards, textual feedback, or internal confidence as learning signals. Methods such as Reflexion, SCoRe, and TextGrad demonstrate the efficacy of language-based and numerical feedback for self-improvement.

- Imitation/Demonstration: Agents learn from self-generated or cross-agent demonstrations, as in STaR and SiriuS, bootstrapping reasoning and multimodal capabilities.

- Population-based/Evolutionary: Maintains populations of agent variants, leveraging selection, mutation, and self-play. Darwin Gödel Machine and GENOME exemplify open-ended evolution and genetic optimization.
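
The population-based strategy can be illustrated with a generic genetic loop. This is a minimal sketch under stated assumptions, not the procedure used by Darwin Gödel Machine or GENOME: an agent variant is encoded as a hypothetical bit list, `fitness` is a toy stand-in for a benchmark score, and self-play is omitted.

```python
import random
from typing import List

Genome = List[int]  # hypothetical encoding of an agent variant (on/off design choices)


def fitness(genome: Genome) -> int:
    """Toy stand-in for a benchmark score: count the enabled choices."""
    return sum(genome)


def mutate(genome: Genome, rng: random.Random, rate: float = 0.1) -> Genome:
    """Flip each bit independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in genome]


def evolve(pop_size: int = 20, genome_len: int = 16, generations: int = 30,
           elite: int = 4, seed: int = 0) -> List[int]:
    """Run selection and mutation; return the best fitness seen in each generation."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(genome_len)]
                  for _ in range(pop_size)]
    best_per_generation: List[int] = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(population[0]))
        parents = population[:elite]  # selection: top variants survive unchanged
        children = [mutate(rng.choice(parents), rng)
                    for _ in range(pop_size - elite)]
        population = parents + children
    return best_per_generation


best_history = evolve()
```

Because the top variants are carried over unchanged, the best score in `best_history` never decreases from one generation to the next.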

Figure 5: Illustration of cross-cutting evolutionary dimensions: learning paradigm (offline/online), policy consistency (on/off-policy), and reward granularity (process-based, outcome-based, hybrid).

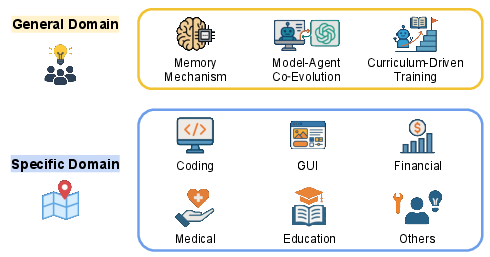

Where to Evolve

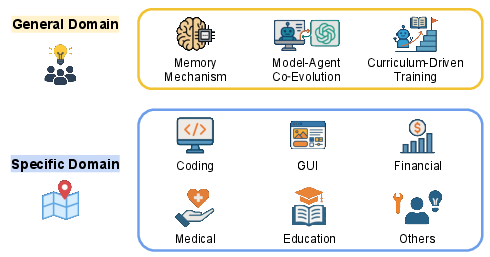

Figure 6: Categorization of where to evolve: general domain evolution (broad capability enhancement) vs. specific domain evolution (domain-specific expertise).

- General Domain: Agents evolve to handle diverse digital tasks, leveraging memory mechanisms, curriculum learning, and model-agent co-evolution (e.g., Voyager, WebEvolver).

- Specialized Domain: Agents target specific domains such as coding, GUI interaction, finance, medicine, and education, with systems like SICA, QuantAgent, and Agent Hospital demonstrating domain-specific self-improvement and knowledge accumulation.

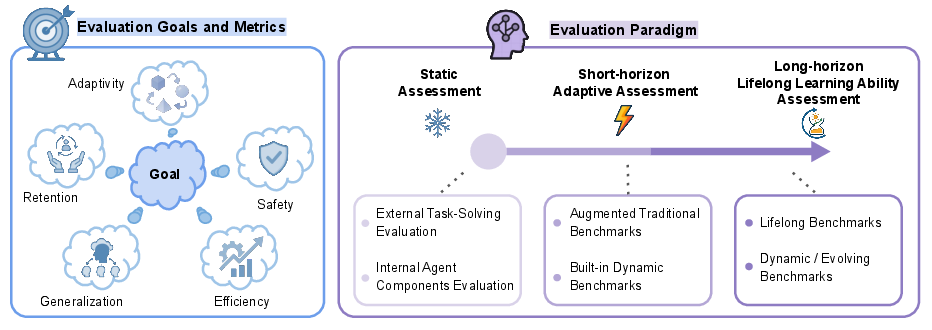

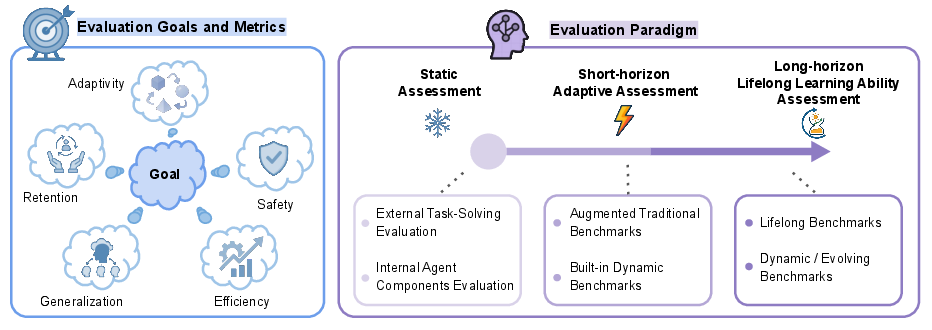

Evaluation: Metrics and Paradigms

Figure 7: Overview of evaluation angles for self-evolving agents, including adaptivity, retention, generalization, safety, and efficiency, across static, short-horizon, and long-horizon paradigms.

The survey emphasizes the need for longitudinal, dynamic evaluation frameworks that capture not only immediate task success but also adaptation speed, knowledge retention (mitigating catastrophic forgetting), generalization to novel domains, efficiency, and safety. It distinguishes between static assessment, short-horizon adaptation, and long-horizon lifelong learning, highlighting emerging benchmarks such as LifelongAgentBench and LTMBenchmark.
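
Retention can be made concrete with a score matrix of the kind commonly used in continual learning. The sketch below assumes `scores[i][j]` holds the score on task `j` measured after the agent has evolved on task `i`. The two metric definitions and the numbers are illustrative assumptions, not the survey's formulas or results.

```python
from typing import List


def average_final_score(scores: List[List[float]]) -> float:
    """Mean score over all tasks after the last evolution step."""
    final = scores[-1]
    return sum(final) / len(final)


def forgetting(scores: List[List[float]]) -> float:
    """Average drop from each earlier task's best past score to its final score.

    Requires at least two tasks; a value near 0 indicates good retention.
    """
    last = len(scores) - 1
    drops = []
    for task in range(last):
        # Best score on this task from when it was learned up to the step before last.
        best_before = max(scores[step][task] for step in range(task, last))
        drops.append(best_before - scores[last][task])
    return sum(drops) / len(drops)


# Made-up example: rows are evolution steps, columns are tasks.
scores = [
    [0.80, 0.10, 0.10],
    [0.70, 0.90, 0.20],
    [0.60, 0.80, 0.90],
]

final_avg = average_final_score(scores)
forgot = forgetting(scores)
```

In this example the agent finishes strong on the newest task but has lost ground on the first two, which is the pattern a static, single-snapshot evaluation would miss.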

Implications, Open Challenges, and Future Directions

The paper identifies several critical challenges and research directions:

- Personalization: Developing agents that can rapidly adapt to individual user preferences and behaviors, even with limited data, while avoiding bias amplification.

- Generalization and Continual Learning: Achieving robust cross-domain transfer and scalable architecture design while mitigating catastrophic forgetting in resource-constrained settings.

- Safety and Controllability: Ensuring agents remain aligned with human values, avoid unsafe behaviors, and can be reliably controlled as they autonomously evolve.

- Multi-Agent Ecosystems: Balancing individual and collective reasoning, enabling efficient collaboration, and developing dynamic evaluation frameworks for multi-agent systems.

The survey underscores the necessity of integrating advances in models, algorithms, data, and evaluation to realize the full potential of self-evolving agents as precursors to ASI. It calls for the development of more adaptive, robust, and trustworthy agentic systems capable of open-ended, autonomous evolution.

Conclusion

This survey establishes a comprehensive, multi-dimensional framework for understanding and advancing self-evolving agents. By systematically dissecting the field along the axes of what, when, how, and where to evolve, and by highlighting evaluation methodologies and open challenges, the paper provides a foundational reference for researchers and practitioners. The trajectory from static LLMs to self-evolving agents is positioned as a necessary step toward ASI, with significant implications for the design of adaptive, robust, and safe AI systems in both research and real-world deployments.