- The paper demonstrates that LLMs exhibit human-like temporal cognition: they develop a subjective temporal reference point, and their perception of time follows the Weber-Fechner law.

- It employs similarity judgment tasks and detailed neural coding analysis across transformer layers to uncover temporal-preferential neurons and logarithmic encoding patterns.

- The findings highlight a hierarchical construction of temporal representations and propose an experientialist perspective that informs AI alignment strategies.

LLMs Exhibit Human-Like Temporal Cognition

This paper investigates the capacity of LLMs to exhibit human-like cognitive patterns, specifically focusing on temporal cognition. Through a similarity judgment task, the research reveals that larger LLMs spontaneously develop a subjective temporal reference point and adhere to the Weber-Fechner law in their perception of time. The paper further explores the mechanisms underlying this behavior at neuronal, representational, and informational levels, proposing an experientialist perspective to understand LLMs' cognition as a subjective construction of the external world.
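For readers unfamiliar with the Weber-Fechner law, its standard textbook form is reproduced below. The symbols $S$, $I$, $I_0$, and $k$ are the conventional ones from psychophysics and are not taken from the paper.

$$
S = k \ln\frac{I}{I_0}
\qquad\Longrightarrow\qquad
S(I_1) - S(I_2) = k \ln\frac{I_1}{I_2}
$$

Here $S$ is the perceived magnitude, $I$ the physical stimulus intensity, $I_0$ the detection threshold, and $k$ a constant. The second expression shows the key consequence: perceived differences depend on the ratio of the stimuli rather than on their absolute difference, so equal absolute gaps feel smaller as magnitudes grow.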

Experimental Setup: Similarity Judgment Task

The authors employed a similarity judgment task to evaluate temporal cognition in LLMs. Models were prompted to rate the similarity between pairs of years from 1525 to 2524 on a scale of 0 to 1. Control experiments replaced "year" with "number" to distinguish temporal cognition from general numerical processing. The study covered 12 models: closed-source models (Gemini-2.0-flash and GPT-4o) and open-source models (Qwen2.5 and Llama 3 families) of varying sizes.

To quantify the models' similarity judgments, the researchers employed three distance metrics: the psychological Log-Linear distance ($d_{\text{log}}(i,j) = |\log(i) - \log(j)|$), the Levenshtein distance ($d_{\text{lev}}(i,j) = \min\{k : i \xrightarrow{k\ \text{ops}} j\}$, the minimum number of edit operations that turn $i$ into $j$), and a novel Reference-Log-Linear distance ($d_{\text{ref}}(i,j) = |\log(|R-i|) - \log(|R-j|)|$, where $R$ is the reference year). Linear regression analysis was then used to assess how well each theoretical distance predicted the models' judgments, with the coefficient of determination ($R^2$) used to compare goodness of fit. Additionally, a diagonal sliding window method was applied to estimate the temporal reference points of the models non-parametrically.
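The three distances and the goodness-of-fit comparison can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the function names, the treatment of years as decimal strings for the Levenshtein distance, and the reference year used in the demo are all assumptions.

```python
import math


def d_log(i: int, j: int) -> float:
    """Log-Linear distance: |log(i) - log(j)|."""
    return abs(math.log(i) - math.log(j))


def d_lev(i: int, j: int) -> int:
    """Levenshtein distance between the decimal strings of i and j (assumed encoding)."""
    a, b = str(i), str(j)
    prev = list(range(len(b) + 1))
    for x, ca in enumerate(a, start=1):
        curr = [x]
        for y, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[y] + 1,          # deletion
                            curr[y - 1] + 1,      # insertion
                            prev[y - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def d_ref(i: int, j: int, ref: int) -> float:
    """Reference-Log-Linear distance; undefined when i or j equals ref."""
    return abs(math.log(abs(ref - i)) - math.log(abs(ref - j)))


def r_squared(x, y) -> float:
    """R^2 of a one-predictor least-squares fit y ~ a + b*x (needs non-constant x and y)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((u - mx) ** 2 for u in x)
    syy = sum((v - my) ** 2 for v in y)
    sxy = sum((u - mx) * (v - my) for u, v in zip(x, y))
    return (sxy * sxy) / (sxx * syy)


if __name__ == "__main__":
    ref_year = 2025  # hypothetical reference year, for illustration only
    print(d_lev(1999, 2000))            # 4: every digit differs
    print(d_log(1999, 2000))            # small: the two years are numerically close
    print(d_ref(1999, 2000, ref_year))  # small: both are a similar distance from ref_year
```

In practice, each distance would be computed for every rated year pair and passed to `r_squared` together with the model's similarity ratings; the distance with the highest $R^2$ is the best single-predictor account of the judgments.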

Neural Coding Analysis: Temporal-Preferential Neurons

To uncover the neural mechanisms underlying temporal cognition in LLMs, the authors analyzed the activation patterns of neurons in the Feed-Forward Networks (FFNs) across all transformer layers. They identified temporal-preferential neurons based on three criteria: a large activation difference between temporal and numerical conditions (Cohen's $d_i > 2.0$), a statistically significant preference for the temporal condition (FDR-corrected $p < 0.0001$), and a consistent preference across most years ($\text{Consistency}_i > 0.95$). The paper then visualized the average activations of the top 1000 temporal-preferential neurons and performed layer-wise regression analysis to assess whether their response patterns conformed to logarithmic encoding principles.
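The three selection criteria can be illustrated with the sketch below. Several details are assumptions, since the summary does not specify them: Cohen's $d$ is computed with a pooled standard deviation, consistency is taken to be the fraction of years in which the temporal activation exceeds the numerical one, and the FDR correction is assumed to be Benjamini-Hochberg. The raw p-values are treated as given, because the underlying statistical test is not stated. All function names are hypothetical.

```python
import math
from statistics import mean, variance


def cohens_d(temporal, numerical) -> float:
    """Cohen's d with a pooled standard deviation (assumed variant)."""
    n1, n2 = len(temporal), len(numerical)
    pooled_var = ((n1 - 1) * variance(temporal)
                  + (n2 - 1) * variance(numerical)) / (n1 + n2 - 2)
    return (mean(temporal) - mean(numerical)) / math.sqrt(pooled_var)


def consistency(temporal, numerical) -> float:
    """Fraction of years whose temporal activation exceeds the numerical one (assumed definition)."""
    wins = sum(t > n for t, n in zip(temporal, numerical))
    return wins / len(temporal)


def benjamini_hochberg(p_values):
    """Benjamini-Hochberg adjusted p-values, returned in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda k: p_values[k])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):  # walk from the largest p-value down
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


def select_neurons(d_values, adj_p, consistencies,
                   d_min=2.0, alpha=1e-4, c_min=0.95):
    """Indices of neurons that pass all three thresholds quoted in the text."""
    return [k for k in range(len(d_values))
            if d_values[k] > d_min
            and adj_p[k] < alpha
            and consistencies[k] > c_min]
```

Each neuron would contribute one activation per year under the "year" prompt and one under the "number" prompt; the per-neuron statistics are then thresholded jointly, so a neuron with a large effect size but an inconsistent sign across years is excluded.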

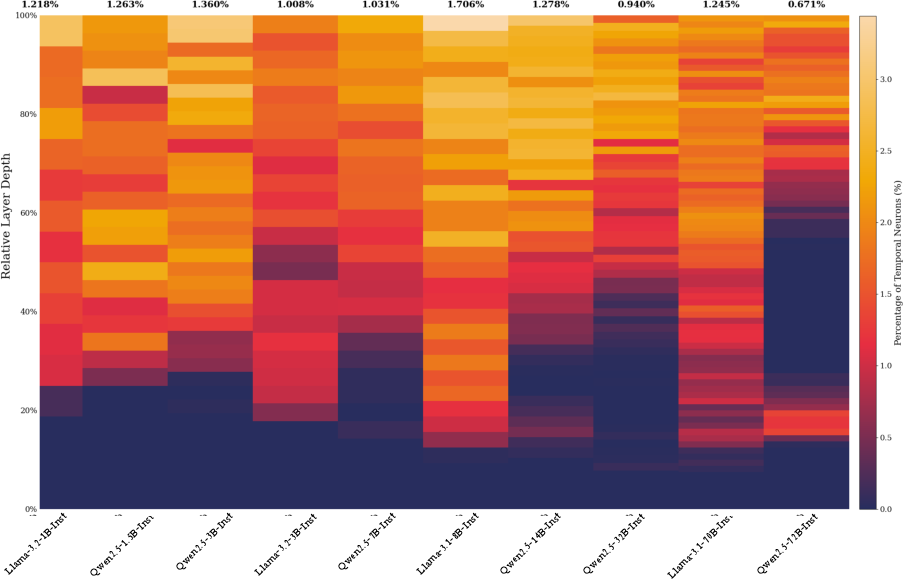

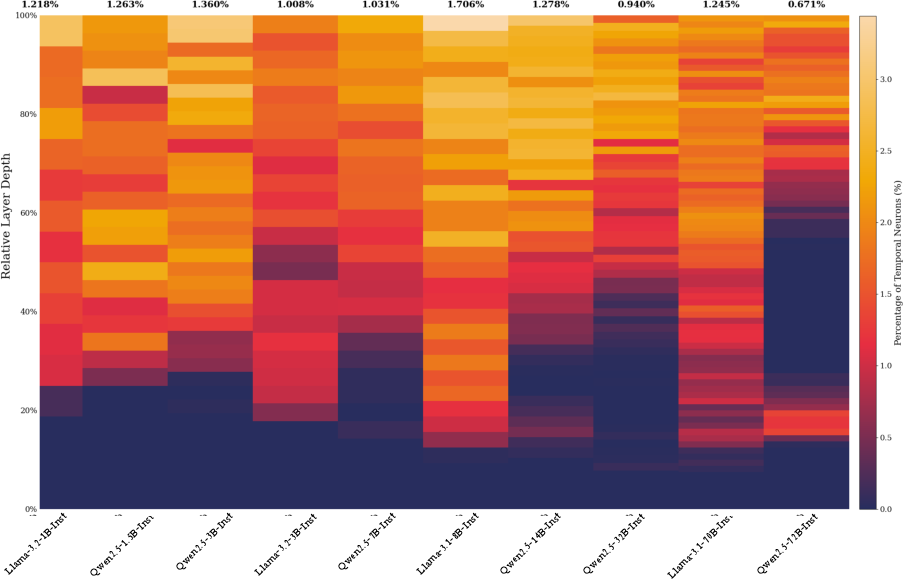

Figure 1: Distribution of temporal-preferential neurons across all layers among 10 models.

The analysis revealed that temporal-preferential neurons represent a small fraction of all FFN neurons and are concentrated in the middle-to-late layers of the network (Figure 1), suggesting that temporal representation is a high-level abstract feature. Furthermore, the mean activation curve of these neurons formed a distinct trough, bottoming out at a particular year, with activation levels rising as years receded into the past or advanced into the future. Regression analysis demonstrated that neurons across all models exhibit a logarithmic encoding scheme to some extent, with the precision of this encoding strengthening with model scale.

Representational Structure: Hierarchical Construction

The paper further investigated how temporal information is encoded across the network's layers by analyzing the representations of the three theoretical distances ($d_{\text{log}}$, $d_{\text{lev}}$, and $d_{\text{ref}}$) within the hidden states of each model layer. Linear probes were trained to predict these distances directly from the hidden states, and their performance was assessed using adjusted $R^2$.
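For reference, the standard definition of adjusted $R^2$ is given below. The symbols $n$ (number of samples, here presumably year pairs) and $p$ (number of predictors, here presumably the hidden-state dimensions fed to the probe) are the conventional ones; how the paper sets them is not specified in this summary.

$$
\bar{R}^2 = 1 - \left(1 - R^2\right)\frac{n - 1}{n - p - 1}
$$

The correction matters for probing because hidden states have many dimensions: plain $R^2$ can only increase as predictors are added, whereas $\bar{R}^2$ penalizes each additional predictor and so is less inflated by probe capacity alone.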

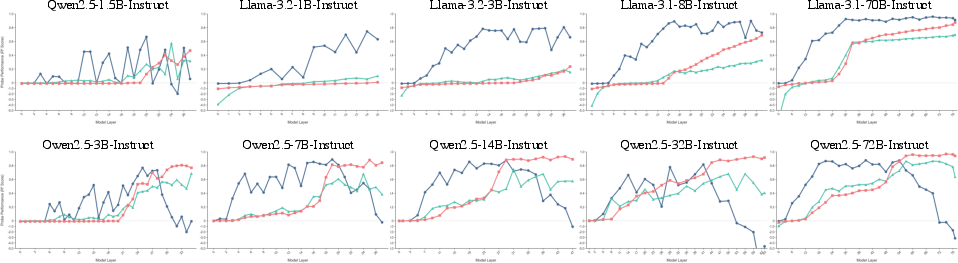

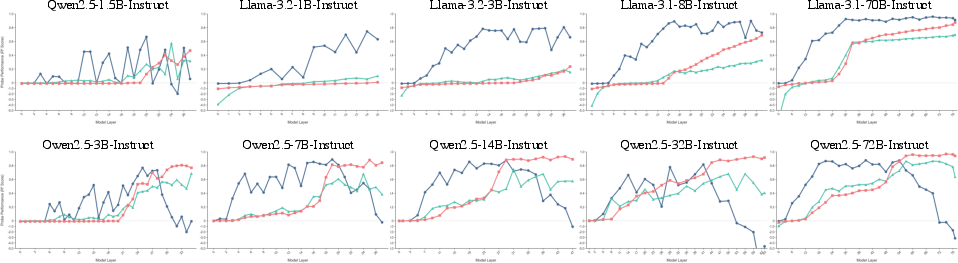

Figure 2: Layer-wise performance ($R^2$) of linear probes for Log-Linear distance, Reference-Log-Linear distance, and Levenshtein distance across 10 models.

The results (Figure 2) revealed a hierarchical construction process: models first encode the numerical properties of years ($d_{\text{log}}$) in early layers and subsequently develop a more complex temporal representation centered on a reference time ($d_{\text{ref}}$) in deeper layers. The representational mechanism varied across models, with some models exhibiting a suppression of the $d_{\text{log}}$ representation as the $d_{\text{ref}}$ representation emerged.

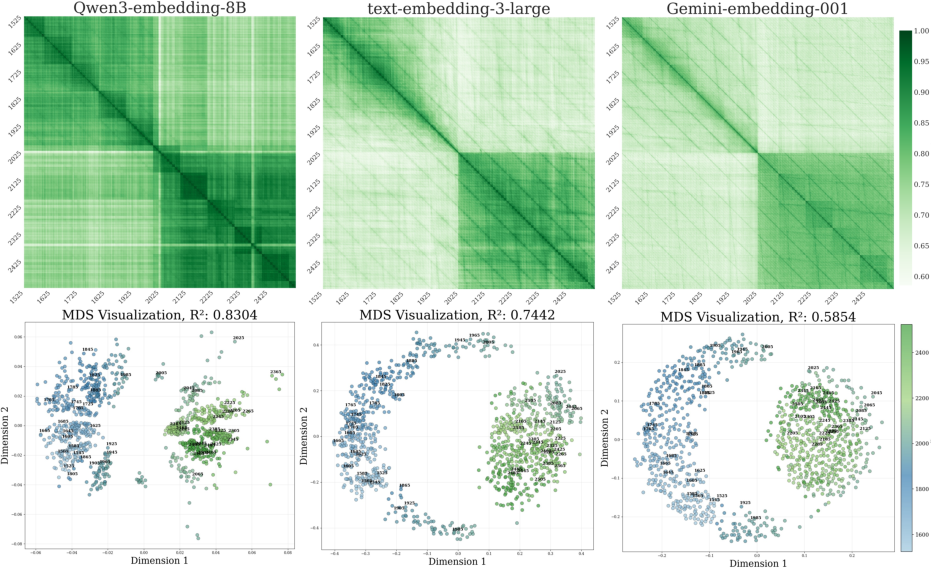

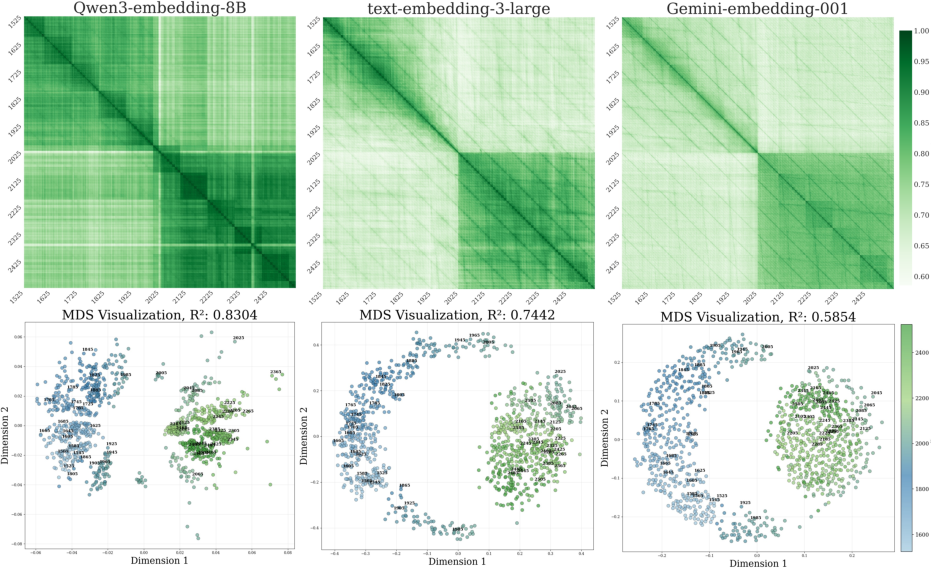

To assess the contribution of training data to the observed temporal cognitive patterns, the authors analyzed the semantic distribution of years using independently pre-trained embedding models (Qwen3-embedding-8B, text-embedding-3-large, and Gemini-embedding-001). Cosine similarities between year pairs were computed to construct a semantic similarity matrix, and Multidimensional Scaling (MDS) was applied to visualize year distributions in the semantic space of the training data.

Figure 3: Pair-wise cosine similarity matrices between embeddings and corresponding MDS visualizations from three outperformed embedding models.
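The construction of the semantic similarity matrix from year embeddings can be sketched as follows. The embedding vectors are assumed to be supplied as a plain dictionary from year to vector (how they are obtained from each embedding model is not shown), and the conversion to dissimilarities as $1 - \text{cosine similarity}$ is a common choice for MDS input, assumed here rather than taken from the paper.

```python
import math


def cosine_similarity(u, v) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def similarity_matrix(embeddings):
    """embeddings: dict mapping year -> vector. Returns (sorted years, square matrix)."""
    years = sorted(embeddings)
    matrix = [[cosine_similarity(embeddings[a], embeddings[b]) for b in years]
              for a in years]
    return years, matrix


def to_dissimilarity(matrix):
    """Turn similarities into dissimilarities (1 - s), an assumed choice for MDS input."""
    return [[1.0 - s for s in row] for row in matrix]


if __name__ == "__main__":
    # Toy 2-D vectors standing in for real year embeddings (purely hypothetical values).
    toy = {1900: [1.0, 0.0], 2000: [0.6, 0.8], 2100: [0.0, 1.0]}
    years, sims = similarity_matrix(toy)
    for year, row in zip(years, sims):
        print(year, [round(s, 3) for s in row])
```

The resulting dissimilarity matrix can then be passed to any MDS implementation that accepts precomputed distances to obtain a low-dimensional layout of the years.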

The visualization (Figure 3) revealed a non-linear temporal structure, characterized by dense clustering of years in the distant past and future. Linear regression analysis further suggested that the models' exposure to pre-existing informational structure within their training data likely contributes to the emergence of human-like temporal cognition.

Experientialist Perspective: Implications for AI Alignment

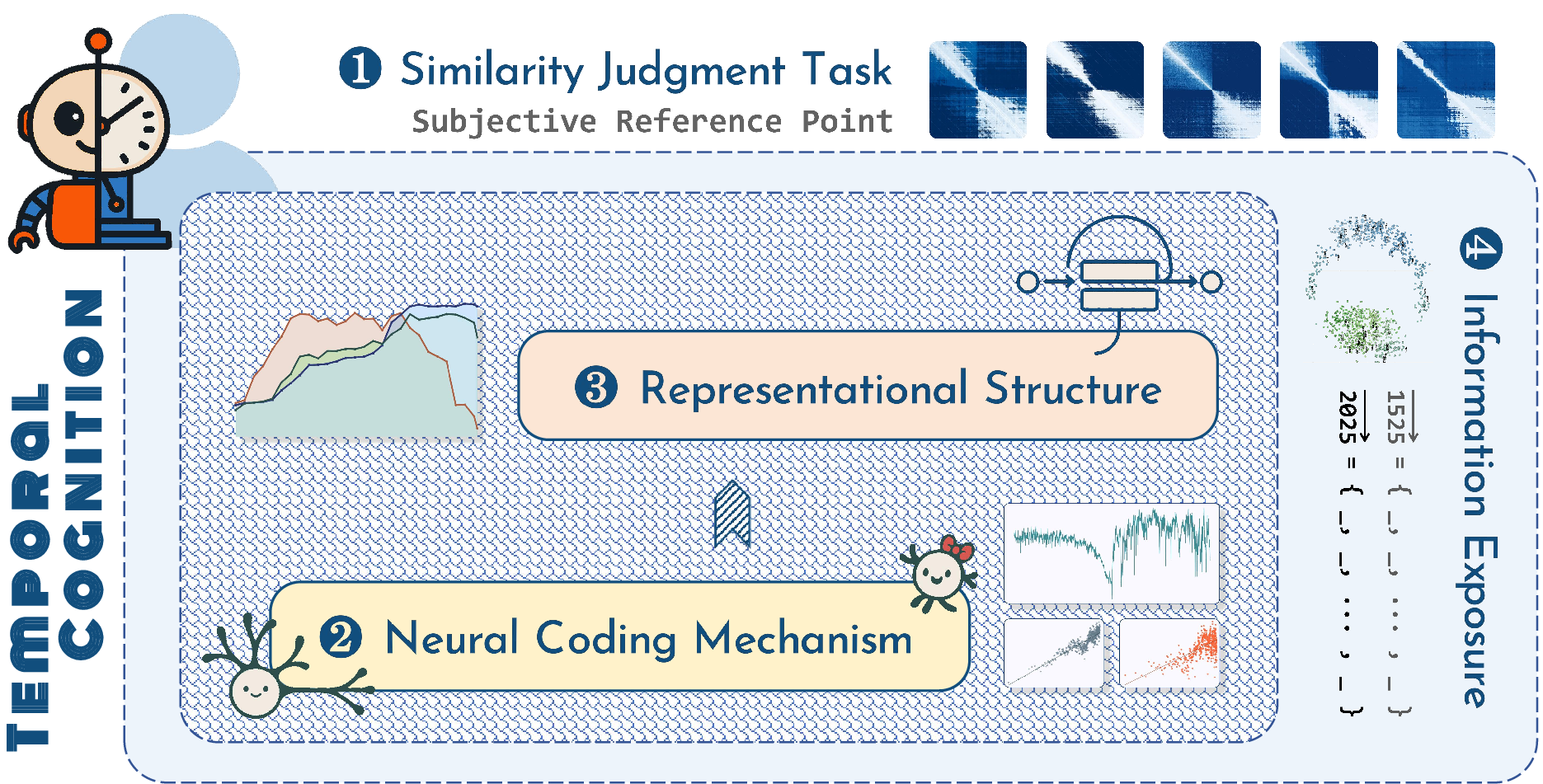

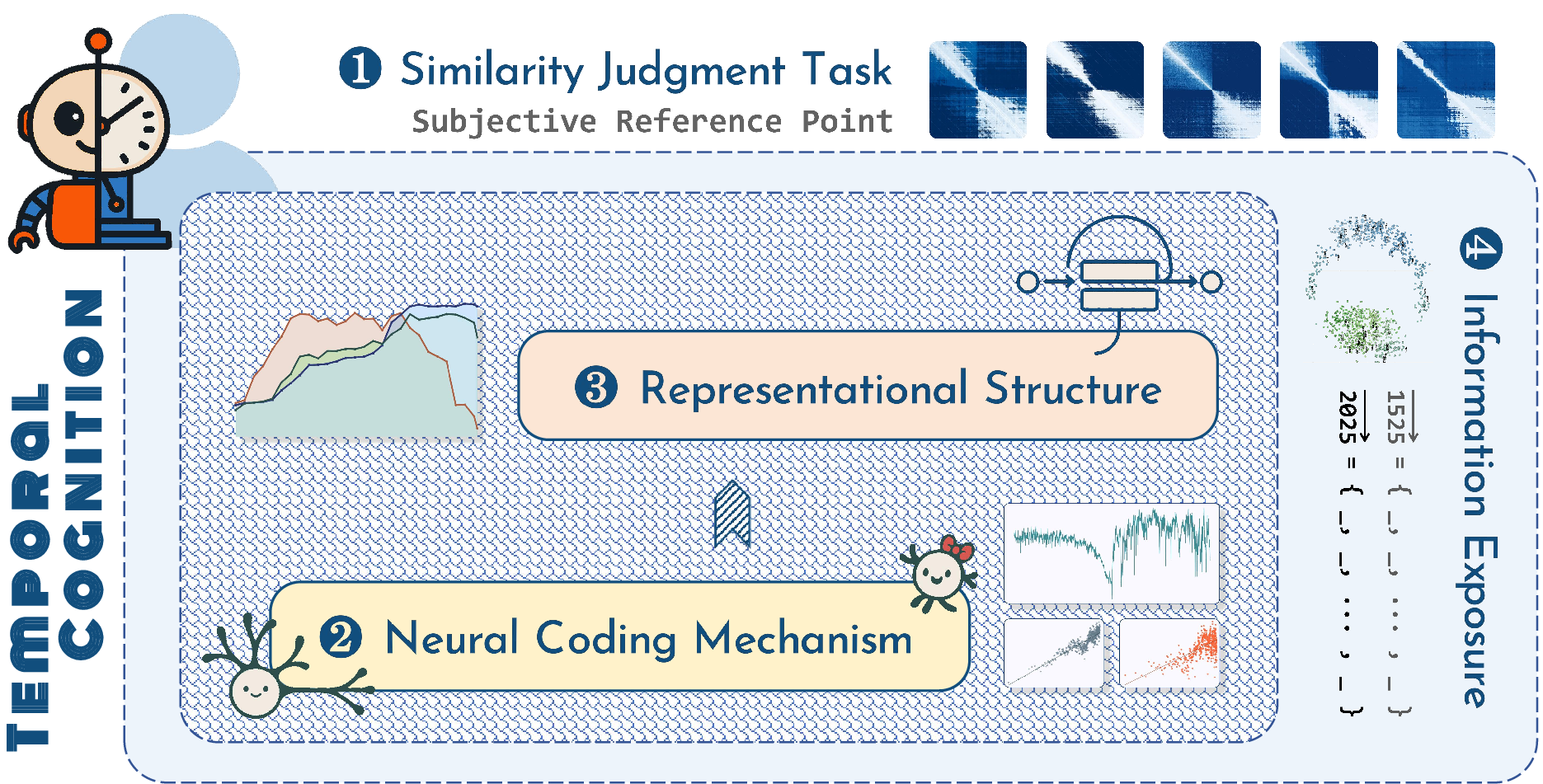

Based on these findings, the authors propose an experientialist perspective, viewing LLMs' cognition as a subjective construction of the external world, shaped by its internal representational system and data experience (Figure 4).

Figure 4: An experientialist perspective of LLMs human-like cognition as a subjective construction of shared external world by convergent internal representational system.

This perspective emphasizes that cognition arises from the dynamic interplay between architecture and information. The authors argue that while LLMs may exhibit cognitive patterns convergent with human cognition, fundamental differences persist, and the most significant risk may be the development of alien cognitive patterns that we cannot intuitively anticipate. This has implications for AI alignment, suggesting that robust alignment requires engaging directly with the formative process by which a model's representational system constructs a subjective world model.

Conclusion

The paper demonstrates that LLMs, particularly larger models, exhibit human-like temporal cognition, adhering to the Weber-Fechner law and establishing a subjective temporal reference point. This phenomenon is attributed to a multi-level convergence with human cognition, involving neuronal coding, representational structure, and information exposure. The experientialist perspective highlights the need for AI alignment strategies that guide the formation of a model's internal world, rather than simply controlling its external behavior.