- The paper introduces a novel LLM-based framework that implicitly profiles chatbot users in ITSec by mapping interactions to a structured, domain-specific taxonomy.

- It uses dynamic scoring and weighted averaging to update user profiles, and its profiling error, measured as mean absolute error (MAE), drops markedly after the first interaction.

- Evaluation with synthetic and human trials demonstrates the framework’s adaptability and potential for high-stakes personalized interactions.

ProfiLLM: An LLM-Based Framework for Implicit Profiling of Chatbot Users

Introduction

The paper presents "ProfiLLM," a framework for implicit user profiling in chatbot interactions, aimed at domains where personalization is crucial, such as IT/cybersecurity (ITSec). ProfiLLM uses LLMs to infer user profiles, expressed in a structured, domain-specific taxonomy, based solely on users' interactions with the chatbot. This approach addresses the challenge of dynamic and adaptive user profiling, filling the gap left by traditional methods that often rely on static user categories or explicit self-reported information.

Methodology

Domain-Adapted Taxonomy Generation

ProfiLLM's methodology begins with creating a domain-specific taxonomy for user profiling. In the ITSec domain, the authors designed a taxonomy with 23 subdomains distributed across five main domains: Hardware, Networking, Cybersecurity, Software, and Operating Systems. This taxonomy was informed by existing courses, governmental resources, and expert opinion.

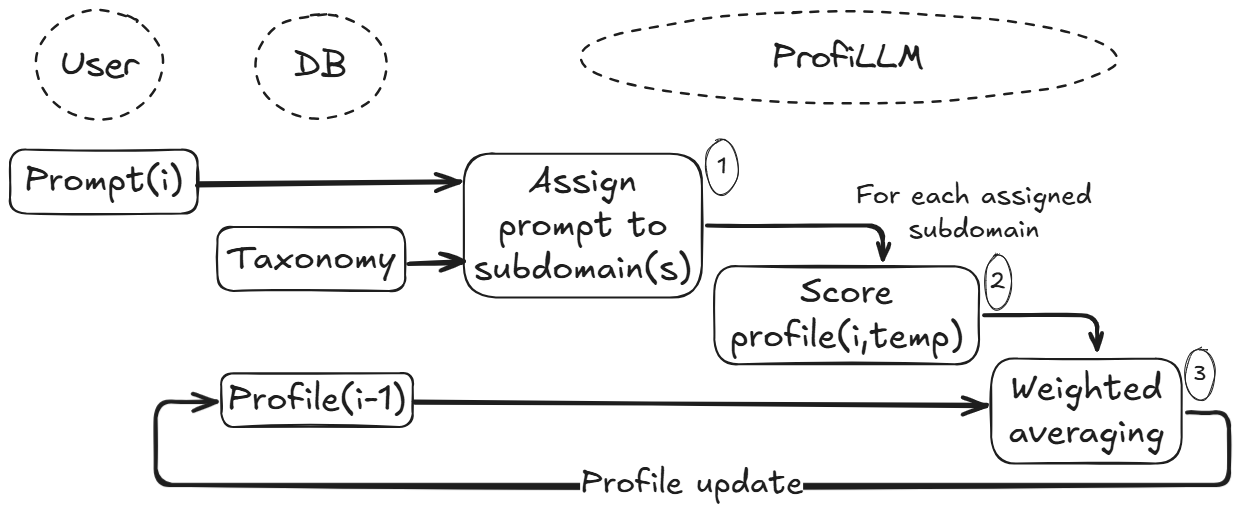

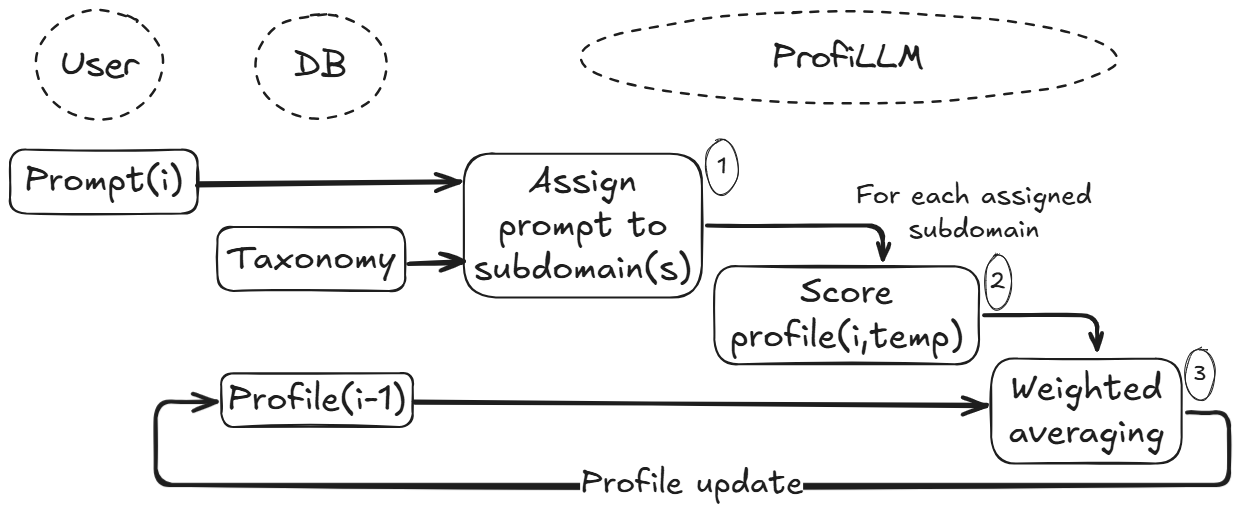

Figure 1: User Profile Inference in Terms of the Taxonomy.

User Profile Inference and Scoring

ProfiLLM assigns user prompts to relevant subdomains within the taxonomy. Each prompt is then scored on a five-point scale that assesses the user's technical knowledge level. The framework uses these scores to update user profiles dynamically, employing a weighted averaging method where new user information initially has a high impact, which gradually stabilizes as more data is gathered.
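The update rule is described only qualitatively above. As a minimal sketch, assume the weight given to a new prompt score shrinks as 1 / (1 + decay * n), where n is the number of scores already seen for that subdomain. The formula, the 1 to 5 score range, the neutral prior of 3.0, and every name below (`SubdomainProfile`, `new_weight`, `update`, `decay`, `floor`) are illustrative assumptions, not the authors' implementation.

```python
from dataclasses import dataclass


@dataclass
class SubdomainProfile:
    """Running knowledge estimate for one subdomain (hypothetical structure)."""
    score: float = 3.0  # assumed neutral prior on an assumed 1-5 scale
    n_obs: int = 0      # number of prompt scores absorbed so far


def new_weight(n_obs: int, decay: float = 0.5, floor: float = 0.1) -> float:
    """Weight of the next score: 1.0 at first, then decaying toward `floor`."""
    return max(floor, 1.0 / (1.0 + decay * n_obs))


def update(profile: SubdomainProfile, prompt_score: float,
           decay: float = 0.5, floor: float = 0.1) -> SubdomainProfile:
    """Blend a new prompt score into the profile with a decaying weight."""
    if not 1.0 <= prompt_score <= 5.0:
        raise ValueError("prompt_score must lie on the assumed 1-5 scale")
    w = new_weight(profile.n_obs, decay, floor)
    profile.score = (1.0 - w) * profile.score + w * prompt_score
    profile.n_obs += 1
    return profile


if __name__ == "__main__":
    profile = SubdomainProfile()
    for s in [5.0, 4.0, 4.0, 2.0, 4.0]:
        update(profile, s)
        print(profile.n_obs, round(profile.score, 3))
```

Under these assumptions the first score replaces the prior entirely, and later scores move the estimate by progressively smaller amounts. The `floor` keeps the profile from freezing completely, so a user whose knowledge changes can still shift the estimate.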

Evaluation Method

Implementation and Data Generation

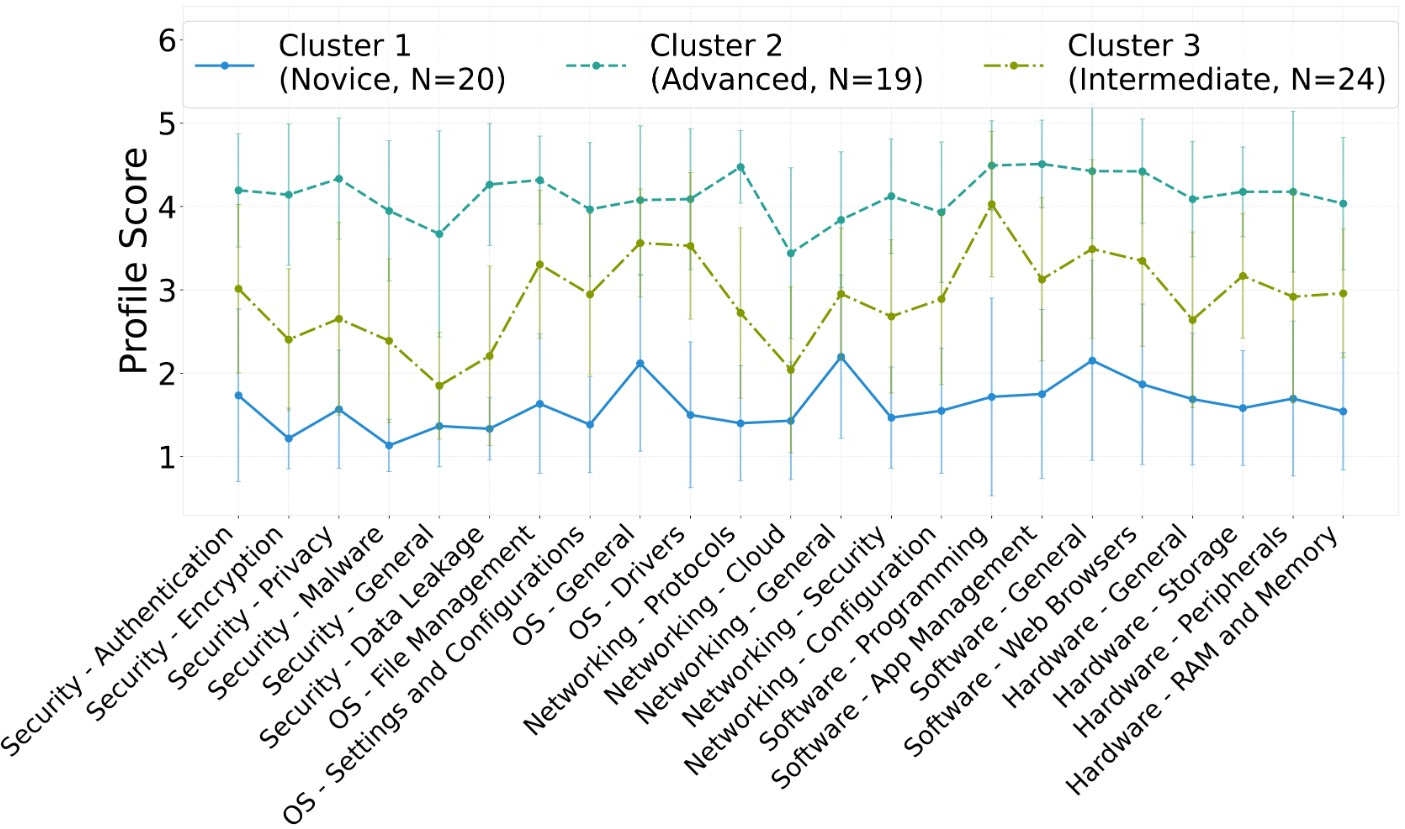

The authors implemented ProfiLLM for ITSec, conducting experiments with synthetic users whose profiles were based on curated archetypes derived from human user data. This was done to ensure the representativeness of the profiling mechanism without relying on static datasets. The synthetic users participated in chatbot interactions designed to reflect real-world troubleshooting scenarios.

Experimental Setup

ProfiLLM was evaluated on a dataset of 1,315 high-quality conversations from 263 synthetic users, an average of five conversations per user. Performance was measured with metrics such as mean absolute error (MAE), tracked across successive iterations of user interaction.
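For reference, MAE between an inferred profile and a ground-truth profile can be computed as in the sketch below. It assumes a profile is a mapping from subdomain name to score and that the error is averaged over the subdomains present in both profiles. The function names and the placeholder subdomain keys are hypothetical, and the summary does not state how the authors aggregate across subdomains or users.

```python
def profile_mae(inferred: dict[str, float], truth: dict[str, float]) -> float:
    """Mean absolute error over the subdomains present in both profiles."""
    shared = inferred.keys() & truth.keys()
    if not shared:
        raise ValueError("profiles share no subdomains")
    return sum(abs(inferred[k] - truth[k]) for k in shared) / len(shared)


def mae_per_iteration(snapshots: list[dict[str, float]],
                      truth: dict[str, float]) -> list[float]:
    """MAE of each successive profile snapshot against the same ground truth."""
    return [profile_mae(snapshot, truth) for snapshot in snapshots]


if __name__ == "__main__":
    # Placeholder subdomain names and scores, for illustration only.
    truth = {"subdomain_a": 2.0, "subdomain_b": 4.5}
    snapshots = [
        {"subdomain_a": 3.0, "subdomain_b": 3.0},  # before any interaction
        {"subdomain_a": 3.0, "subdomain_b": 4.0},  # after one interaction
    ]
    print(mae_per_iteration(snapshots, truth))
```

Tracking this value per iteration gives the kind of error curve the evaluation refers to.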

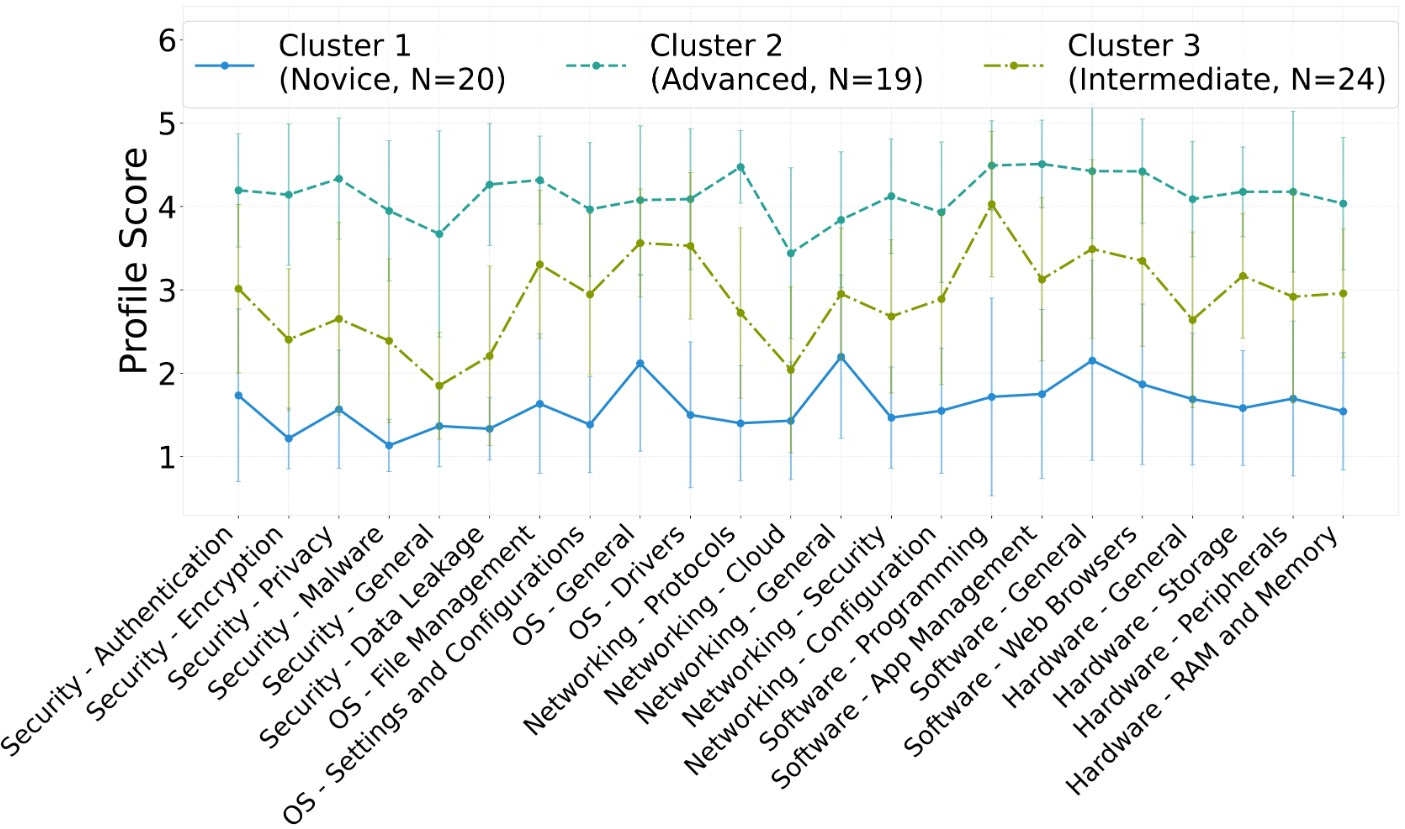

Figure 2: Experimental data collected: cluster centroids and conversation quality.

Hyperparameter Tuning

The authors conducted a grid search to identify the configuration that minimizes MAE, tuning parameters such as the specific LLM used, the context window size, and the decay rate in the scoring function. The evaluation focused on how far profiling error falls after a limited number of interaction iterations.
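An exhaustive grid search of this kind can be sketched as follows. The grid values, the model labels, and `toy_evaluate` are hypothetical stand-ins: in a real run, the evaluation function would replay the conversations under the given configuration and return the measured MAE.

```python
from itertools import product
from typing import Any, Callable

Config = dict[str, Any]


def grid_search(grid: dict[str, list],
                evaluate: Callable[[Config], float]) -> tuple[Config, float]:
    """Try every combination in `grid` and return the one with the lowest MAE."""
    names = list(grid)
    best_config: Config = {}
    best_mae = float("inf")
    for values in product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        mae = evaluate(config)
        if mae < best_mae:
            best_config, best_mae = config, mae
    return best_config, best_mae


# Hypothetical search space; the summary does not list the values tried.
GRID = {
    "llm": ["model_a", "model_b"],
    "context_window": [1, 3, 5],
    "decay": [0.25, 0.5, 1.0],
}


def toy_evaluate(config: Config) -> float:
    """Stand-in objective so the sketch runs; NOT a real profiling error."""
    penalty = 0.0 if config["llm"] == "model_a" else 0.05
    return (abs(config["decay"] - 0.5)
            + 0.1 * abs(config["context_window"] - 3)
            + penalty)


if __name__ == "__main__":
    print(grid_search(GRID, toy_evaluate))
```

A full grid is practical here because the search space is small; the cost grows multiplicatively with each added parameter, since every combination requires a complete evaluation pass.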

Results

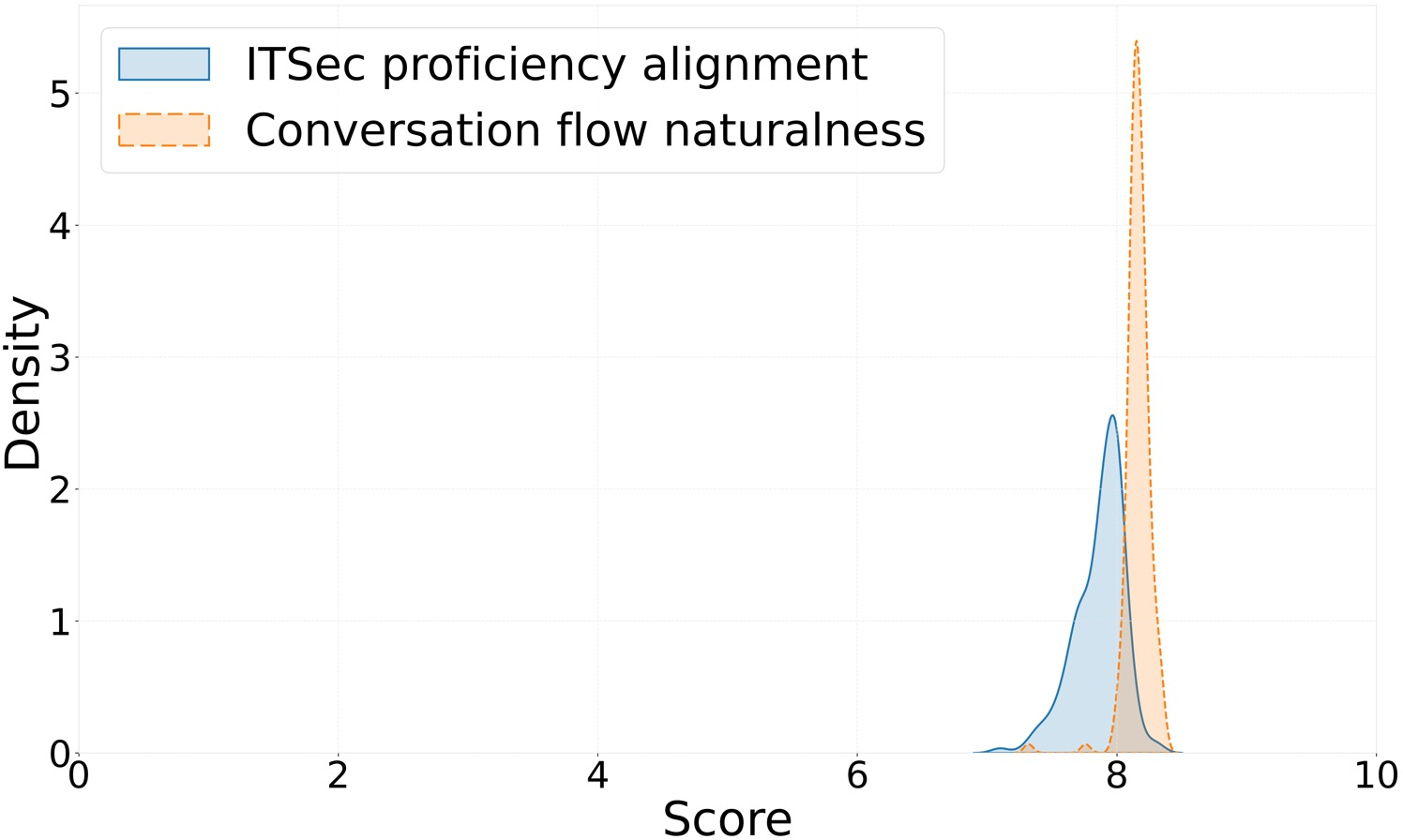

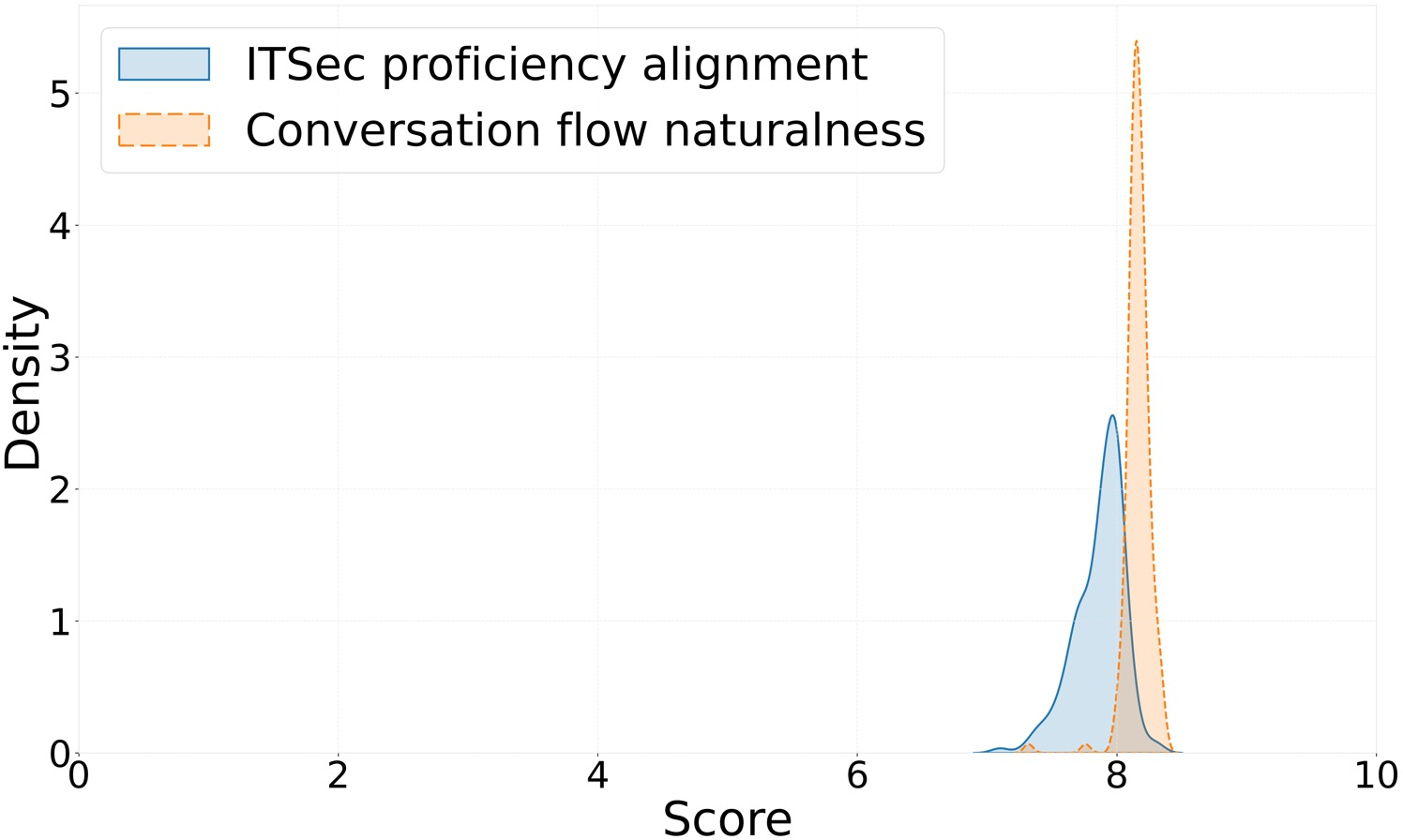

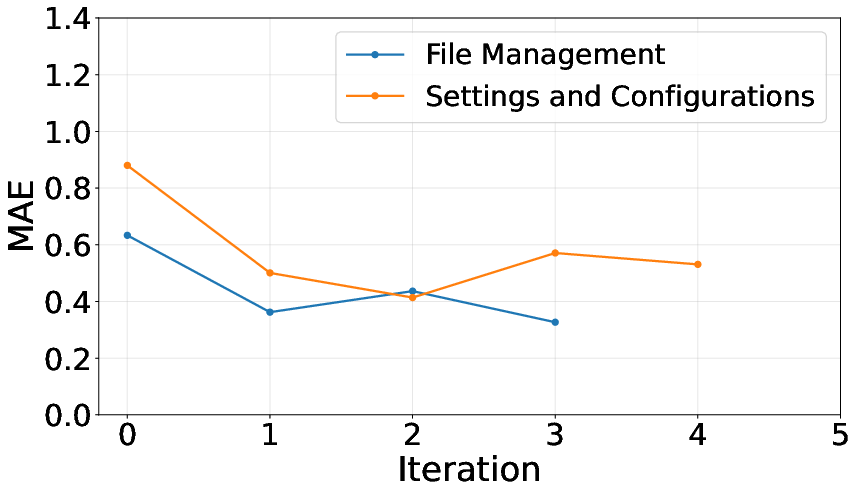

ProfiLLM demonstrated a significant reduction in MAE after just one interaction, indicating that the framework can quickly and accurately infer user profiles. The specific performance varied across different subdomains and user archetypes, highlighting the importance of nuanced understanding in dynamic user profiling.

Figure 3: Performance of ProfiLLM's ITSec implementation across profile domains and subdomains.

Persona Archetypes and Prompt Length

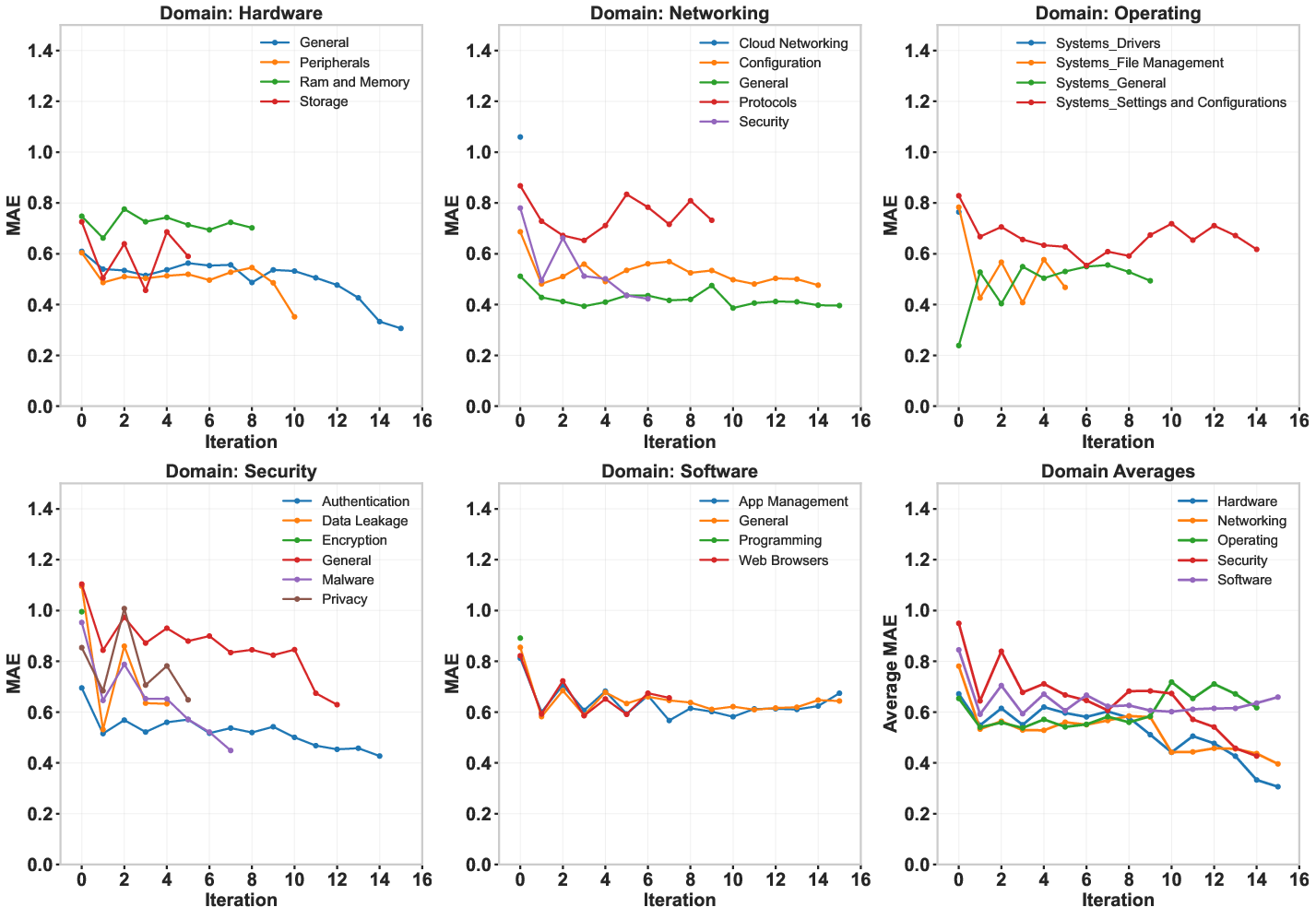

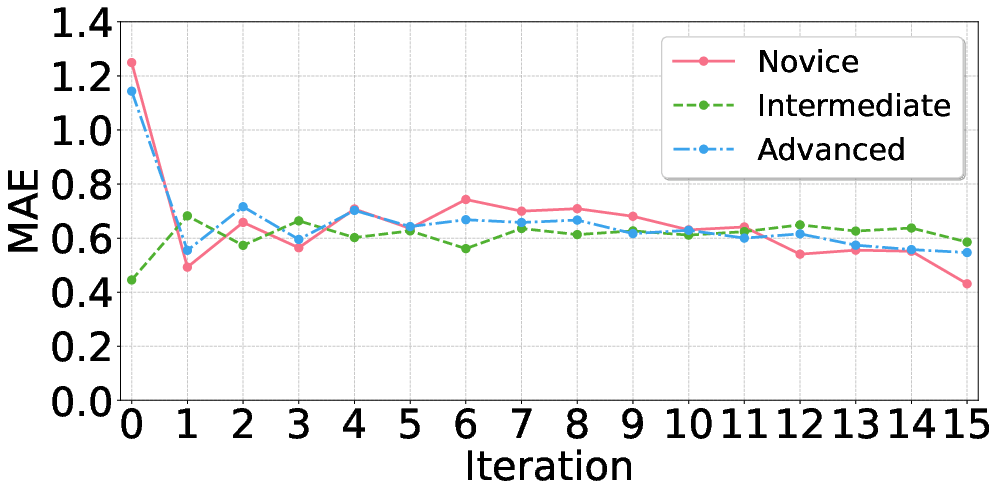

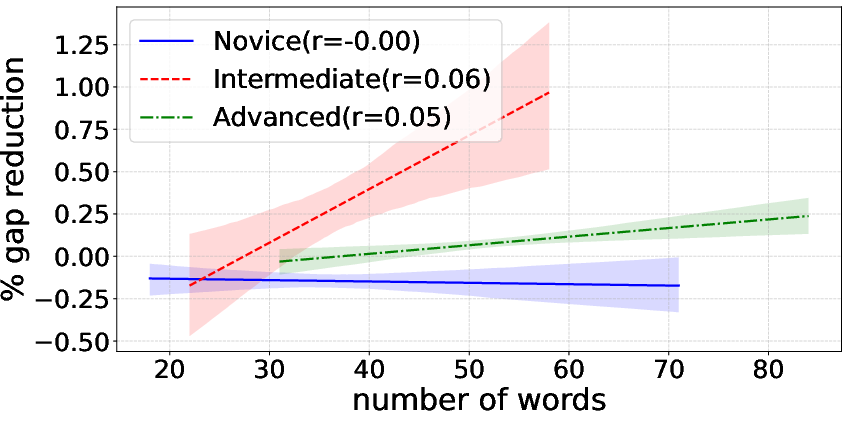

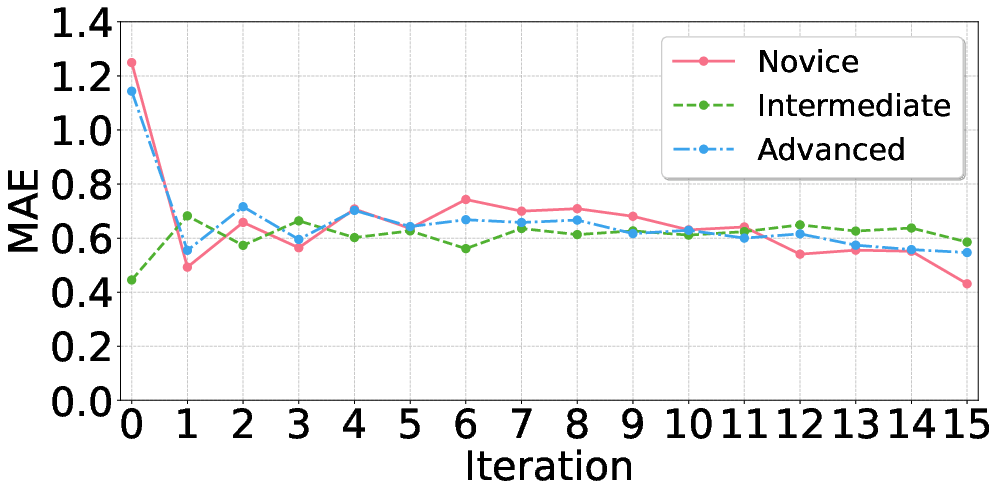

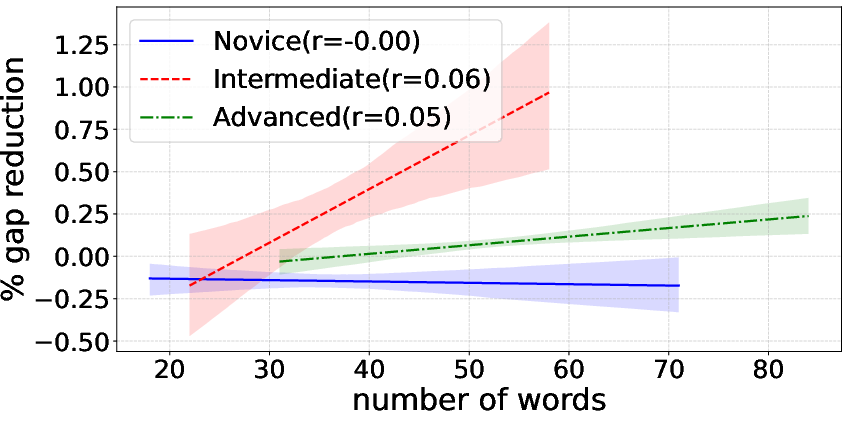

The authors observed performance differences across user archetypes. Novices and experts showed rapid improvements in profile accuracy after the first interaction, whereas intermediate users required more interactions for similar accuracy improvements. Prompt length did not significantly impact profiling accuracy.

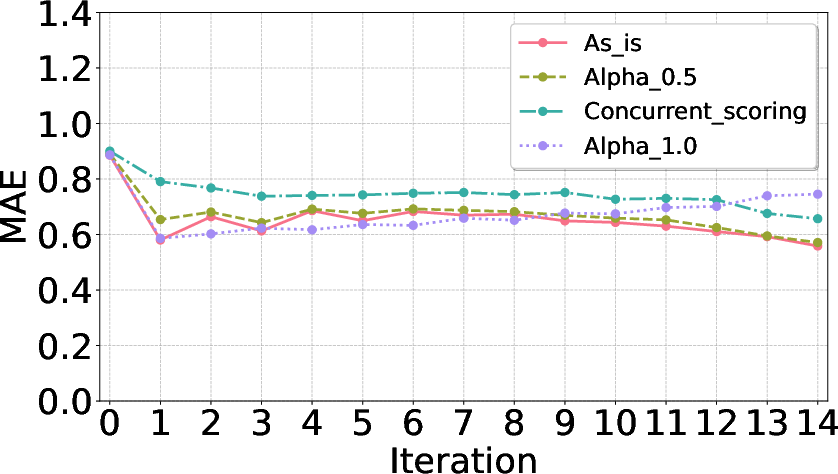

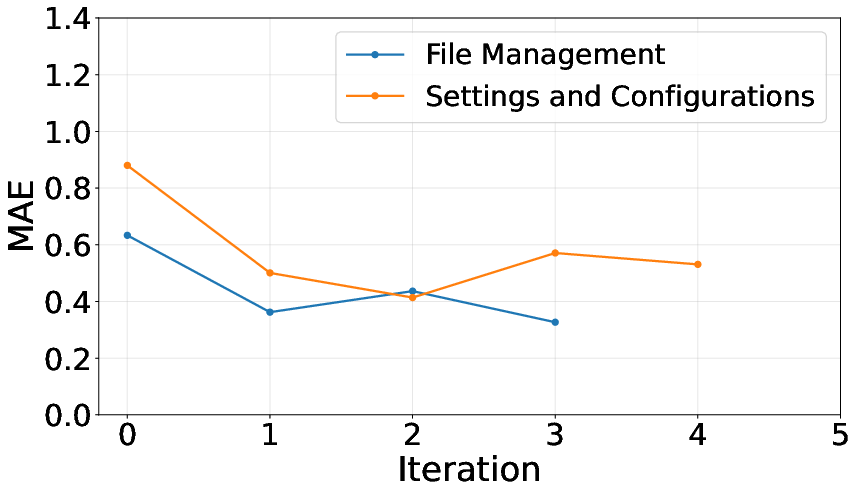

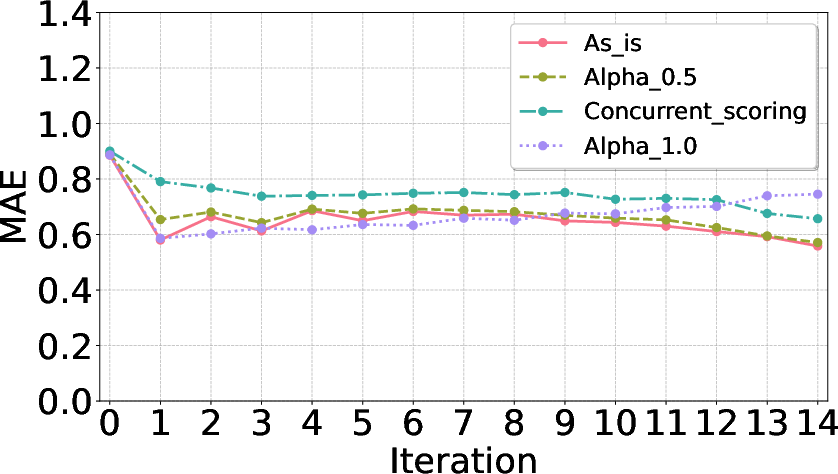

Ablation Study and Human Trials

The ablation study highlighted the importance of separate subdomain-specific scoring and the dynamic weighting mechanism in maintaining low profiling error. Initial trials with human users indicated that the framework applies beyond synthetic interactions, supporting its utility in real-world applications.

Figure 4: Profiling performance by persona archetype and prompt length.

ProfiLLM builds upon and distinguishes itself from prior research by providing a comprehensive framework for skill-based profile inference in high-stakes domains like ITSec, where conversational dynamics and technical savvy are critical.

Implications and Future Work

ProfiLLM offers a significant advancement in personalized chatbot interactions, facilitating real-time adaptability in communication strategies. Future research could explore additional domains, incorporate explicit user inputs to refine accuracy further, and test ProfiLLM in broader, more diverse real-world scenarios.

Figure 5: Profiling performance in the ablation study and the human-user experiment.

Conclusion

ProfiLLM represents an impactful step forward in the field of conversational AI, providing an effective solution to implicit and dynamic user profiling for enhanced personalization. The framework's architecture and adaptation for ITSec showcase its potential applicability across various domains where user expertise significantly influences interaction quality.