- The paper introduces \name, a gradient-based adversarial method that crafts subtle visual perturbations to effectively manipulate VLM outputs.

- It demonstrates that visual perturbations cause greater output disruption than textual changes, with jailbreak rates above 90% in several evaluation scenarios.

- The dual-purpose approach serves both adversarial attacks and defensive watermarking, highlighting the urgent need for more robust VLM security.

Attention! Your Vision Language Model Could Be Maliciously Manipulated

Introduction

The paper examines the vulnerability of Large Vision-Language Models (VLMs) to adversarial attacks and finds that they are more susceptible to perturbations of the visual input than of the textual input. Although VLMs are at the forefront of multimodal understanding, they remain vulnerable to adversarial manipulation of their predictions.

Theoretical Insights and Vulnerability Analysis

Because VLMs process visual and textual inputs jointly, the image channel gives attackers an additional attack surface. Visual inputs are continuous and can be perturbed in arbitrarily small steps, whereas text can only be changed through discrete token substitutions. The paper supports this with both empirical studies and theoretical analysis, showing that visual perturbations distort model predictions more severely than textual ones.

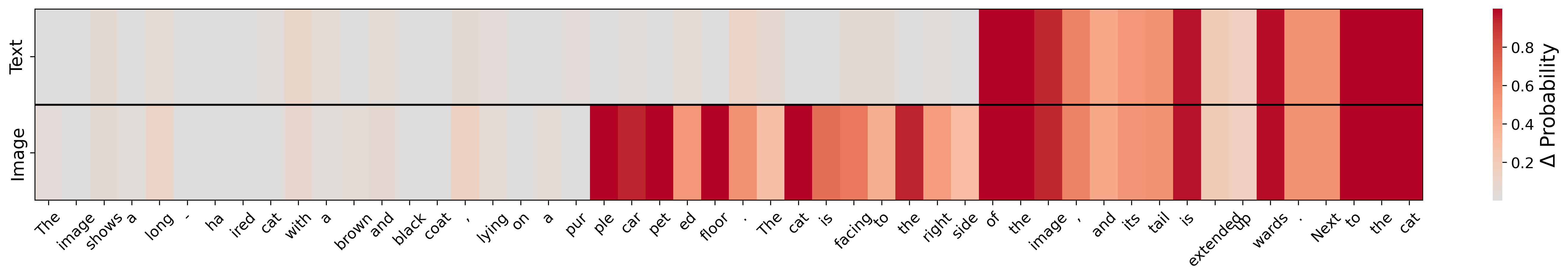

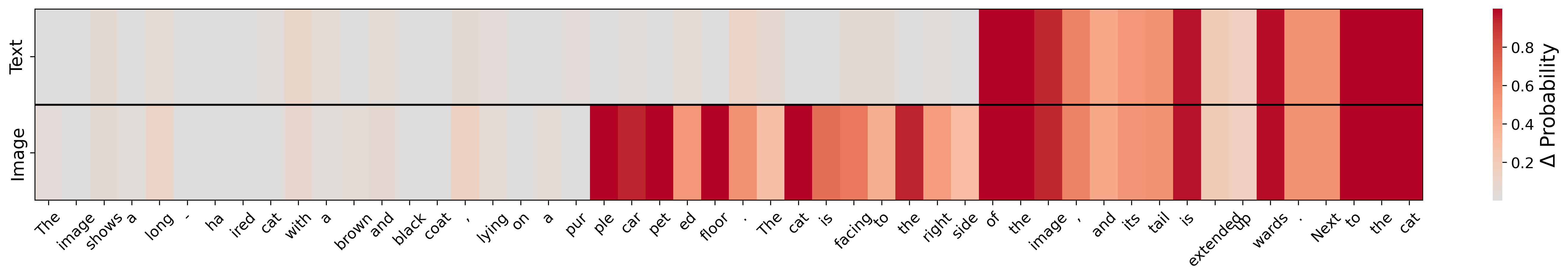

Figure 1: Average token-wise distribution change on textual and visual perturbation. Visual perturbation has a greater effect than textual perturbation on the output probability.

The paper concludes that visual perturbations disrupt VLM outputs more effectively than text modifications. Figure 1 shows this gap in the per-token output distributions under each kind of perturbation.
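To make "average token-wise distribution change" concrete, the sketch below averages a per-position distance between the clean and perturbed next-token distributions. This summary does not say which distance the paper uses, so total variation is an assumption here, and the function names and toy distributions are invented for illustration only (they are not results from the paper).

```python
def total_variation(p, q):
    """Total variation distance between two distributions over the same vocabulary."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def mean_tokenwise_change(clean, perturbed):
    """Average the per-position distance over all output positions.

    `clean` and `perturbed` are lists of next-token distributions,
    one distribution per output position.
    """
    if not clean or len(clean) != len(perturbed):
        raise ValueError("need two non-empty sequences of equal length")
    distances = [total_variation(p, q) for p, q in zip(clean, perturbed)]
    return sum(distances) / len(distances)


# Toy distributions over a 3-token vocabulary at 2 output positions (invented).
clean = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
small_shift = [[0.6, 0.3, 0.1], [0.1, 0.7, 0.2]]
large_shift = [[0.2, 0.3, 0.5], [0.5, 0.2, 0.3]]

change_small = mean_tokenwise_change(clean, small_shift)
change_large = mean_tokenwise_change(clean, large_shift)
```

A metric of this kind lets textual and visual perturbations be compared on the same scale, since both are scored only by how far they move the output distributions.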

\name: An Enhanced Adversarial Attack

The paper introduces \name, an attack that uses first-order and second-order momentum in its optimization to craft visual adversarial examples capable of manipulating VLM outputs at the token level. It extends earlier adversarial strategies with differentiable transformations, so that the perturbation stays continuous and imperceptible to human observers while still disturbing the VLM significantly.
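A token-level targeted attack of this kind is commonly written as a constrained optimization problem. The formulation below is a generic sketch and not the paper's own notation: the image $x$, prompt $q$, target tokens $y_1, \dots, y_T$, model parameters $\theta$, and perturbation budget $\epsilon$ are all symbols assumed here for illustration.

$$
\min_{\delta} \; -\sum_{t=1}^{T} \log p_\theta\bigl(y_t \mid x + \delta,\; q,\; y_{<t}\bigr)
\quad \text{subject to} \quad \lVert \delta \rVert_\infty \le \epsilon
$$

Minimizing the sum raises the probability of every target token in turn, while the norm constraint keeps the change to the image small. A differentiable transformation would replace the hard constraint with a smooth reparameterization of $\delta$ that satisfies the bound by construction.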

Algorithmic Strategy

The attack uses gradient-based optimization to adjust the visual input until the model outputs the desired tokens. The paper presents this as more refined than traditional methods such as PGD, which can get stuck in local optima and whose projection step introduces discontinuities.
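The general idea can be sketched on a toy problem. The code below is not the paper's algorithm: it is a minimal illustration that assumes an Adam-style update (first and second moment estimates) and a tanh reparameterization as the differentiable transformation, with a tiny linear "model" standing in for a VLM. All names (`craft_perturbation`, `token_logits`, `W`, `eps`) and all numbers are hypothetical.

```python
import math


def softmax(z):
    top = max(z)
    exps = [math.exp(v - top) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def token_logits(W, x):
    """Toy stand-in for a VLM: one linear score per candidate token."""
    return [sum(w_kj * x_j for w_kj, x_j in zip(row, x)) for row in W]


def craft_perturbation(W, x, target, eps=0.1, lr=0.05, steps=300,
                       beta1=0.9, beta2=0.999, tiny=1e-8):
    """Minimize cross-entropy toward `target` over an unconstrained variable w.

    The perturbation is eps * tanh(w), so it stays within [-eps, eps]
    without any projection step (an assumed choice of transformation).
    """
    n = len(x)
    w = [0.0] * n  # unconstrained optimization variable
    m = [0.0] * n  # first-moment (momentum) estimate
    v = [0.0] * n  # second-moment estimate
    for t in range(1, steps + 1):
        x_adv = [x_j + eps * math.tanh(w_j) for x_j, w_j in zip(x, w)]
        p = softmax(token_logits(W, x_adv))
        # Gradient of cross-entropy w.r.t. the logits: p - onehot(target).
        g_logit = [p_k - (1.0 if k == target else 0.0) for k, p_k in enumerate(p)]
        for j in range(n):
            g_x = sum(W[k][j] * g_logit[k] for k in range(len(W)))
            g_w = g_x * eps * (1.0 - math.tanh(w[j]) ** 2)  # chain rule through tanh
            m[j] = beta1 * m[j] + (1.0 - beta1) * g_w
            v[j] = beta2 * v[j] + (1.0 - beta2) * g_w * g_w
            m_hat = m[j] / (1.0 - beta1 ** t)  # bias correction
            v_hat = v[j] / (1.0 - beta2 ** t)
            w[j] -= lr * m_hat / (math.sqrt(v_hat) + tiny)
    return [eps * math.tanh(w_j) for w_j in w]


# Toy setup (invented): 3 "pixels", 3 candidate tokens, identity scoring matrix.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
x = [0.50, 0.40, 0.45]
delta = craft_perturbation(W, x, target=2)
x_adv = [a + b for a, b in zip(x, delta)]

clean_scores = token_logits(W, x)
adv_scores = token_logits(W, x_adv)
clean_token = max(range(3), key=lambda k: clean_scores[k])
adv_token = max(range(3), key=lambda k: adv_scores[k])
```

In this toy case the top-scoring token moves from index 0 to the target index 2 while every component of `delta` stays within `eps`. Because the bound is enforced by tanh rather than by clipping, the update never has to project back onto the constraint set, which is the kind of discontinuity the text attributes to PGD.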

Applications of \name

Adversarial Applications

\name’s capabilities extend into several domains:

- Jailbreaking: Coercing VLMs to output undesirable or unsafe content by bypassing ethical constraints.

- Hijacking: Diverting model outputs towards specific attacker-defined narratives, essentially overriding user prompts.

- Sponge Examples and Denial-of-Service (DoS): Generating examples that exhaust computing resources, targeting the operational stability of models.

Defensive and Protective Measures

Although \name can be weaponized, it can also be used defensively. By embedding invisible watermarks in images, it helps protect copyrighted material against misuse by unauthorized AI models and supports content accountability.

Experimental Evaluations

Empirical evaluations show \name reaching high attack success rates across multiple tasks and VLM architectures, underscoring a consistent vulnerability. Noteworthy findings include high jailbreak rates (above 90% in several scenarios), revealing the inadequacy of existing VLMs’ security measures.

Figure 2: Adversarial images generated by the proposed \name to manipulate various VLMs to output two specific sequences.

Figure 2 shows adversarial images produced by \name that look benign yet compel various models to generate predefined outputs.

Conclusion

This paper exposes critical vulnerabilities of VLMs to visual adversarial attacks. \name not only demonstrates the threat but also shows that the same technique can serve both attack and defense in AI security. Future work should focus on making VLMs more resilient to these manipulation vectors.