- The paper presents the PsyMem framework that enhances LLM role-playing by incorporating 26 psychological indicators and explicit memory control.

- It employs a dual-stage training strategy on a curated dataset of 5,414 characters and 38,962 dialogues to improve character fidelity and human-likeness.

- Experimental results demonstrate PsyMem’s superiority over baseline models in delivering dynamic, memory-driven role-playing interactions.

PsyMem: Fine-grained Psychological Alignment and Explicit Memory Control for Advanced Role-Playing LLMs

Introduction

The "PsyMem" framework addresses two fundamental limitations of existing LLM-based role-playing systems: superficial characterization and implicit memory modeling, both of which compromise reliability in applications such as social simulation. PsyMem enriches character modeling with 26 psychological indicators and uses explicit memory control to align character responses with dynamically retrieved memory, improving character fidelity and human-likeness. Trained on a specially curated dataset of 5,414 characters and 38,962 dialogues extracted from novels, the PsyMem-Qwen model outperforms baseline models in role-playing.

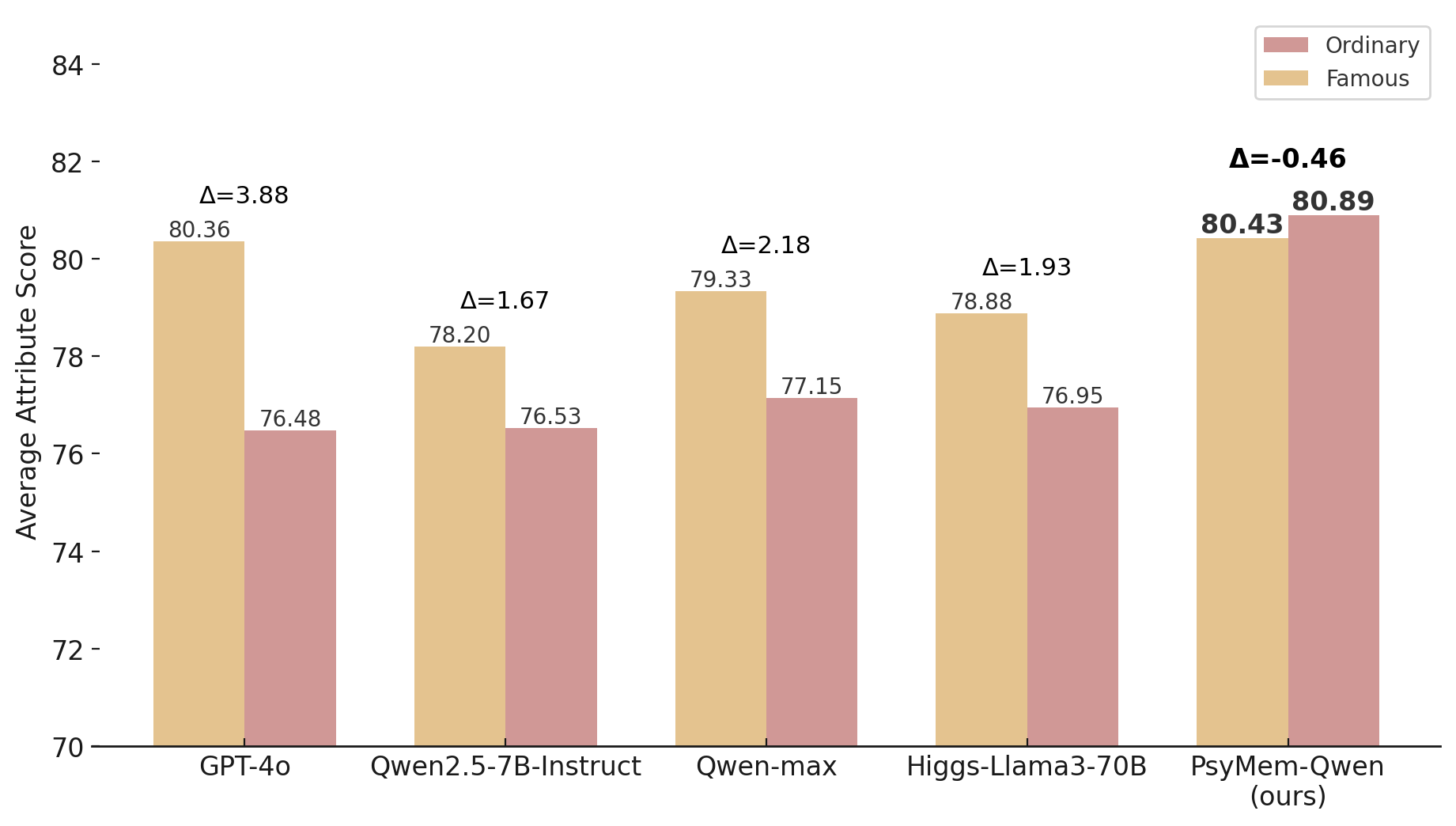

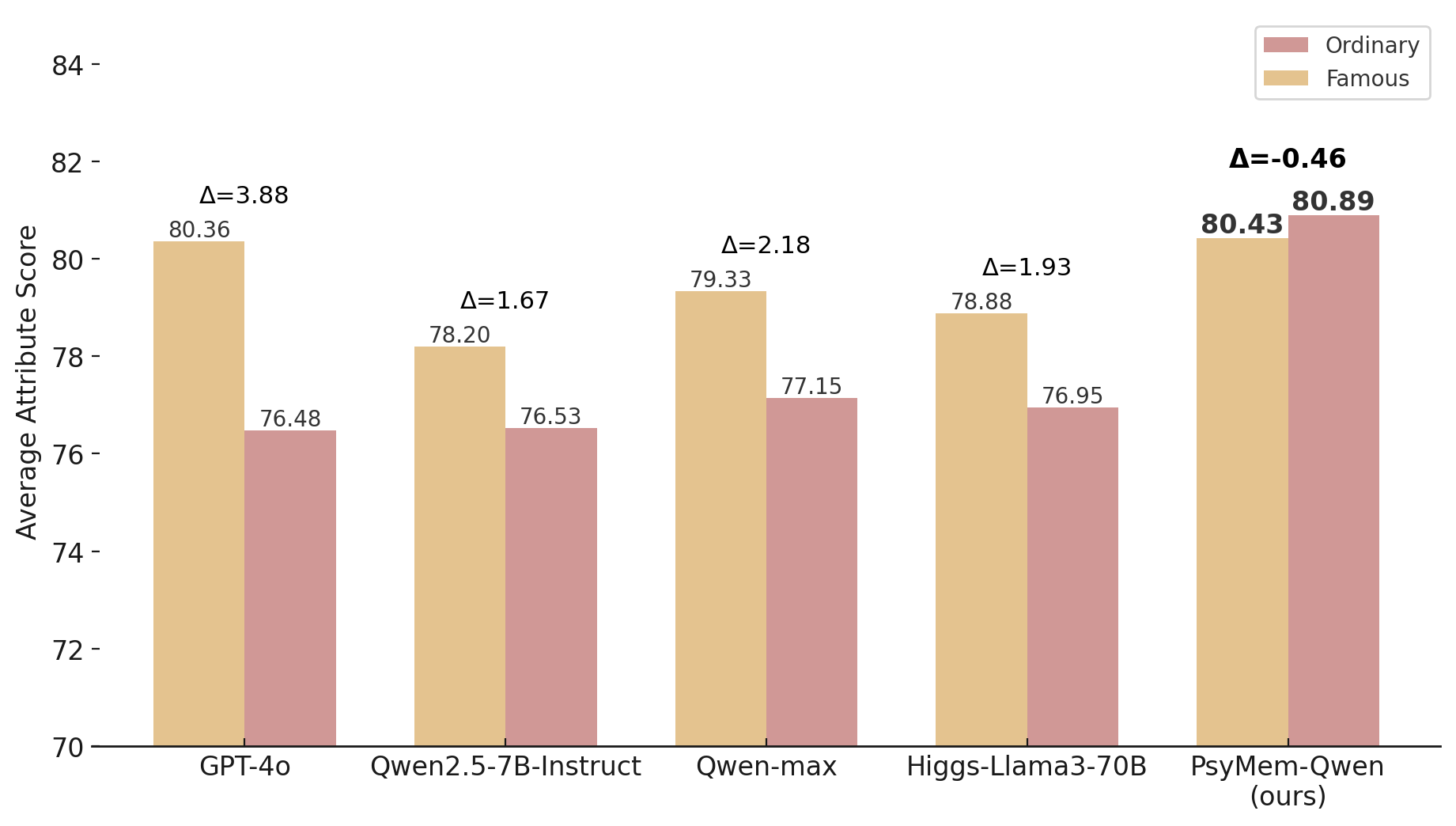

Figure 1: The performance comparison is conducted on two subsets: "Ordinary," consisting of 20 randomly selected characters from our test set, and "Famous," consisting of 20 well-known characters. The average attribute scores are calculated as the mean of the quantized scores across the 20 characters in each subset.
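The averaging described in the Figure 1 caption can be sketched as follows. This is a minimal illustration, not the authors' evaluation code: the attribute names, the score scale, and the three-character subset are hypothetical placeholders (the actual subsets contain 20 characters each).

```python
from statistics import fmean
from typing import Dict


def average_attribute_scores(
    scores_by_character: Dict[str, Dict[str, int]]
) -> Dict[str, float]:
    """Average each attribute's quantized score over all characters in a subset."""
    # Take the attribute names from the first character; all characters
    # are assumed to be scored on the same attributes.
    attributes = next(iter(scores_by_character.values())).keys()
    return {
        attribute: fmean(
            scores[attribute] for scores in scores_by_character.values()
        )
        for attribute in attributes
    }


# Hypothetical subset with three characters and two attributes.
subset_scores = {
    "char_a": {"openness": 4, "extraversion": 2},
    "char_b": {"openness": 2, "extraversion": 3},
    "char_c": {"openness": 3, "extraversion": 4},
}
averages = average_attribute_scores(subset_scores)
```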

Dataset Architecture

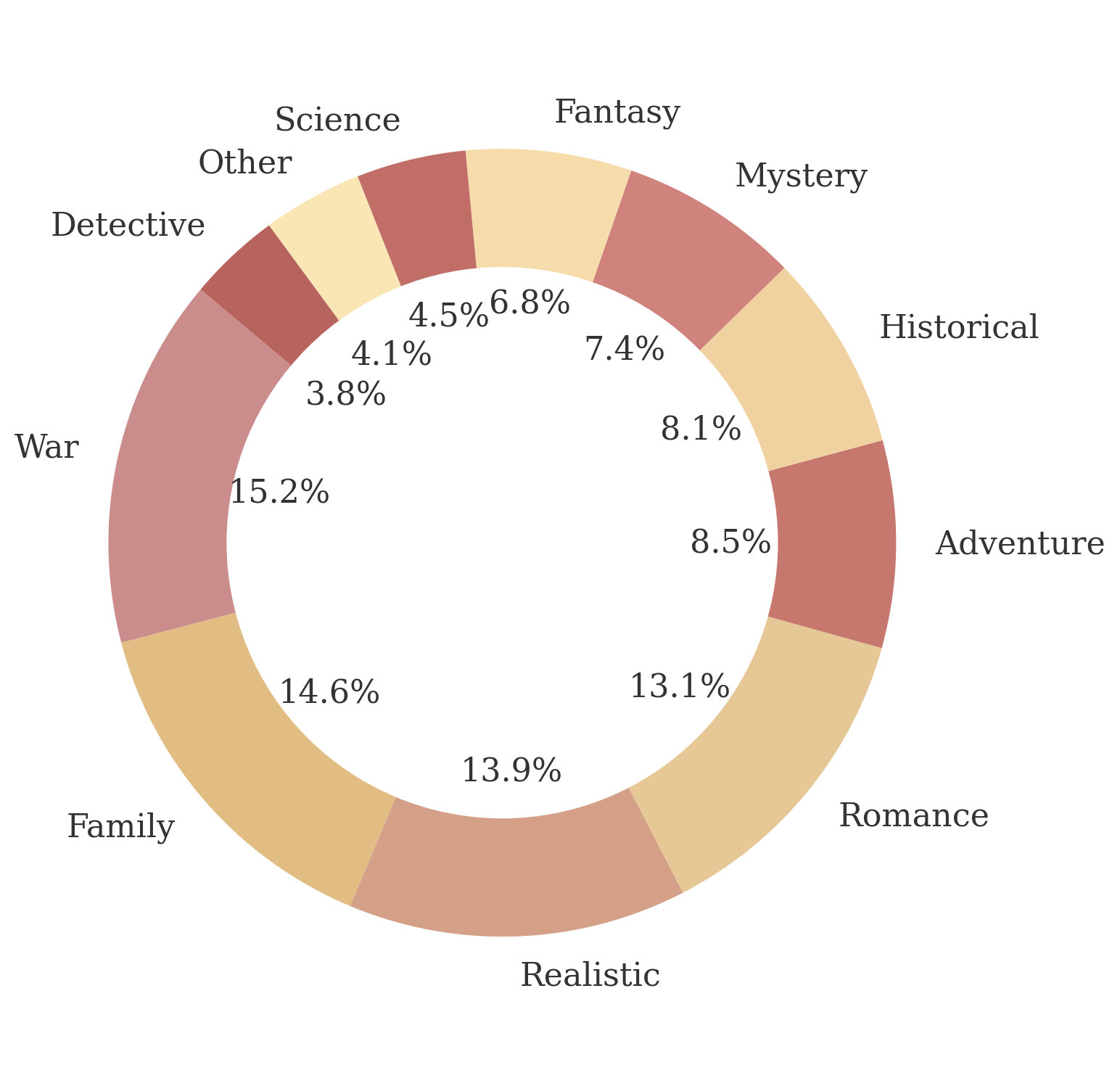

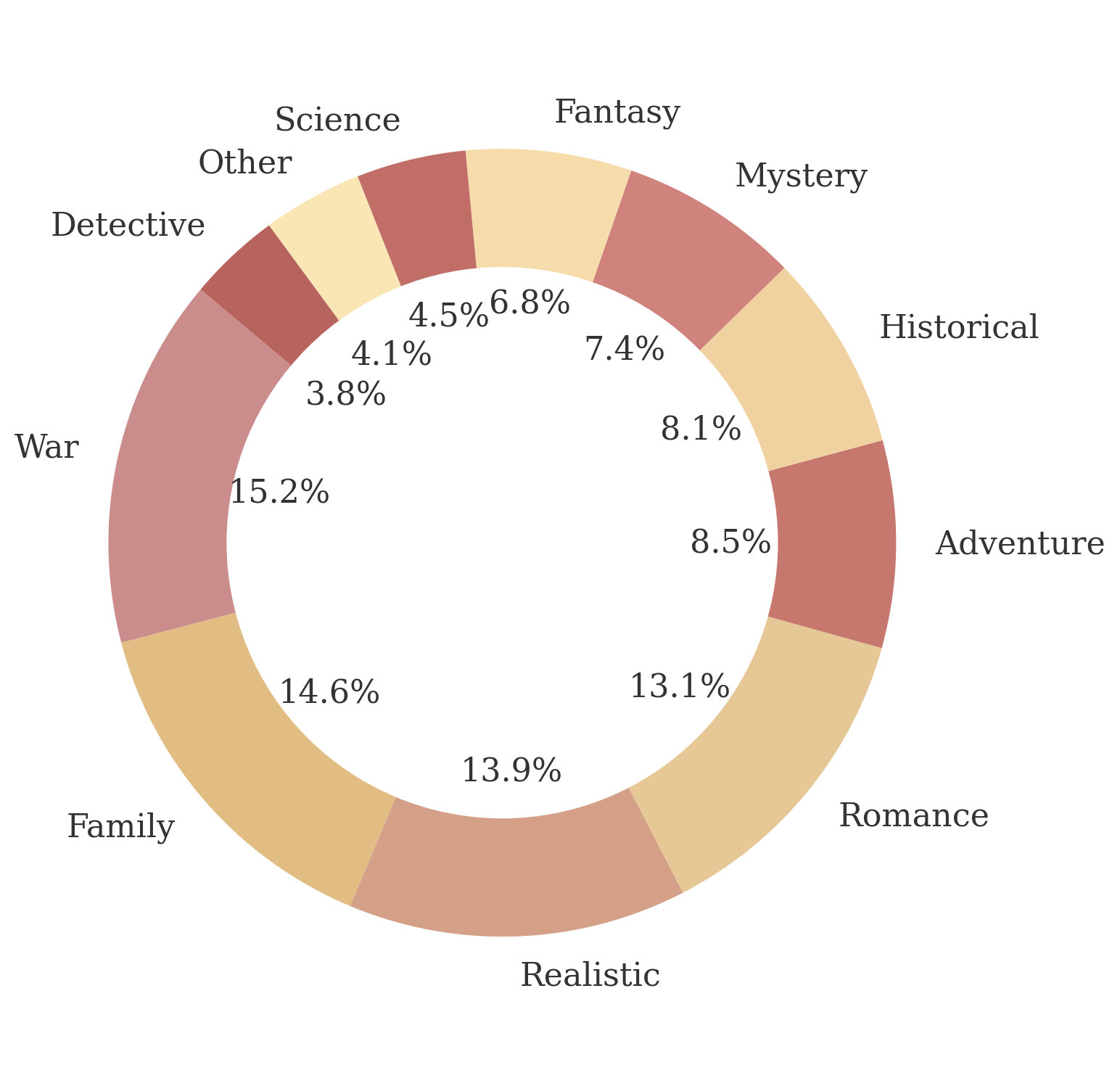

The PsyMem framework introduces a comprehensive dataset architecture, integrating modern psychological attributes and explicit memory. The dataset $D_{\mathrm{RP}}$ is organized around role profiles featuring 26 quantitative dimensions, dialogue contexts, queries, responses, and memory components. Data are extracted from 539 novels to maintain character authenticity and diversity.
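One way to picture a single record of $D_{\mathrm{RP}}$ is the sketch below. Only the five components (role profile, dialogue context, query, response, memory) and the count of 26 dimensions come from the text; the class names, field names, indicator names, and score values are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List

NUM_INDICATORS = 26  # number of quantitative dimensions stated in the text


@dataclass
class RoleProfile:
    name: str
    # Indicator name -> quantized score. Names and scale are hypothetical.
    indicators: Dict[str, int]

    def __post_init__(self) -> None:
        if len(self.indicators) != NUM_INDICATORS:
            raise ValueError(
                f"expected {NUM_INDICATORS} indicators, got {len(self.indicators)}"
            )


@dataclass
class RolePlaySample:
    profile: RoleProfile          # role profile with 26 dimensions
    context: List[str]            # preceding dialogue turns
    query: str                    # utterance the character must answer
    response: str                 # the character's reply
    memory: List[str] = field(default_factory=list)  # memory items tied to the reply


# Placeholder profile: 26 generically named indicators, all set to 3.
profile = RoleProfile(
    name="Example Character",
    indicators={f"indicator_{i:02d}": 3 for i in range(1, NUM_INDICATORS + 1)},
)
sample = RolePlaySample(
    profile=profile,
    context=["Stranger: You look like you have travelled far."],
    query="Where did you come from?",
    response="From the coast. It was a long road.",
    memory=["The character grew up in a coastal town."],
)
```

Validating the indicator count at construction time keeps malformed profiles out of the dataset early, which is a design choice of this sketch rather than something the source describes.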

Figure 2: The genre distribution in the dataset.

Quantifiable Attributes and Memory Integration

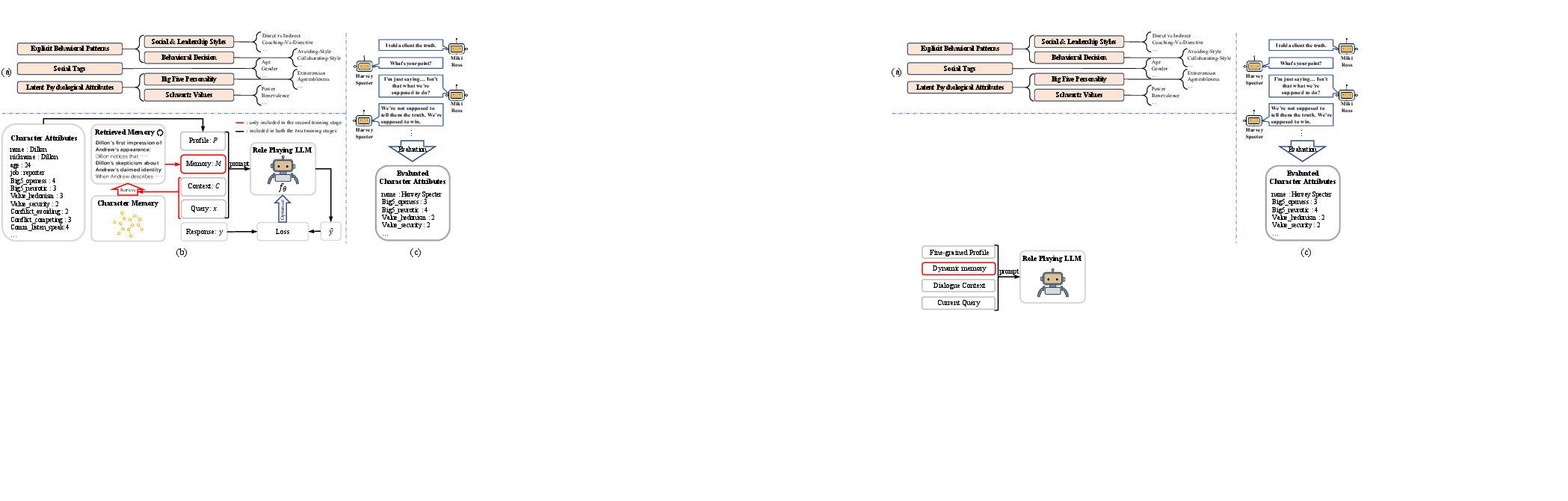

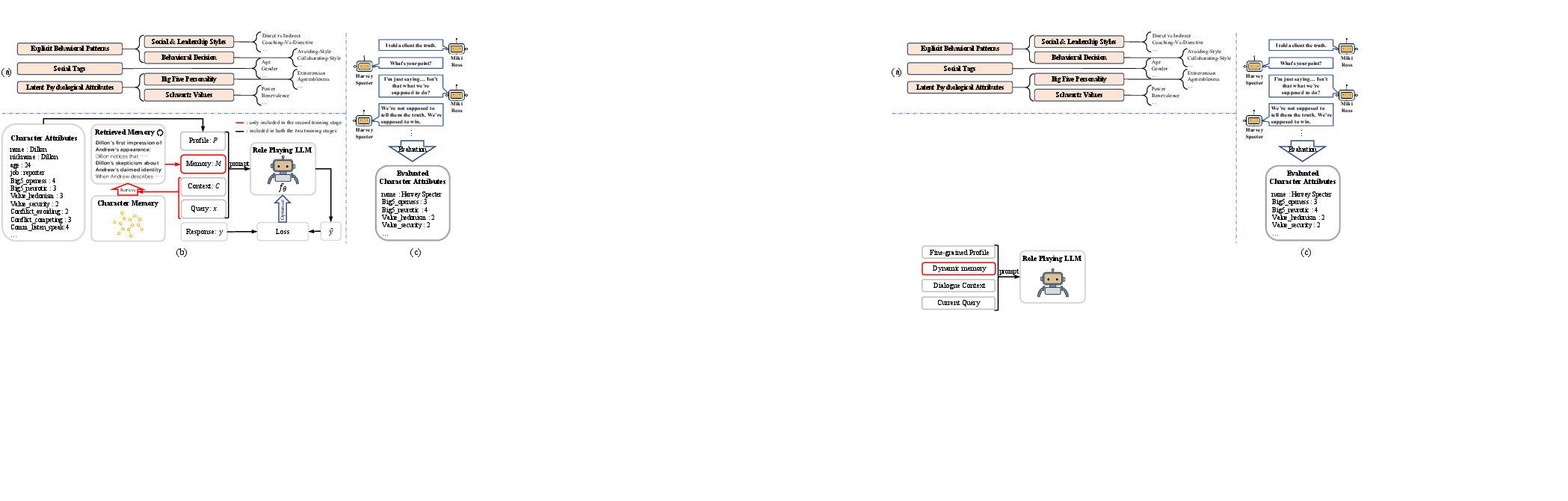

PsyMem systematically categorizes character traits into latent psychological attributes and explicit behavioral patterns, drawing on frameworks such as the Big Five Personality Model and Schwartz's Theory of Basic Values. For memory integration, it constructs character-specific knowledge graphs that enhance role fidelity and simulate memory dynamics at inference time.
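The idea of a character-specific knowledge graph queried at inference time can be sketched as below. The source does not describe the graph schema or the retrieval method, so the triple format, the class and method names, and the substring-matching heuristic are all assumptions chosen for brevity.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object); hypothetical schema


class CharacterMemoryGraph:
    """Toy knowledge graph holding facts a single character knows."""

    def __init__(self) -> None:
        self._by_entity: Dict[str, List[Triple]] = defaultdict(list)

    def add(self, subject: str, relation: str, obj: str) -> None:
        triple = (subject, relation, obj)
        # Index the triple under both endpoints (deduplicated, order preserved).
        for entity in dict.fromkeys((subject, obj)):
            self._by_entity[entity.lower()].append(triple)

    def retrieve(self, query: str, limit: int = 3) -> List[Triple]:
        """Return triples whose subject or object is mentioned in the query.

        Plain substring matching stands in for a real retrieval method.
        """
        text = query.lower()
        hits: List[Triple] = []
        for entity, triples in self._by_entity.items():
            if entity in text:
                for triple in triples:
                    if triple not in hits:
                        hits.append(triple)
        return hits[:limit]


graph = CharacterMemoryGraph()
graph.add("Elena", "sister_of", "Marco")
graph.add("Elena", "lives_in", "Lisbon")
graph.add("Marco", "works_as", "sailor")

retrieved = graph.retrieve("Have you heard from Marco lately?")
```

Here only the two facts that mention Marco are returned, so the reply can be conditioned on relevant memory instead of the character's entire history.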

Training and Evaluation

Training follows a dual-stage strategy: the first stage develops basic role-play capacity, and the second applies specialized memory-augmented fine-tuning. This approach preserves general language understanding while optimizing character-specific role-playing capability. Evaluation is structured around two groups of criteria, character fidelity and character-independent capabilities, with GPT-4o serving as the assessor to lend the scores credibility.
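The difference between the two stages can be pictured at the level of the model input. The sketch below assumes that stage one conditions on the profile and dialogue only, while stage two additionally includes retrieved memory. The source does not specify the prompt format, so the function name, section labels, and indicator names are hypothetical.

```python
from typing import Dict, List, Optional


def build_prompt(
    name: str,
    indicators: Dict[str, int],
    context: List[str],
    query: str,
    memory: Optional[List[str]] = None,
) -> str:
    """Assemble a training input; the memory section is added only when given."""
    lines = [f"Character: {name}", "Profile:"]
    lines += [f"  {key}: {value}" for key, value in sorted(indicators.items())]
    if memory:
        lines.append("Memory:")
        lines += [f"  - {item}" for item in memory]
    lines.append("Context:")
    lines += [f"  {turn}" for turn in context]
    lines.append(f"Query: {query}")
    return "\n".join(lines)


indicators = {"openness": 4, "extraversion": 2}  # hypothetical subset of the 26
context = ["Stranger: You look like you have travelled far."]
query = "Where did you come from?"

# Stage 1 (assumed): basic role-play, no memory section.
stage1_prompt = build_prompt("Example Character", indicators, context, query)

# Stage 2 (assumed): memory-augmented fine-tuning, memory section included.
stage2_prompt = build_prompt(
    "Example Character",
    indicators,
    context,
    query,
    memory=["The character grew up in a coastal town."],
)
```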

Figure 3: The two-stage training of the role-playing LLM; a fine-grained character profile and contextual memory enhance role-playing precision.

Experimental Results

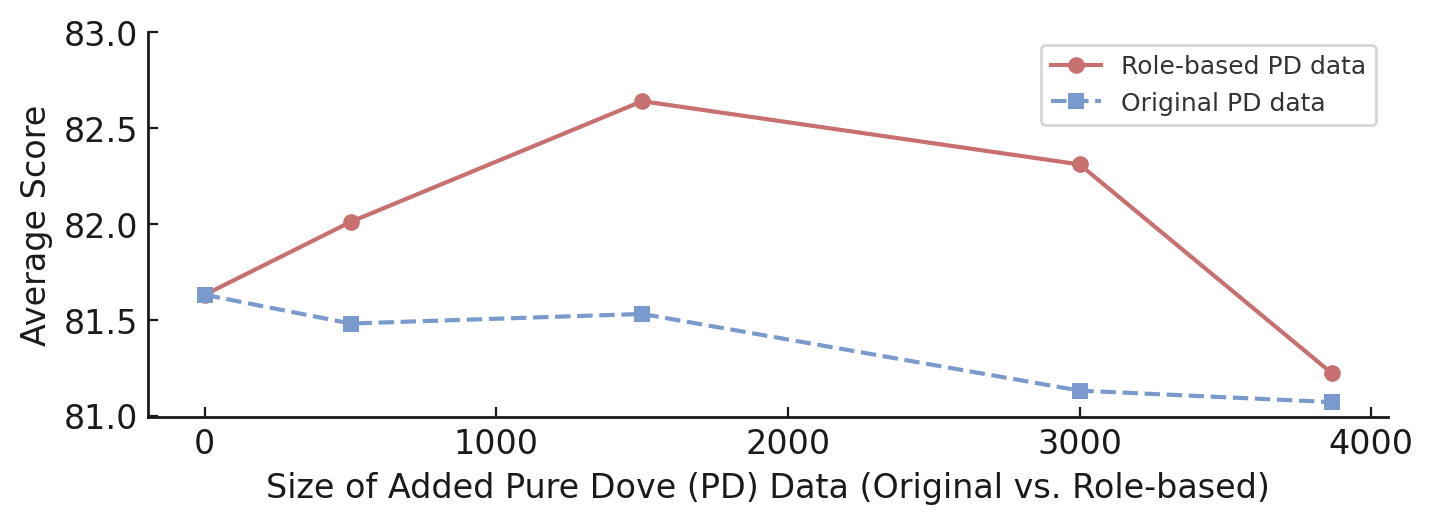

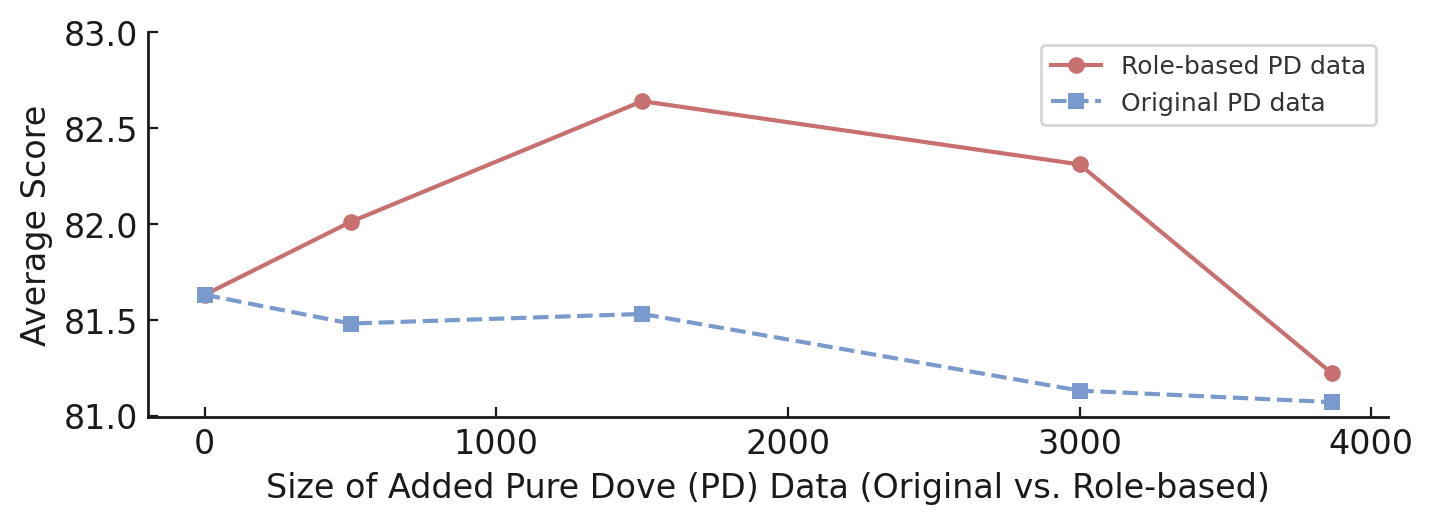

The PsyMem-Qwen and PsyMem-Llama models achieve higher fidelity and human-likeness scores than leading LLM baselines. The results also show clear gains in memory application, indicating that PsyMem is effective at producing character-consistent, memory-anchored dialogue.

Figure 4: The impact of data size on model performance using the Pure Dove (PD) dataset.

Conclusion

PsyMem establishes a robust methodology for enhancing role-playing LLMs, bridging intrinsic and extrinsic character dimensions with explicit memory control. By fostering superior character consistency and aligning role-play responses with psychological frameworks, PsyMem offers a promising avenue for realistic social simulation applications. Future research could further refine these models, exploring more sophisticated cognitive dimensions and applications in diverse interactive media environments.