Evaluation of Personality Fidelity in Role-Playing Agents with InCharacter

The paper "InCharacter: Evaluating Personality Fidelity in Role-Playing Agents through Psychological Interviews" introduces an approach for measuring personality fidelity in Role-Playing Agents (RPAs) through structured psychological interviews. It addresses an important but underexplored question in AI development: personality fidelity, that is, how accurately these agents emulate the personalities of their target characters.

Research Motivation and Methodology

RPAs, an application of large language models (LLMs), are designed to simulate specific characters or roles. Existing assessment methods focus predominantly on whether an agent replicates a character's linguistic style and knowledge, and they fall short in evaluating how accurately RPAs reproduce the character's psychological traits. To address this, the authors propose InCharacter, an interview-based method that uses psychological scales to assess agent personalities. The method is a two-stage process: an interview stage, in which the agent answers open-ended questions derived from psychological scales, and an assessment stage, in which the responses are evaluated quantitatively.
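
The two-stage flow described above can be sketched roughly as follows. This is an illustrative sketch only, not the authors' implementation: `ScaleItem`, `to_open_question`, `interview`, and `assess` are hypothetical names, the question template is invented, and the `agent` and `rater` callables are stand-ins for an RPA and for whatever scoring procedure the paper actually uses. It also assumes a 1-to-5 Likert scale with reverse-scored items.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, List


@dataclass(frozen=True)
class ScaleItem:
    """One item of a psychological scale (hypothetical structure)."""
    statement: str                # original self-report statement
    dimension: str                # personality dimension the item measures
    reverse_scored: bool = False  # True if agreement indicates a LOW score


def to_open_question(item: ScaleItem) -> str:
    """Rephrase a scale statement as an open-ended question (assumed template)."""
    return f'Would you say this describes you: "{item.statement}"? Please explain.'


def interview(agent: Callable[[str], str], items: List[ScaleItem]) -> List[str]:
    """Interview stage: collect one free-text answer per scale item."""
    return [agent(to_open_question(item)) for item in items]


def assess(
    items: List[ScaleItem],
    responses: List[str],
    rater: Callable[[ScaleItem, str], int],
    scale_max: int = 5,
) -> Dict[str, float]:
    """Assessment stage: rate each answer, then average per dimension."""
    per_dimension: Dict[str, List[int]] = {}
    for item, response in zip(items, responses):
        score = rater(item, response)
        if item.reverse_scored:
            # Flip the score so that higher always means more of the trait.
            score = scale_max + 1 - score
        per_dimension.setdefault(item.dimension, []).append(score)
    return {dim: float(mean(scores)) for dim, scores in per_dimension.items()}
```

In practice `agent` would wrap a role-playing model and `rater` would wrap an LLM or human judge; keeping both as plain callables lets the pipeline be exercised with simple stubs before any model is attached.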

Key Experimental Insights

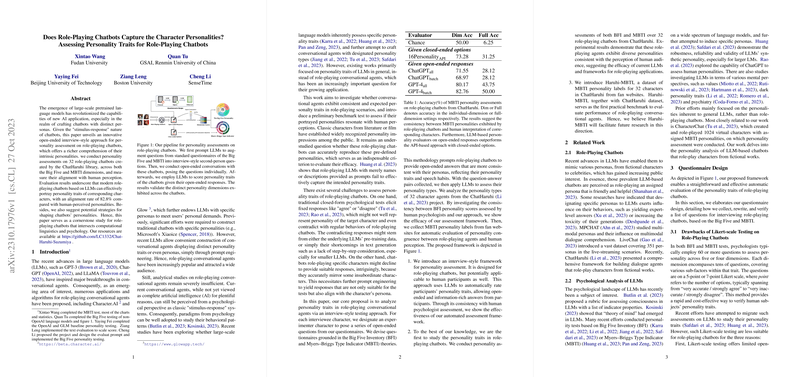

The evaluation covered 32 diverse RPAs, representing characters from various domains, and was conducted across 14 psychological scales, including the Big Five Inventory (BFI) and 16 Personalities (16P). The most significant finding is that the personalities measured for the RPAs align with the personalities humans perceive in the corresponding characters, with up to 80.7% accuracy. This is a substantial improvement over traditional self-report measures.
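
To make the notion of an alignment accuracy concrete, the sketch below shows one plausible way such a figure could be computed: measured dimension scores are thresholded into high/low labels and compared against human-assigned labels. The threshold at the scale midpoint, the function names `binarize` and `dimension_accuracy`, and the toy data are all assumptions for illustration; the paper's exact metric definition may differ.

```python
from typing import Dict

Labels = Dict[str, Dict[str, str]]  # character -> dimension -> "high" / "low"


def binarize(scores: Dict[str, float], midpoint: float = 3.0) -> Dict[str, str]:
    """Map each dimension score to "high" or "low" (assumed midpoint threshold)."""
    return {dim: "high" if s > midpoint else "low" for dim, s in scores.items()}


def dimension_accuracy(predicted: Labels, reference: Labels) -> float:
    """Fraction of (character, dimension) pairs where the labels agree."""
    matches = 0
    total = 0
    for character, ref_labels in reference.items():
        for dim, ref in ref_labels.items():
            total += 1
            if predicted.get(character, {}).get(dim) == ref:
                matches += 1
    return matches / total if total else 0.0


# Toy data with placeholder characters; these are not results from the paper.
measured = {
    "char_a": {"extraversion": 4.2, "agreeableness": 2.1},
    "char_b": {"extraversion": 1.8, "agreeableness": 3.9},
}
human = {
    "char_a": {"extraversion": "high", "agreeableness": "low"},
    "char_b": {"extraversion": "high", "agreeableness": "high"},
}
predicted = {name: binarize(scores) for name, scores in measured.items()}
print(dimension_accuracy(predicted, human))  # 0.75 (3 of 4 labels agree)
```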

The authors contend that InCharacter, by simulating expert-driven interviews, offers a more nuanced and reliable assessment than self-report methods. Furthermore, comparisons between different RPAs suggest that the choice of foundation model and the integration of comprehensive character data play crucial roles in the personality fidelity of the resulting agent.

Implications in AI and Future Directions

InCharacter's success in measuring personality fidelity in RPAs has both theoretical and practical implications. Theoretically, it advances our understanding of how LLMs can be harnessed for more intricate tasks, such as accurately reflecting human-like personalities. Practically, the paper opens avenues for creating more lifelike and relatable AI agents in interactive applications ranging from digital gaming to educational tools.

Regarding future directions, the research hints at exploring the dynamic nature of RPA personalities, as characters themselves can evolve over time. Another avenue could be refining LLMs to reduce biases in personality assessments, enhancing reliability further.

Overall, this paper introduces significant concepts and experimental findings that contribute to the broader understanding and improvement of role-playing artificial intelligence systems. Its emphasis on nuanced psychological evaluation marks a step towards developing AI with more authentic personality simulations.