- The paper presents the RXTX algorithm, which lowers the cost of computing $XX^{t}$ by reducing the number of multiplications and additions.

- It combines recursive block matrix multiplication with an AI-guided search, and achieves an average 9% runtime speedup over the reference BLAS routines.

- Experiments on large dense matrices support these gains: RXTX was faster in 99% of test cases.

Computation of $XX^{t}$ Using the RXTX Algorithm

The RXTX algorithm is a new approach to computing the product of a matrix with its transpose, $XX^{t}$. This essay analyzes the RXTX algorithm presented in the paper "$XX^{t}$ Can Be Faster" (arXiv:2505.09814) and explores how it improves on existing methods for this type of matrix multiplication.

Introduction to RXTX Algorithm

RXTX is an AI-designed algorithm for computing the matrix-by-transpose product $XX^{t}$. Found by combining AI-driven search with combinatorial optimization, it uses approximately 5% fewer multiplications and additions than the previous state-of-the-art (SotA) method, and the savings appear even at relatively small matrix sizes.

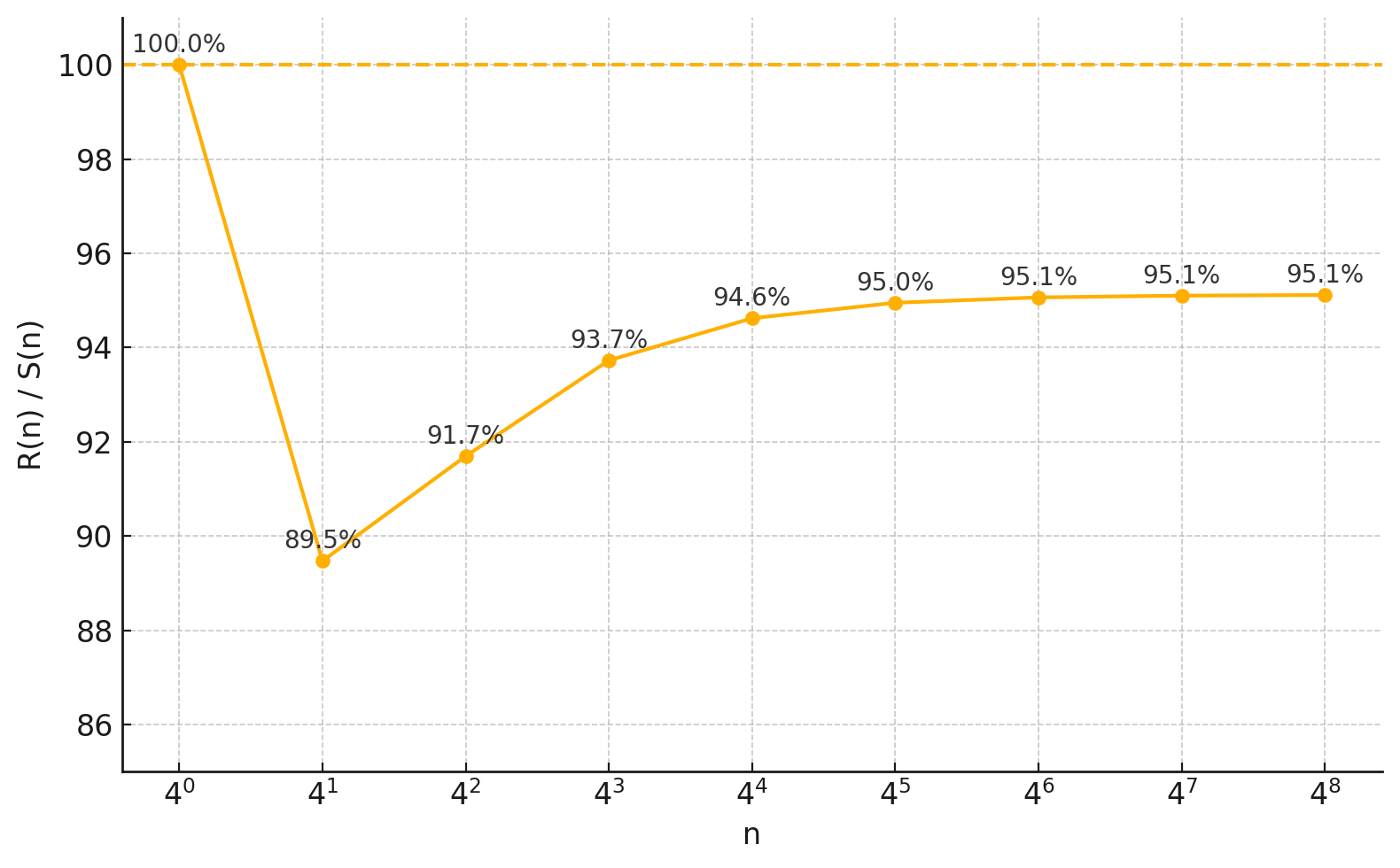

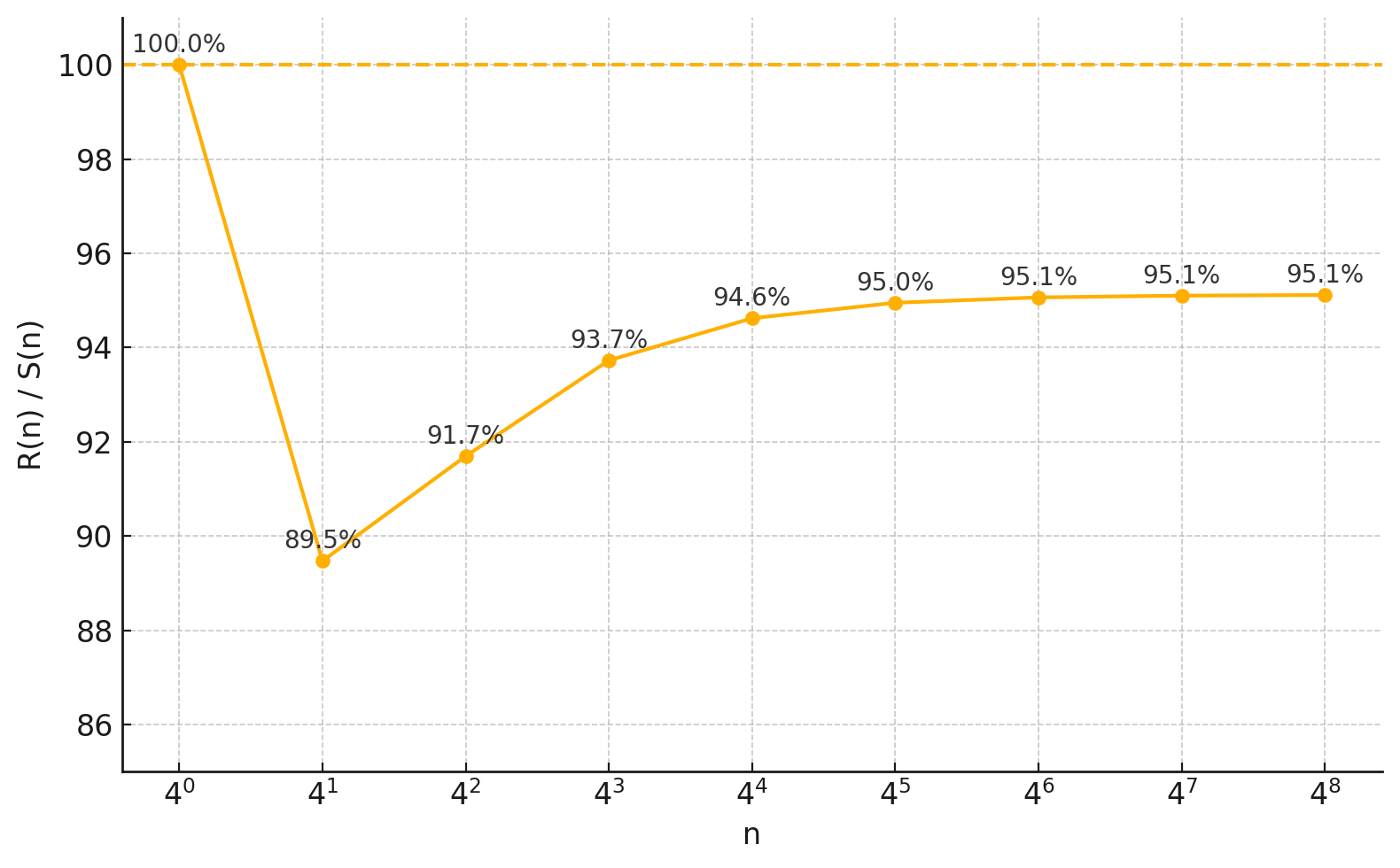

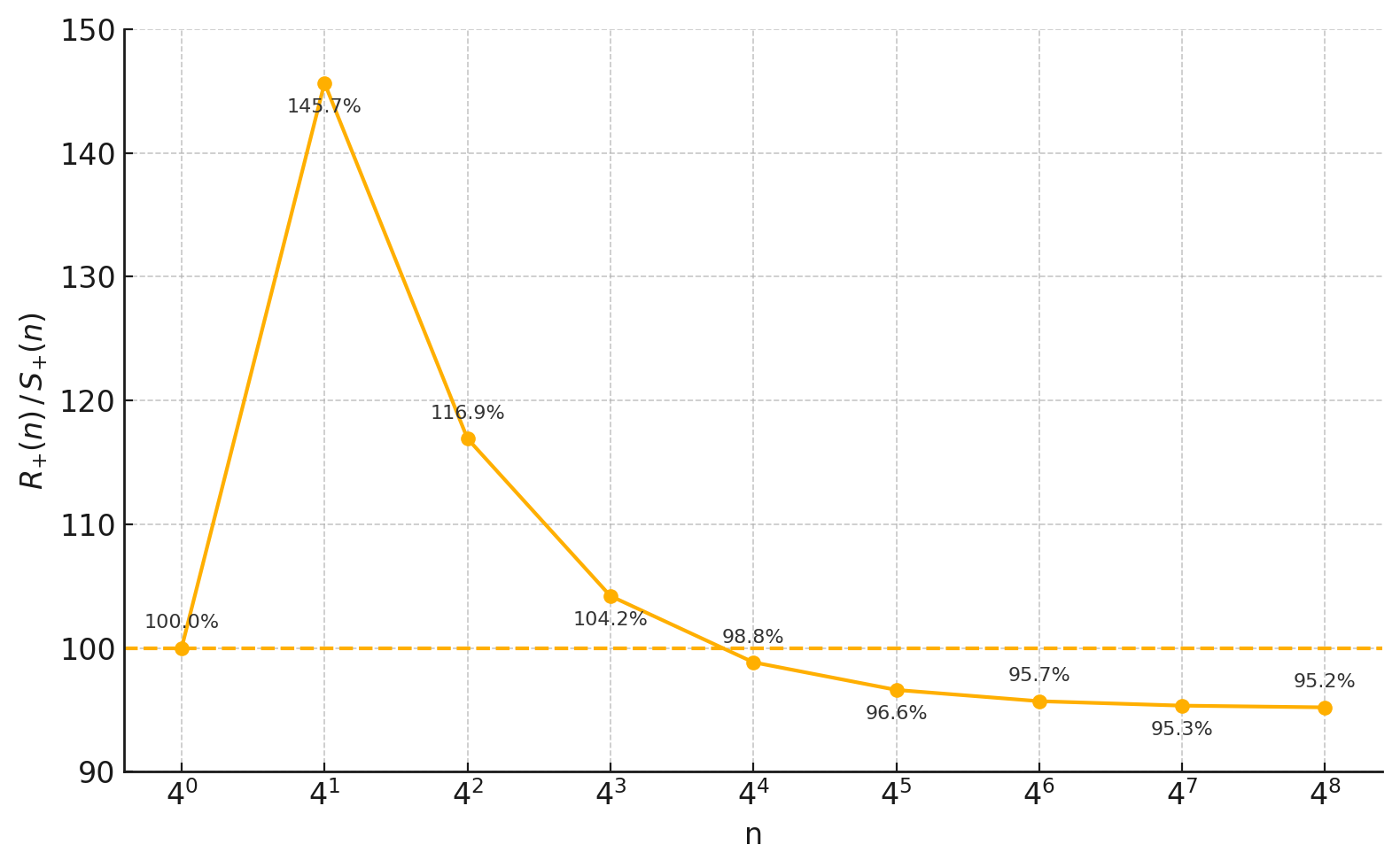

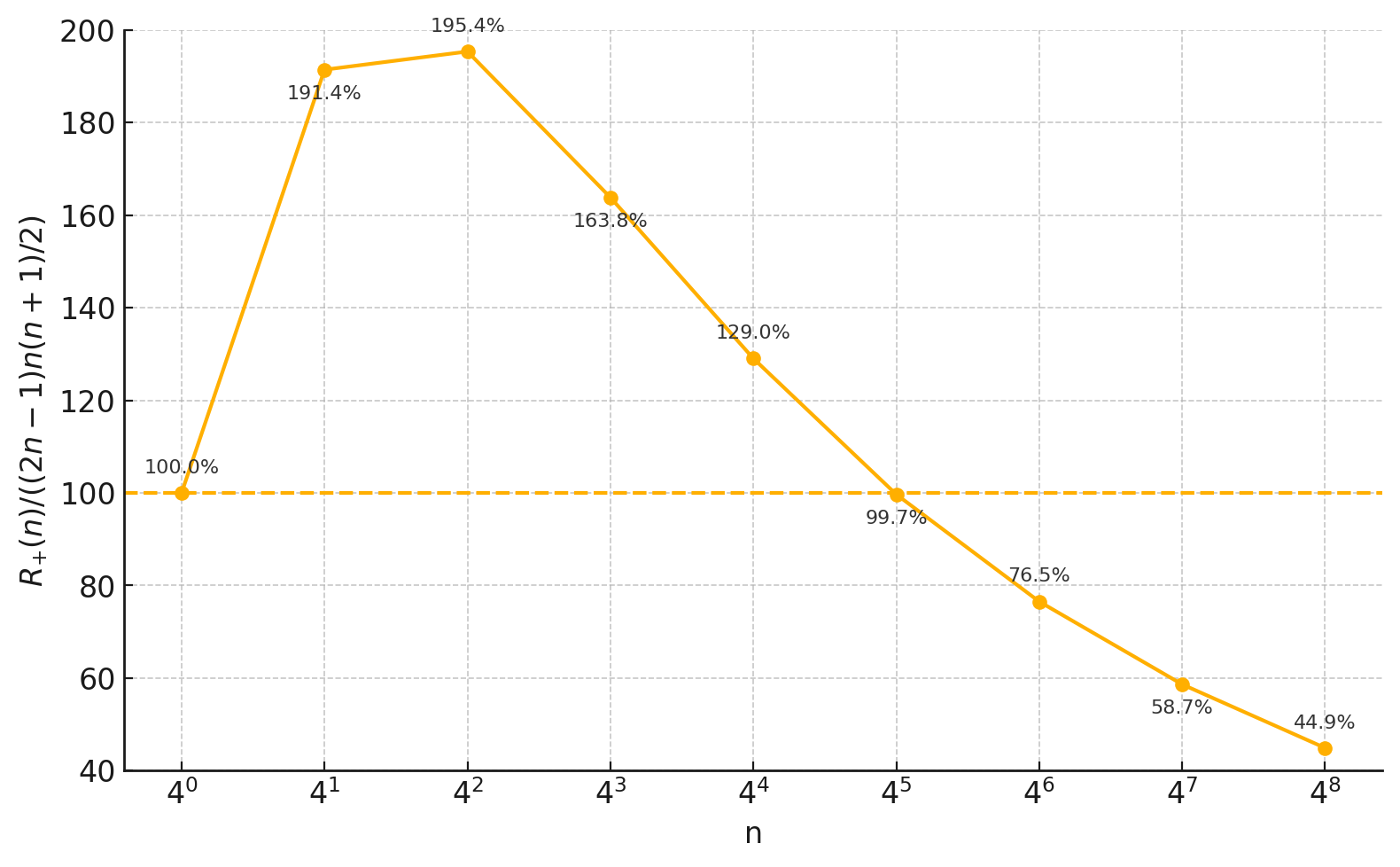

Figure 1: Comparison of the number of multiplications used by RXTX, the previous SotA, and the naive algorithm.

Computational Core of RXTX

RXTX is built on recursive block matrix multiplication. For an n×n matrix, it works on a 4×4 grid of blocks and reduces the number of multiplications and additions by changing the mix of two kinds of block products:

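- Recursive Calls: RXTX makes 8 recursive calls (products of a block with its own transpose) per step on the 4×4 block partition. Recursive Strassen makes 4 per step on a 2×2 partition, which becomes 16 once two levels are unrolled to the same 4×4 partition.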

- General Products: Each RXTX step on the 4×4 block partition also performs 26 general matrix multiplications. These replace part of the work that previous algorithms spend on their 16 recursive calls.
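To make the distinction between recursive calls and general products concrete, here is a minimal pure-Python sketch of the simpler 2×2 block recursion for $XX^{t}$, the structure behind the recursive Strassen baseline. It is not RXTX itself, whose 26 product formulas are not reproduced in this essay. All helper names are hypothetical, the general products use a naive triple loop rather than Strassen for brevity, and n is assumed to be a power of two.

```python
def transpose(a):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*a)]


def matmul(a, b):
    """General (non-symmetric) product, naive triple loop for brevity."""
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]


def add(a, b):
    """Entrywise sum of two equally sized matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def split(x):
    """Split an n x n matrix (n even) into four n/2 x n/2 blocks A, B, C, D."""
    h = len(x) // 2
    top, bottom = x[:h], x[h:]
    return ([r[:h] for r in top], [r[h:] for r in top],
            [r[:h] for r in bottom], [r[h:] for r in bottom])


def xxt_recursive(x):
    """Baseline 2x2 block recursion for X X^t (assumes n is a power of two)."""
    n = len(x)
    if n == 1:
        return [[x[0][0] * x[0][0]]]
    a, b, c, d = split(x)
    # Four recursive calls: each diagonal term is again of the form Y Y^t.
    top_left = add(xxt_recursive(a), xxt_recursive(b))
    bottom_right = add(xxt_recursive(c), xxt_recursive(d))
    # Two general products: A C^t and B D^t have no symmetric structure.
    top_right = add(matmul(a, transpose(c)), matmul(b, transpose(d)))
    # The result is symmetric, so the lower-left block is a transpose.
    bottom_left = transpose(top_right)
    return ([l + r for l, r in zip(top_left, top_right)] +
            [l + r for l, r in zip(bottom_left, bottom_right)])
```

Calling `xxt_recursive(x)` on a 4×4 list of lists should agree with `matmul(x, transpose(x))`. RXTX follows the same idea on a 4×4 block grid, with a different split between recursive calls and general products.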

Recurrences for the number of multiplications and for the total operation count quantify RXTX's advantage over recursive Strassen and the naive algorithm. For large matrices, these savings translate into measurable runtime gains in practical implementations.
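The multiplication counts can be sketched as recurrences. The forms below are assumptions read off the call counts given earlier: $R(n) = 8R(n/4) + 26M(n/4)$ for RXTX and $S(n) = 4S(n/2) + 2M(n/2)$ for recursive Strassen, where the two general products per step come from the off-diagonal block $AC^{t} + BD^{t}$ of a 2×2 split. General products are assumed to use Strassen's scheme, $M(n) = 7M(n/2)$, every base case is 1, and the naive count $n^{2}(n+1)/2$ assumes only one triangle of the symmetric result is computed. Function names are illustrative.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def general_mults(n):
    """Assumed Strassen count for a general product: M(n) = 7 M(n/2), M(1) = 1."""
    return 1 if n == 1 else 7 * general_mults(n // 2)


@lru_cache(maxsize=None)
def strassen_xxt_mults(n):
    """Assumed recursive Strassen count: S(n) = 4 S(n/2) + 2 M(n/2), n a power of 2."""
    if n == 1:
        return 1
    return 4 * strassen_xxt_mults(n // 2) + 2 * general_mults(n // 2)


@lru_cache(maxsize=None)
def rxtx_mults(n):
    """Assumed RXTX count: R(n) = 8 R(n/4) + 26 M(n/4), n a power of 4."""
    if n == 1:
        return 1
    return 8 * rxtx_mults(n // 4) + 26 * general_mults(n // 4)


def naive_mults(n):
    """Naive count when only one triangle of the symmetric result is computed."""
    return n * n * (n + 1) // 2


# Print the counts and the RXTX-to-Strassen ratio for n = 4, 16, ..., 4096.
for k in range(1, 7):
    n = 4 ** k
    ratio = rxtx_mults(n) / strassen_xxt_mults(n)
    print(n, rxtx_mults(n), strassen_xxt_mults(n), naive_mults(n), round(ratio, 4))
```

Under these assumptions a 4×4 matrix takes 34 multiplications with RXTX, 38 with recursive Strassen, and 40 naively. Solving the recurrences gives leading constants of $26/41$ and $2/3$, so the ratio tends to $39/41 \approx 0.951$ as n grows, consistent with the roughly 5% saving quoted earlier.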

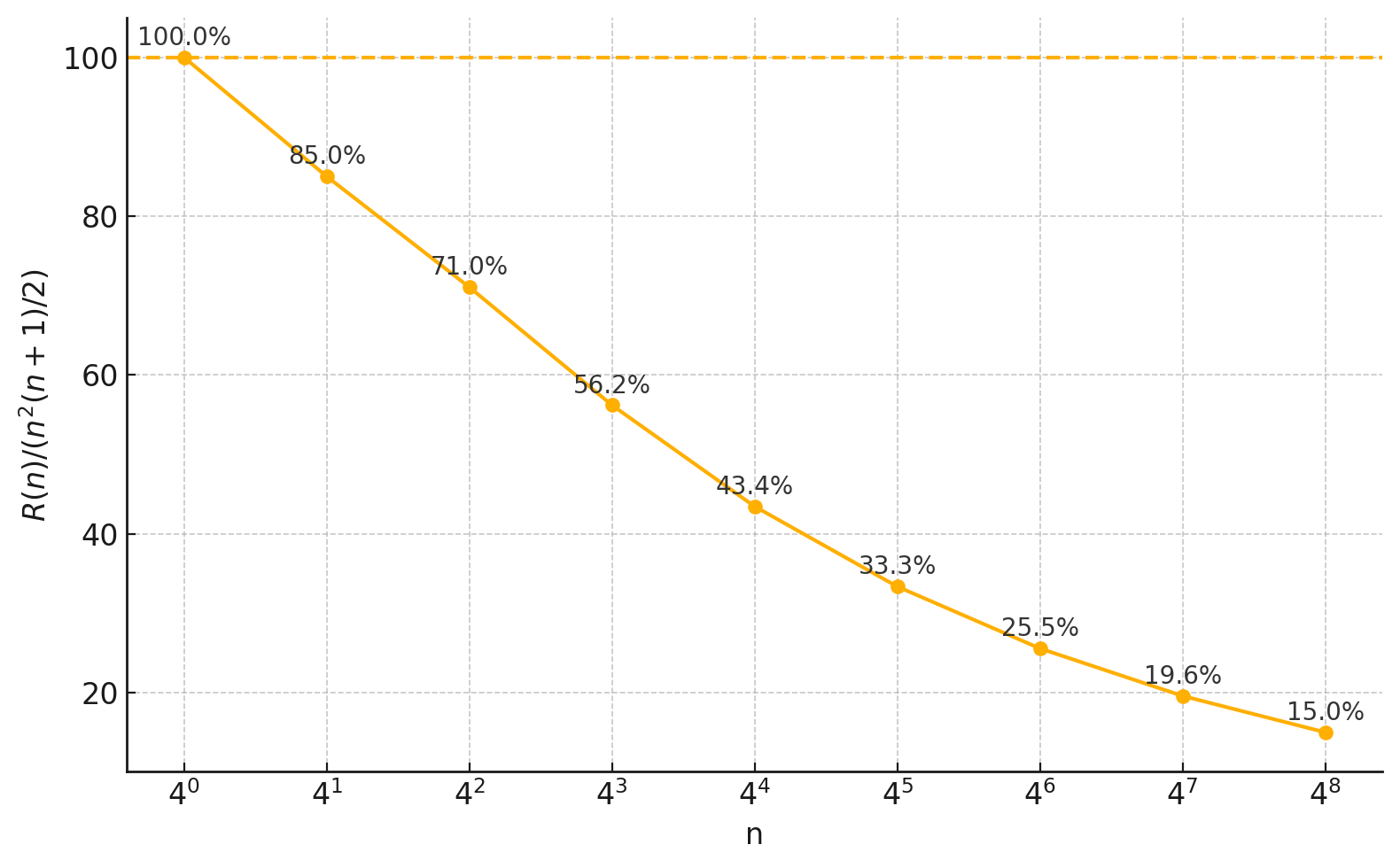

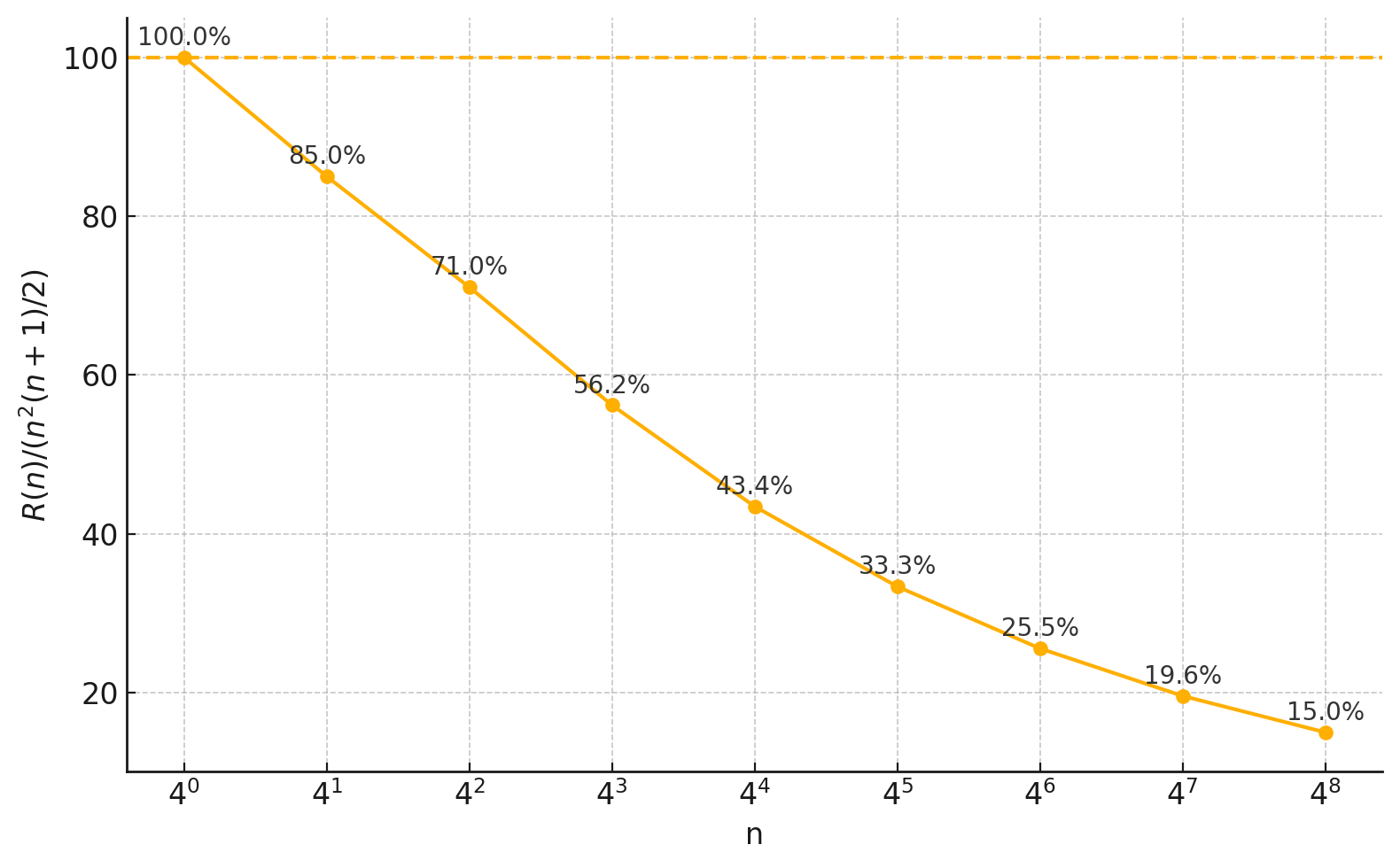

Figure 2: Comparison of number of operations of RXTX to recursive Strassen and naive algorithm. RXTX outperforms recursive Strassen for $n \geq 256$ and the naive algorithm for $n \geq 1024$.

<h2 class='paper-heading' id='performance-and-efficiency-gains'>Performance and Efficiency Gains</h2>

<p>RXTX's real-world performance was validated through a series of computational experiments simulating large dense matrices with random normal entries. These tests demonstrated RXTX's capability to outperform traditional matrix multiplication routines available in standard linear algebra libraries like BLAS:</p>

<ul>

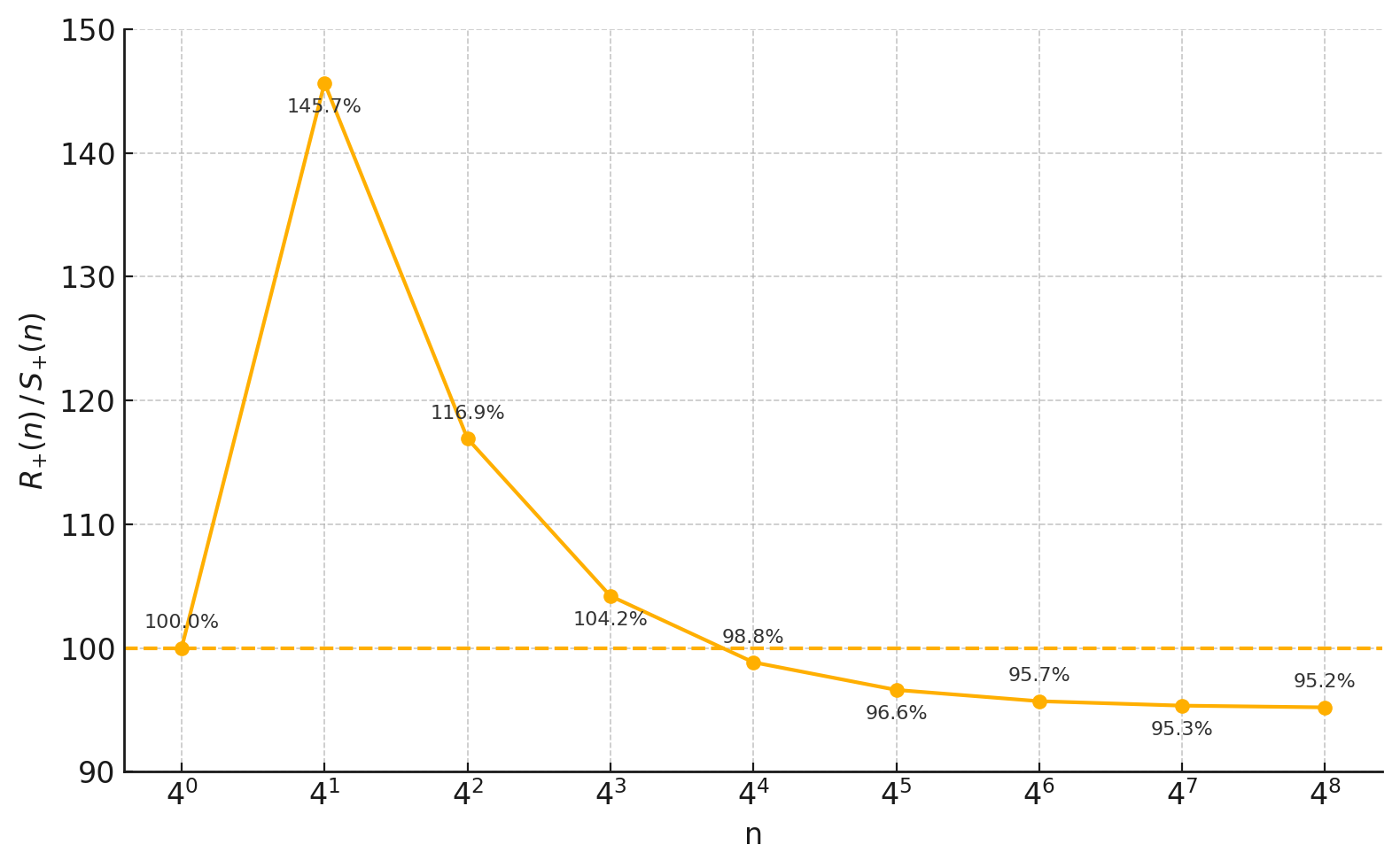

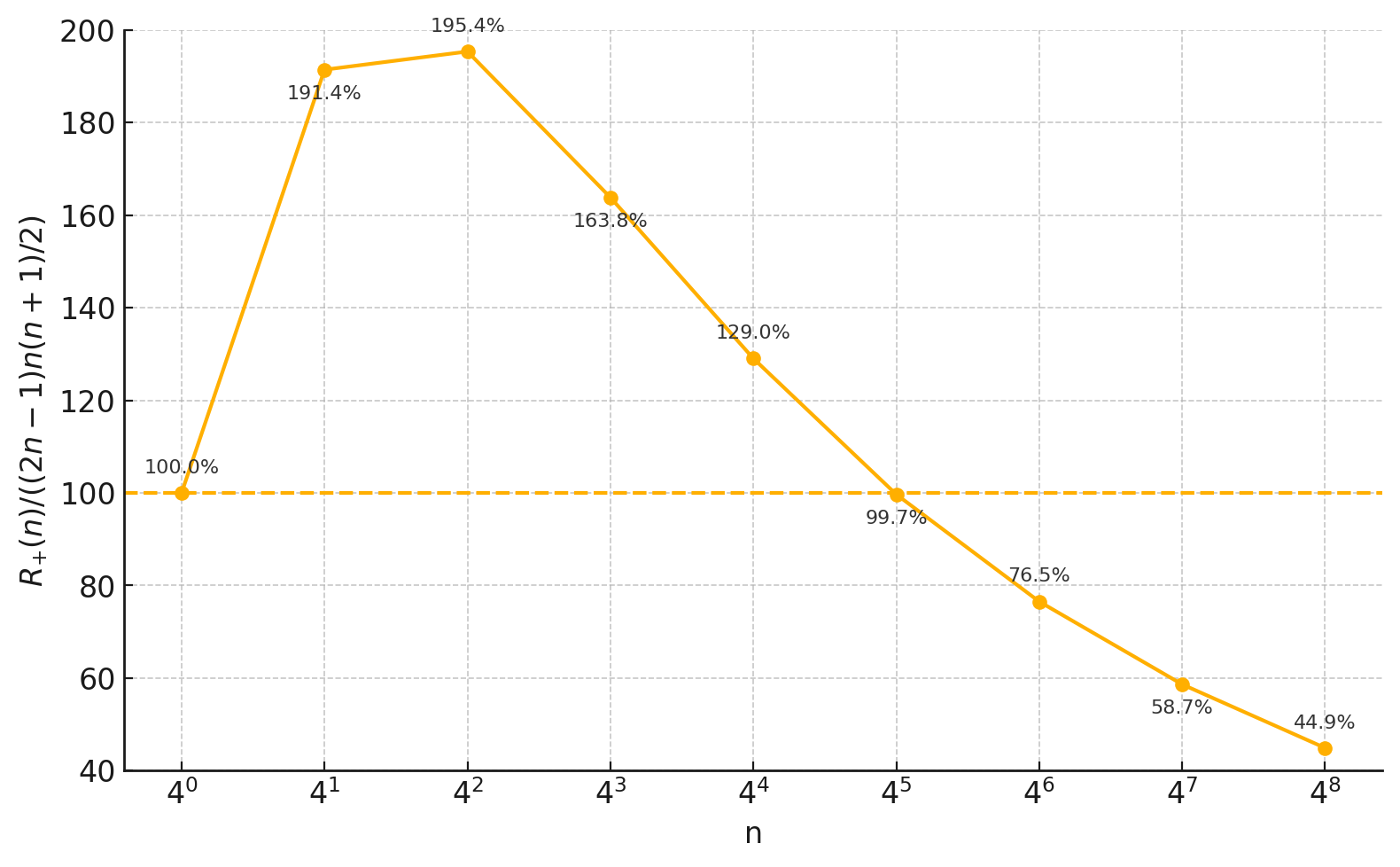

<li><strong>Speedup</strong>: Empirical results suggest an average runtime acceleration of 9% for RXTX, outperforming the reference BLAS routines used for direct $XX^{t}computation.</li><li><strong>Consistency</strong>:RXTXwasfasterin99<imgsrc="https://emergentmind−storage−cdn−c7atfsgud9cecchk.z01.azurefd.net/paper−images/2505−09814/rxtx2509.png"alt="Figure3"title=""class="markdown−image"loading="lazy"><pclass="figure−caption">Figure3:TheaverageruntimeforRXTXis2.524s,whichis9</ul><h2class=′paper−heading′id=′discovery−methodology′>DiscoveryMethodology</h2><p>RXTXwasdiscoveredusingasophisticatedRL−guidedLargeNeighborhoodSearch(LNS),augmentedbyMixedIntegerLinearProgramming(MILP)strategies:</p><ul><li><strong>RL−guidedLNS</strong>:Utilizing<ahref="https://www.emergentmind.com/topics/reinforcement−learning−rl"title=""rel="nofollow"data−turbo="false"class="assistant−link"x−datax−tooltip.raw="">reinforcementlearning</a>agentstoproposerank−1bilinearproductsets.ThesecandidatesetsarerefinedthroughMILP−basedexhaustiveenumerationtoidentifycompactcombinationsachievingthetargetexpressions.</li><li><strong>MILPPipeline</strong>:Two−tierMILPoptimizesthesubsetselectionensuringcomprehensivecoverageofXX^{t}targetexpressions,streamliningthealgorithmtoitsmostefficientform.</li></ul><p>ThisapproachparallelssimplifiedstrategiesoftheAlphaTensorRLframeworkbutisuniquelytailoredtotargettensorproductsforstructuredmatrixoperations.</p><h2class=′paper−heading′id=′conclusion′>Conclusion</h2><p>TheRXTXalgorithmembodiesasignificantadvancementinefficientmatrixmultiplication,particularlyforcomputationsinvolvingXX^{t}$. Its AI-assisted discovery process and the subsequent computational gains underscore the pivotal role of modern algorithms in enhancing linear algebra operations. The indicating performance metrics set a new benchmark within the domain, inviting further exploration and potential extension of RXTX to other structured matrix scenarios.