- The paper presents ThreatLens, a framework that automates threat modeling and test plan generation for hardware security verification using LLMs.

- It employs a multi-agent design with retrieval-augmented generation to extract security policies and generate structured test plans, demonstrated on the NEORV32 SoC.

- The framework aims to improve scalability and reduce manual errors; its reliance on closed-source models is a limitation that future work could address.

ThreatLens: LLM-guided Threat Modeling and Test Plan Generation for Hardware Security Verification

The paper introduces ThreatLens, a framework that automates threat modeling and test plan generation for hardware security verification using LLMs. It targets the limitations of manual security verification, which is labor-intensive, error-prone, and scales poorly with the complexity of modern SoCs.

Problem Context

The growing complexity of hardware designs and the increasing reliance on third-party IP call for robust security verification. Traditional methods depend on extensive manual effort that is time-consuming and susceptible to human error. LLMs, now widely adopted across domains, offer a way to automate parts of this work through natural language understanding, with the goal of more comprehensive and scalable security verification.

The ThreatLens Framework

Framework Overview

ThreatLens is a multi-agent framework. Its specialized agents interact with verification engineers, extract relevant security knowledge through a retrieval-augmented generation (RAG) system, and produce comprehensive test plans.

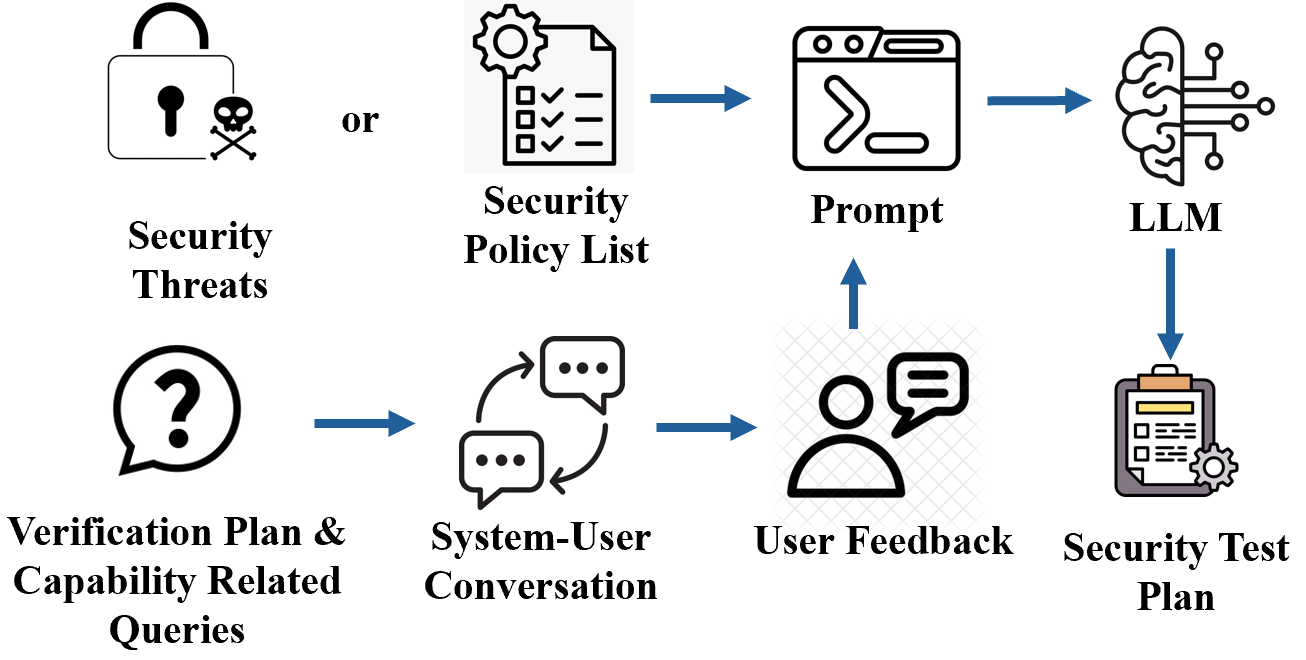

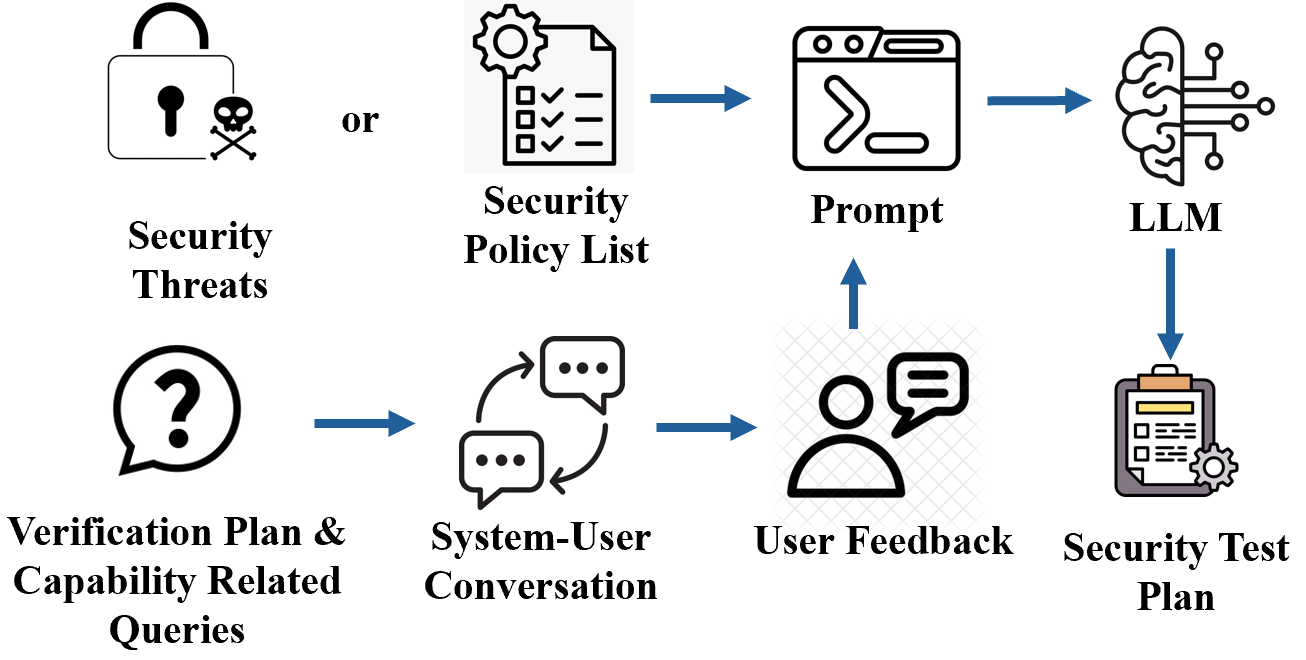

Figure 1: Overview of proposed ThreatLens framework. The blue arrows indicate the flow for test plan generation for physical and supply chain attacks and the orange arrows indicate the flow for that of software-exploitable hardware vulnerabilities.
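The two flows in Figure 1 can be read as a routing decision per threat. The sketch below is only an illustration of that idea, assuming that software-exploitable threats pass through policy generation before test planning while physical and supply chain threats go to test planning directly. The class, constants, and function names are hypothetical, and the agent functions are stubs standing in for LLM calls.

```python
from dataclasses import dataclass, field

# Hypothetical category labels, named after the two flows in Figure 1.
PHYSICAL_SUPPLY_CHAIN = "physical_or_supply_chain"
SOFTWARE_EXPLOITABLE = "software_exploitable"


@dataclass
class Threat:
    name: str
    category: str
    policies: list = field(default_factory=list)


def generate_policies(threat):
    # Stub for the Security Policy Generator Agent (an LLM + RAG step in the paper).
    return [f"policy placeholder for {threat.name}"]


def generate_test_plan(threat):
    # Stub for the Test Plan Generator Agent.
    return {
        "threat": threat.name,
        "category": threat.category,
        "policies": list(threat.policies),
    }


def run_pipeline(threats):
    plans = []
    for threat in threats:
        # Assumed routing: only software-exploitable threats get policies first.
        if threat.category == SOFTWARE_EXPLOITABLE:
            threat.policies = generate_policies(threat)
        plans.append(generate_test_plan(threat))
    return plans
```

Calling `run_pipeline` with one threat of each category yields two plan entries, and only the software-exploitable one carries policies.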

Threat Identification Agent

This agent combines RAG-based retrieval with LLM reasoning in a systematic, four-step process. It begins by extracting security knowledge from a predefined database, then generates interactive queries to gather feedback from engineers. The LLM then evaluates the candidate threats and produces a refined list for consideration.

Figure 2: Overview of Threat Identification Agent.
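The retrieval step can be illustrated with a toy example. The sketch below is not the paper's implementation: it replaces learned embeddings and a vector index with bag-of-words cosine similarity from the standard library, and the knowledge base entries are made up for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    # Lowercase and split into alphanumeric tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    # Cosine similarity between two token-count vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, knowledge_base, k=2):
    # Return up to k entries with non-zero similarity, best match first.
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(doc))), doc) for doc in knowledge_base]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


# Illustrative entries only; not taken from the paper's database.
KNOWLEDGE_BASE = [
    "Fault injection via voltage glitching can bypass secure boot checks.",
    "Hardware Trojans may be inserted through untrusted third-party IP.",
    "Side-channel power analysis can leak cryptographic keys.",
]

hits = retrieve("untrusted third-party IP in the supply chain", KNOWLEDGE_BASE)
```

In a real system the retrieved entries would be passed to the LLM as context for query generation and threat evaluation; here `hits` simply holds the best-matching entries.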

Security Policy Generator Agent

Focused on identifying software-exploitable vulnerabilities, this agent extracts design-specific security policies using RAG mechanisms. The policies are then analyzed for their relevance to a pre-identified threat list derived from both specification documents and ISA guidelines.

Figure 3: Overview of Security Policy Generator Agent.
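One way to picture the relevance analysis is as a prompt that asks an LLM which threats a given policy addresses, followed by parsing of the reply. The sketch below assumes that shape; the prompt wording, the reply format, and all function names are hypothetical, and `llm` is any callable mapping a prompt string to a reply string, so no specific model API is implied.

```python
from typing import Callable, List


def build_prompt(policy: str, threats: List[str]) -> str:
    # Number the threats so the reply can refer to them by index.
    numbered = "\n".join(f"{i}. {t}" for i, t in enumerate(threats, start=1))
    return (
        "Security policy:\n"
        f"{policy}\n\n"
        "Threats:\n"
        f"{numbered}\n\n"
        "Reply with the numbers of the threats this policy addresses, "
        "comma-separated, or NONE."
    )


def parse_reply(reply: str, threats: List[str]) -> List[str]:
    # Keep only valid, in-range threat numbers; ignore anything else.
    picked = []
    for part in reply.replace("\n", ",").split(","):
        part = part.strip().rstrip(".")
        if part.isdecimal() and 1 <= int(part) <= len(threats):
            threat = threats[int(part) - 1]
            if threat not in picked:
                picked.append(threat)
    return picked


def relevant_threats(policy: str, threats: List[str],
                     llm: Callable[[str], str]) -> List[str]:
    return parse_reply(llm(build_prompt(policy, threats)), threats)
```

Parsing defensively matters here because model replies may contain out-of-range numbers or stray text; a policy for which no valid number is returned is treated as not relevant to any listed threat.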

Test Plan Generator Agent

Test plan generation builds on the previously identified threats and policies. This agent works with engineers to identify feasible verification strategies and constraints, then drafts a structured test plan that details test methodologies, expected results, and evaluation criteria.

Figure 4: Overview of Test Plan Generator Agent.
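A structured test plan of this kind can be represented as plain data. The following is a minimal sketch, assuming the plan records the three fields named above per test and that the engineer's constraints are expressed as a list of feasible methods; the class and field names are hypothetical and not taken from the paper.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class PlannedTest:
    threat: str
    methodology: str
    expected_result: str
    evaluation_criteria: str


@dataclass
class VerificationPlan:
    design: str
    feasible_methods: List[str]  # constraints gathered from the engineer
    cases: List[PlannedTest] = field(default_factory=list)

    def add(self, case: PlannedTest) -> bool:
        # Reject tests whose methodology the engineer marked as infeasible.
        if case.methodology not in self.feasible_methods:
            return False
        self.cases.append(case)
        return True

    def to_json(self) -> str:
        # Serialize the whole plan, including nested test cases.
        return json.dumps(asdict(self), indent=2)
```

Keeping the plan as data rather than free text makes it straightforward to filter by feasibility, review individual entries, or hand the result to downstream tooling.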

Output and Application

Applied to the NEORV32 SoC, ThreatLens generated 854 unique security policies for use in the SoC's security verification. Case studies illustrate the practical utility of its outputs, showing the consequences of not following the framework's recommendations and the value of automated threat identification in addressing real-world security vulnerabilities.

Experimental Results

The framework was evaluated on the NEORV32 SoC, where it extracted an extensive set of security policies and generated a structured test plan. The evaluation illustrates, through realistic scenarios, its potential to automate laborious verification tasks. Built on retrieval and inference tooling such as LangChain and FAISS, ThreatLens is presented as improving security assurance through methodical test case generation.

Conclusion

ThreatLens represents a step toward automated, scalable hardware security verification powered by LLMs. The framework has limitations, including reliance on closed-source models such as GPT-4o and only partial automation of the threat modeling process. Future work could integrate open-source LLMs and more comprehensive security asset extraction to refine threat assessment, broadening applicability and improving efficiency in hardware security verification.