Generative AI and LLMs for Cyber Security

Introduction

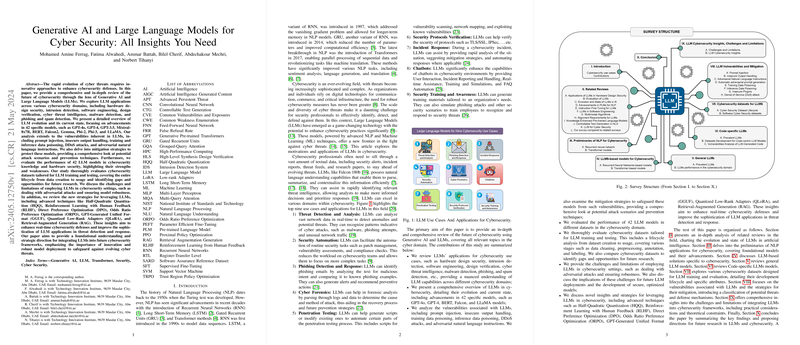

The rapid evolution of cyber threats necessitates innovative approaches to bolster cybersecurity defenses. A paper explores the transformative potential of Generative AI and LLMs within the cybersecurity landscape. Covering applications across various domains, from hardware design security to phishing detection, the paper sheds light on how LLMs can significantly enhance threat prevention and response capabilities.

LLM Applications in Cyber Security

LLMs have versatile applications in cybersecurity. Here are some notable use cases:

- Threat Detection and Analysis: LLMs can analyze vast amounts of network data in real time, identifying anomalies and potential threats such as malware and phishing attempts.

- Security Automation: LLMs can automate routine security tasks like patch management and vulnerability assessments, freeing up cybersecurity teams to focus on more complex issues.

- Phishing Detection and Response: By analyzing text for malicious intent, LLMs can identify phishing emails and recommend preventive actions.

- Cyber Forensics: LLMs can assist in forensic analysis by parsing logs and data to pinpoint the cause and method of attacks.

- Penetration Testing: LLMs can generate or modify scripts to automate aspects of penetration testing, such as vulnerability scanning and network mapping.

- Incident Response: During cybersecurity incidents, LLMs can provide rapid analysis, suggest mitigation strategies, and automate responses.

- Security Training and Awareness: LLMs can generate tailored training materials and simulate security scenarios to train employees in recognizing and responding to threats.

Evolution and Current State of LLMs in Cyber Security

The paper offers a thorough overview of the evolution of LLMs, highlighting advancements in models like GPT-4, GPT-3.5, BERT, and others. These models have shown significant improvements in understanding and generating human-like text, making them valuable tools in the cybersecurity domain. However, the effective integration of LLMs into cybersecurity strategies requires understanding their strengths and limitations.

Vulnerabilities and Mitigation Strategies

While LLMs offer powerful capabilities, they are not immune to vulnerabilities such as:

- Prompt Injection: Crafting inputs that manipulate LLM outputs.

- Insecure Output Handling: Blindly trusting LLM outputs, leading to security risks like Cross-Site Scripting (XSS) and remote code execution.

- Training Data Poisoning: Manipulating training data to skew LLM learning.

- Adversarial Instructions: Crafting natural language instructions that introduce hidden vulnerabilities.

The paper discusses several mitigation strategies to address these vulnerabilities, including:

- Implementing robust validation and sanitization for LLM outputs.

- Employing advanced code validation and training with adversarial examples.

- Continuous monitoring to detect and neutralize potential attacks.

Performance Evaluation

The paper evaluates the performance of 40 LLM models in cybersecurity knowledge and hardware security, highlighting their various strengths and weaknesses. Additionally, the paper thoroughly assesses cybersecurity datasets used for LLM training and testing, identifying opportunities for future research.

Challenges and Future Directions

Deploying LLMs in cybersecurity comes with challenges like:

- Defending against sophisticated adversarial attacks.

- Ensuring model robustness and reliability.

- Adapting to the ever-evolving landscape of cyber threats.

The paper suggests employing advanced techniques like Reinforcement Learning with Human Feedback (RLHF) and Retrieval-Augmented Generation (RAG) to enhance the real-time applicability of LLMs in cybersecurity. These methods aim to improve the models' ability to respond to complex and dynamic threats effectively.

Conclusion

The paper underscores the significant potential of LLMs to transform cybersecurity practices by integrating innovative AI models into defense strategies. While challenges remain, the strides in LLM capabilities point towards a future where cybersecurity measures are more robust, sophisticated, and adaptive to evolving threats. This foundational understanding provides a strategic roadmap for integrating LLMs into the cybersecurity frameworks, emphasizing continuous innovation and resilience.