- The paper introduces a hybrid RL and beam search approach that achieves 5%-85% improvements in key performance metrics without needing GPU retraining.

- It leverages beam search to prune the state space and manage routing congestion in analog IC design efficiently.

- Experimental results demonstrate that the BS-RL framework outperforms simulated annealing and traditional RL techniques in layout optimization.

Enhancing Reinforcement Learning for the Floorplanning of Analog ICs with Beam Search

Introduction

The paper introduces a hybrid method that combines Reinforcement Learning (RL) with a Beam Search (BS) strategy to improve the automated floorplanning of analog integrated circuits (ICs). Learning-based solutions struggle with the complex trade-offs involved in analog IC layout, largely because of intricate device physics and circuit variability. RL has recently made progress on floorplanning problems, but existing approaches still depend on policy retraining and fine-tuning. The paper addresses this dependence by adding a beam search stage on top of the RL agent.

Problem Definition

The floorplanning problem is formulated as an optimization problem: assign coordinates to a set of rectangular modules on a chip so that no two modules overlap and a cost metric is minimized. This metric is a weighted sum of total area, proxy wire length, and target aspect ratio, so users can adjust the weights to steer the output layouts toward their specific needs. The authors also introduce a congestion management procedure based on a previously proposed strategy [lai_maskplace_2022], which produces more routing-friendly floorplans by ensuring that congestion thresholds are not exceeded.
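To make the weighted-sum objective concrete, the following is a minimal Python sketch. It rests on assumptions that are not stated in this summary: half-perimeter wire length (HPWL) between module centers is used as the wire-length proxy, the aspect-ratio term is the absolute deviation from a target ratio, and the names `Module`, `cost`, `w_area`, `w_wire`, and `w_ratio` are hypothetical. The paper's actual cost function may differ in form and normalization.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Module:
    """Axis-aligned rectangle; (x, y) is the lower-left corner."""
    x: float
    y: float
    w: float
    h: float


def overlaps(a: Module, b: Module) -> bool:
    """True if the interiors of a and b intersect (touching edges are allowed)."""
    return (a.x < b.x + b.w and b.x < a.x + a.w and
            a.y < b.y + b.h and b.y < a.y + a.h)


def hpwl(modules: Sequence[Module], nets: Sequence[Sequence[int]]) -> float:
    """Half-perimeter wire length over nets, measured between module centers."""
    total = 0.0
    for net in nets:
        xs = [modules[i].x + modules[i].w / 2 for i in net]
        ys = [modules[i].y + modules[i].h / 2 for i in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


def cost(modules: Sequence[Module], nets: Sequence[Sequence[int]],
         target_ratio: float = 1.0,
         w_area: float = 1.0, w_wire: float = 1.0, w_ratio: float = 1.0) -> float:
    """Weighted sum of bounding-box area, HPWL, and aspect-ratio deviation.

    The weights and the form of the ratio penalty are illustrative assumptions.
    """
    width = max(m.x + m.w for m in modules) - min(m.x for m in modules)
    height = max(m.y + m.h for m in modules) - min(m.y for m in modules)
    ratio_penalty = abs(width / height - target_ratio)
    return w_area * width * height + w_wire * hpwl(modules, nets) + w_ratio * ratio_penalty


# Two 2x2 modules placed side by side and connected by one net.
placement: List[Module] = [Module(0, 0, 2, 2), Module(2, 0, 2, 2)]
nets = [[0, 1]]
total_cost = cost(placement, nets)
```

In this toy placement the bounding box is 4 by 2, so the area term is 8, the HPWL term is 2, and the ratio penalty against a square target is 1. Changing the weights shifts the balance between compactness, wiring, and shape.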

Beam Search and Reinforcement Learning: Methodology

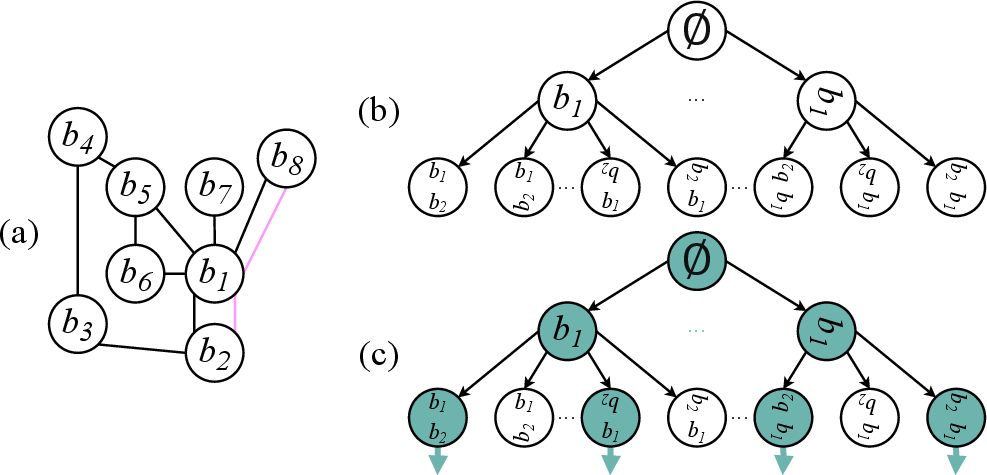

Beam Search

Beam Search is a heuristic search algorithm that incrementally explores a search graph while retaining, at each level, only the most promising paths, up to a predefined beam width (β). Because the number of retained paths is bounded by β, memory use and runtime per level stay bounded as well. The solutions are not guaranteed to be optimal, but in practice they are typically better than those found by greedy methods.
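A generic beam search can be sketched in a few lines of Python. This is a textbook-style illustration, not the authors' implementation; the function names (`beam_search`, `expand`, `score`, `is_terminal`) and the toy scoring table are assumptions made for the example. Lower scores are treated as better.

```python
import heapq
from typing import Callable, List, TypeVar

S = TypeVar("S")


def beam_search(start: S,
                expand: Callable[[S], List[S]],
                score: Callable[[S], float],
                is_terminal: Callable[[S], bool],
                beam_width: int) -> S:
    """Keep only the `beam_width` lowest-scoring states at every level.

    `expand` must return at least one successor for each non-terminal state.
    """
    beam = [start]
    while not all(is_terminal(s) for s in beam):
        candidates: List[S] = []
        for s in beam:
            # Terminal states are carried over unchanged; others are expanded.
            candidates.extend([s] if is_terminal(s) else expand(s))
        beam = heapq.nsmallest(beam_width, candidates, key=score)
    return min(beam, key=score)


# Toy two-level binary tree in which the locally best first move is a trap.
SCORES = {(0,): 1, (1,): 2, (0, 0): 10, (0, 1): 9, (1, 0): 3, (1, 1): 8}


def expand(s):
    return [s + (0,), s + (1,)]


def score(s):
    return SCORES[s]


def is_terminal(s):
    return len(s) == 2


greedy = beam_search((), expand, score, is_terminal, beam_width=1)
wider = beam_search((), expand, score, is_terminal, beam_width=2)
```

With a beam width of 1 the search behaves greedily and commits to the branch that looks best after one move, ending at a score of 9. With a beam width of 2 it keeps both first moves alive and finds the leaf with score 3.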

Reinforcement Learning

The baseline RL agent used is derived from previous implementations [basso_effective_2025]. The floorplanning task is framed as a Markov Decision Process, with the RL agent iteratively optimizing the solution through sequential decision-making. Unlike conventional RL methods relying extensively on policy fine-tuning, the proposed framework allows for enhanced state exploration without necessitating GPU-based retraining.

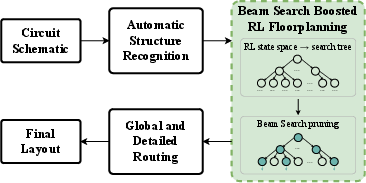

Hybrid BS-RL Approach

The hybrid Beam Search-Reinforcement Learning (BS-RL) methodology builds a state-space tree in which beam search periodically prunes inferior states. The RL agent maximizes short-term rewards as it expands the tree, while the pruning step steers the search toward states that optimize the long-term cost function.

Figure 1: High-level schematic of the automated layout pipeline presented in [basso_effective_2025].

Through the use of BS, the resulting framework achieves a marked improvement in computational efficiency and layout optimization compared to traditional reinforcement learning strategies and established metaheuristics.
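The interplay between a fixed policy and periodic pruning can be illustrated with a small Python sketch. It is only a schematic reading of the description above and should not be taken as the authors' algorithm: the policy is a hard-coded stub rather than a trained agent, each state is expanded along its most probable actions, and the names `policy_guided_beam_search`, `branch`, and `prune_every` are hypothetical. They are not meant to correspond to the parameters listed in the figure captions.

```python
import heapq
from typing import Callable, List, Sequence, Tuple, TypeVar

S = TypeVar("S")
A = TypeVar("A")


def policy_guided_beam_search(
        start: S,
        policy: Callable[[S], Tuple[Sequence[A], Sequence[float]]],
        step: Callable[[S, A], S],
        cost: Callable[[S], float],
        is_done: Callable[[S], bool],
        beam_width: int,
        prune_every: int,
        branch: int) -> S:
    """Expand states along the policy's top actions; prune every few levels."""
    frontier: List[S] = [start]
    level = 0
    while not all(is_done(s) for s in frontier):
        children: List[S] = []
        for s in frontier:
            if is_done(s):
                children.append(s)
                continue
            actions, probs = policy(s)
            # Follow the `branch` most probable actions of the frozen policy.
            order = sorted(range(len(actions)), key=lambda i: -probs[i])[:branch]
            children.extend(step(s, actions[i]) for i in order)
        level += 1
        if level % prune_every == 0:
            # Periodic pruning: keep only the lowest-cost states.
            children = heapq.nsmallest(beam_width, children, key=cost)
        frontier = children
    return min(frontier, key=cost)


# Toy problem: two binary decisions; the stub policy slightly prefers action 0.
COST = {(0, 0): 10.0, (0, 1): 9.0, (1, 0): 3.0, (1, 1): 8.0}


def policy(state):
    return [0, 1], [0.6, 0.4]


def step(state, action):
    return state + (action,)


def cost(state):
    return COST[state]


def is_done(state):
    return len(state) == 2


policy_only = policy_guided_beam_search((), policy, step, cost, is_done,
                                        beam_width=1, prune_every=2, branch=1)
with_search = policy_guided_beam_search((), policy, step, cost, is_done,
                                        beam_width=1, prune_every=2, branch=2)
```

Following the policy alone (`branch=1`) reaches the state with cost 10, whereas exploring two actions per state and pruning on cost finds the state with cost 3. No policy weights are updated at any point, which mirrors the claim that the search improves results without retraining.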

Experimental Results

Comprehensive experimental evaluations, benchmarked against standard metaheuristics such as Simulated Annealing and existing RL-based approaches, demonstrate the enhanced capability of the BS-RL framework. Significant improvements in key metrics such as area utilization, dead space reduction, half-perimeter wire length, and reward scores underscore the approach's efficacy. Notably, the BS-RL method does not require expensive GPU resources, maintaining runtime efficiency on CPU platforms.
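Two of the metrics named above can be computed directly from module rectangles. The sketch below uses the common floorplanning definitions, with area utilization as total module area divided by bounding-box area and dead space as the remaining fraction. It assumes non-overlapping modules; the paper's exact definitions may differ, and the function names are hypothetical.

```python
from typing import Sequence, Tuple

Rect = Tuple[float, float, float, float]  # (x, y, width, height), lower-left origin


def bounding_area(rects: Sequence[Rect]) -> float:
    """Area of the smallest axis-aligned box enclosing all rectangles."""
    x0 = min(x for x, _, _, _ in rects)
    y0 = min(y for _, y, _, _ in rects)
    x1 = max(x + w for x, _, w, _ in rects)
    y1 = max(y + h for _, y, _, h in rects)
    return (x1 - x0) * (y1 - y0)


def area_utilization(rects: Sequence[Rect]) -> float:
    """Fraction of the bounding box covered by modules (assumes no overlaps)."""
    return sum(w * h for _, _, w, h in rects) / bounding_area(rects)


def dead_space(rects: Sequence[Rect]) -> float:
    """Fraction of the bounding box left empty."""
    return 1.0 - area_utilization(rects)


# A 2x2 module next to a 1x1 module: bounding box 3x2, module area 5.
layout = [(0.0, 0.0, 2.0, 2.0), (2.0, 0.0, 1.0, 1.0)]
util = area_utilization(layout)
dead = dead_space(layout)
```

Here five of the six bounding-box units are occupied, so utilization is 5/6 and dead space is 1/6.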

Figure 2: Examples of outputs of BS-RL (k=5, epsilon=0.7, beta=10), applied to the OTA-2 instance.

The experimental results show improvements ranging from 5% to 85% in key performance metrics compared with prior methods. These gains come without sacrificing generalization ability or the handling of the complex constraints inherent in analog IC design.

Conclusion

The research provides a meaningful advancement in the domain of electronic design automation for analog ICs, offering a robust RL-based framework enhanced by beam search. The methodology's ability to retain flexibility in floorplan objectives and manage congestion effectively positions it as a valuable tool for practical applications within the industry, overcoming the limitations of previous solutions which necessitated retraining or fine-tuning. Future work may explore further integration of detailed routing information and feedback from post-layout verifications to iteratively refine placements, advancing toward completely automated, clean layout designs compliant with industry verification standards.