- The paper proposes pooling PCIe devices through CXL memory pools instead of PCIe switches, with the goal of raising device utilization and lowering cost.

- The design places I/O buffers in shared CXL memory, keeps them coherent in software, and signals between hosts over a channel with sub-microsecond latency, which also enables dynamic load balancing.

- CXL pooling reduces stranded I/O resources, and the paper suggests it could help operators remove single points of failure such as top-of-rack switches.

My CXL Pool Obviates Your PCIe Switch

Introduction

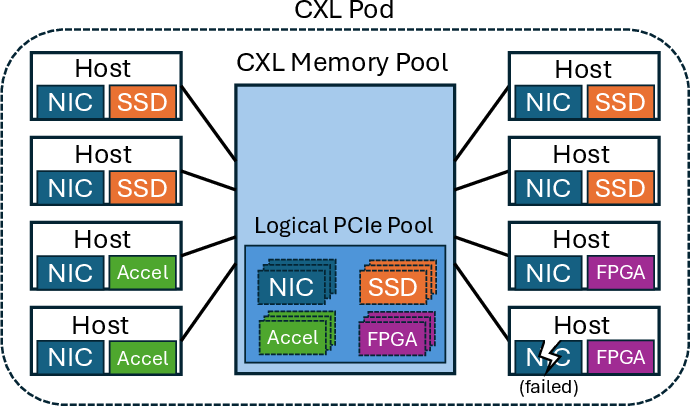

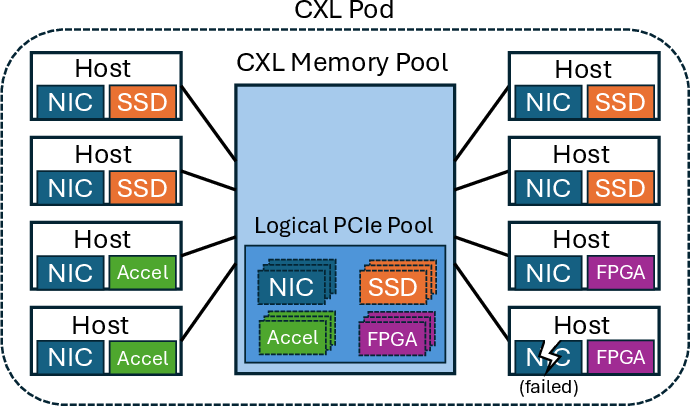

This paper discusses an alternative approach to PCIe device pooling that uses Compute Express Link (CXL) memory pools rather than costly and inflexible PCIe switches. A shared CXL memory pool improves device utilization, reduces stranded I/O resources, limits the impact of device failures, and lowers overall datacenter costs.

Figure 1: A CXL memory pool enables hosts to access any PCIe device in a CXL pod, forming a logical pool of PCIe devices.

Need for PCIe Pooling

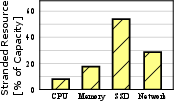

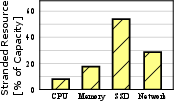

PCIe pooling aims to enhance the utilization of existing resources and improve fault tolerance. Datacenters face challenges such as resource over-provisioning for peak demands, resulting in low utilization of NICs and SSDs. By pooling PCIe resources across multiple hosts, datacenters can potentially decrease resource wastage significantly. In addition, PCIe pooling allows dynamic load balancing and failover capabilities, reducing the redundancy required for high availability and hence the total cost of ownership (TCO).
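The provisioning argument above can be made concrete with a small worked example. The sketch below compares sizing each host for its own peak against sizing one pool for the peak of the combined demand. The demand figures and the function names are invented for illustration and are not taken from the paper.

```python
def provisioned_capacity(per_host_demand, pooled):
    """Return the capacity that must be provisioned for the given demand traces.

    per_host_demand: one list per host, one demand sample per time step.
    pooled=False: every host is sized for its own peak.
    pooled=True:  the pool is sized for the peak of the summed demand.
    """
    if pooled:
        return max(sum(step) for step in zip(*per_host_demand))
    return sum(max(trace) for trace in per_host_demand)


def mean_utilization(per_host_demand, capacity):
    """Average total demand divided by the provisioned capacity."""
    steps = list(zip(*per_host_demand))
    mean_total = sum(sum(step) for step in steps) / len(steps)
    return mean_total / capacity


# Hypothetical NIC bandwidth demand (Gbit/s) for three hosts over four time steps.
# The hosts peak at different times, which is what makes pooling pay off.
demand = [
    [80, 10, 10, 20],
    [10, 90, 20, 10],
    [20, 10, 70, 30],
]

dedicated = provisioned_capacity(demand, pooled=False)
shared = provisioned_capacity(demand, pooled=True)

print(f"dedicated: {dedicated} Gbit/s, utilization {mean_utilization(demand, dedicated):.0%}")
print(f"pooled:    {shared} Gbit/s, utilization {mean_utilization(demand, shared):.0%}")
```

With these made-up traces the pool needs less than half the dedicated capacity because the per-host peaks do not coincide. If all hosts peaked at the same time step, the two numbers would be equal and pooling would save nothing.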

Figure 2: Percentages of stranded CPU cores, memory capacity, SSD storage, and NIC bandwidth in Microsoft Azure datacenters.

CXL as Enabler for PCIe Pooling

CXL memory pools let multiple hosts access and share the same memory. Modern CXL pods can be built without switches, which makes them more cost-effective than traditional PCIe switches, and they allow flexible sharing of any PCIe device because CXL memory can be used directly for I/O buffers. This capability is particularly advantageous for data-intensive applications and supports seamless hardware evolution.

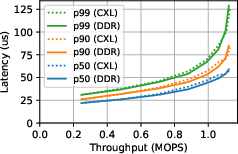

Software Design for PCIe Pooling

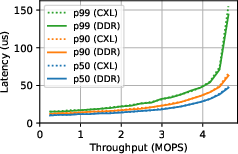

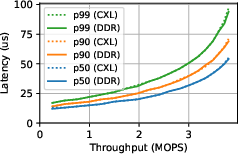

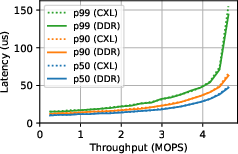

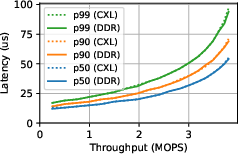

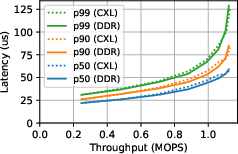

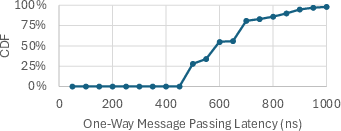

The proposed software design for pooling includes placing I/O buffers in CXL shared memory and ensuring software-managed cache coherence. By allowing PCIe devices to access CXL memory directly, high-speed data paths can be maintained. The design also implements a sub-microsecond latency communication channel across CXL hosts, aiding in efficient event signaling and management of device memory operations.
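As a rough illustration of a cross-host channel with software-managed coherence, the sketch below implements a single-producer, single-consumer ring over a shared byte region. It is a minimal model under stated assumptions, not the paper's implementation: a `bytearray` stands in for CXL shared memory, `flush` and `invalidate` are hypothetical no-op placeholders for the cache write-back and invalidation steps a real host would perform, and the slot layout is invented.

```python
import struct

SLOT_SIZE = 64                 # assumed: one cache line per message slot
NUM_SLOTS = 8                  # assumed ring depth
HEADER = struct.Struct("<QQ")  # head (consumer index), tail (producer index)
REGION_SIZE = HEADER.size + NUM_SLOTS * SLOT_SIZE


def flush(region, offset, length):
    """Hypothetical placeholder: write dirty cache lines back to shared memory."""


def invalidate(region, offset, length):
    """Hypothetical placeholder: drop cached copies so the next read is fresh."""


class Channel:
    """One endpoint of a single-producer, single-consumer ring in `region`."""

    def __init__(self, region):
        self.region = region

    def _indices(self):
        invalidate(self.region, 0, HEADER.size)
        return HEADER.unpack_from(self.region, 0)

    def send(self, payload):
        if len(payload) > SLOT_SIZE - 1:
            raise ValueError("payload does not fit in one slot")
        head, tail = self._indices()
        if tail - head == NUM_SLOTS:
            return False  # ring is full
        off = HEADER.size + (tail % NUM_SLOTS) * SLOT_SIZE
        self.region[off] = len(payload)  # first byte of a slot holds the length
        self.region[off + 1:off + 1 + len(payload)] = payload
        flush(self.region, off, SLOT_SIZE)  # payload must be visible first
        struct.pack_into("<Q", self.region, 8, tail + 1)  # then publish the tail
        flush(self.region, 8, 8)
        return True

    def receive(self):
        head, tail = self._indices()
        if head == tail:
            return None  # ring is empty
        off = HEADER.size + (head % NUM_SLOTS) * SLOT_SIZE
        invalidate(self.region, off, SLOT_SIZE)  # avoid reading a stale slot
        length = self.region[off]
        payload = bytes(self.region[off + 1:off + 1 + length])
        struct.pack_into("<Q", self.region, 0, head + 1)  # release the slot
        flush(self.region, 0, 8)
        return payload


region = bytearray(REGION_SIZE)  # stand-in for a mapped CXL shared-memory range
sender, receiver = Channel(region), Channel(region)
sender.send(b"rx-complete:queue3")
print(receiver.receive())
```

The ordering is the point of the sketch: the producer writes back the slot before it publishes the new tail, and the consumer invalidates before it reads, so neither side acts on stale cached data. A real design would also keep head and tail on separate cache lines and poll or use a notification mechanism, neither of which is modeled here.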

Figure 3: 75 B packets.

Deployment Strategy and Orchestration

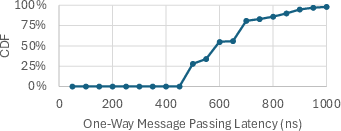

The orchestration component controls device-to-host mapping and dynamically manages resource allocation. It enables load balancing among hosts and mitigates device overloads. This strategy enhances fault tolerance by reallocating failing device workloads to healthy devices, maximizing resource utilization at minimal additional cost.
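The orchestration behavior described above can be sketched as a small in-memory model. This is a hedged illustration only: the `Device` and `Orchestrator` classes, the least-utilized placement policy, and the capacity figures are assumptions made for the example, and the source does not specify the actual policy.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    name: str
    capacity: float                            # e.g. Gbit/s for a NIC (assumed unit)
    healthy: bool = True
    hosts: dict = field(default_factory=dict)  # host name -> assigned load

    @property
    def load(self):
        return sum(self.hosts.values())


class Orchestrator:
    """Hypothetical controller that owns the device-to-host mapping."""

    def __init__(self, devices):
        self.devices = {d.name: d for d in devices}

    def _pick(self, demand):
        # Assumed policy: the healthy device with the lowest utilization that
        # still has room for the requested demand.
        candidates = [
            d for d in self.devices.values()
            if d.healthy and d.load + demand <= d.capacity
        ]
        if not candidates:
            raise RuntimeError("no healthy device has spare capacity")
        return min(candidates, key=lambda d: d.load / d.capacity)

    def assign(self, host, demand):
        device = self._pick(demand)
        device.hosts[host] = demand
        return device.name

    def mapping(self):
        return {h: d.name for d in self.devices.values() for h in d.hosts}

    def fail(self, device_name):
        """Mark a device as failed and move its hosts to healthy devices."""
        failed = self.devices[device_name]
        failed.healthy = False
        displaced, failed.hosts = failed.hosts, {}
        for host, demand in displaced.items():
            self.assign(host, demand)


orch = Orchestrator([Device("nic0", 100), Device("nic1", 100)])
for host, demand in [("host0", 40), ("host1", 40), ("host2", 20)]:
    orch.assign(host, demand)
print(orch.mapping())

orch.fail("nic0")       # workloads on nic0 are reassigned to nic1
print(orch.mapping())
```

Because any host in the pod can reach any device, failover in this model is only a change to the mapping. The spare capacity needed for it is shared across the pool rather than duplicated per host.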

Figure 4: Latency distribution of message passing.

Discussion

Beyond immediate pooling benefits, CXL adoption could reshape datacenter infrastructure. Because CXL pods are highly reliable, operators could potentially eliminate single points of failure such as top-of-rack switches, which would reduce costs. Additionally, soft accelerator disaggregation paired with PCIe pooling could address the problem of accelerators that are essential yet underutilized. This enables flexible load balancing for both compute and storage workloads, increasing efficiency and scalability across distributed systems.

Conclusion

The paper presents a comprehensive analysis of using CXL memory pools as an alternative to PCIe switches for effective and flexible pooling of PCIe devices. CXL technology offers significant opportunities to optimize resource utilization, reduce costs, and enhance the scalability and reliability of datacenter operations. As CXL technology matures, its integration into platform design holds promise for improved datacenter management and operational efficiency.