- The paper presents FACTS&EVIDENCE, an interactive tool that decomposes input text into atomic claims and verifies them using diverse web evidence.

- It employs dense and sparse retrieval methods combined with LLM-based factuality judgments to assign detailed credibility scores.

- Experimental results on the FAVA dataset show an improvement of about 44 F1 points in factual error prediction over prior systems.

FACTS&EVIDENCE: An Interactive Tool for Transparent Fine-Grained Factual Verification of Machine-Generated Text

Introduction

The increasing prevalence of AI-generated text calls for robust factual verification tools that reduce the risk of AI-induced misinformation. The paper introduces "FACTS&EVIDENCE," an interactive tool designed to provide transparent, fine-grained factual verification of machine-generated content. Unlike traditional fact verification approaches, it offers an interactive platform where users can navigate detailed claim breakdowns, each supported by diverse evidence sources and assigned a credibility score.

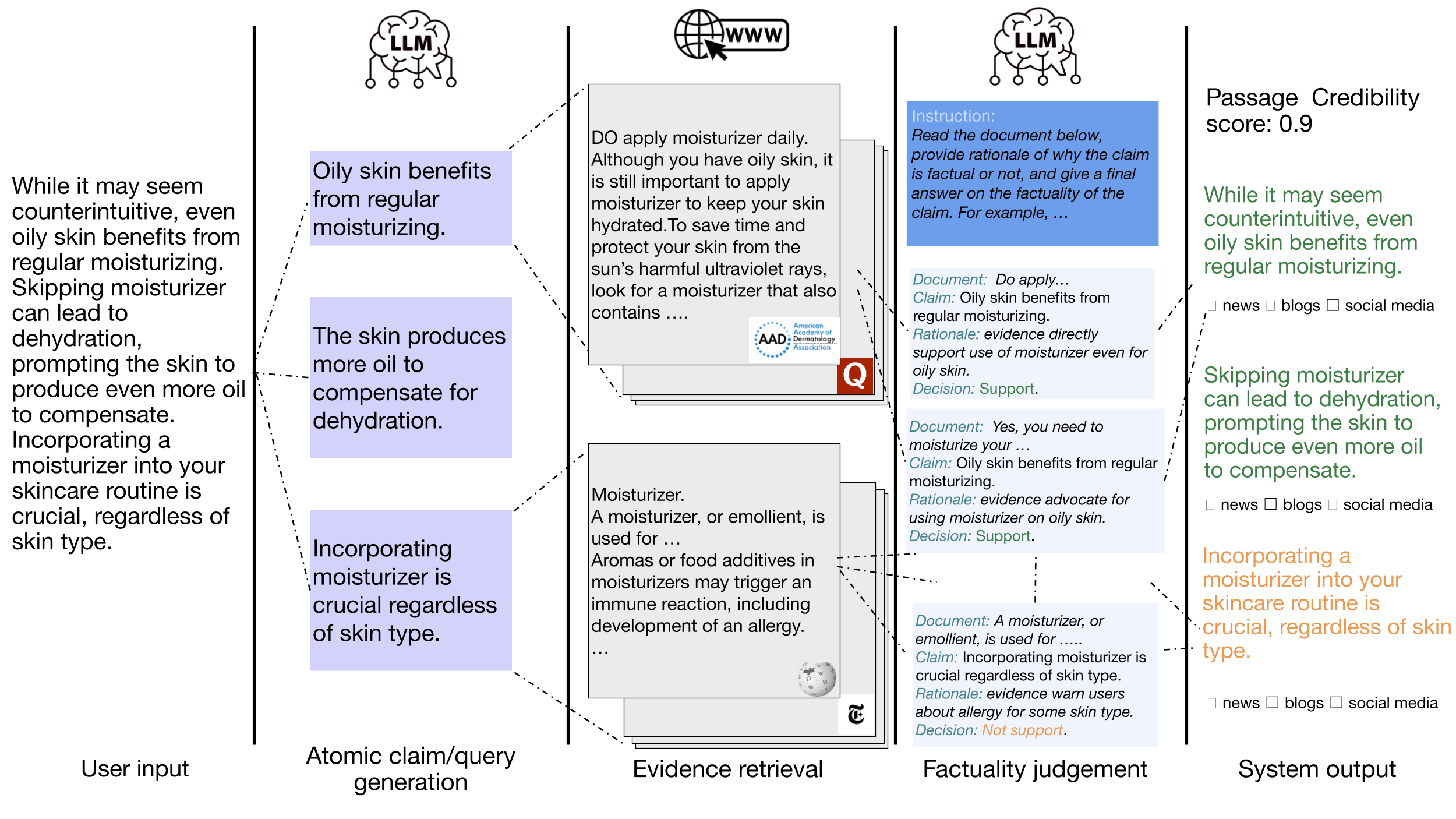

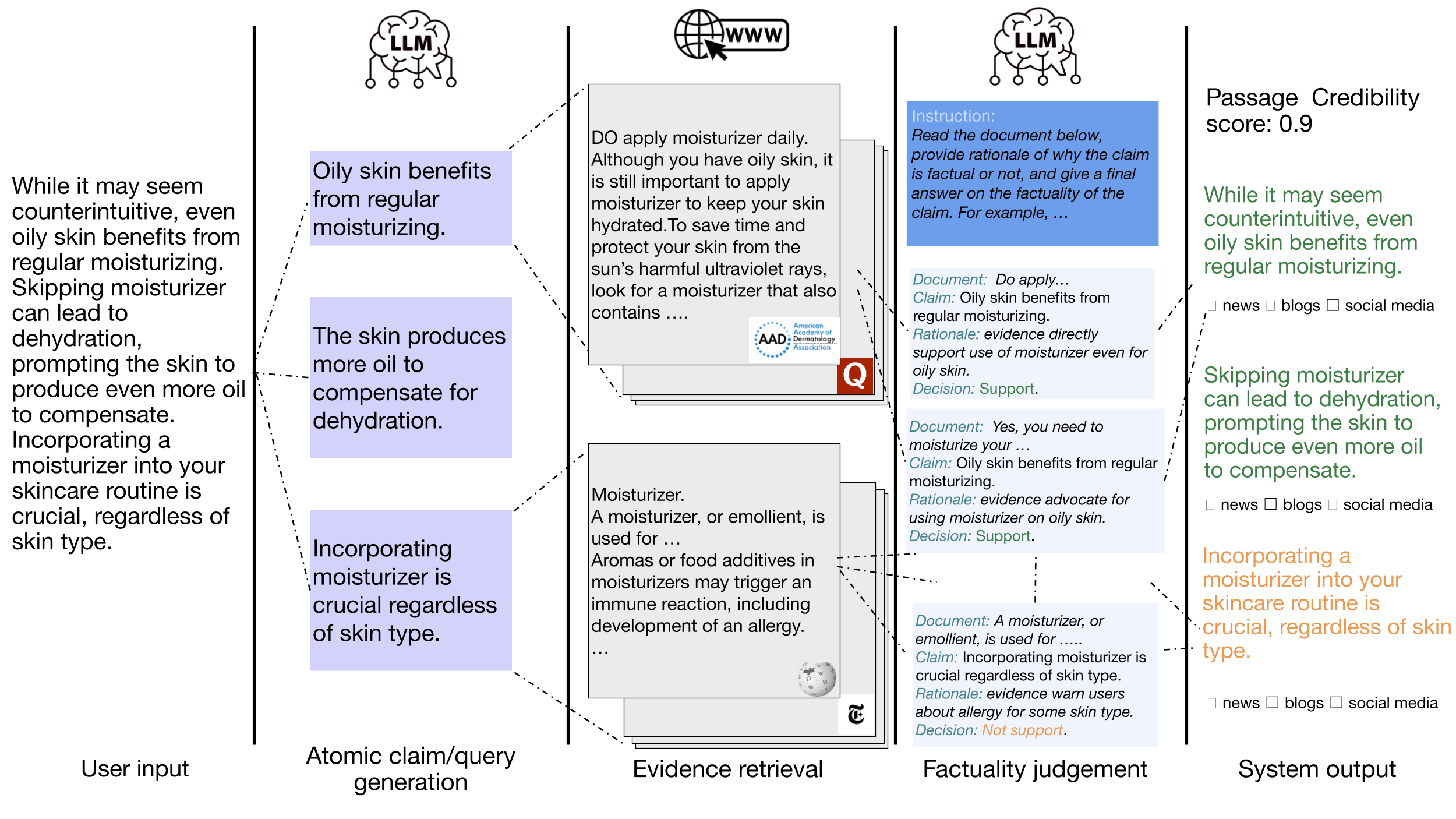

Figure 1: The FACTS pipeline. The user input for verification goes through atomic claim and query generation, evidence retrieval, and factuality judgement.

System Architecture and Workflow

FACTS breaks complex input text down into atomic claims. Each claim is verified independently against multiple pieces of evidence retrieved from the web, which makes the verification finer-grained and more reliable than a single judgement over the whole text.
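The workflow described above can be sketched as a small pipeline. This is a minimal illustration, not the tool's actual implementation: the names `Judgement`, `ClaimResult`, `verify_text`, and the `toy_*` helpers are hypothetical, the three stages are stubbed with trivial stand-ins for the LLM and web search calls, and the credibility score is assumed to be the share of evidence pieces that support a claim.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Judgement:
    evidence: str
    supports: bool
    rationale: str


@dataclass
class ClaimResult:
    claim: str
    judgements: List[Judgement]

    @property
    def credibility(self) -> float:
        # Assumed scoring rule: share of evidence pieces that support the claim.
        if not self.judgements:
            return 0.0
        return sum(j.supports for j in self.judgements) / len(self.judgements)


def verify_text(
    text: str,
    decompose: Callable[[str], List[str]],
    retrieve: Callable[[str], List[str]],
    judge: Callable[[str, str], Judgement],
) -> List[ClaimResult]:
    """Decompose text into claims, then judge each claim against each evidence piece."""
    results = []
    for claim in decompose(text):
        judgements = [judge(claim, evidence) for evidence in retrieve(claim)]
        results.append(ClaimResult(claim, judgements))
    return results


# Toy stand-ins for the LLM and web search components (hypothetical).
def toy_decompose(text: str) -> List[str]:
    # Treat each sentence as one atomic claim.
    return [s.strip() for s in text.split(".") if s.strip()]


def toy_retrieve(claim: str) -> List[str]:
    # Return the same fixed evidence for every claim.
    return [
        "Paris is the capital of France.",
        "The capital city of France is Paris.",
    ]


def toy_judge(claim: str, evidence: str) -> Judgement:
    # Crude rule: evidence supports the claim if it mentions the claim's first word.
    subject = claim.split()[0]
    supports = subject in evidence
    verdict = "mentions" if supports else "does not mention"
    return Judgement(evidence, supports, f"Evidence {verdict} '{subject}'.")


results = verify_text(
    "Paris is the capital of France. Lyon is the capital of France.",
    toy_decompose,
    toy_retrieve,
    toy_judge,
)
for r in results:
    print(f"{r.credibility:.2f}  {r.claim}")
```

Passing the stages in as callables keeps the sketch independent of any particular LLM or search backend; each stub could be swapped for a real component without changing `verify_text`.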

User Interface

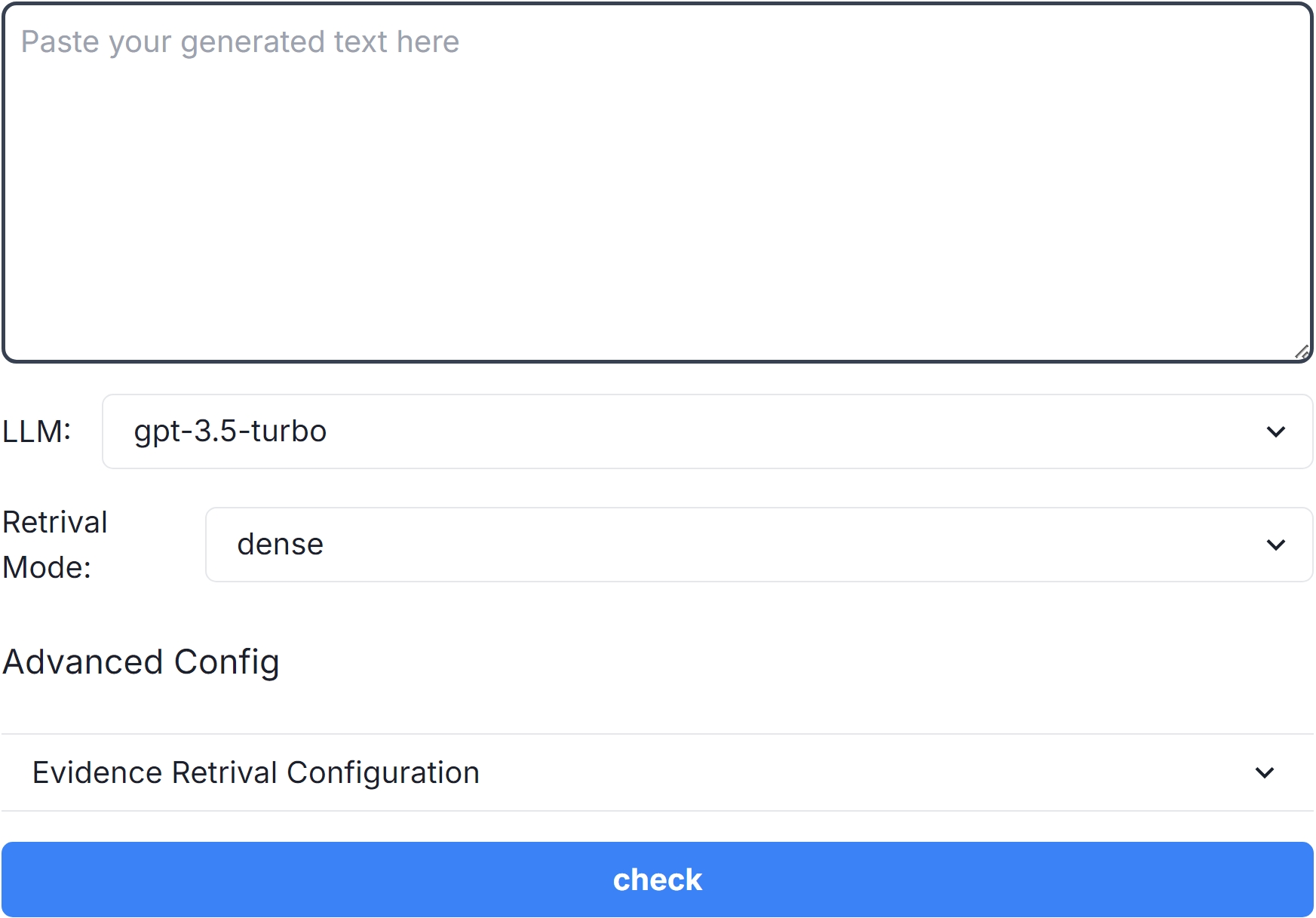

The user interface is designed to make the verification process intuitive.

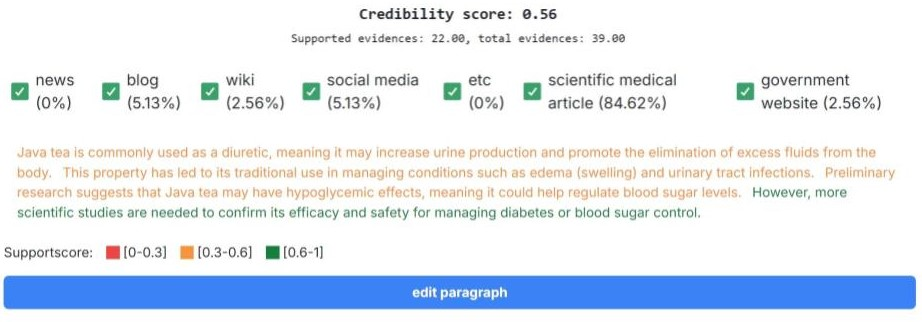

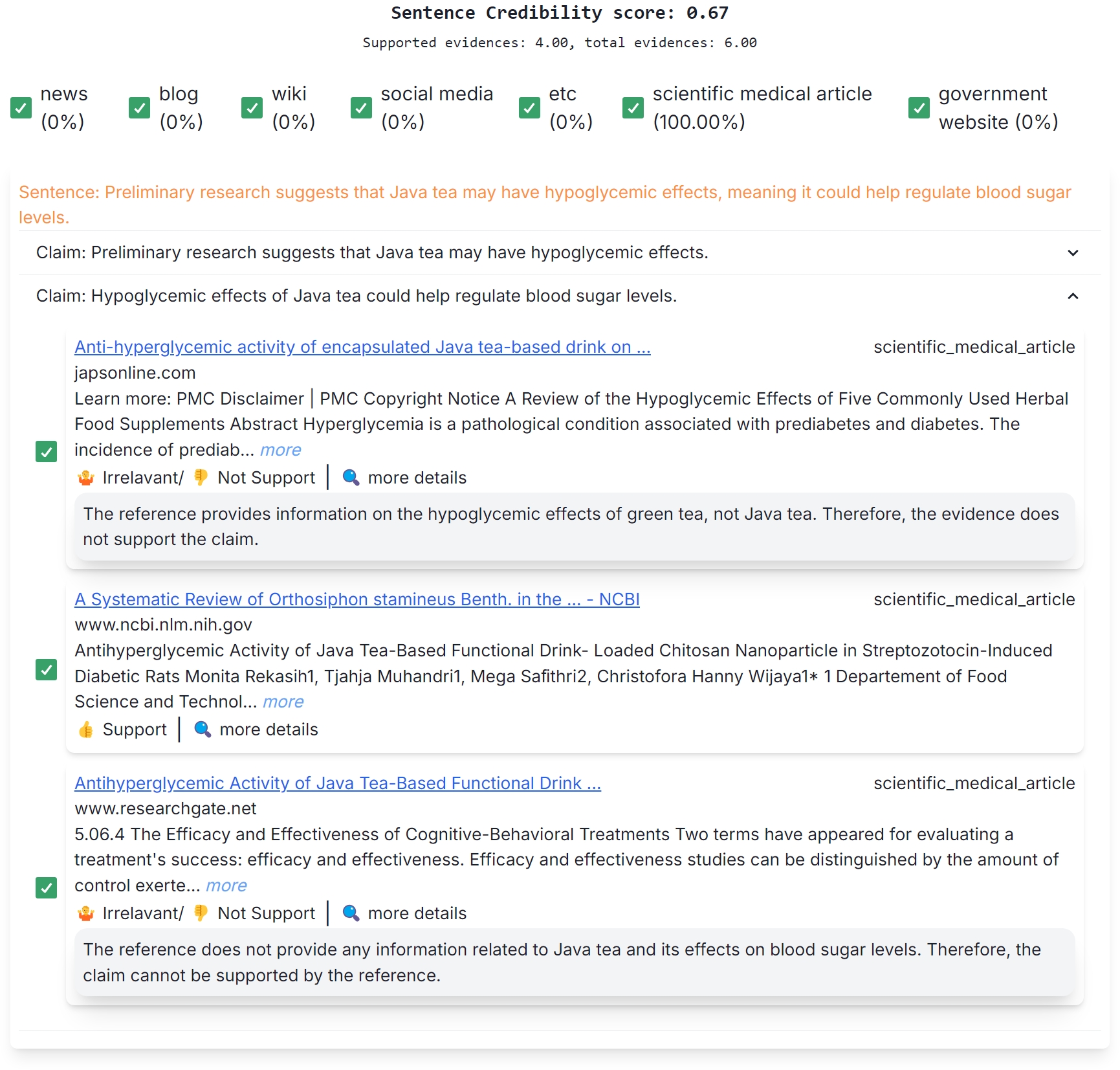

Figure 3: The credibility panel of FACTS.

Factual Verification Processing

The tool runs three sequential stages:

- Atomic Claim Generation: Decomposing input text into atomic claims using LLMs, so that each claim can be understood without its surrounding context.

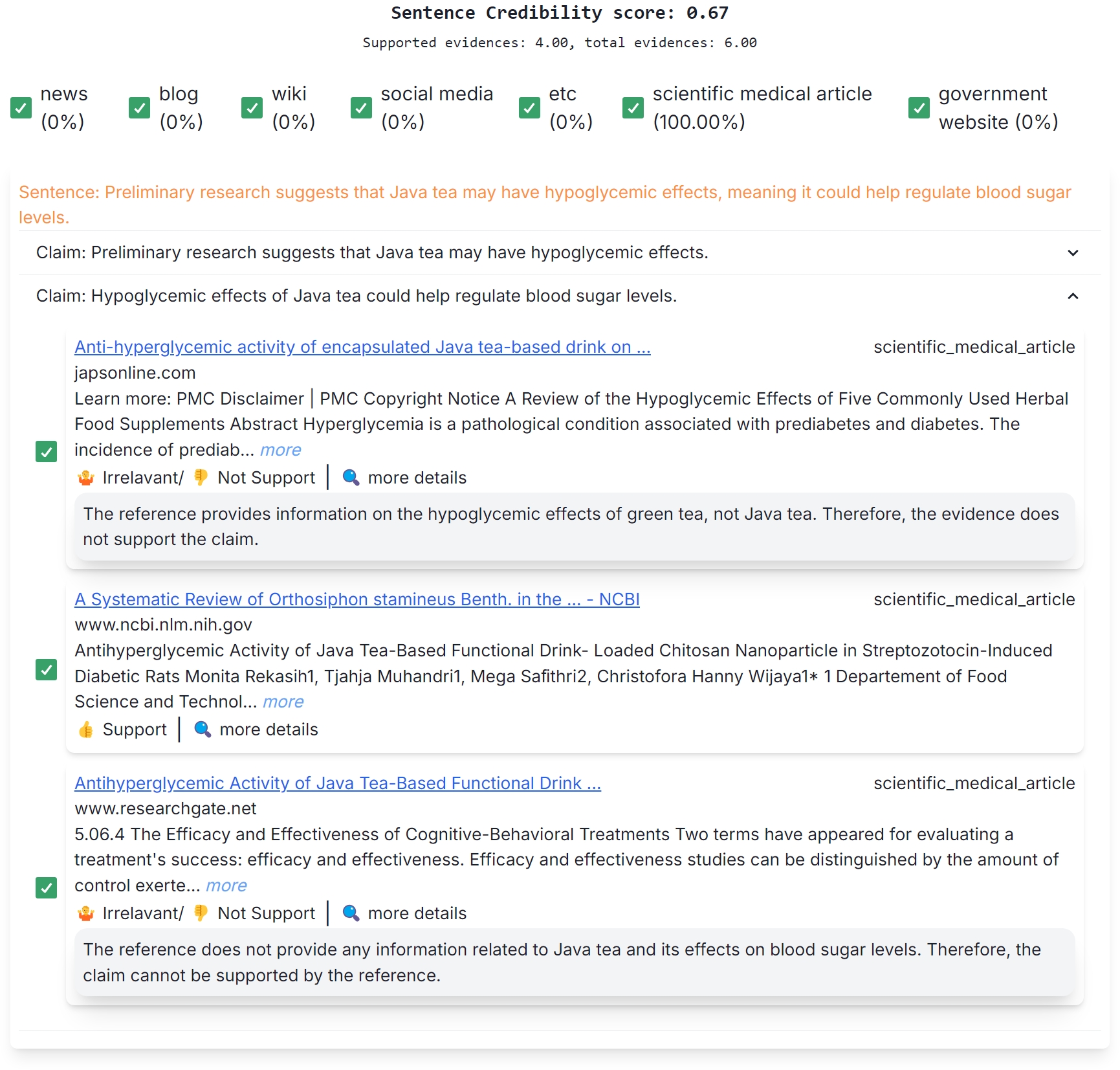

- Evidence Retrieval: Conducting web searches for each claim using both dense and sparse retrieval methods, and categorizing the sources so that users can filter which evidence is used for verification.

- Factuality Judgement: Utilizing LLMs to evaluate whether each piece of retrieved evidence supports or contradicts a claim, and producing a rationale for the judgement.
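The summary does not say how the dense and sparse retrieval results are combined. As one possible illustration, the sketch below merges two rankings with reciprocal rank fusion (RRF), a common fusion technique that is an assumption here rather than the paper's stated method. The term-overlap scorer stands in for a sparse retriever such as BM25, the hand-written vectors stand in for learned embeddings, and all function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def sparse_rank(query: str, docs: Sequence[str]) -> List[int]:
    """Rank document indices by term overlap with the query (stand-in for BM25)."""
    query_terms = set(query.lower().split())
    scores = [len(query_terms & set(d.lower().split())) for d in docs]
    return sorted(range(len(docs)), key=lambda i: -scores[i])


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def dense_rank(query_vec: Sequence[float], doc_vecs: Sequence[Sequence[float]]) -> List[int]:
    """Rank document indices by cosine similarity to the query embedding."""
    scores = [cosine(query_vec, v) for v in doc_vecs]
    return sorted(range(len(doc_vecs)), key=lambda i: -scores[i])


def reciprocal_rank_fusion(rankings: Sequence[Sequence[int]], k: int = 60) -> List[int]:
    """Fuse rankings: each document scores the sum of 1 / (k + rank) over all rankings."""
    fused: Dict[int, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] = fused.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(fused, key=lambda d: -fused[d])


# Toy corpus and toy 2-d "embeddings" (illustrative values only).
docs = [
    "paris is the capital of france",
    "berlin is the capital of germany",
    "france borders spain",
]
doc_vecs = [[0.0, 1.0], [0.9, 0.1], [0.6, 0.8]]
query, query_vec = "capital of france", [1.0, 0.0]

sparse = sparse_rank(query, docs)
dense = dense_rank(query_vec, doc_vecs)
fused = reciprocal_rank_fusion([sparse, dense])
print(sparse, dense, fused)
```

RRF uses only rank positions, so it does not require the sparse and dense scores to be on comparable scales. The constant `k = 60` is a conventional default, not a value taken from the paper.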

Experimental Evaluation

FACTS was evaluated primarily on the FAVA dataset. On binary factual error detection, it outperformed prior systems by approximately 44 F1 points on average across the two subsets.

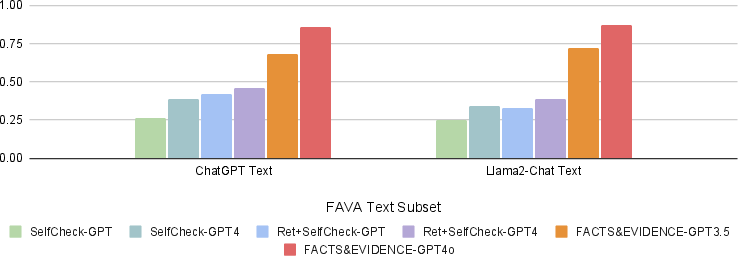

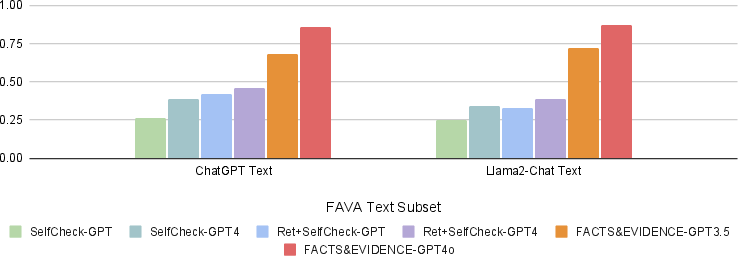

Figure 4: Binary F1 results on factual error detection. FACTS improves error prediction F1 by ∼44 points on average across the two subsets.
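For readers unfamiliar with the metric, binary F1 is the harmonic mean of precision and recall on the positive class, which here is "the text contains a factual error". The sketch below shows the computation on made-up labels. The data is illustrative only and is not drawn from FAVA, and the function name `binary_f1` is hypothetical.

```python
from typing import Sequence


def binary_f1(gold: Sequence[bool], pred: Sequence[bool]) -> float:
    """F1 for the positive class (True = contains a factual error)."""
    tp = sum(g and p for g, p in zip(gold, pred))
    fp = sum((not g) and p for g, p in zip(gold, pred))
    fn = sum(g and (not p) for g, p in zip(gold, pred))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Made-up labels for five passages (not FAVA data).
gold = [True, False, True, True, False]
pred = [True, True, False, True, False]

f1 = binary_f1(gold, pred)
print(f"F1 = {100 * f1:.1f} points")
```

A gap reported in "F1 points" is the difference between two such scores on the 0 to 100 scale.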

Implications and Future Work

The development of FACTS marks progress toward creating accessible, user-driven fact verification tools. By employing a multi-evidence verification process and enabling user-customizable verification settings, the tool enhances factual transparency and user trust in AI-generated content.

Future work could improve the system's efficiency, for example by exploring locally fine-tuned models that speed up processing while maintaining verification quality. Expanding the source categorization could further improve the user experience and domain-specific accuracy.

Conclusion

FACTS addresses the challenge of verifying the factual accuracy of AI-generated text by combining transparency, interactivity, and web evidence in a single verification workflow. It is a step toward helping users critically evaluate AI-generated content with fine-grained insight into factual credibility, and it invites further work on AI transparency tools.