Analysis of "Factcheck-GPT: End-to-End Fine-Grained Document-Level Fact-Checking and Correction of LLM Output"

The paper "Factcheck-GPT: End-to-End Fine-Grained Document-Level Fact-Checking and Correction of LLM Output" addresses the need to verify the factual accuracy of text generated by large language models (LLMs) such as ChatGPT. The need is pressing because factual errors and hallucinations are common in LLM outputs and undermine their real-world applicability. The authors propose an end-to-end framework that detects and corrects such inaccuracies at three levels of granularity (claim, sentence, and document), so that errors can be located and fixed precisely.

Core Contributions

The authors present several notable contributions:

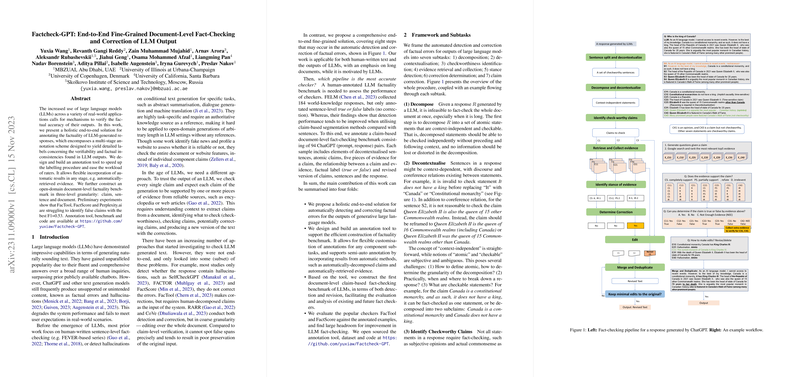

- Pipeline Framework: They introduce a comprehensive fact-checking pipeline for LLM outputs consisting of multiple stages: decomposition, decontextualization, check-worthiness identification, evidence retrieval, stance detection, correction determination, and claim correction. This pipeline allows for systematic processing of text to identify and correct factual errors at a granular level.

- Benchmark Dataset: The authors develop a document-level factuality benchmark comprising 94 ChatGPT-generated response pairs. This benchmark is structured to support different verification levels, providing a robust basis for evaluating and enhancing fact-checking methods used with LLMs.

- Annotation Tool: An annotation tool was designed to efficiently construct the factuality benchmark by supporting flexible customization of annotations with semi-automated assistance.

- Evaluation of Existing Tools: The paper evaluates existing fact-checking tools such as FacTool and FactScore on the new benchmark and finds significant gaps, especially in detecting false claims, where the best F1 score is only 0.53.
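
The stages named in the pipeline contribution above can be read as a chain of functions applied to each claim. The following is a minimal sketch of that control flow, not the authors' implementation: the `Claim` class, `run_pipeline`, the stage function names, the stance labels, and the toy knowledge base are all hypothetical and chosen only for illustration. In a real system each stage would likely be backed by an LLM or a retrieval service.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Claim:
    text: str
    checkworthy: bool = True
    evidence: List[str] = field(default_factory=list)
    stance: Optional[str] = None      # hypothetical labels: "support", "refute", "irrelevant"
    correction: Optional[str] = None  # set only when the evidence refutes the claim


def run_pipeline(
    document: str,
    decompose: Callable[[str], List[str]],
    decontextualize: Callable[[str, str], str],
    is_checkworthy: Callable[[str], bool],
    retrieve: Callable[[str], List[str]],
    detect_stance: Callable[[str, List[str]], str],
    correct: Callable[[str, List[str]], str],
) -> List[Claim]:
    """Apply the stages in the order the summary lists them."""
    claims = []
    for raw in decompose(document):
        claim = Claim(text=decontextualize(raw, document))
        claim.checkworthy = is_checkworthy(claim.text)
        if claim.checkworthy:
            claim.evidence = retrieve(claim.text)
            claim.stance = detect_stance(claim.text, claim.evidence)
            # Correction determination: only refuted claims are rewritten.
            if claim.stance == "refute":
                claim.correction = correct(claim.text, claim.evidence)
        claims.append(claim)
    return claims


# Toy stand-ins for each stage (assumptions for illustration only).
TOY_KB = {
    "capital": "Paris is the capital of France",
    "population": "Paris has a population of about 2 million",
}

def toy_decompose(doc: str) -> List[str]:
    # Naive sentence split on full stops.
    return [s.strip() for s in doc.split(".") if s.strip()]

def toy_decontextualize(raw: str, doc: str) -> str:
    # Resolve a leading pronoun so the claim stands alone.
    return "Paris " + raw[3:] if raw.startswith("It ") else raw

def toy_is_checkworthy(text: str) -> bool:
    # Treat first-person statements as opinions, not factual claims.
    return not text.startswith("I ")

def toy_retrieve(text: str) -> List[str]:
    return [fact for key, fact in TOY_KB.items() if key in text]

def toy_detect_stance(text: str, evidence: List[str]) -> str:
    if not evidence:
        return "irrelevant"
    return "support" if text in evidence else "refute"

def toy_correct(text: str, evidence: List[str]) -> str:
    return evidence[0]


document = "Paris is the capital of France. It has a population of 50 million. I love it."
claims = run_pipeline(
    document, toy_decompose, toy_decontextualize, toy_is_checkworthy,
    toy_retrieve, toy_detect_stance, toy_correct,
)
for c in claims:
    print(c.text, "|", c.stance, "|", c.correction)
```

Passing the stages in as functions keeps the control flow separate from any particular model or search backend, which mirrors the decomposed view of the task that the paper emphasizes.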

Results and Discussion

The initial evaluation shows considerable room for improvement: current systems struggle to identify and correct false claims precisely. The benchmark and the staged methodology underscore how difficult it is to automate fact-checking of long LLM outputs that contain many claims.
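
To make the reported metric concrete, the sketch below computes precision, recall, and F1 for a single class, here the "false" label, from gold and predicted claim labels. The function name `f1_for_label` and the toy labels are assumptions for illustration; they are not data from the paper.

```python
from typing import List, Tuple


def f1_for_label(gold: List[str], pred: List[str], label: str) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) for one class, treating it as the positive class."""
    tp = sum(1 for g, p in zip(gold, pred) if g == label and p == label)
    fp = sum(1 for g, p in zip(gold, pred) if g != label and p == label)
    fn = sum(1 for g, p in zip(gold, pred) if g == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Toy labels: three of the six claims are actually false, and the checker
# finds only one of them while also flagging one true claim by mistake.
gold = ["true", "true", "false", "false", "true", "false"]
pred = ["true", "false", "false", "true", "true", "true"]

precision, recall, f1 = f1_for_label(gold, pred, "false")
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Scoring the "false" class on its own matters because false claims are typically the minority: a checker that labels everything as true can reach high overall accuracy while detecting no errors at all.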

The authors argue for precise corrections, claiming that their fine-grained approach surpasses previous efforts that either do not correct errors or do so imprecisely with respect to claim-level fidelity. The proposed framework could, theoretically, produce clean corrected outputs while preserving the text's original intent and stylistic continuity.

Implications and Future Directions

Practically, a successful implementation of this framework could be pivotal for LLM-dependent applications that require high factual accuracy. Theoretically, the research refines the methodology for automated fact-checking by emphasizing decomposition: the task is split into sub-problems, each of which can be addressed with a specialized technique.

The paper points to future work on refining evidence retrieval and on verifying LLM claims at finer granularity against diverse, authoritative datasets. An intriguing direction would be integrating this framework with real-time data sources to further bolster accuracy and relevance.

Furthermore, the performance limitations highlighted suggest that future models could integrate metadata processing, multi-document verification, and stronger natural language understanding to overcome the weaknesses that existing systems exhibit.

Overall, the paper tackles an important problem: improving the factual robustness of LLM outputs through careful, layered verification. As the field grows, research of this kind addresses the basic need for automated, reliable assessment of the credibility of AI-generated text.