- The paper introduces Clio, a system that delivers privacy-preserving analysis of AI assistant usage by extracting, clustering, and summarizing conversation data.

- The methodology combines semantic clustering with multi-layered privacy protections and reaches 94% accuracy on a synthetic multilingual dataset.

- Practical implications include enhanced abuse detection, event monitoring, and classifier evaluation, supporting responsible AI deployment and governance.

Clio: Privacy-Preserving Insights into Real-World AI Use

Introduction and Motivation

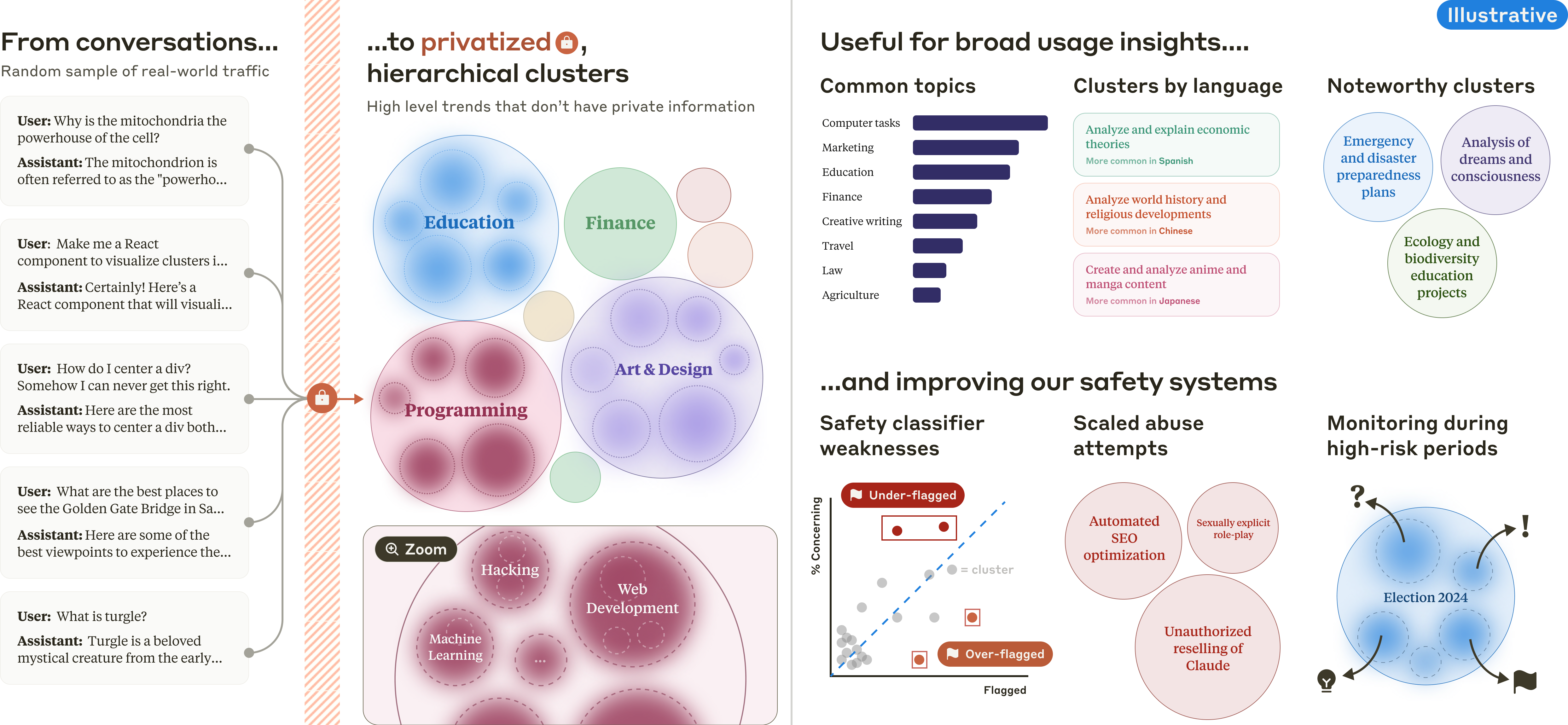

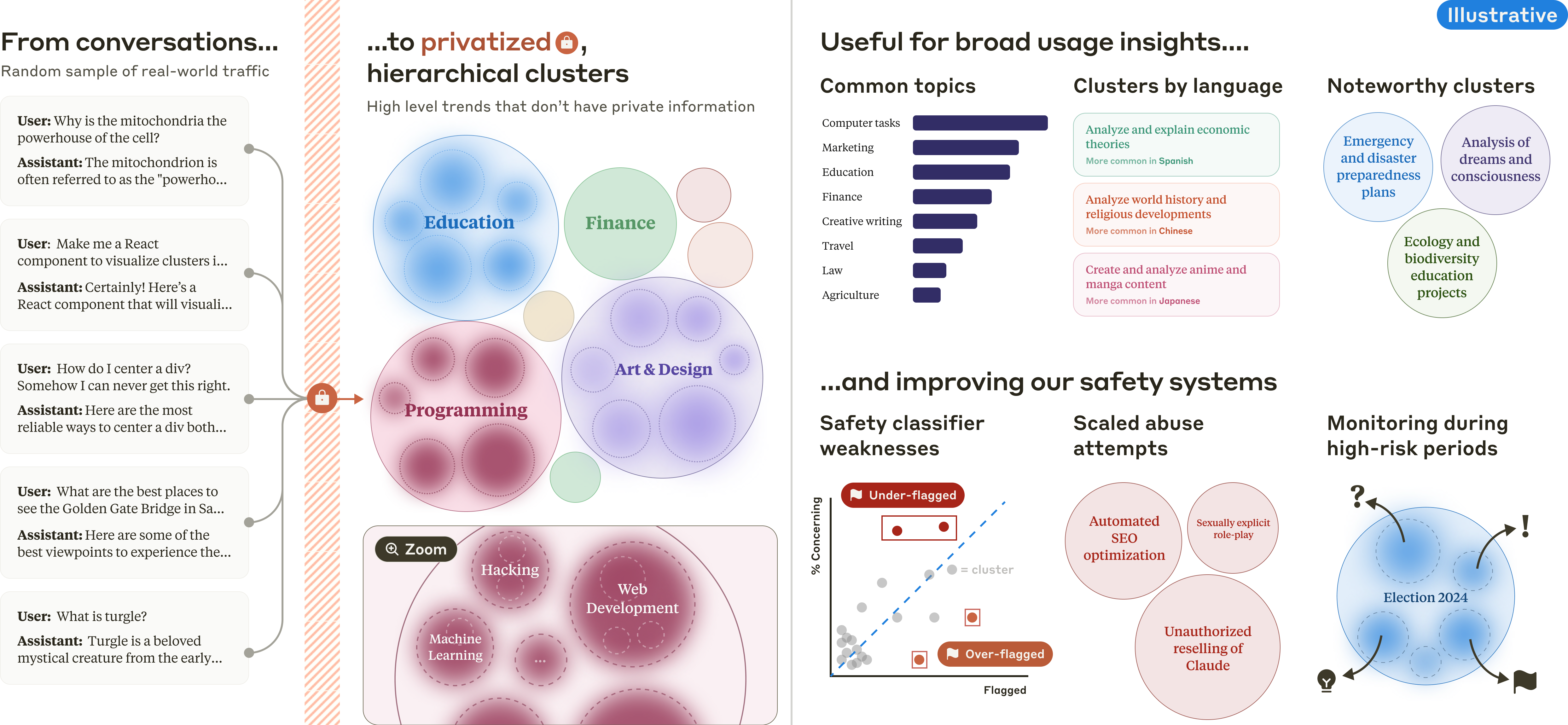

The paper presents Clio, a system for privacy-preserving analysis of real-world AI assistant usage at scale. The motivation is to empirically characterize how users interact with AI assistants, such as Claude.ai, while addressing the substantial privacy, ethical, and operational challenges inherent in analyzing millions of user conversations. Clio leverages AI models themselves to extract, cluster, and summarize usage patterns, enabling model providers to understand adoption, risks, and emergent behaviors without exposing raw user data or requiring human review of sensitive content.

Figure 1: Clio transforms raw conversations into high-level patterns and insights, enabling empirical analysis of real-world AI assistant usage.

System Architecture and Privacy Design

Clio's architecture is a multi-stage pipeline designed to maximize privacy while supporting scalable, bottom-up pattern discovery:

- Facet Extraction: For each conversation, Clio extracts multiple facets (e.g., topic, language, turn count) using a combination of direct computation and model-based summarization.

- Semantic Clustering: Facet embeddings (e.g., using all-mpnet-base-v2) are clustered via k-means, grouping semantically similar conversations.

- Cluster Summarization: Each cluster is summarized by a model, instructed to omit private information and provide a concise, descriptive label.

- Hierarchical Organization: Clusters are recursively grouped into a multi-level hierarchy using further clustering and model-based summarization, supporting exploration from broad categories to fine-grained subclusters.

- Interactive Visualization: Results are presented in a 2D map and tree interface, supporting faceted exploration and overlaying additional attributes (e.g., safety scores, language prevalence).
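
The clustering stage above can be illustrated with a minimal sketch. It is not Clio's implementation: the two-dimensional vectors are hypothetical stand-ins for real facet embeddings (which would come from a sentence-embedding model such as all-mpnet-base-v2), and the small k-means routine and its names (`kmeans`, `toy_embeddings`) are assumptions written only to show how semantically similar items end up in the same group.

```python
import random
from collections import defaultdict


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean_vector(vectors):
    """Component-wise mean of a non-empty list of vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def kmeans(vectors, k, iterations=20, seed=0):
    """Plain k-means: returns one cluster index per input vector."""
    rng = random.Random(seed)
    centroids = rng.sample(vectors, k)  # initialise from k distinct data points
    assignments = [0] * len(vectors)
    for _ in range(iterations):
        # Assignment step: each vector goes to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: squared_distance(v, centroids[c]))
            for v in vectors
        ]
        # Update step: each centroid moves to the mean of its members.
        groups = defaultdict(list)
        for v, c in zip(vectors, assignments):
            groups[c].append(v)
        centroids = [
            mean_vector(groups[c]) if groups[c] else centroids[c]
            for c in range(k)
        ]
    return assignments


# Hypothetical 2-D "embeddings": the first three items are close to one
# another, as are the last three (think of two distinct conversation topics).
toy_embeddings = [
    [0.9, 0.1], [1.0, 0.0], [0.8, 0.2],
    [0.1, 0.9], [0.0, 1.0], [0.2, 0.8],
]
labels = kmeans(toy_embeddings, k=2)
```

In a full pipeline, each resulting group would then be passed to a model for summarization, and the group summaries could be embedded and clustered again to build the higher levels of the hierarchy.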

Figure 2: Clio processes raw conversations through facet extraction, semantic clustering, hierarchical organization, and privacy barriers to produce user-visible, privacy-preserving insights.

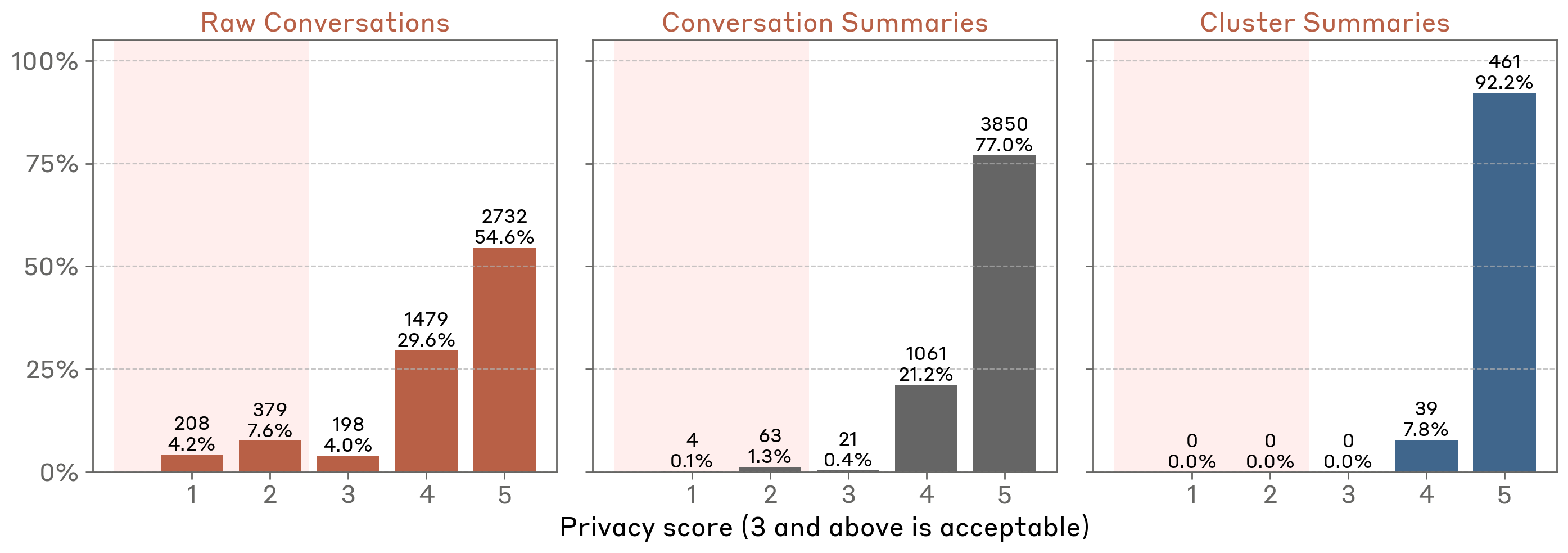

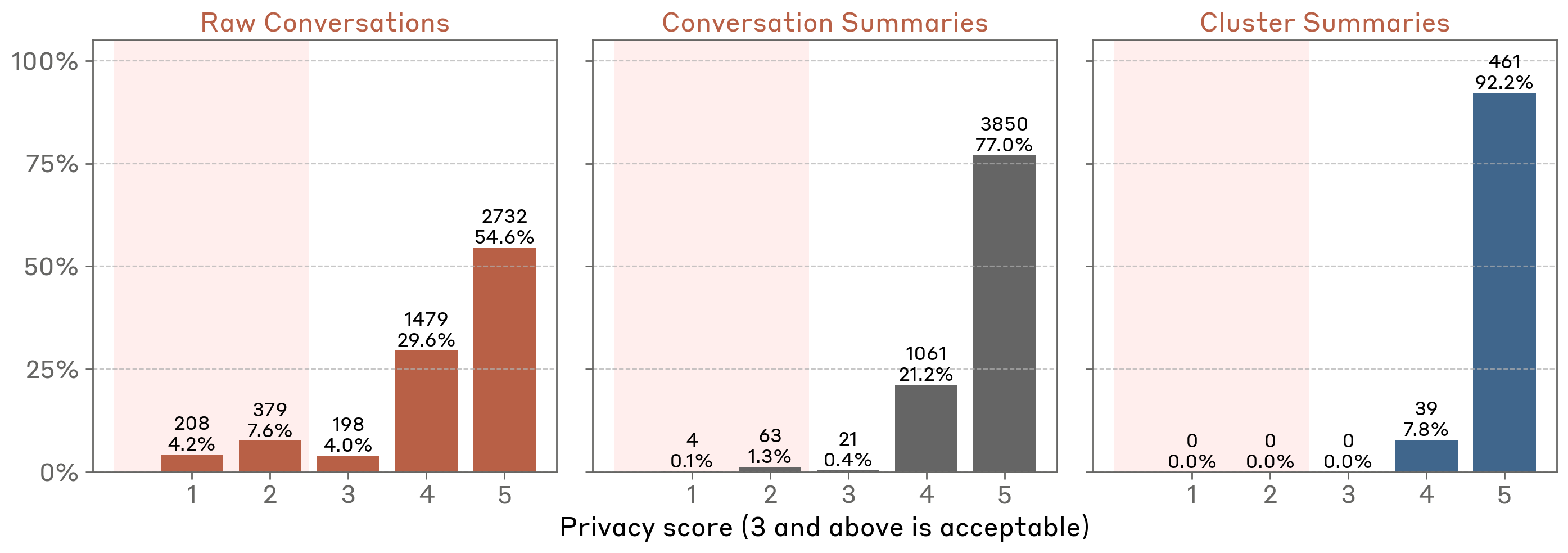

Clio employs four privacy layers: (1) privacy-preserving summarization, (2) cluster aggregation thresholds, (3) privacy-aware cluster summarization, and (4) automated cluster auditing. Empirical evaluation demonstrates that these measures reduce the exposure of private information to undetectable levels in final outputs.
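
The second layer, cluster aggregation thresholds, lends itself to a short sketch. The text does not state the threshold values or exactly what is counted, so the function name `apply_aggregation_threshold`, the `min_unique_users` parameter, and the choice to count distinct users are all assumptions made for illustration.

```python
def apply_aggregation_threshold(cluster_to_users, min_unique_users):
    """Keep only clusters backed by at least `min_unique_users` distinct users.

    `cluster_to_users` maps a cluster label to the user IDs of the
    conversations assigned to it (one entry per conversation).
    """
    return {
        cluster: users
        for cluster, users in cluster_to_users.items()
        if len(set(users)) >= min_unique_users
    }


# Hypothetical example data: the second cluster holds two conversations,
# but both come from the same user, so it falls below the threshold.
clusters = {
    "web development help": ["u1", "u2", "u3", "u4"],
    "rare niche topic": ["u5", "u5"],
}
visible = apply_aggregation_threshold(clusters, min_unique_users=3)
```

Counting distinct users rather than conversations means a single prolific user cannot push a cluster over the threshold alone, which is the kind of case an aggregation threshold is meant to suppress.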

Figure 3: Progression of privacy scores across Clio's pipeline, showing near-complete elimination of private information in cluster summaries.

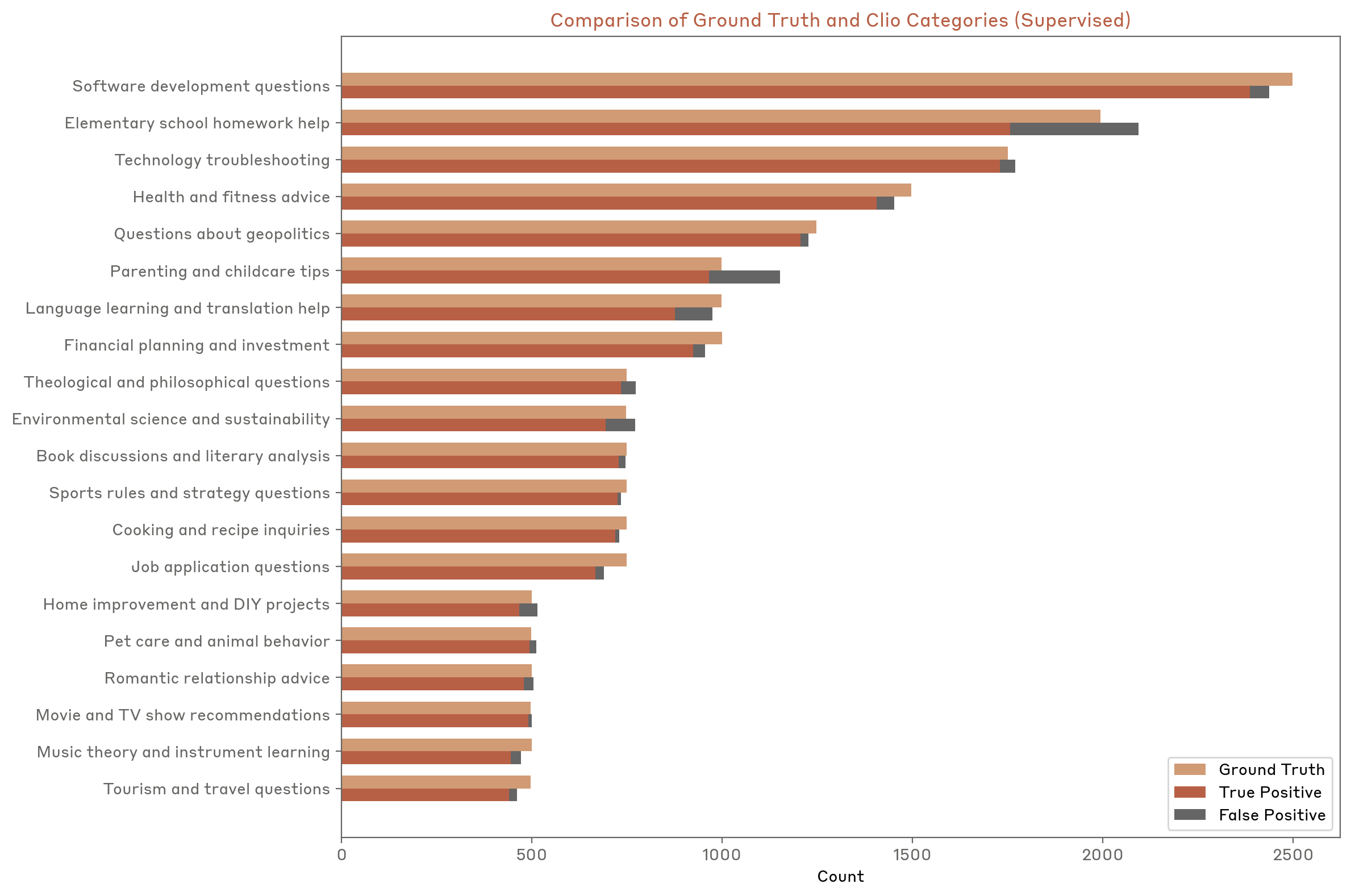

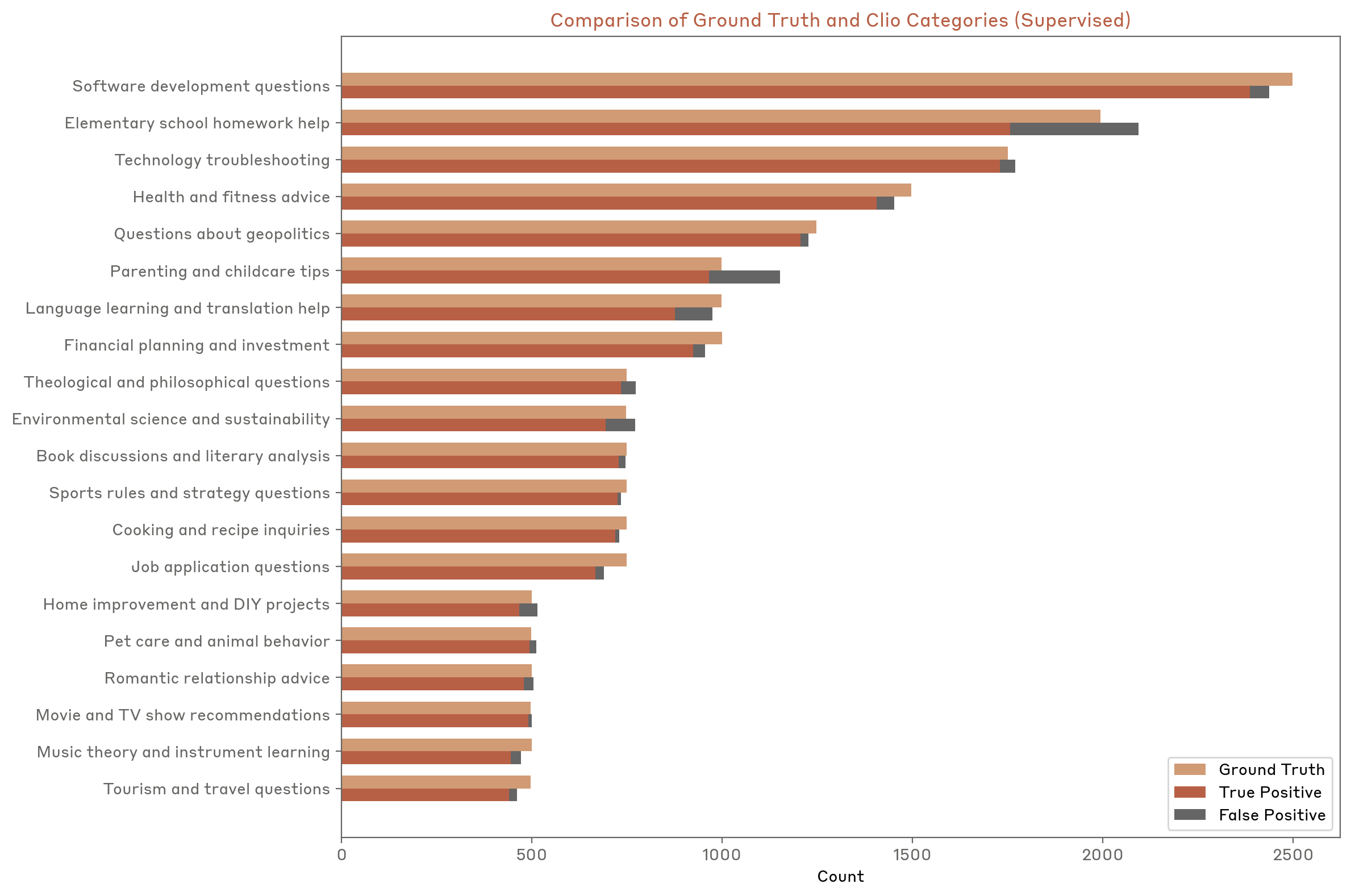

Clio's accuracy is validated through both manual review and synthetic data experiments. On a synthetic multilingual dataset of 19,476 chat transcripts with known ground-truth categories, Clio reconstructs the original distribution with 94% accuracy, far exceeding random guessing (5%).

Figure 4: Clio reconstructs ground-truth categories with 94% accuracy on synthetic data, demonstrating high fidelity in unsupervised clustering and assignment.

Performance is robust across languages, with accuracy above 92% for all tested languages. Manual review of real data shows high accuracy in summarization, clustering, and hierarchical assignment, with minor errors primarily in long, multi-topic conversations.
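
As a rough sketch of how such figures could be computed, the snippet below scores predicted categories against ground truth, both overall and per language. The record layout (`predicted`, `truth`, `language` keys) and the toy records are hypothetical; they are not taken from the paper's dataset.

```python
from collections import defaultdict


def accuracy(records):
    """Fraction of records whose predicted category matches the ground truth."""
    correct = sum(1 for r in records if r["predicted"] == r["truth"])
    return correct / len(records)


def accuracy_by_language(records):
    """Accuracy computed separately for each language present in `records`."""
    by_language = defaultdict(list)
    for r in records:
        by_language[r["language"]].append(r)
    return {lang: accuracy(rs) for lang, rs in by_language.items()}


# Hypothetical toy records standing in for synthetic transcripts.
records = [
    {"language": "en", "truth": "coding", "predicted": "coding"},
    {"language": "en", "truth": "writing", "predicted": "writing"},
    {"language": "es", "truth": "coding", "predicted": "coding"},
    {"language": "es", "truth": "writing", "predicted": "coding"},
]
overall = accuracy(records)
per_language = accuracy_by_language(records)
```

Reporting the per-language breakdown alongside the overall number is what allows a claim such as "above 92% for all tested languages" to be checked, since a high average can hide a weak language.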

Empirical Insights into AI Assistant Usage

Clio enables large-scale empirical analysis of Claude.ai usage, revealing dominant use cases and cross-linguistic variation:

- Top Use Cases: Coding and business tasks are most prevalent, with "Web and mobile application development" comprising over 10% of conversations. Writing, research, and educational tasks are also prominent.

- Granular Clusters: Clio identifies fine-grained patterns, such as dream analysis, tabletop RPG roleplay, and transportation modeling.

- Cross-Language Variation: Non-English conversations show higher prevalence of topics like economic theory, elder care, and anime/manga content, with significant differences in usage patterns across Spanish, Chinese, and Japanese.

Figure 5: Top 10 high-level task categories in 1M Claude.ai conversations, highlighting the dominance of coding and writing tasks.

Figure 6: Clusters with disproportionately high prevalence of Spanish, Chinese, and Japanese, illustrating cross-linguistic differences in AI assistant usage.
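
One simple way to surface clusters where a language is over-represented is to compare the language's share within each cluster to its share across all conversations. The text does not specify the metric used, so the ratio below (often called lift), the function name `language_lift`, and the toy counts are assumptions for illustration only.

```python
def language_lift(cluster_language_counts, language):
    """Ratio of a language's share in each cluster to its overall share.

    `cluster_language_counts` maps a cluster label to a dict of
    {language code: conversation count}. A value above 1 means the
    language is over-represented in that cluster.
    """
    total_for_language = sum(
        counts.get(language, 0) for counts in cluster_language_counts.values()
    )
    total_overall = sum(
        sum(counts.values()) for counts in cluster_language_counts.values()
    )
    base_rate = total_for_language / total_overall
    lifts = {}
    for cluster, counts in cluster_language_counts.items():
        share_in_cluster = counts.get(language, 0) / sum(counts.values())
        lifts[cluster] = share_in_cluster / base_rate
    return lifts


# Hypothetical counts: Japanese makes up 40% of all conversations here,
# but 75% of the first cluster.
counts = {
    "anime and manga": {"ja": 30, "en": 10},
    "web development": {"ja": 10, "en": 50},
}
lifts = language_lift(counts, "ja")
```

Sorting clusters by this ratio would give a ranked list of the kind a figure like this one summarizes, though small clusters can produce noisy ratios and would likely need a minimum-size filter.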

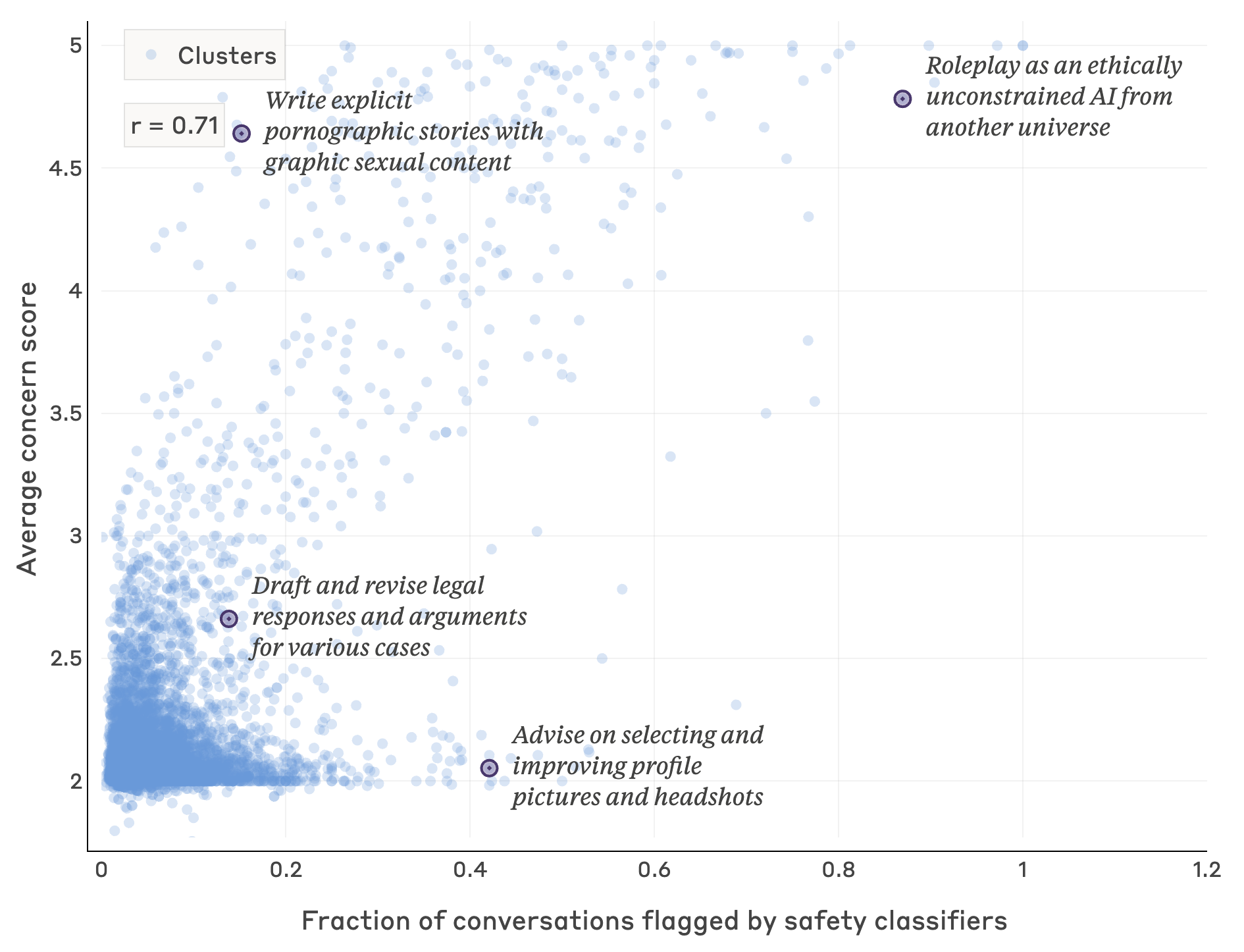

Safety Applications and Classifier Evaluation

Clio is used to enhance safety systems by surfacing coordinated abuse, monitoring for unknown unknowns during high-stakes events, and diagnosing classifier failures.

Limitations

Clio's limitations are both operational and fundamental:

- Operational: Errors may arise in facet extraction (hallucination, misinterpretation), clustering (suboptimal groupings), and labeling (overly broad or inaccurate summaries). Outputs should be considered preliminary and require further validation.

- Fundamental: Clio cannot fully capture user intent, is limited to conversational data (not downstream impact), and must trade off privacy against granularity. Rare behaviors and model-specific patterns may escape detection.

Risks, Ethics, and Mitigations

The paper provides a detailed analysis of risks and ethical considerations:

- Privacy Risks: Potential for PII leakage or group privacy violations is mitigated by multi-layered privacy interventions and regular audits.

- False Positives: Automated enforcement is avoided; human review is required for flagged clusters.

- Potential Misuse: Strict access controls, data minimization, and transparency are implemented.

- User Trust: Radical transparency and engagement with civil society organizations are prioritized to maintain trust and inform best practices.

Clio builds on prior work in privacy-preserving analytics, clustering and summarization of text data, and empirical analysis of AI assistant usage. It extends embed-cluster-summarize paradigms to the domain of AI assistant interactions, with a focus on privacy and safety.

Implications and Future Directions

Clio demonstrates that privacy-preserving, bottom-up analysis of AI assistant usage is feasible and effective at scale. The system provides actionable insights for model providers, supports empirical AI safety and governance, and enables post-deployment monitoring that complements pre-deployment evaluation. As AI systems become more capable and widely adopted, empirical transparency and scalable monitoring will be essential for responsible deployment and risk mitigation.

Future work may focus on improving facet extraction robustness, extending to multimodal and API-based interactions, integrating formal privacy guarantees, and developing cross-model comparative analytics. Clio's approach could inform regulatory frameworks and best practices for AI governance, especially as calls for transparency and empirical evidence in AI policy intensify.

Conclusion

Clio provides a scalable, privacy-preserving platform for empirical analysis of real-world AI assistant usage. Its multi-layered privacy architecture, robust clustering and summarization pipeline, and interactive visualization interface enable model providers to understand adoption, risks, and emergent behaviors without compromising user privacy. Clio's empirical insights inform both product development and safety interventions, and its methodological transparency contributes to the broader culture of empirical AI governance. As AI systems continue to proliferate, tools like Clio will be critical for ensuring responsible, evidence-based oversight and continuous improvement.