- The paper presents a novel one-step UNet-based framework, SuperMat, for estimating PBR material properties such as albedo, metallic, and roughness maps.

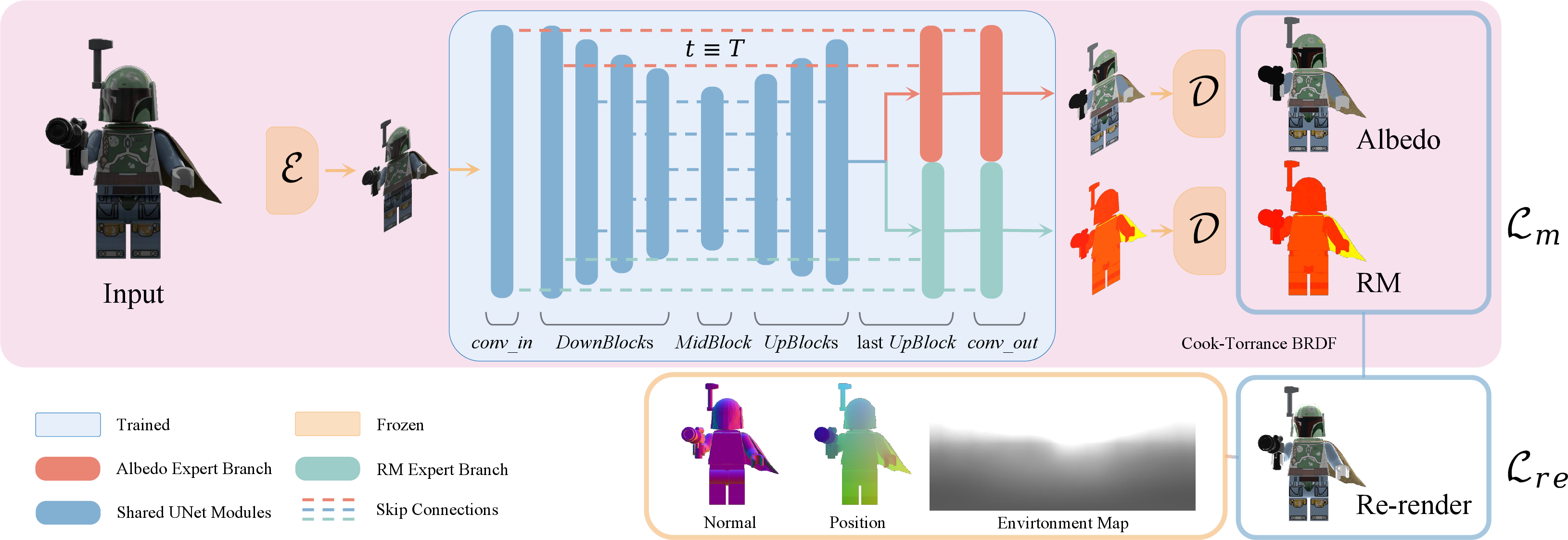

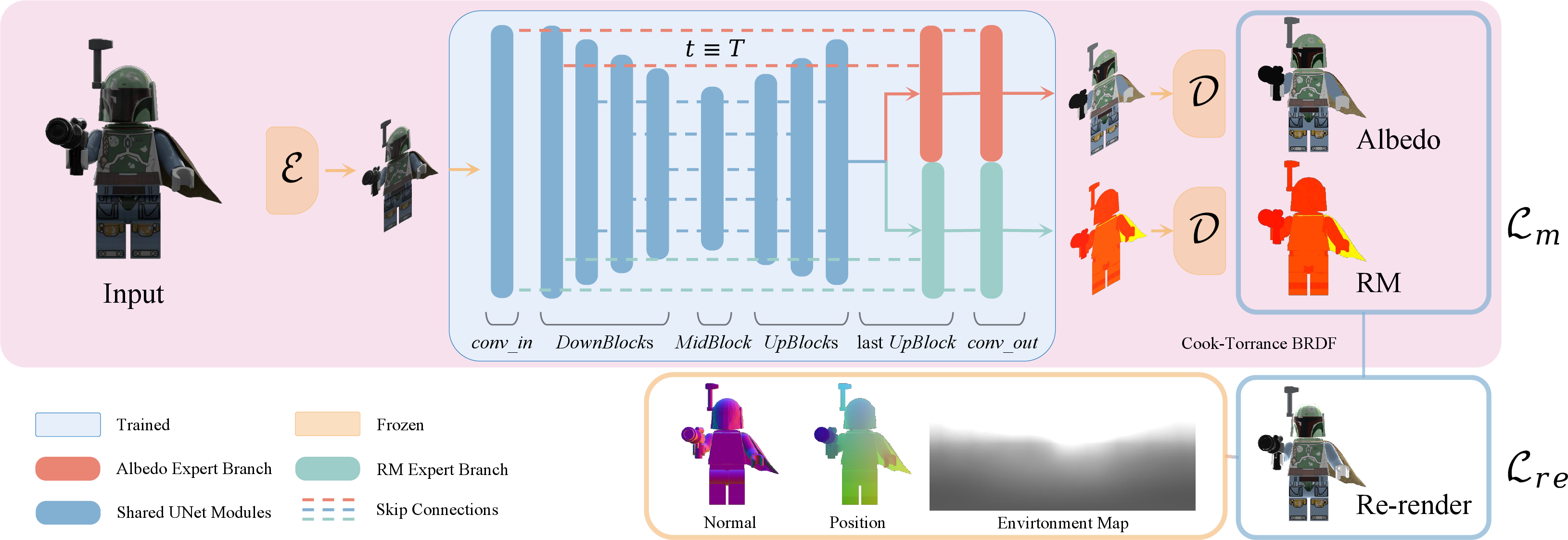

- A modified DDIM scheduler aligns noise levels with timesteps, and a re-render loss enforces physical consistency under varying lighting conditions.

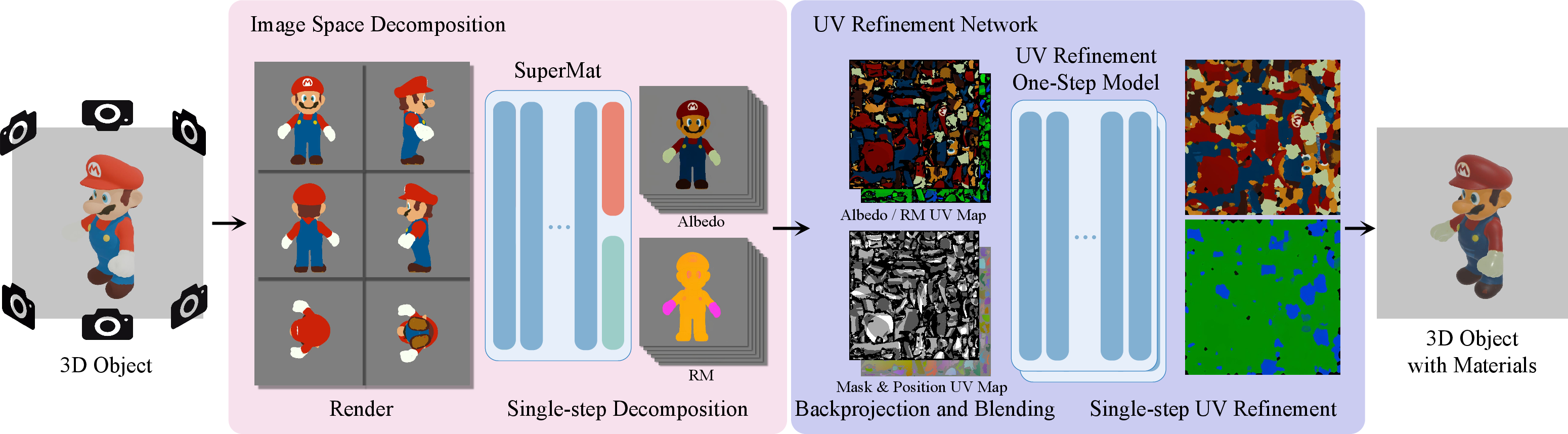

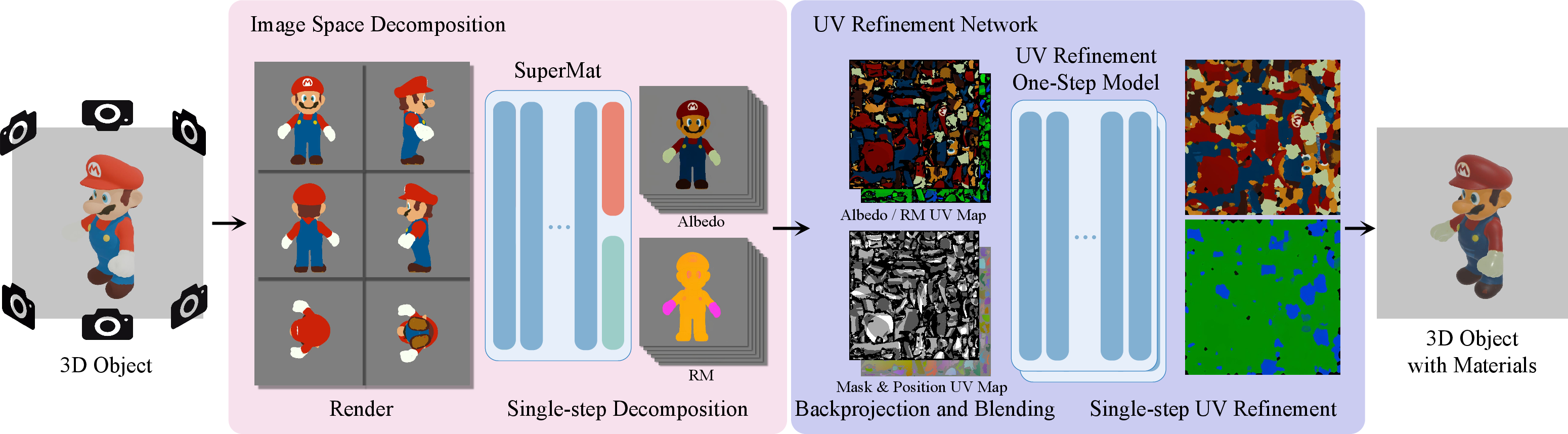

- The method extends to 3D material decomposition via a UV refinement network, delivering rapid and high-fidelity results for interactive applications.

"SuperMat: Physically Consistent PBR Material Estimation at Interactive Rates" Analyzed

This paper introduces SuperMat, a framework for estimating physically based rendering (PBR) materials at interactive speeds. The method decomposes an image into its constituent material properties (albedo, metallic, and roughness maps) using one-step inference. It aims to improve both the quality and the speed of PBR material estimation relative to earlier models, particularly in real-time applications.

SuperMat Framework and Methodology

SuperMat uses a UNet architecture with structural expert branches, which lets a single network predict several material properties at once while sharing a common backbone. Sharing the backbone reduces computational overhead, a common bottleneck for real-time applications. The network is trained end-to-end with a deterministic one-step inference process, made feasible by modifying the denoising diffusion implicit model (DDIM) scheduler so that noise levels align with timesteps.
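To make the scheduler point concrete, here is a minimal sketch of how timestep selection can mismatch the noise level in one-step inference. It assumes a 1000-step training schedule and contrasts two common spacing conventions, often called "leading" and "trailing". The function names are illustrative, and the summary does not specify which exact modification SuperMat applies, so treat this as one plausible reading rather than the paper's implementation.

```python
def leading_timesteps(num_inference_steps, num_train_timesteps=1000):
    """Evenly spaced timesteps counted up from 0, returned in descending order."""
    step = num_train_timesteps // num_inference_steps
    return [i * step for i in range(num_inference_steps)][::-1]


def trailing_timesteps(num_inference_steps, num_train_timesteps=1000):
    """Evenly spaced timesteps counted down from the last training timestep."""
    step = num_train_timesteps / num_inference_steps
    return [round(num_train_timesteps - i * step) - 1
            for i in range(num_inference_steps)]


# With a single inference step, the two conventions pick opposite ends
# of the schedule.
one_step_leading = leading_timesteps(1)
one_step_trailing = trailing_timesteps(1)
print(one_step_leading, one_step_trailing)
```

With one step, the leading convention conditions the network on timestep 0 (almost no noise), whereas a one-step model that starts from the fully noised end of the schedule would presumably need the last timestep, which the trailing convention supplies.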

Figure 1: Overview of the SuperMat framework. The UNet architecture incorporates structural expert branches for albedo and RM estimation, enabling parallel material property prediction while sharing a common backbone. During training, we leverage a fixed DDIM scheduler and optimize the network end-to-end using both perceptual loss and re-render loss.

The re-render loss is calculated under new lighting conditions, so the model is penalized not only for deviating from the ground-truth maps but also for producing materials that render incorrectly when the lighting changes. This pushes the inferred materials toward physical consistency.
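The idea behind a re-render loss can be sketched as follows. This is a toy illustration under strong assumptions: a single color channel, a simplified Blinn-Phong-style shading function standing in for a real PBR renderer, and lighting reduced to hypothetical `(n_dot_l, n_dot_h)` pairs. None of these details come from the paper.

```python
import math


def shade(albedo, metallic, roughness, n_dot_l, n_dot_h, light=1.0):
    """Toy single-channel shading, NOT the paper's renderer.

    Diffuse term scaled by (1 - metallic) plus a normalized Blinn-Phong lobe
    that widens as roughness grows.
    """
    f0 = 0.04 * (1.0 - metallic) + albedo * metallic  # specular reflectance
    diffuse = albedo * (1.0 - metallic) / math.pi
    alpha = max(roughness, 0.05) ** 2  # clamp so the exponent stays finite
    shininess = 2.0 / (alpha * alpha) - 2.0
    lobe = max(n_dot_h, 0.0) ** shininess
    specular = f0 * (shininess + 2.0) / (2.0 * math.pi) * lobe
    return light * max(n_dot_l, 0.0) * (diffuse + specular)


def re_render_loss(pred, target, lights):
    """Mean squared error between renders of predicted and reference materials.

    pred, target: lists of (albedo, metallic, roughness) tuples, one per pixel.
    lights: list of (n_dot_l, n_dot_h) pairs, one per sampled lighting condition.
    """
    total, count = 0.0, 0
    for n_dot_l, n_dot_h in lights:
        for p, t in zip(pred, target):
            diff = shade(*p, n_dot_l, n_dot_h) - shade(*t, n_dot_l, n_dot_h)
            total += diff * diff
            count += 1
    return total / count


reference = [(0.8, 0.0, 0.5)]
prediction = [(0.8, 1.0, 0.5)]  # same albedo, wrong metallic value
lights = [(1.0, 1.0), (0.5, 0.7)]
loss = re_render_loss(prediction, reference, lights)
```

In this example a loss on the albedo map alone would be zero, yet the re-render loss is positive because the wrong metallic value changes how the surface shades under each sampled light.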

Extension to 3D Material Decomposition

For 3D objects, SuperMat adds a UV refinement network, forming a pipeline that keeps materials consistent and high in fidelity across object viewpoints. The refinement network inpaints regions left uncovered and corrects discrepancies that arise when single-view decomposition results are combined, so that the final UV maps are complete and accurate.
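One way to picture the input to such a refinement stage is a partial UV map assembled from several single-view predictions, together with a mask of texels that no view covered. The sketch below is an assumption-laden illustration: the function name, the per-sample weights (for example, a viewing-angle weight), and the weighted-average fusion rule are all hypothetical and are not described in the summary.

```python
def fuse_views_to_uv(view_samples, uv_size):
    """Average per-view texel predictions and flag texels no view covered.

    view_samples: list of views; each view is a list of ((u, v), value, weight)
                  entries, where (u, v) are integer texel coordinates.
    Returns (uv_map, hole_mask), both uv_size x uv_size nested lists.
    """
    acc = [[0.0] * uv_size for _ in range(uv_size)]
    wsum = [[0.0] * uv_size for _ in range(uv_size)]
    for view in view_samples:
        for (u, v), value, weight in view:
            acc[v][u] += weight * value
            wsum[v][u] += weight
    uv_map = [[acc[v][u] / wsum[v][u] if wsum[v][u] > 0 else 0.0
               for u in range(uv_size)] for v in range(uv_size)]
    hole_mask = [[wsum[v][u] == 0 for u in range(uv_size)]
                 for v in range(uv_size)]
    return uv_map, hole_mask


# Two views of a 2x2 UV map: they disagree on texel (1, 0) and miss row 1.
front = [((0, 0), 0.25, 1.0), ((1, 0), 0.5, 1.0)]
side = [((1, 0), 0.75, 3.0)]
uv_map, hole_mask = fuse_views_to_uv([front, side], uv_size=2)
```

Here the texel seen by both views receives a weighted average, and the uncovered bottom row is flagged in `hole_mask`; those flagged texels are the kind of region a refinement network would be asked to inpaint.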

Figure 2: Material Decomposition for 3D objects.

The proposed pipeline processes a 3D model in approximately 3 seconds, which makes it well suited to interactive applications where speed is critical.

Experimental Results and Comparisons

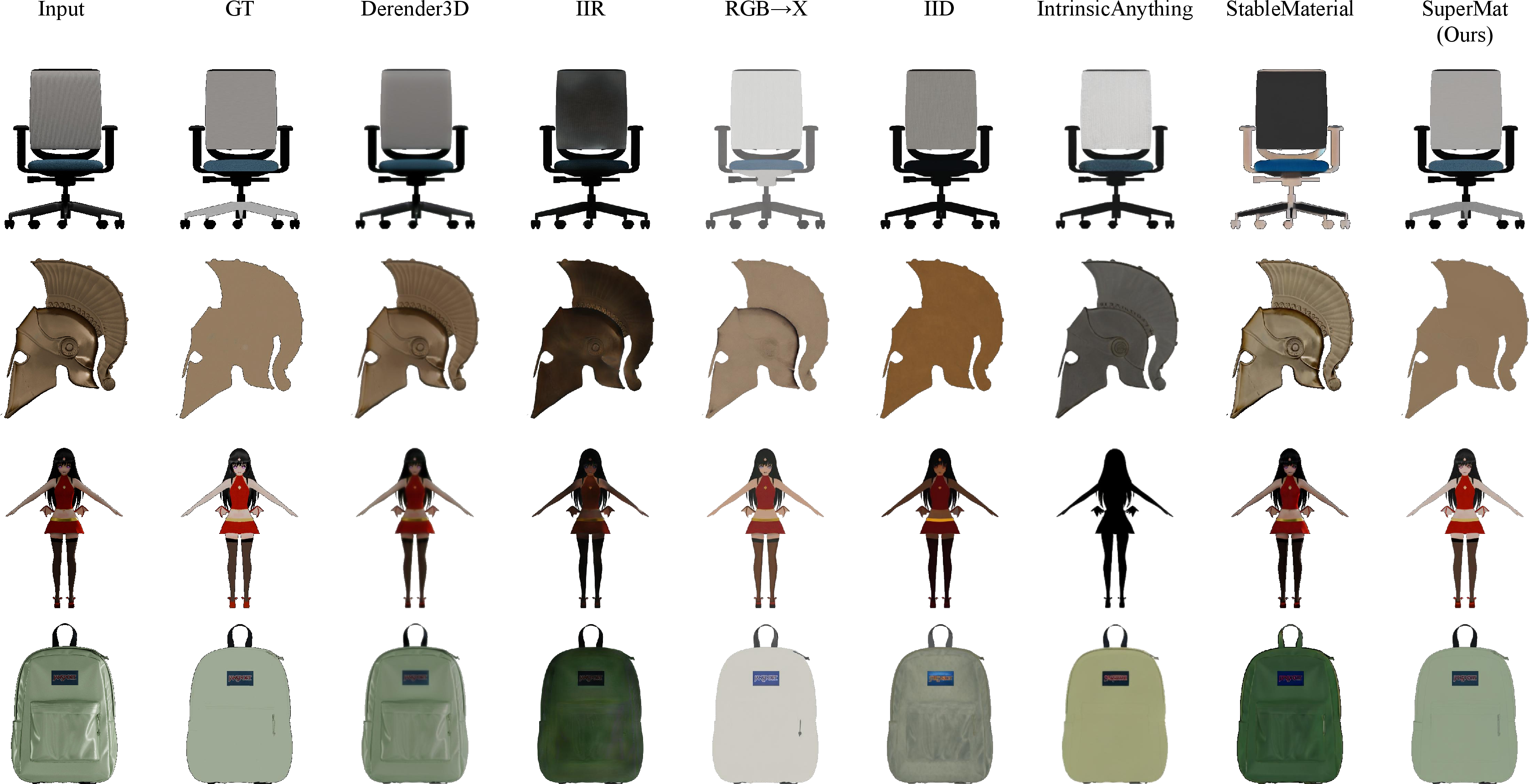

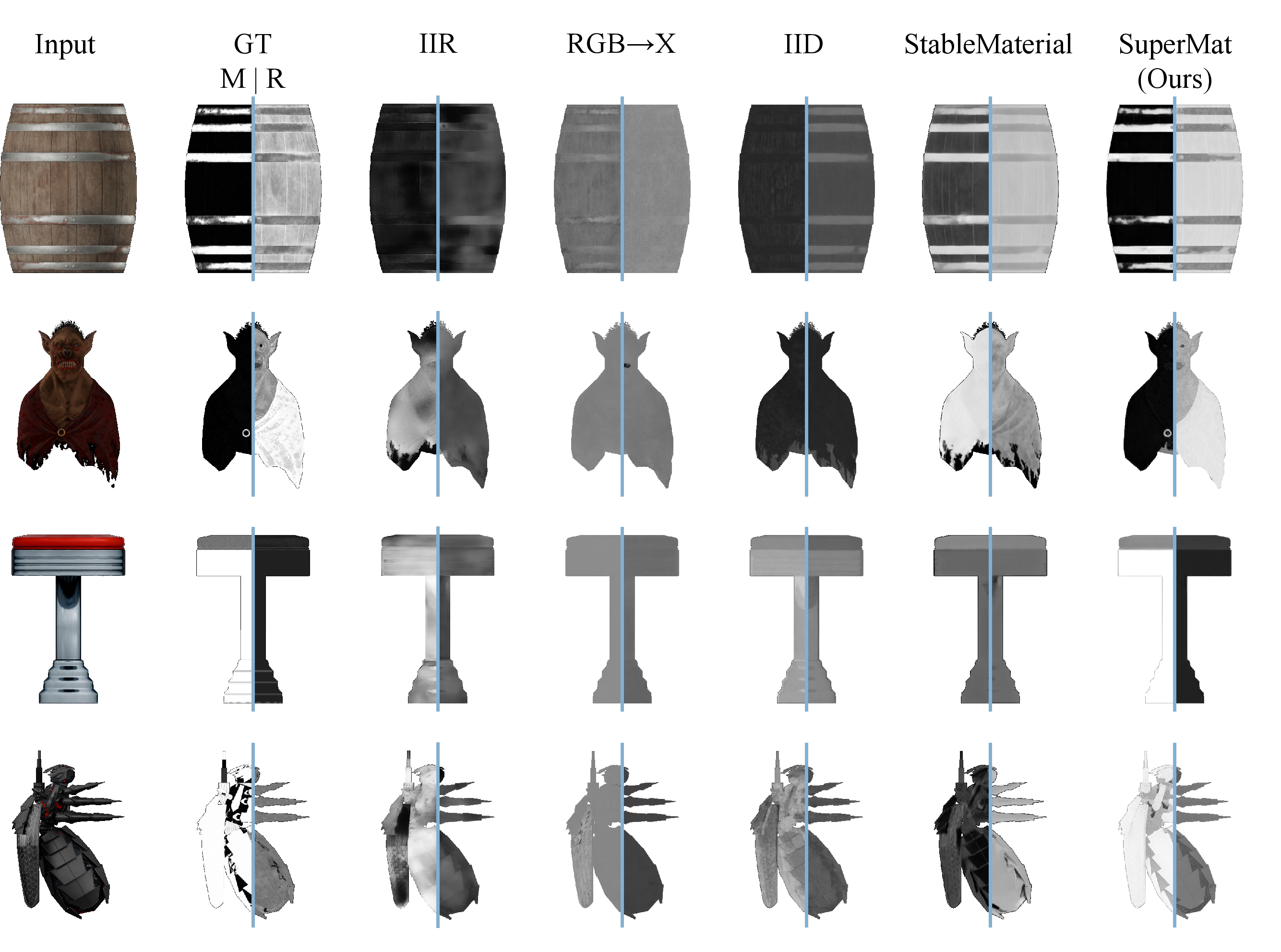

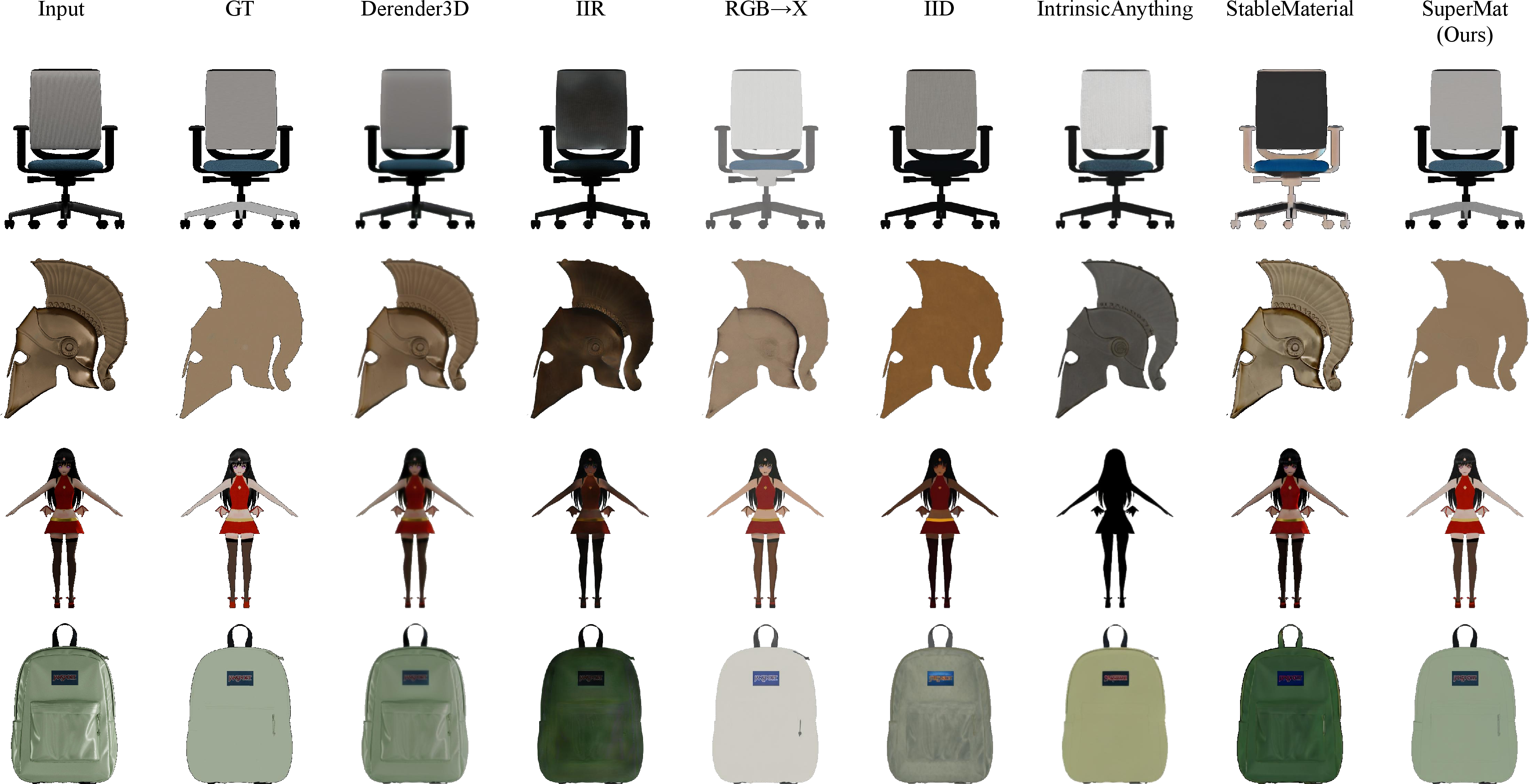

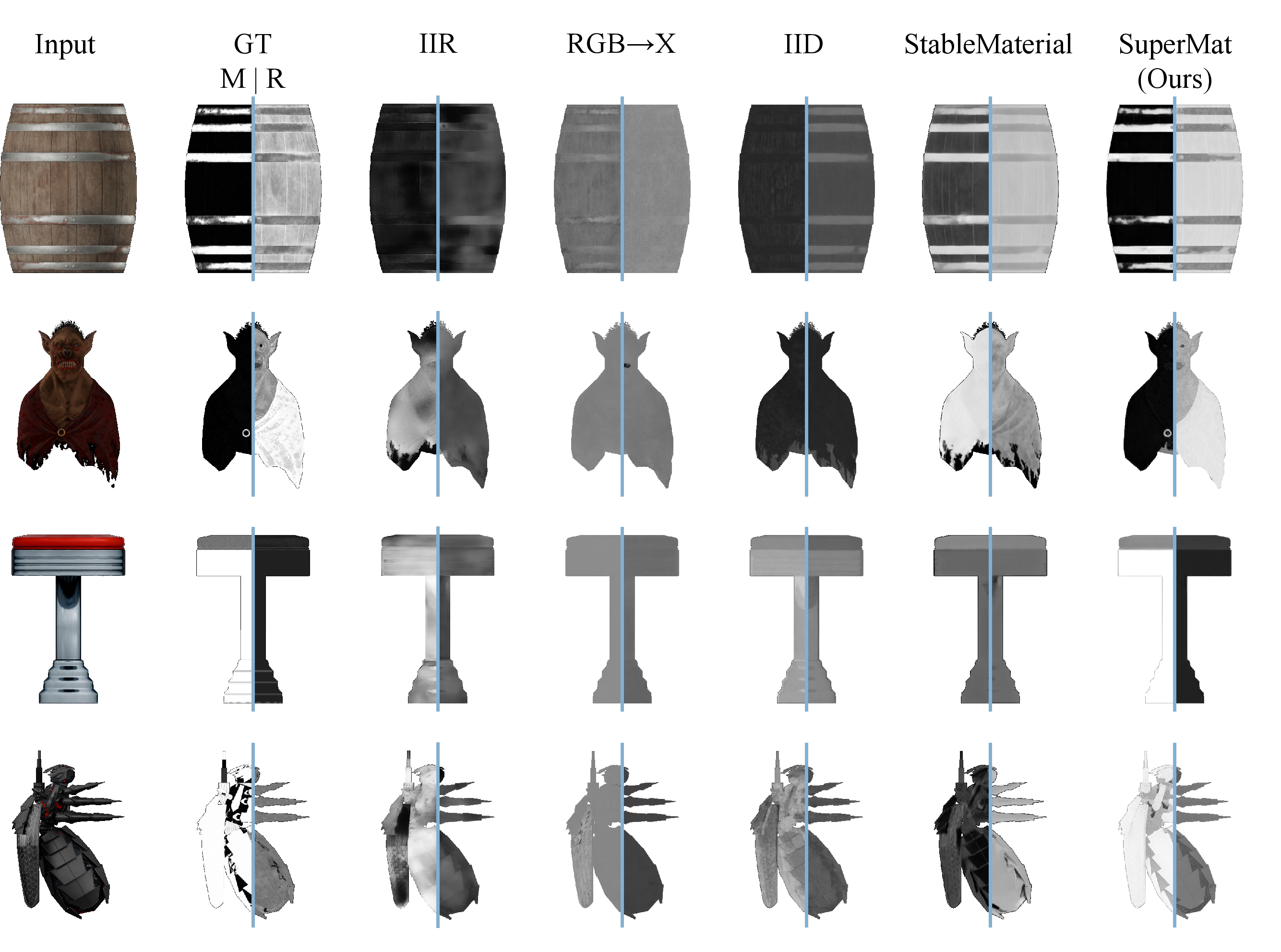

Quantitative tests show SuperMat outperforming existing diffusion-based approaches at decomposing PBR materials. It achieves state-of-the-art results on albedo, metallic, and roughness estimation, as measured by metrics such as PSNR and SSIM.
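For readers unfamiliar with the metrics, PSNR can be computed from the mean squared error between a predicted map and its ground truth. The sketch below uses the standard definition on flat lists of pixel values in [0, 1]; the function name and the list-based interface are illustrative and are not taken from the paper's evaluation code.

```python
import math


def psnr(pred, target, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(pred) != len(target) or not pred:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical inputs: no error to measure
    return 10.0 * math.log10(max_value ** 2 / mse)


# Higher is better: a prediction closer to the target scores a higher PSNR.
close = psnr([0.5, 0.5], [0.5, 0.25])
far = psnr([0.0, 0.0], [0.5, 0.5])
```

Because PSNR is logarithmic in the error, small absolute gains at high PSNR correspond to proportionally large reductions in mean squared error.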

Figure 3: Comparison of our method with others on albedo estimation results.

Figure 4: Comparison of our method with others on metallic and roughness estimation results.

SuperMat's inference time of milliseconds per image, together with the physical consistency of its outputs, indicates potential for real-time applications in film, gaming, and virtual/augmented reality.

Conclusion

SuperMat combines efficient computation with high-fidelity outputs while maintaining physical consistency. It applies to 3D objects as well as single images, which makes it relevant to a range of digital production scenarios. Future work could further optimize the UV refinement network and extend the method to a broader range of digital assets. Taken together, these pieces move toward real-time material estimation across diverse visual environments.