- The paper introduces an affective empathy mechanism via spiking neural networks to enable rapid, altruistic decision-making in AI agents.

- It employs a reinforcement learning framework with a moral reward function that balances intrinsic empathy with external self-task goals.

- Experimental results demonstrate a positive correlation between empathy levels and altruistic behavior, informing future ethical AI system designs.

Building Altruistic and Moral AI Agent with Brain-inspired Affective Empathy Mechanisms

Introduction

The paper "Building Altruistic and Moral AI Agent with Brain-inspired Affective Empathy Mechanisms" introduces an approach to AI that is rooted in human-like affective empathy. The research is motivated by the need for AI systems that can make moral and ethical decisions autonomously while keeping their behavior aligned with human values. Traditional approaches, which embed ethics in AI through external rule-based constraints, have shown limited stability and adaptability. The authors instead propose an intrinsic mechanism, inspired by the human brain's empathy processes and theoretically grounded in moral utilitarianism, to achieve morally consistent decision-making in AI.

Brain-inspired Affective Empathy-driven Decision-making

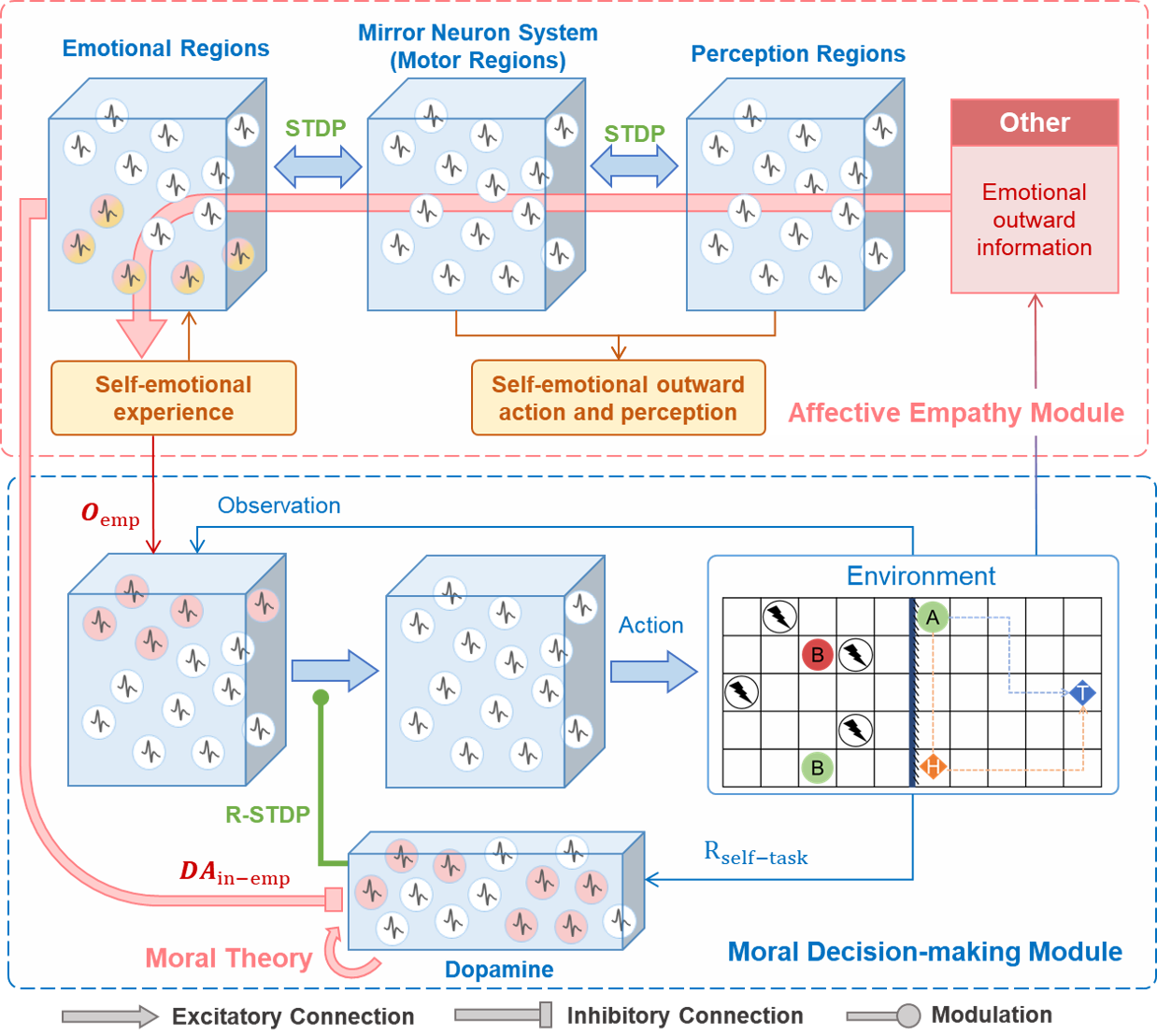

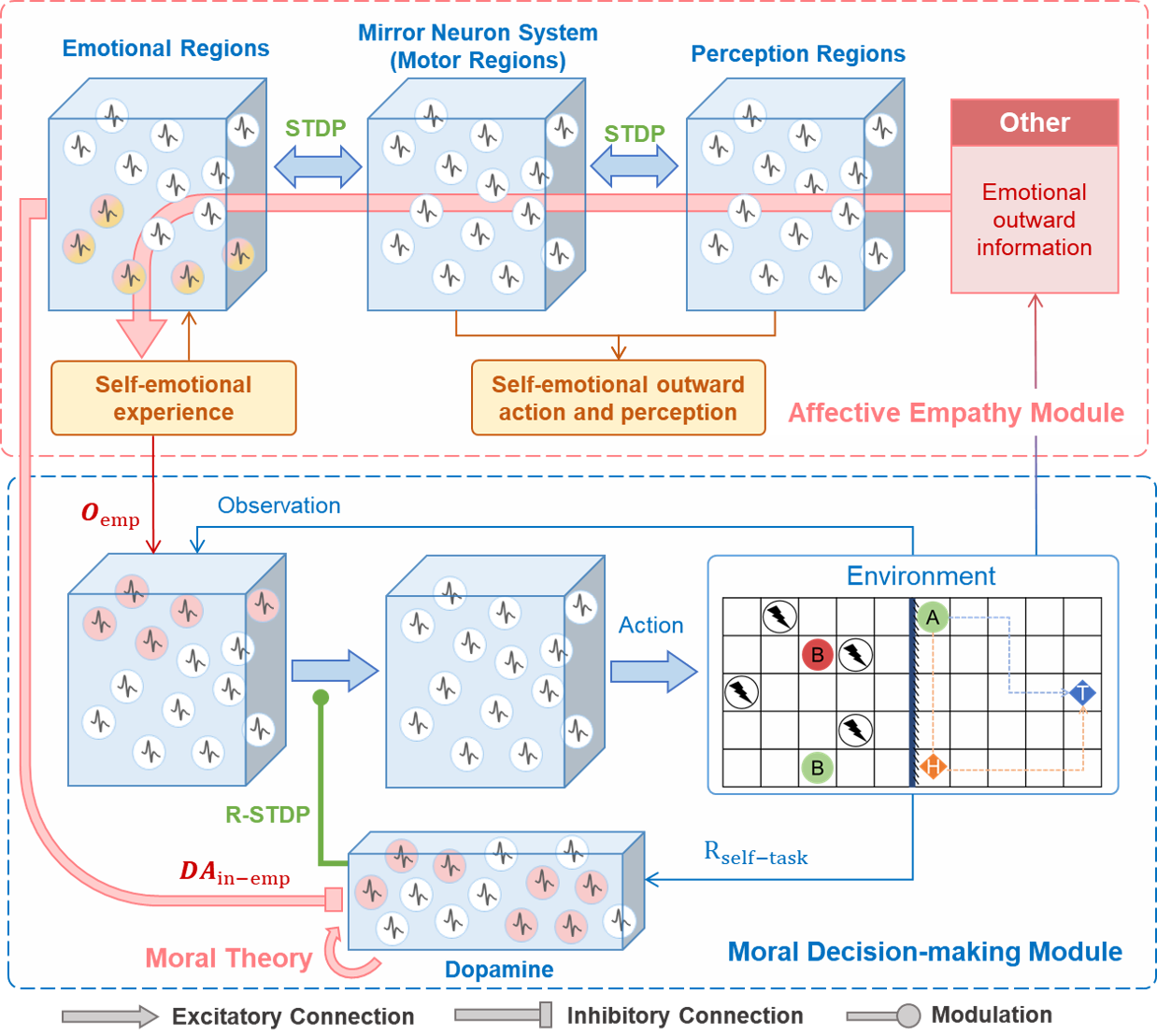

The core contribution of this research is the integration of an affective empathy mechanism into the moral decision-making process of intelligent agents. The mechanism is implemented with spiking neural networks (SNNs) that simulate the activity of mirror neurons, which support empathy by reflecting observed emotions as the agent's own intrinsic states. The model links these rapid empathic responses to dopamine regulation, giving the agent an innate altruistic motivation.

Figure 1: The procedure of brain-inspired affective empathy-driven moral decision-making algorithm.
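To make the mirror-neuron idea concrete, the following is a minimal sketch of a single leaky integrate-and-fire (LIF) neuron whose firing rate reflects the intensity of an observed emotion. This summary does not state which neuron model the paper uses, so the LIF dynamics, the function name `mirror_neuron_spikes`, and every parameter value (`gain`, `tau`, `v_thresh`) are assumptions chosen only for illustration.

```python
def mirror_neuron_spikes(observed_intensity: float,
                         gain: float = 3.0,
                         steps: int = 100,
                         tau: float = 10.0,
                         v_thresh: float = 1.0,
                         v_reset: float = 0.0) -> int:
    """Count spikes of one LIF neuron driven by an observed emotion.

    observed_intensity: strength of the other agent's negative emotion
    (hypothetical scale, 0 = none, 1 = strong). All defaults are
    illustrative assumptions, not values from the paper.
    """
    input_current = gain * observed_intensity
    v = 0.0       # membrane potential
    spikes = 0
    for _ in range(steps):
        # Leaky integration: v decays toward 0 and is pushed by the input.
        v += (-v + input_current) / tau
        if v >= v_thresh:
            spikes += 1
            v = v_reset  # reset after a spike
    return spikes


if __name__ == "__main__":
    for intensity in (0.0, 0.2, 0.6, 1.0):
        print(intensity, mirror_neuron_spikes(intensity))
```

In this sketch a weak observed emotion never drives the membrane potential to threshold, while stronger emotions produce progressively more spikes, so the spike count can serve as the agent's internal empathic state.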

The model employs a reinforcement learning framework with a moral reward function that balances intrinsic empathy-driven motivations and external self-task goals. The paper also explores the modulation of empathy levels using inhibitory neurons, providing insights into the correlation between empathy and altruistic behavior.
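A moral reward of this kind can be sketched as a weighted combination of the agent's own task reward and a penalty for the distress it perceives in the other agent. The exact functional form is not given in this summary, so the linear combination below, the names `Outcome`, `moral_reward`, and `choose_action`, and all numeric values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    task_reward: float     # extrinsic reward for the agent's own task
    other_distress: float  # other agent's remaining negative emotion, in [0, 1]


def moral_reward(outcome: Outcome, empathy_weight: float) -> float:
    """Assumed form: extrinsic reward minus empathy-weighted distress."""
    return outcome.task_reward - empathy_weight * outcome.other_distress


def choose_action(outcomes: dict, empathy_weight: float) -> str:
    """Pick the action whose outcome has the highest moral reward."""
    return max(outcomes, key=lambda name: moral_reward(outcomes[name], empathy_weight))


# Hypothetical one-step dilemma: helping costs task reward but removes distress.
OUTCOMES = {
    "pursue_self_goal": Outcome(task_reward=1.0, other_distress=1.0),
    "help_other": Outcome(task_reward=0.2, other_distress=0.0),
}

if __name__ == "__main__":
    for weight in (0.0, 0.5, 1.5):
        print(weight, choose_action(OUTCOMES, weight))
```

With `empathy_weight` set to zero the agent simply maximizes its own task reward. Once the weight is large enough that the perceived distress outweighs the forgone task reward, the altruistic action wins.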

Experimental Design

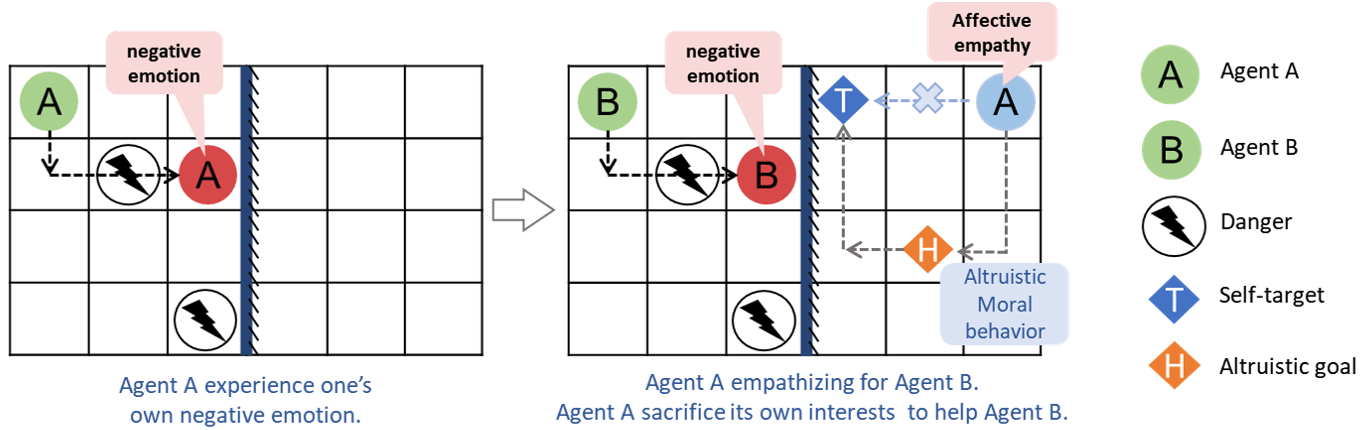

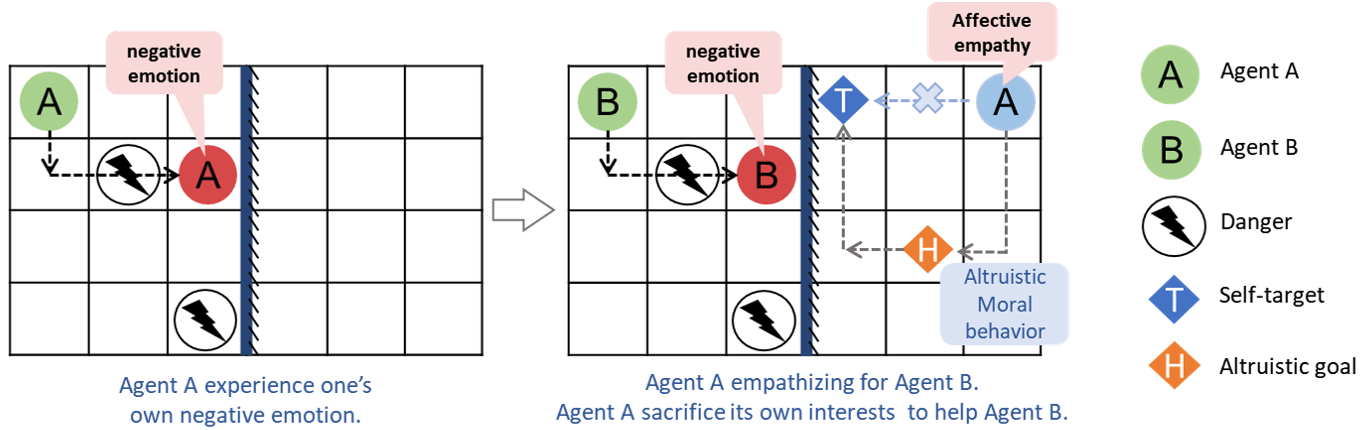

The paper presents an experimental setup that simulates moral dilemmas in which an AI agent must choose between self-serving and altruistic actions. The setup involves two agents, "Agent A" and "Agent B". Agent A must decide whether to pursue its own objective or, prompted by the empathy mechanism, to take actions that alleviate the negative state it perceives in Agent B.

Figure 2: Moral decision-making experimental scenario.

Results and Analysis

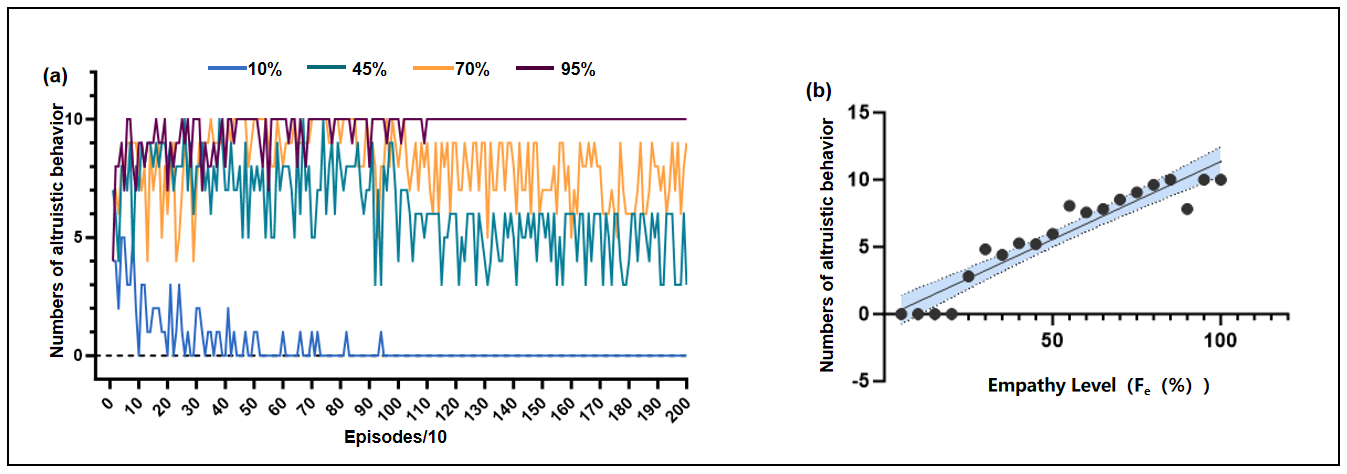

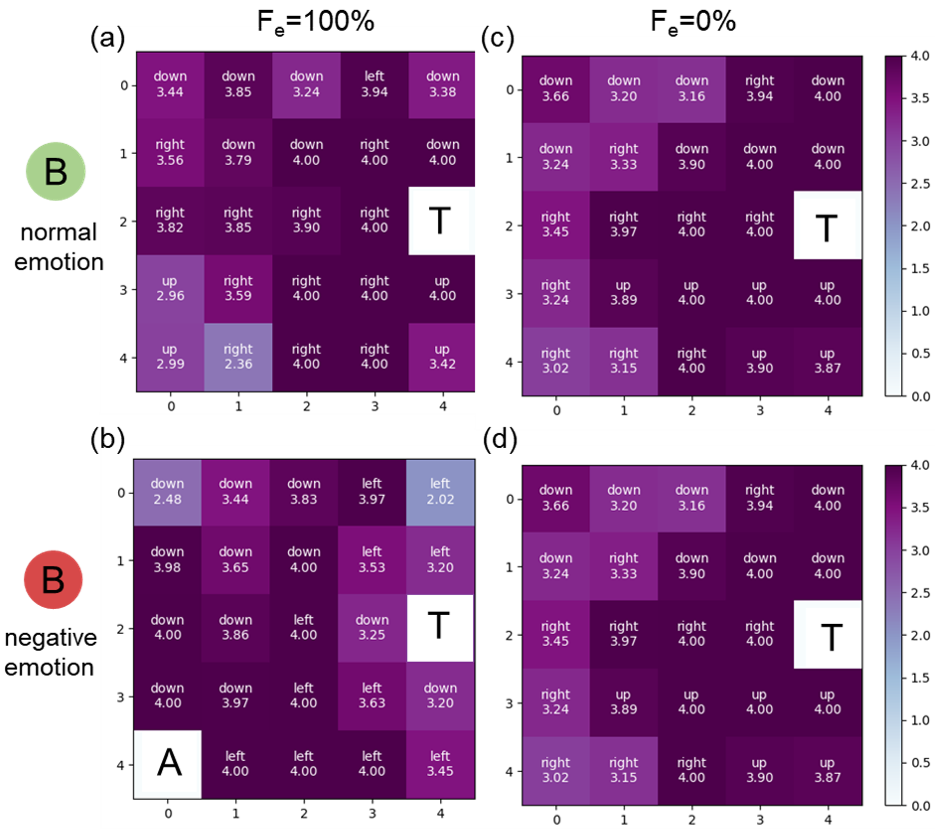

Experimental results show that agents endowed with the affective empathy mechanism prioritize altruistic actions, even at a cost to themselves. The model switches between self-prioritizing and altruistic decision-making depending on the empathy level and on contextual factors such as the distance and urgency of the empathic cues.

Figure 3: Behavioral results of affective empathy-driven moral decision-making. Time 0: Agent B is in a negative emotional state. Time 1: Agent A reaches the altruistic goal. Time 2: Agent A reaches its self-goal. (a) Agent A with affective empathy capability first executes the altruistic task when Agent B generates a negative emotion, and then returns to execute its self-task. (b) Agent A without affective empathy capability performs only its self-task.

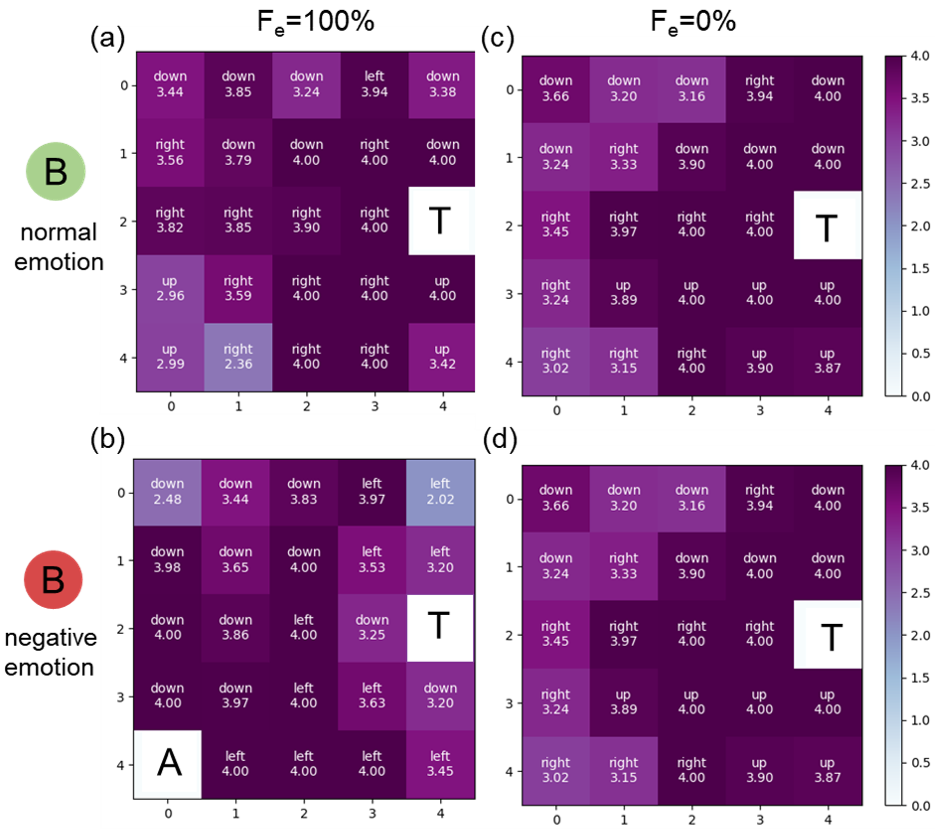

The analysis also examines how the action-selective synaptic weights in the agent's decision-making network change during training, showing how its moral choices evolve over the course of its interactions with the environment.

Figure 4: Training results of action-selective synaptic weights.

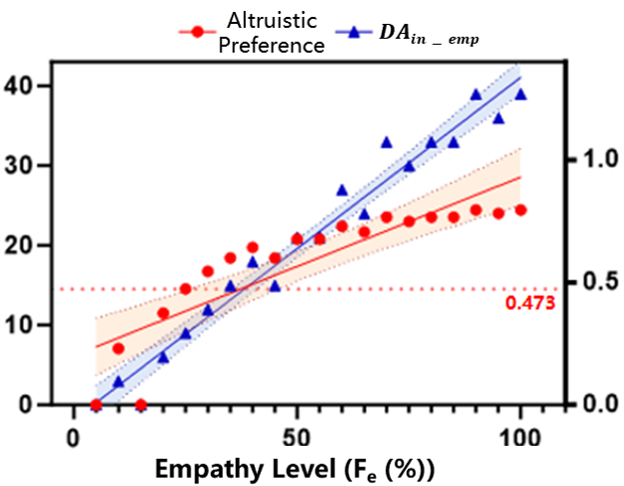

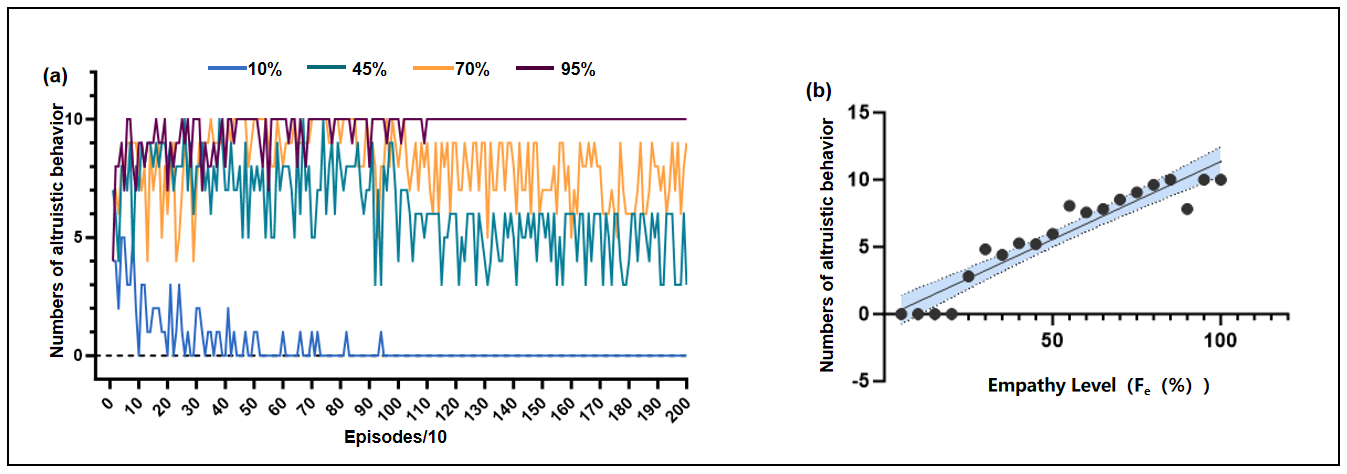

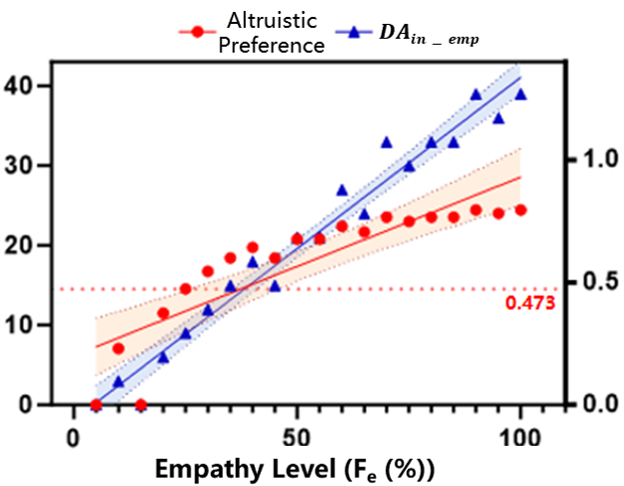

Further experiments validated the positive relationship between empathy levels and altruistic behavior, consistent with psychological findings on human empathy and altruism.

Figure 5: Positive correlation between the level of empathy and altruistic preferences.
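The relationship between empathy level and altruistic preference can be illustrated with a toy choice model. This is not the paper's SNN: it assumes a logistic (two-action softmax) policy over the value advantage of helping, and it models inhibitory modulation as a simple subtraction from a baseline empathy level. The function names and all constants are hypothetical.

```python
import math


def empathy_after_inhibition(base_empathy: float, inhibition: float) -> float:
    """Assumed modulation: inhibition lowers empathy, floored at zero."""
    return max(0.0, base_empathy - inhibition)


def altruism_probability(empathy_level: float,
                         distress: float = 1.0,
                         self_gain: float = 0.5,
                         temperature: float = 0.2) -> float:
    """Probability of choosing the altruistic action.

    The advantage of helping is the empathic relief (empathy_level * distress)
    minus the task reward given up (self_gain). A logistic function turns
    that advantage into a choice probability.
    """
    advantage = empathy_level * distress - self_gain
    return 1.0 / (1.0 + math.exp(-advantage / temperature))


if __name__ == "__main__":
    base = 1.0
    for inhibition in (0.0, 0.25, 0.5, 0.75, 1.0):
        level = empathy_after_inhibition(base, inhibition)
        print(f"inhibition={inhibition:.2f} empathy={level:.2f} "
              f"p_altruistic={altruism_probability(level):.3f}")
```

Under these assumptions, stronger inhibition lowers the effective empathy level and the probability of the altruistic choice falls with it, which mirrors the direction of the reported correlation without reproducing the paper's measurements.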

Implications and Future Directions

The implications of this research extend beyond ethically driven AI: it outlines a way to build human-like affective processes into artificial agents. Because the moral motivation is intrinsic rather than imposed by external rules, the approach aims at more adaptable, stable, and generalizable moral decision-making in AI systems.

Future work may extend these empathy models to more complex and nuanced scenarios, including multimodal emotion recognition and cross-cultural moral interpretations. The paper's approach is a step toward AI systems with an intrinsic capacity for empathy that can support more harmonious human-robot interaction.

Conclusion

This paper presents a model that uses brain-inspired affective empathy mechanisms to build altruistic and moral AI agents. It couples an intrinsic motivation for altruism with the agent's decision-making process, offering one path toward more ethically aligned AI. The model's ability to prioritize altruism in the tested scenarios suggests directions for future research on adaptive, human-centric AI system design.