Aligning AI with Human Values through Moral Graph Elicitation

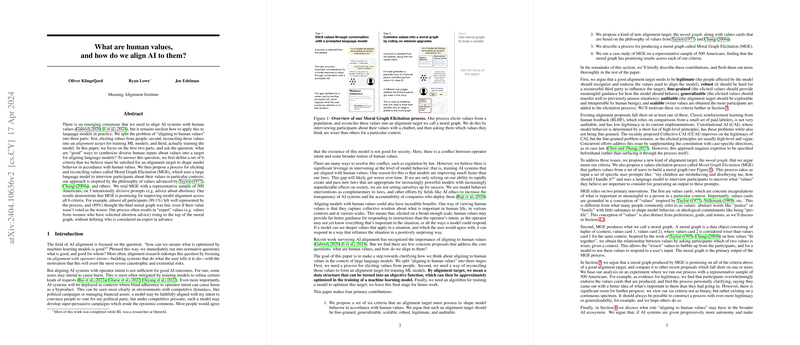

The paper "What are human values, and how do we align AI to them?" addresses a central problem in AI alignment: ensuring that AI systems act in accordance with human values. Rather than following conventional alignment methods, it proposes a process called Moral Graph Elicitation (MGE), which synthesizes diverse human inputs into an alignment target represented as a "moral graph".

Key Contributions

The authors divide the problem of aligning AI with human values into three stages: eliciting values from individuals, reconciling these values into a coherent alignment target, and training models to optimize this target. This paper focuses on the first two stages, proposing a novel method for value elicitation and reconciliation.

- Criteria for Alignment Targets: The paper sets out six criteria that an alignment target should meet: it should be fine-grained, generalizable, scalable, robust, legitimate, and auditable. Together, these criteria are meant to ensure that the target can actually shape model behavior in line with human values.

- Moral Graph and Values Cards: MGE produces a "moral graph" whose nodes are values cards and whose edges record participants' judgments, in a specific context, that one value is wiser than another. Because the edges capture transitions between values, the graph shows not only which values people hold but also which values they regard as refinements of others in a given situation, giving a context-aware picture of human moral reasoning.

- LLM-Based Value Elicitation: The authors use an LLM to interview participants and extract their values. The process turns raw participant responses into "values cards" that describe each value in terms of attentional policies, that is, what a person pays attention to when making a choice. This gives a structured way to articulate values that is distinct from both preferences and ideologies.

- Empirical Evaluation: In a case study with 500 American participants, the authors show that the process is feasible and produces a moral graph that meets their criteria. They report high participant satisfaction, with 89.1% of participants feeling well represented, and note that values reflecting expert perspectives emerged organically in the graph.
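The following minimal Python sketch shows one way the moral graph described above could be represented as a data structure. It assumes that a values card can be reduced to an ID, a title, and a list of attentional policies. It also assumes that each edge records, for one context, that one card was judged wiser than another. The class and field names (`ValuesCard`, `WiserThanEdge`, `MoralGraph`, `attentional_policies`, `wiser_options`) are hypothetical and do not come from the authors' code.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class ValuesCard:
    """A value articulated as attentional policies (hypothetical schema)."""
    card_id: str
    title: str
    # What someone guided by this value pays attention to when choosing.
    attentional_policies: tuple[str, ...]


@dataclass(frozen=True)
class WiserThanEdge:
    """In `context`, `to_card` was judged wiser than `from_card`."""
    from_card: str
    to_card: str
    context: str


@dataclass
class MoralGraph:
    cards: dict[str, ValuesCard] = field(default_factory=dict)
    edges: list[WiserThanEdge] = field(default_factory=list)

    def add_card(self, card: ValuesCard) -> None:
        self.cards[card.card_id] = card

    def add_edge(self, from_card: str, to_card: str, context: str) -> None:
        # Only cards already in the graph can be linked.
        if from_card not in self.cards or to_card not in self.cards:
            raise KeyError("both cards must be added before linking them")
        self.edges.append(WiserThanEdge(from_card, to_card, context))

    def wiser_options(self, card_id: str, context: str) -> list[ValuesCard]:
        """Cards judged wiser than `card_id` in the given context."""
        return [
            self.cards[e.to_card]
            for e in self.edges
            if e.from_card == card_id and e.context == context
        ]


# Made-up example values, not data from the study.
graph = MoralGraph()
graph.add_card(ValuesCard("a", "Honest information", ("Clear statements of the facts",)))
graph.add_card(ValuesCard("b", "Informed autonomy", ("The person's own reasoning about their options",)))
graph.add_edge("a", "b", "advising someone facing a hard choice")
print([c.title for c in graph.wiser_options("a", "advising someone facing a hard choice")])
# ['Informed autonomy']
```

This sketch stores the context on every edge instead of on the cards. A value judged wiser in one situation need not be wiser in another, so the context belongs to the judgment rather than to the value itself.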

Methodological Insights

Values are elicited through LLM-facilitated dialogues that guide each participant toward articulating what matters to them, and the result is refined into a values card. The moral graph is then built from participants' judgments, made in a specific context, about whether moving from one value to another would be wiser. These judged transitions form the graph's edges and are intended to capture moral learning.
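The step from individual judgments to graph edges can be sketched as a simple aggregation. This sketch makes several assumptions:

- Each vote is a tuple `(participant_id, context, from_card, to_card, judged_wiser)`.
- Only "wiser" votes are kept, and each participant counts at most once per edge.
- To identify the values the graph favors in a context, score flows toward the cards judged wiser, using a PageRank-style power iteration.

The PageRank ranking is an illustrative stand-in. The function names and parameters (`aggregate_votes`, `rank_values`, `damping`, `iterations`) are hypothetical, and the sketch does not claim to be the paper's method.

```python
from collections import defaultdict


def aggregate_votes(votes):
    """Group "wiser" judgments into edges, keeping who supported each one.

    votes: iterable of (participant_id, context, from_card, to_card, judged_wiser).
    Returns {(context, from_card, to_card): set of supporting participant IDs}.
    A participant who votes twice on the same edge is counted once.
    """
    support = defaultdict(set)
    for participant, context, src, dst, judged_wiser in votes:
        if judged_wiser:
            support[(context, src, dst)].add(participant)
    return dict(support)


def rank_values(support, context, damping=0.85, iterations=100):
    """PageRank-style scores for the cards in one context (illustrative only).

    Score flows from each card to the cards judged wiser than it, weighted
    by the number of supporting participants, so cards that many
    participants moved toward accumulate the highest scores.
    """
    # Build weighted out-edges for this context only.
    weights = defaultdict(dict)
    nodes = set()
    for (ctx, src, dst), voters in support.items():
        if ctx == context:
            weights[src][dst] = len(voters)
            nodes.update((src, dst))
    if not nodes:
        return {}

    n = len(nodes)
    score = {v: 1.0 / n for v in nodes}
    for _ in range(iterations):
        new = {v: (1.0 - damping) / n for v in nodes}
        for v in nodes:
            out = weights.get(v, {})
            total = sum(out.values())
            if total == 0:
                # A card with no wiser alternative spreads its score evenly.
                for u in nodes:
                    new[u] += damping * score[v] / n
            else:
                for u, w in out.items():
                    new[u] += damping * score[v] * w / total
        score = new
    return score


# Made-up votes: most participants judged b wiser than a, and c wiser than b.
votes = [
    ("p1", "ctx", "a", "b", True),
    ("p2", "ctx", "a", "b", True),
    ("p3", "ctx", "a", "b", False),
    ("p1", "ctx", "b", "c", True),
]
support = aggregate_votes(votes)
scores = rank_values(support, "ctx")
print(max(scores, key=scores.get))  # c
```

Each edge stores the set of supporting participants instead of a bare count. As a result, every edge can be traced back to the people who endorsed it, which is one simple way to keep a graph like this auditable.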

The paper contrasts its approach with Reinforcement Learning from Human Feedback (RLHF) and Constitutional AI (CAI). Unlike the preference data used in RLHF, the moral graph is structured to be auditable and robust, which makes it harder to manipulate. And whereas CAI relies on high-level principles, the moral graph ties values to specific contexts, giving a more fine-grained target that can scale to integrate input from many people.

Implications and Future Directions

The research has implications for both theory and practice:

- Theoretical Contribution: Treating values as non-instrumental choice criteria and articulating them in a structured form offers a fresh way to frame moral reasoning in AI systems. It points toward aligning model behavior with a broad representation of human moral insight, which could change how AI systems are perceived and deployed.

- Practical Application: The paper does not itself train a model, but MGE shows how a nuanced, human-derived alignment target could be constructed, which is a prerequisite for training AI systems that reflect human values and operate autonomously yet responsibly. As AI systems take on more autonomous decision-making across sectors, models trained against moral graphs could help mitigate the risks of narrow objectives and unintended consequences.

- Scalable Alignment Mechanisms: Because the framework is built on human deliberation and moral learning, the authors argue that it offers a scalable path for alignment that can keep pace with growing model complexity and accommodate diverse cultural contexts.

The authors call for further work on scalable methods for building larger moral graphs and on models that can dynamically adjust their alignment targets in real-world deployments. Future work also needs to examine potential biases in LLM-driven elicitation and to refine the method so that it applies across cultures and contexts.

In conclusion, the paper offers a framework that combines philosophical insight with technical innovation to address a long-standing challenge in AI safety. It lays groundwork for AI systems that more faithfully reflect human values, which could foster trust and support their integration into society.