- The paper introduces shapiq, a Python package that integrates state-of-the-art approximation algorithms to efficiently compute Shapley Values and Shapley Interactions in ML.

- It details both exact and approximate computation methods, benchmarked using metrics like MSE and Precision@5 to validate performance and trade-offs.

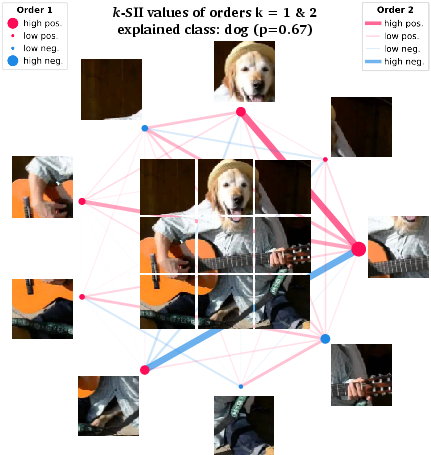

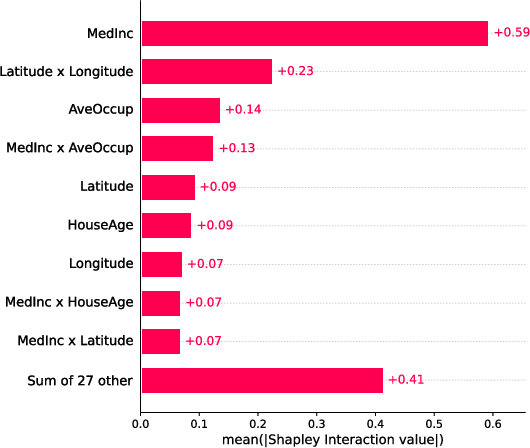

- The toolkit enhances model explainability by quantifying joint feature contributions, supporting advanced applications of cooperative game theory in AI.

shapiq: Shapley Interactions for Machine Learning

Introduction

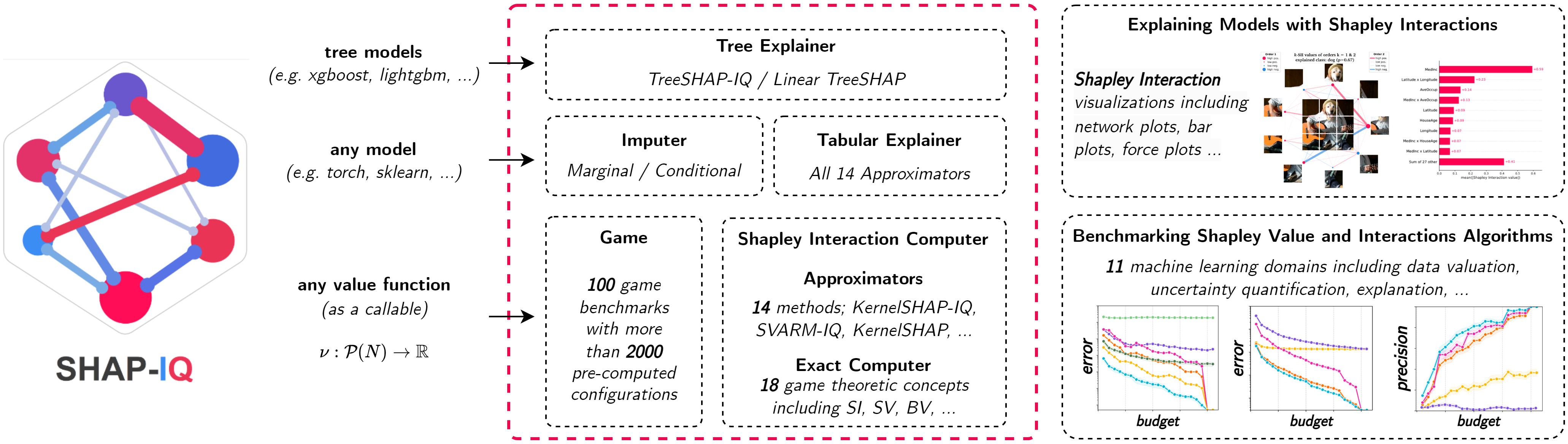

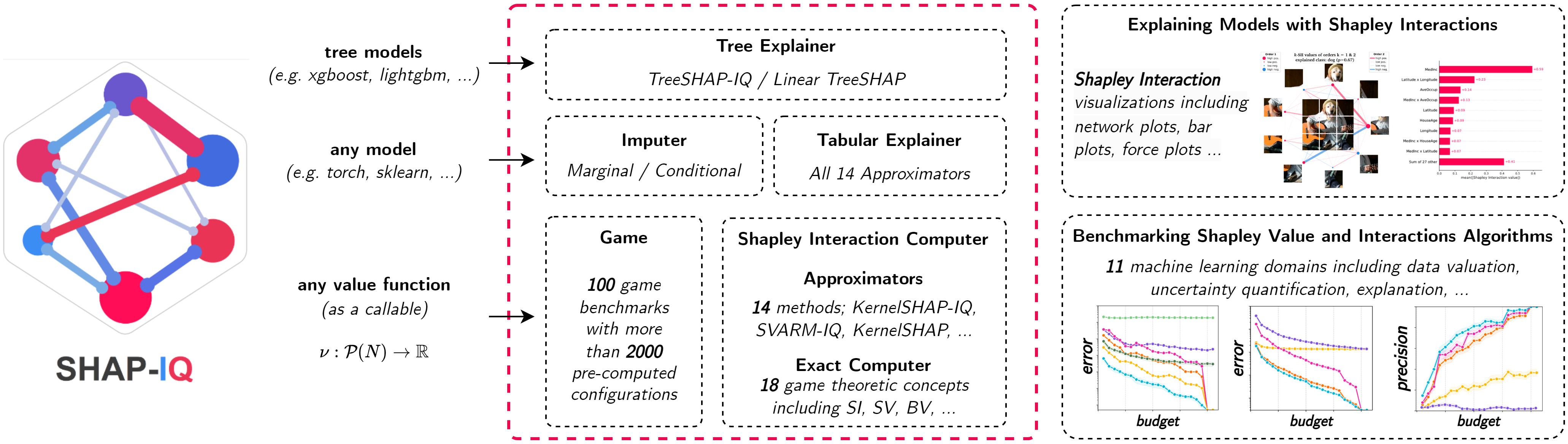

The paper "shapiq: Shapley Interactions for Machine Learning" presents shapiq, an open-source Python package for computing Shapley Values (SVs) and Shapley Interactions (SIs) efficiently and in an application-agnostic way. SVs are well established in Explainable AI (XAI) for feature attribution and data valuation, but computing them exactly requires evaluating a value function on exponentially many subsets of features, which often limits their practical use. Shapiq addresses this cost by integrating state-of-the-art approximation algorithms and providing tools for applying them to ML models. The paper argues that SIs, which capture the joint contributions of feature groups, give a richer understanding of complex ML models than per-feature attributions alone.

Figure 1: The shapiq Python package facilitates research on game theory for machine learning, including state-of-the-art approximation algorithms and pre-computed benchmarks. Moreover, it provides a simple interface for explaining predictions of machine learning models beyond feature attributions.

Theoretical Background

SVs are a central concept in cooperative game theory: the SV is the unique way to allocate a game's total payoff among its players that satisfies the axioms of linearity, symmetry, dummy, and efficiency. In ML, SVs are used to distribute a model's output fairly among its input features. SIs extend this idea by assigning values to groups of features, which is needed to capture synergies and redundancies among features in complex models.
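For reference, the standard textbook definitions are given below for a game $\nu$ over the player set $N = \{1, \dots, n\}$. The Shapley Interaction Index (SII) shown here is one common way to define an SI; this summary does not state which interaction indices shapiq implements, so treat the choice of SII as illustrative.

```latex
% Shapley value of player i: weighted average of marginal contributions
\phi_i = \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,(n - |S| - 1)!}{n!}\,\bigl[\nu(S \cup \{i\}) - \nu(S)\bigr],
\qquad \sum_{i \in N} \phi_i = \nu(N) - \nu(\emptyset) \quad \text{(efficiency)}

% Shapley Interaction Index of a feature set K, using the discrete derivative \Delta_K
\mathrm{SII}(K) = \sum_{S \subseteq N \setminus K} \frac{|S|!\,(n - |S| - |K|)!}{(n - |K| + 1)!}\,\Delta_K(S),
\qquad \Delta_K(S) = \sum_{L \subseteq K} (-1)^{|K| - |L|}\,\nu(S \cup L)
```

Setting $K = \{i\}$ recovers $\phi_i$; for a pair $K = \{i, j\}$ the derivative is $\nu(S \cup \{i, j\}) - \nu(S \cup \{i\}) - \nu(S \cup \{j\}) + \nu(S)$, i.e. the joint gain beyond the two individual gains.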

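The computational challenge is that exact SVs or SIs require evaluating the game on every coalition of players, and the number of coalitions grows as 2^n in the number of players n. Shapiq addresses this by exploiting structural properties of specific model classes (such as tree-based models) and by using stochastic approximations that estimate the values from a limited budget of game evaluations.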
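To make the exact-versus-approximate trade-off concrete, here is a minimal from-scratch sketch. It is not shapiq's API: `exact_shapley`, `permutation_shapley`, and `toy_game` are hypothetical names chosen for illustration. The exact routine enumerates every coalition (on the order of n·2^n game evaluations), while permutation sampling, a classic stochastic approximator, averages marginal contributions along random player orderings and costs `num_permutations * (n + 1)` evaluations.

```python
import itertools
import math
import random
from typing import Callable, FrozenSet, List

# A cooperative game maps a coalition (frozenset of player indices) to a real value.
Game = Callable[[FrozenSet[int]], float]


def exact_shapley(game: Game, n: int) -> List[float]:
    """Exact Shapley values by enumerating all coalitions that exclude each player."""
    values = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # The Shapley weight |S|! (n - |S| - 1)! / n! depends only on |S|.
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for subset in itertools.combinations(others, size):
                s = frozenset(subset)
                phi += weight * (game(s | {i}) - game(s))
        values.append(phi)
    return values


def permutation_shapley(game: Game, n: int, num_permutations: int, seed: int = 0) -> List[float]:
    """Monte Carlo estimate: average marginal contributions along random orderings."""
    rng = random.Random(seed)
    totals = [0.0] * n
    order = list(range(n))
    for _ in range(num_permutations):
        rng.shuffle(order)
        coalition: FrozenSet[int] = frozenset()
        prev = game(coalition)
        for player in order:
            # Add players one at a time and credit each with its marginal gain.
            coalition = coalition | {player}
            curr = game(coalition)
            totals[player] += curr - prev
            prev = curr
    return [t / num_permutations for t in totals]


# Hypothetical toy game: additive terms plus a synergy of 3.0 between players 0 and 1.
def toy_game(s: FrozenSet[int]) -> float:
    value = sum(w for j, w in enumerate([1.0, 2.0, 0.5, 0.0]) if j in s)
    if 0 in s and 1 in s:
        value += 3.0
    return value


if __name__ == "__main__":
    n = 4
    print("exact :", [round(v, 3) for v in exact_shapley(toy_game, n)])
    print("approx:", [round(v, 3) for v in permutation_shapley(toy_game, n, num_permutations=200)])
```

On this toy game the exact values are [2.5, 3.5, 0.5, 0.0]: players 0 and 1 split the synergy of 3.0 equally. Every sampled permutation distributes exactly v(N) − v(∅), so the estimates also satisfy efficiency; only the split between players 0 and 1 varies with the sample.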

Implementation of shapiq

Shapiq provides a single interface for computing SVs and SIs with exact and approximate methods, covering a wide range of algorithms suited to different ML applications.
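As an illustration of what an exact method for SIs has to do, the sketch below computes the pairwise Shapley Interaction Index by brute force. It is a from-scratch example, not shapiq's implementation; `pairwise_sii` and `toy_game` are hypothetical names, and the toy game (additive weights plus a synergy of 3.0 between players 0 and 1) is invented for illustration. Each pair requires 4·2^(n-2) game evaluations, which is why approximators become necessary as n grows.

```python
import itertools
import math
from typing import Callable, Dict, FrozenSet, Tuple

Game = Callable[[FrozenSet[int]], float]


def pairwise_sii(game: Game, n: int) -> Dict[Tuple[int, int], float]:
    """Exact pairwise Shapley Interaction Index (SII) by brute-force enumeration."""
    result: Dict[Tuple[int, int], float] = {}
    for i, j in itertools.combinations(range(n), 2):
        others = [k for k in range(n) if k not in (i, j)]
        total = 0.0
        for size in range(n - 1):
            # SII weight for |K| = 2: |S|! (n - |S| - 2)! / (n - 1)!
            weight = math.factorial(size) * math.factorial(n - size - 2) / math.factorial(n - 1)
            for subset in itertools.combinations(others, size):
                s = frozenset(subset)
                # Discrete second-order derivative: joint gain beyond the individual gains.
                delta = game(s | {i, j}) - game(s | {i}) - game(s | {j}) + game(s)
                total += weight * delta
        result[(i, j)] = total
    return result


# Hypothetical toy game: additive terms plus a synergy of 3.0 between players 0 and 1.
def toy_game(s: FrozenSet[int]) -> float:
    value = sum(w for j, w in enumerate([1.0, 2.0, 0.5, 0.0]) if j in s)
    if 0 in s and 1 in s:
        value += 3.0
    return value


if __name__ == "__main__":
    for pair, value in pairwise_sii(toy_game, 4).items():
        print(pair, round(value, 3))
```

For this game the interaction between players 0 and 1 is exactly 3.0 and every other pair is 0. The interaction value thus isolates the synergy that Shapley values alone would spread evenly across the two players.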

Benchmarking and Evaluation

The paper also provides a benchmarking suite within shapiq that evaluates the accuracy and cost of different approximators across multiple real-world ML scenarios, comparing their estimates against pre-computed values with metrics such as MSE and Precision@5.
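The two metrics can be sketched as follows. This is a minimal illustration under an assumed definition: Precision@k is taken to be the share of the k largest-magnitude ground-truth values whose indices also appear among the k largest-magnitude estimates, with ties broken by index order. Whether shapiq's benchmark uses exactly this definition and tie-breaking is not stated here, and `mse`, `precision_at_k`, and the sample vectors are hypothetical.

```python
from typing import Sequence, Set


def mse(estimates: Sequence[float], ground_truth: Sequence[float]) -> float:
    """Mean squared error between estimated and exact values."""
    if len(estimates) != len(ground_truth):
        raise ValueError("estimates and ground_truth must have the same length")
    return sum((e - g) ** 2 for e, g in zip(estimates, ground_truth)) / len(ground_truth)


def precision_at_k(estimates: Sequence[float], ground_truth: Sequence[float], k: int = 5) -> float:
    """Share of the k largest-magnitude true entries that also rank in the estimated top k."""

    def top_k(values: Sequence[float]) -> Set[int]:
        # Rank indices by absolute value; sorted() is stable, so ties keep index order.
        ranked = sorted(range(len(values)), key=lambda idx: abs(values[idx]), reverse=True)
        return set(ranked[:k])

    return len(top_k(estimates) & top_k(ground_truth)) / k


if __name__ == "__main__":
    # Hypothetical exact and approximated attribution vectors.
    truth = [0.9, -0.8, 0.1, 0.05, 0.6, 0.0, -0.3]
    est = [0.85, -0.7, 0.2, 0.0, 0.5, 0.1, -0.05]
    print("MSE:", mse(est, truth))
    print("Precision@5:", precision_at_k(est, truth, k=5))
```

In this example the estimate recovers 4 of the 5 most important ground-truth entries, so Precision@5 is 0.8. MSE rewards getting every value close, whereas Precision@k only asks whether the most important features or interactions are identified, which is often what an explanation needs.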

Implications and Future Directions

Shapiq makes SVs and SIs considerably more practical in ML by reducing their computational cost. Because the package is application-agnostic, researchers can apply the same game-theoretic tools across domains to better understand model behavior and how features interact.

Future work could further improve computational efficiency, for example through low-level optimizations or parallel computing. Extending shapiq's visualization and interpretability features could also support human-centric model evaluation, in line with the growing emphasis on transparency and accountability in AI systems.

Conclusion

By providing a comprehensive toolkit for SV and SI computation, shapiq is a valuable resource for XAI. It enables researchers to study complex feature attributions and interactions, and its benchmarks offer a common basis for evaluating current approximation methods across a variety of ML tasks. The package is likely to support further research on applications of cooperative game theory in ML, including new feature attribution methods.