- The paper outlines a systematic framework for integrating tools with LLMs, highlighting methods for understanding user intent and dynamic execution planning.

- It presents challenges such as tool invocation timing, selection accuracy, and error correction, emphasizing the need for robust reasoning processes.

- The study explores fine-tuning and in-context learning strategies, paving the way for LLMs to autonomously create and refine tools in future systems.

Introduction

Integrating tools with LLMs aims to improve the accuracy and efficiency of these models on complex, domain-specific tasks. This paper surveys the methodologies, challenges, and advances in equipping LLMs with tool-using capabilities. It establishes a structured paradigm for tool interaction built on three steps: understanding user intent, selecting appropriate tools, and dynamically adjusting execution plans, so that LLMs can act beyond their pre-existing knowledge. The survey highlights challenges such as tool invocation timing, selection accuracy, and reasoning robustness, and explores fine-tuning and in-context learning strategies to address them. It also considers enabling LLMs to create tools autonomously, which would extend their role from tool users to tool creators.

Integration Methodology

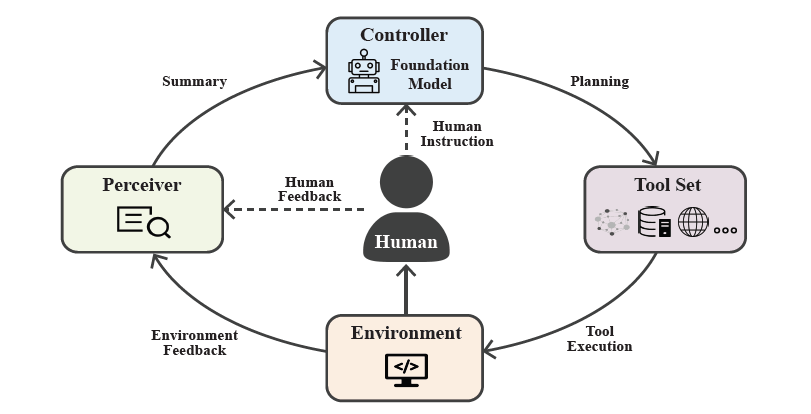

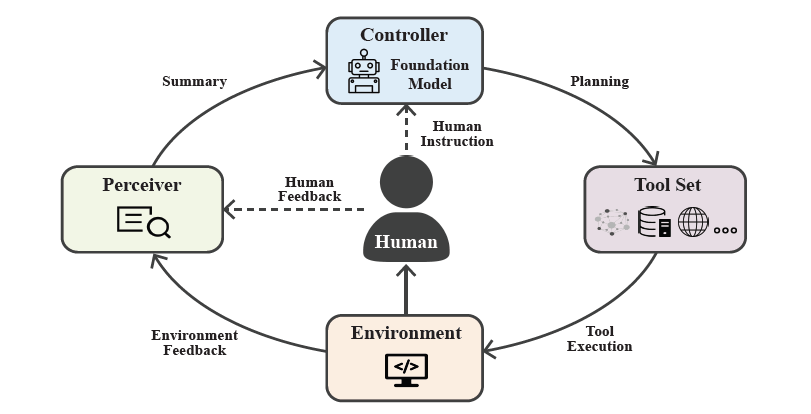

The integration of tools into LLMs involves a systematic framework starting with user instructions, translating these into executable plans, and executing them effectively. This process requires understanding user intentions, which involves accurately interpreting user directives to ensure subsequent steps are relevant. Furthermore, the model needs to understand the scope and limitations of available tools to determine their appropriate usage context. By breaking tasks into manageable subtasks, models can adjust flexibly during execution, incorporating real-time reasoning to adapt plans dynamically.

Figure 1: Whole process of LLM using tools.
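The flow from user instruction to plan to execution can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `Step` type, the `TOOLS` registry, and the rule-based `plan` function (a stand-in for the LLM planner) are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    tool: str      # name of the tool to call (hypothetical)
    argument: str  # input passed to the tool; "{prev}" is filled at run time


# Hypothetical tool registry; a real system would wrap external APIs.
TOOLS: Dict[str, Callable[[str], str]] = {
    "calculator": lambda expr: str(sum(float(x) for x in expr.split("+"))),
    "echo": lambda text: text,
}


def plan(instruction: str) -> List[Step]:
    """Stand-in for the LLM planner: break an instruction into subtasks."""
    if "+" in instruction:
        return [
            Step("calculator", instruction),
            Step("echo", "The sum is {prev}"),
        ]
    return [Step("echo", instruction)]


def execute(steps: List[Step]) -> str:
    """Run each subtask in order, feeding the previous result forward."""
    result = ""
    for step in steps:
        # Adjust at run time: fall back to "echo" if the planned tool is missing.
        tool = TOOLS.get(step.tool, TOOLS["echo"])
        result = tool(step.argument.replace("{prev}", result))
    return result
```

Calling `execute(plan("2 + 3"))` runs the calculator subtask first and then formats its result. In an actual system the planner and the fallback decision would both be made by the model rather than by fixed rules.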

Determining the optimal context and timing for invoking a tool is critical. Over-reliance on external tools when the model's inherent capabilities suffice can lead to inefficiencies and inaccuracies. Conversely, invoking tools is essential in scenarios requiring access to real-time data, complex calculations, or processing non-standard document formats.
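As a rough illustration of the invocation decision, the sketch below routes a query to a tool only when it shows one of the three signals named above (real-time data, calculation, non-standard document formats). The keyword rules and the tool names `search`, `calculator`, and `document_parser` are assumptions made for this example; in practice the model itself usually makes this decision.

```python
import re
from typing import Optional


def needs_tool(query: str) -> Optional[str]:
    """Return a hypothetical tool name, or None if the model can answer alone."""
    q = query.lower()
    # Real-time information cannot come from static model knowledge.
    if re.search(r"\b(today|now|current|latest)\b", q):
        return "search"
    # Explicit arithmetic is safer to delegate to a calculator.
    if re.search(r"\d+\s*[-+*/^]\s*\d+", q):
        return "calculator"
    # File references suggest a document-parsing tool.
    if re.search(r"\.(pdf|xlsx|docx)\b", q):
        return "document_parser"
    # Otherwise avoid the overhead of an unnecessary tool call.
    return None
```

Returning `None` for queries such as "Define photosynthesis" reflects the point that unnecessary tool calls add cost without improving the answer.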

Accurate tool selection is a pivotal challenge because tools differ in their performance characteristics. In complex, non-linear reasoning processes, every tool call must be precise, since an error in one call can propagate and compound through later steps.
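One simple way to picture tool selection is to score each tool's description against the query and pick the best match. The sketch below uses word-overlap (Jaccard) similarity as a deliberately simple stand-in; the tool names and descriptions are hypothetical, and practical systems typically rely on learned retrievers or on the model's own judgment instead.

```python
from typing import Dict, Optional, Set

# Hypothetical tool descriptions, invented for this example.
TOOL_DESCRIPTIONS: Dict[str, str] = {
    "calculator": "evaluate arithmetic expressions and numeric calculations",
    "weather": "look up the current weather forecast for a city",
    "translator": "translate text between languages",
}


def tokenize(text: str) -> Set[str]:
    """Lowercase and split on whitespace."""
    return set(text.lower().split())


def select_tool(query: str,
                tools: Dict[str, str] = TOOL_DESCRIPTIONS) -> Optional[str]:
    """Pick the tool whose description overlaps most with the query."""
    q = tokenize(query)
    scores = {}
    for name, description in tools.items():
        d = tokenize(description)
        scores[name] = len(q & d) / len(q | d)  # Jaccard similarity
    best = max(scores, key=scores.get)
    # Refuse to select anything when no description overlaps at all.
    return best if scores[best] > 0 else None
```

Returning `None` when nothing matches avoids forcing a poor tool choice, which matters because a wrong call early in a reasoning chain can corrupt every later step.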

Robustness and Efficiency

Maintaining the robustness of the reasoning process is crucial, particularly as task complexity grows. Error detection and correction mechanisms are needed so that a mistake in one inference step is caught before it propagates to later steps. Time efficiency also matters, since every tool invocation adds latency to the pipeline.
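A minimal sketch of error detection and correction, under the assumption that each tool output can be checked by a validation function: failed or invalid calls fall through to the next candidate tool, and a call budget bounds the added latency. The function `call_with_recovery` and the three example tools are hypothetical.

```python
from typing import Callable, List, Optional


def call_with_recovery(tools: List[Callable[[str], str]],
                       argument: str,
                       validate: Callable[[str], bool],
                       max_calls: int = 3) -> Optional[str]:
    """Try tools in order until one returns a valid output or the budget ends."""
    calls = 0
    for tool in tools:
        if calls >= max_calls:
            break  # respect the call budget to limit latency
        calls += 1
        try:
            output = tool(argument)
        except Exception:
            continue  # error detected: the tool failed outright
        if validate(output):
            return output  # accept only outputs that pass the check
    return None  # signal failure instead of passing on a bad result


# Hypothetical tools used to exercise the recovery path.
def broken_tool(arg: str) -> str:
    raise RuntimeError("service unavailable")


def noisy_tool(arg: str) -> str:
    return "n/a"  # well-formed call, but unusable output


def backup_tool(arg: str) -> str:
    return str(len(arg))
```

With `validate=str.isdigit`, the call `call_with_recovery([broken_tool, noisy_tool, backup_tool], "hello", str.isdigit)` skips the first two tools and returns the backup's result; lowering `max_calls` to 2 trades that recovery for lower latency.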

Generalization and Autonomy

The aspiration for AGI underscores the importance of broad generalization, so that LLMs can not only apply tools effectively but also potentially innovate on their own. Current models still struggle to create and adapt tools by themselves, which points to the need for tools that support continuous learning and optimization.

Fine-Tuning and In-Context Learning

Fine-tuning on specialized datasets improves tool integration by letting models internalize tool-specific knowledge. One guiding framework uses datasets such as C∗, which encode instructions together with their anticipated outcomes; training on them with frequent feedback refines the model's decisions about when and how to apply tools.

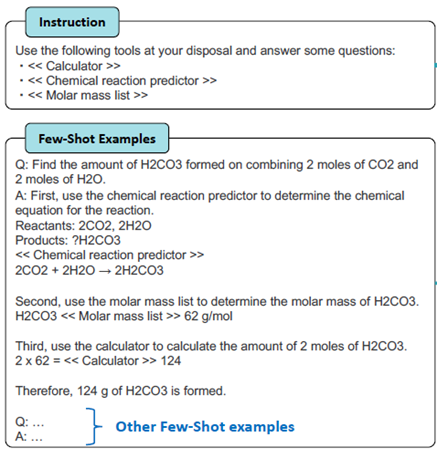

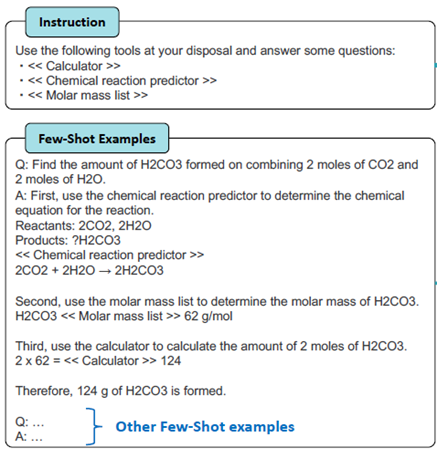

In-context learning, by contrast, relies on methods such as MultiTool-CoT, which uses chain-of-thought (CoT) prompting to demonstrate tool use at the relevant steps of a task, broadening applicability to complex problem-solving scenarios.

Figure 2: Example of MultiTool-CoT prompt.
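The general idea of interleaving chain-of-thought text with tool calls can be sketched as a loop: generate text, pause when a tool trigger appears, run the tool, append its output, and continue. This is only an illustration under stated assumptions, not the MultiTool-CoT implementation. The `<<ToolName>>` trigger format, the `scripted_model` stand-in for an LLM, and the toy `Calculator` are all hypothetical.

```python
import re
from typing import Callable, Dict, List

# Assumed trigger syntax: "<<ToolName>> argument" at the end of a chunk.
TRIGGER = re.compile(r"<<(\w+)>>\s*(.*)$")


def multiply(expr: str) -> str:
    """Toy calculator that handles only 'a * b' with integers."""
    a, b = expr.split("*")
    return str(int(a) * int(b))


TOOLS: Dict[str, Callable[[str], str]] = {"Calculator": multiply}


def scripted_model(chunks: List[str]) -> Callable[[str], str]:
    """Stand-in for an LLM: returns pre-written continuations in order."""
    iterator = iter(chunks)
    return lambda transcript: next(iterator)


def run_with_tools(prompt: str,
                   generate: Callable[[str], str],
                   tools: Dict[str, Callable[[str], str]],
                   max_steps: int = 5) -> str:
    """Alternate between model generation and tool execution."""
    transcript = prompt
    for _ in range(max_steps):
        chunk = generate(transcript)
        transcript += chunk
        match = TRIGGER.search(chunk)
        if match is None:
            break  # no tool requested, so the chunk is the final answer
        name, argument = match.groups()
        # Append the tool output so the next generation step can use it.
        transcript += "\n" + tools[name](argument) + "\n"
    return transcript


model = scripted_model([
    "Each box holds 12 eggs, so 7 boxes hold <<Calculator>> 12 * 7",
    "Answer: 84",
])
transcript = run_with_tools("Q: How many eggs are in 7 boxes?\nA: ", model, TOOLS)
```

In a few-shot setting, the prompt would also contain worked demonstrations that show the trigger being used, so the model learns the format from context rather than from fine-tuning.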

Future Directions and Conclusion

This survey outlines several directions for future research, such as optimizing tool scheduling topologies and improving continuous learning. Handling the nuances of tool selection and ensuring that LLMs can self-correct and evolve with user feedback remain key challenges. Models that can autonomously create and refine tools, not just use them, would substantially expand what LLMs and autonomous systems can achieve, in line with the broader goal of robust, intelligent, and adaptable AI systems.